The field of data engineering is evolving rapidly, driven by the growing volume and complexity of the data that businesses collect. Zippia projects a 21% increase in job openings for data professionals between 2018 and 2028, which points to strong demand for skilled data engineers.

Data engineers are the backbone of this data-driven shift, building and maintaining the infrastructure that turns raw data into actionable insights. To thrive in the field, they need a blend of technical and soft skills. This guide explores the essential and advanced data engineering skills you’ll need to succeed in 2024.

What Does a Data Engineer Do?

Data engineers act as a bridge between data scientists and the vast quantities of data organizations generate. They design and build data pipelines that extract, transform, and load (ETL) data from various sources into data warehouses or data lakes. They also ensure data quality, security, and accessibility for data analysts and scientists.

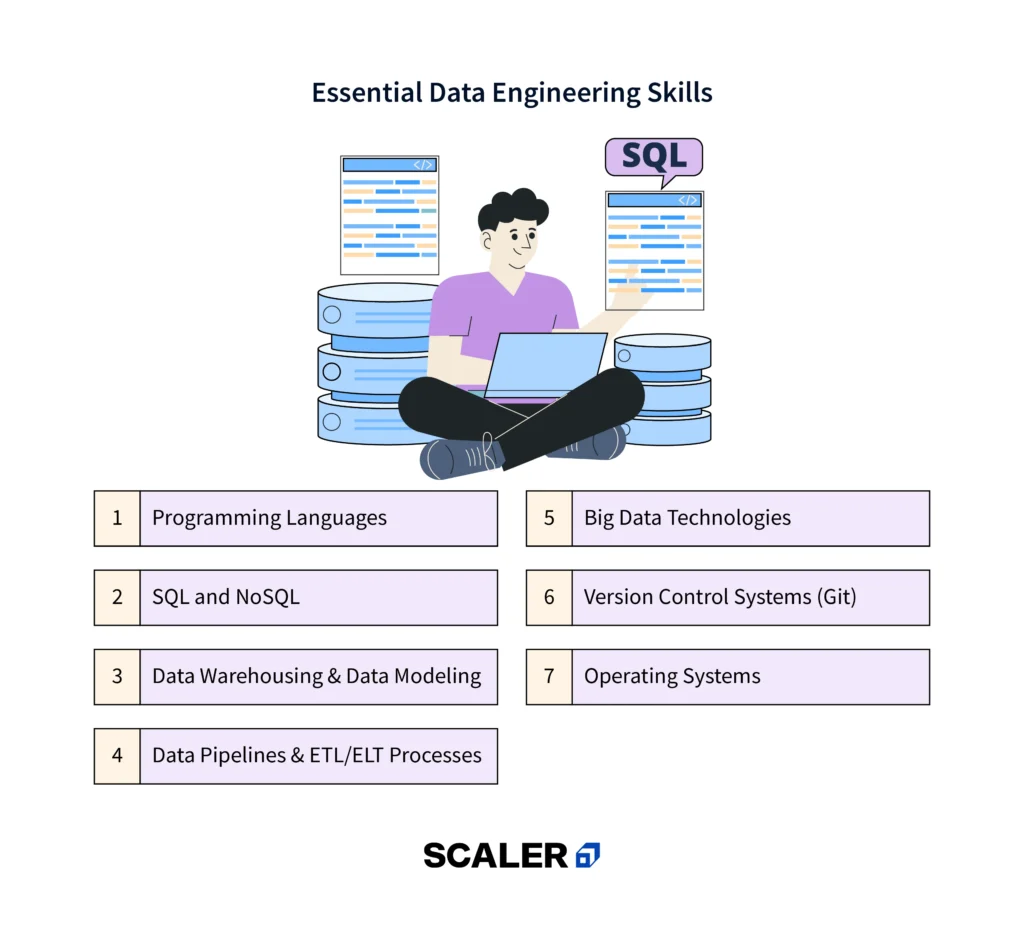

Essential Data Engineering Skills

Data engineering is a dynamic field, and success hinges on a robust skillset. This section dives into the essential data engineering skills you’ll need to build a strong foundation. Mastering these core competencies will empower you to effectively manage, process, and analyze data, forming the backbone of data-driven decision-making.

1. Programming Languages

Data engineers use programming languages to automate tasks, manipulate data, and build the pipelines that extract, transform, and load data.

Importance: Proficiency in at least one general-purpose language lets you write scripts, develop data processing tools, and interact with data programmatically, which keeps data management and analysis efficient.

Top Topics to Learn:

- Python: A versatile language essential for data manipulation, scripting, and building data pipelines.

- Java or Scala (optional): Valuable for working with big data frameworks like Apache Spark.
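
As a minimal sketch of the kind of scripting described above, the following uses only Python's standard library to parse a few records and summarize them. The sample data and the `total_by_region` function are hypothetical and chosen purely for illustration.

```python
import csv
import io
from collections import defaultdict

# Hypothetical sample data; a real script would read from a file or an API.
RAW_CSV = """region,amount
north,120.50
south,80.00
north,39.50
"""


def total_by_region(csv_text: str) -> dict[str, float]:
    """Sum the 'amount' column for each 'region'."""
    totals: dict[str, float] = defaultdict(float)
    for row in csv.DictReader(io.StringIO(csv_text)):
        totals[row["region"]] += float(row["amount"])
    return dict(totals)


summary = total_by_region(RAW_CSV)
print(summary)
```

Keeping the logic in a small function, rather than at the top level of the script, makes it easier to test and to reuse later inside a pipeline.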

2. SQL and NoSQL

SQL (Structured Query Language) is used to query relational databases, while NoSQL databases store semi-structured or unstructured data under flexible schemas and are often built to scale horizontally.

Importance: Understanding both SQL and NoSQL equips you to work with a range of data sources. SQL is essential for relational data, while NoSQL suits very large datasets and schema-less applications.

Top Topics to Learn:

- SQL fundamentals (SELECT, INSERT, UPDATE, DELETE)

- Writing complex SQL queries (joins, subqueries)

- NoSQL database concepts (document stores, key-value stores)

- Popular NoSQL databases (MongoDB, Cassandra)
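
To make the contrast concrete, here is a small sketch that runs a join with an aggregate in SQLite (via Python's built-in `sqlite3` module) and then shows how the same information might look as a single nested document. The table names, columns, and sample rows are assumptions made for this example, and the document is a plain Python dictionary rather than a record in any particular NoSQL database.

```python
import json
import sqlite3


def customer_totals() -> list[tuple[str, float]]:
    """Relational view: join customers to orders and total the amounts."""
    conn = sqlite3.connect(":memory:")
    conn.executescript(
        """
        CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT);
        CREATE TABLE orders (
            id INTEGER PRIMARY KEY,
            customer_id INTEGER REFERENCES customers(id),
            amount REAL
        );
        INSERT INTO customers VALUES (1, 'Ada'), (2, 'Grace');
        INSERT INTO orders VALUES (1, 1, 50.0), (2, 1, 25.0), (3, 2, 10.0);
        """
    )
    rows = conn.execute(
        """
        SELECT c.name, SUM(o.amount) AS total
        FROM customers AS c
        JOIN orders AS o ON o.customer_id = c.id
        GROUP BY c.name
        ORDER BY c.name
        """
    ).fetchall()
    conn.close()
    return rows


# Document view: one self-contained record, as a document store might hold it.
customer_document = {
    "name": "Ada",
    "orders": [{"id": 1, "amount": 50.0}, {"id": 2, "amount": 25.0}],
}

print(customer_totals())
print(json.dumps(customer_document, indent=2))
```

The relational version keeps customers and orders in separate tables and combines them at query time, while the document version embeds the orders inside the customer record, trading flexible querying for simpler reads of a single entity.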

3. Data Warehousing & Data Modeling

Data warehousing involves designing and managing data storage solutions for efficient data analysis. Data modeling focuses on representing data structures effectively.

Importance: Data warehouses provide centralized storage for data analysis, while data modeling ensures data is organized and understandable for users.

Top Topics to Learn:

- Data warehousing concepts (dimensional modeling)

- Data modeling techniques (entity relationship diagrams)

- Data warehouse design methodologies (e.g., the Kimball approach)
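
Dimensional modeling is easier to picture with a tiny star schema. The sketch below, which assumes hypothetical `dim_product`, `dim_date`, and `fact_sales` tables, builds one in an in-memory SQLite database and answers a typical analytical question by joining the fact table to a dimension.

```python
import sqlite3

# Hypothetical star schema: one fact table surrounded by dimension tables.
STAR_SCHEMA = """
CREATE TABLE dim_product (
    product_key INTEGER PRIMARY KEY,
    name TEXT,
    category TEXT
);
CREATE TABLE dim_date (
    date_key INTEGER PRIMARY KEY,
    iso_date TEXT,
    month TEXT
);
CREATE TABLE fact_sales (
    product_key INTEGER REFERENCES dim_product(product_key),
    date_key INTEGER REFERENCES dim_date(date_key),
    quantity INTEGER,
    revenue REAL
);
"""


def build_demo_warehouse() -> sqlite3.Connection:
    """Create the schema and load a few illustrative rows."""
    conn = sqlite3.connect(":memory:")
    conn.executescript(STAR_SCHEMA)
    conn.executemany(
        "INSERT INTO dim_product VALUES (?, ?, ?)",
        [(1, "Laptop", "Hardware"), (2, "IDE license", "Software")],
    )
    conn.executemany(
        "INSERT INTO dim_date VALUES (?, ?, ?)",
        [(20240101, "2024-01-01", "2024-01")],
    )
    conn.executemany(
        "INSERT INTO fact_sales VALUES (?, ?, ?, ?)",
        [(1, 20240101, 2, 2000.0), (2, 20240101, 5, 500.0), (1, 20240101, 1, 1000.0)],
    )
    return conn


def revenue_by_category(conn: sqlite3.Connection) -> list[tuple[str, float]]:
    """Aggregate the fact table, grouped by a dimension attribute."""
    return conn.execute(
        """
        SELECT p.category, SUM(f.revenue)
        FROM fact_sales AS f
        JOIN dim_product AS p ON p.product_key = f.product_key
        GROUP BY p.category
        ORDER BY p.category
        """
    ).fetchall()
```

Calling `revenue_by_category(build_demo_warehouse())` returns one row per product category. The fact table holds the measurements (quantity, revenue), and the dimensions hold the descriptive attributes used to slice them.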

4. Data Pipelines & ETL/ELT Processes

Data pipelines automate the process of extracting data from various sources, transforming it into a usable format, and loading it into a target system (data warehouse, data lake). ETL (Extract, Transform, Load) and ELT (Extract, Load, Transform) are common data processing approaches.

Importance: Building and maintaining data pipelines ensures efficient data flow and data availability for analytics. Understanding ETL/ELT processes is crucial for data transformation.

Top Topics to Learn:

- Data pipeline design and orchestration tools (Airflow, Luigi)

- ETL/ELT processing concepts and best practices

- Data transformation techniques (data cleaning, formatting)
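
The extract, transform, and load stages can be sketched in a few lines of standard-library Python. This is an illustration under stated assumptions, not a production pipeline: the CSV content, the `users` table, and the cleaning rules (trim whitespace, uppercase the country code, drop rows with no signup date) are all hypothetical.

```python
import csv
import io
import sqlite3

# Hypothetical raw input with untidy whitespace, mixed case, and a missing value.
RAW = "user_id,signup_date,country\n1, 2024-01-05 ,us\n2,,de\n3,2024-02-10,FR\n"


def extract(text: str) -> list[dict[str, str]]:
    """Extract: parse the source into a list of row dictionaries."""
    return list(csv.DictReader(io.StringIO(text)))


def transform(rows: list[dict[str, str]]) -> list[tuple[int, str, str]]:
    """Transform: clean values, cast types, and drop incomplete rows."""
    cleaned = []
    for row in rows:
        signup = row["signup_date"].strip()
        if not signup:
            continue  # skip rows that are missing a required field
        cleaned.append((int(row["user_id"]), signup, row["country"].strip().upper()))
    return cleaned


def load(rows: list[tuple[int, str, str]], conn: sqlite3.Connection) -> int:
    """Load: write the cleaned rows to the target table and return the row count."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS users (user_id INTEGER, signup_date TEXT, country TEXT)"
    )
    conn.executemany("INSERT INTO users VALUES (?, ?, ?)", rows)
    conn.commit()
    return len(rows)
```

A run looks like `load(transform(extract(RAW)), sqlite3.connect(":memory:"))`. In an ELT variant, the raw rows would be loaded first and the cleaning would happen afterwards inside the target system, typically in SQL.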

5. Big Data Technologies

Big data technologies like Hadoop and Spark are designed to handle and process massive datasets that traditional data processing tools struggle with.

Importance: As data volumes grow, familiarity with big data frameworks becomes increasingly valuable for data engineers.

Top Topics to Learn:

- Big data concepts (distributed processing, scalability)

- Apache Hadoop ecosystem (HDFS, MapReduce, YARN)

- Apache Spark for large-scale data processing
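
The MapReduce model mentioned above can be illustrated on a single machine. The sketch below imitates the map, shuffle, and reduce phases in plain Python for a word count; it is a conceptual model only, since real frameworks distribute these phases across many nodes, and the function names are chosen for this example.

```python
from collections import defaultdict


def map_phase(line: str) -> list[tuple[str, int]]:
    """Map: emit a (word, 1) pair for every word in one input line."""
    return [(word.lower(), 1) for word in line.split()]


def shuffle(pairs: list[tuple[str, int]]) -> dict[str, list[int]]:
    """Shuffle: group all emitted values by key."""
    grouped: dict[str, list[int]] = defaultdict(list)
    for key, value in pairs:
        grouped[key].append(value)
    return grouped


def reduce_phase(grouped: dict[str, list[int]]) -> dict[str, int]:
    """Reduce: combine the values for each key into a single result."""
    return {key: sum(values) for key, values in grouped.items()}


def word_count(lines: list[str]) -> dict[str, int]:
    """Run the three phases in sequence over a list of input lines."""
    pairs = [pair for line in lines for pair in map_phase(line)]
    return reduce_phase(shuffle(pairs))
```

Because each line is mapped independently and each key is reduced independently, both phases can run in parallel, which is the property that lets this pattern scale out.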

6. Version Control Systems (Git)

Git is a distributed version control system that allows data engineers to track code changes, collaborate effectively, and revert to previous versions if necessary.

Importance: Git ensures data engineering projects are well-organized, traceable, and recoverable in case of errors. It also promotes collaboration by enabling multiple engineers to work on the same project simultaneously.

Top Topics to Learn:

- Git fundamentals (adding, committing, pushing, pulling)

- Branching and merging strategies

- Working with remote repositories (GitHub, GitLab)

- Utilizing Git features for collaboration (pull requests, code reviews)

7. Operating Systems

A strong understanding of operating systems like Linux is essential for working with servers and data infrastructure commonly used in data engineering.

Importance: Proficiency in Linux allows data engineers to navigate servers, manage data storage, and interact with the underlying infrastructure that supports data pipelines and data storage systems.

Top Topics to Learn:

- Linux command line basics (navigation, file manipulation)

- User and permission management in Linux

- Working with shell scripting (optional) for automation

Advanced Data Engineering Skills

As you progress in your data engineering journey, these advanced skills will enhance your ability to handle complex data challenges and architect robust data systems:

8. Stream Processing Frameworks

Stream processing tools such as Apache Kafka (an event streaming platform, with Kafka Streams for processing) and Apache Flink are designed to handle continuous streams of data in real time. This contrasts with traditional batch processing, which works on bounded datasets collected ahead of time.

Importance: As data generation becomes increasingly real-time, mastering stream processing frameworks empowers data engineers to analyze and react to data as it arrives, enabling real-time decision-making and applications.

Top Topics to Learn:

- Concepts of stream processing and real-time data pipelines

- Popular streaming tools (Apache Kafka with Kafka Streams, Apache Flink)

- Building real-time data pipelines and applications
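
One core stream processing idea is windowing: grouping an unbounded stream into fixed time buckets so that results can be emitted as data arrives. The following sketch implements a tumbling window count with a Python generator. It assumes events arrive in timestamp order and ignores late data, which real frameworks handle with mechanisms such as watermarks; the event shape `(timestamp, key)` is hypothetical.

```python
from collections import Counter
from typing import Iterable, Iterator


def tumbling_window_counts(
    events: Iterable[tuple[int, str]], window_seconds: int
) -> Iterator[tuple[int, dict[str, int]]]:
    """Yield (window_start, counts_per_key) as each window closes.

    Assumes events are (timestamp_in_seconds, key) pairs in timestamp order.
    """
    current_start = None
    counts: Counter = Counter()
    for timestamp, key in events:
        start = timestamp - timestamp % window_seconds
        if current_start is None:
            current_start = start
        if start != current_start:
            # The event belongs to a later window, so emit the finished one.
            yield current_start, dict(counts)
            current_start, counts = start, Counter()
        counts[key] += 1
    if current_start is not None:
        yield current_start, dict(counts)  # flush the final, still-open window


events = [(0, "click"), (3, "view"), (9, "click"), (10, "click"), (25, "view")]
for window_start, window_counts in tumbling_window_counts(events, 10):
    print(window_start, window_counts)
```

Because the function is a generator, each window's result is produced as soon as the next window begins, without waiting for the whole stream to end.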

9. Data Version Control & Lineage

Data version control, similar to code version control with Git, tracks changes made to data over time. Data lineage traces the origin and flow of data throughout the data pipeline, ensuring data traceability and simplifying debugging.

Importance: Data version control and lineage are crucial for maintaining data quality and supporting data security, especially in complex data pipelines. They allow you to revert to previous data states if necessary and to understand how data has been transformed at each processing stage.

Top Topics to Learn:

- Data version control tools and techniques

- Data lineage concepts and implementation strategies

- Integrating data version control and lineage into data pipelines
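
A simple way to see both ideas together is to derive a version identifier from a dataset's content and to record which version went into and came out of each pipeline step. The sketch below is a hand-rolled illustration, not the approach of any specific tool; `dataset_version`, `run_step`, and the lineage record fields are hypothetical names.

```python
import hashlib
import json
from typing import Callable

Rows = list[dict]


def dataset_version(rows: Rows) -> str:
    """Return a short content hash: identical rows always give the same version."""
    canonical = json.dumps(rows, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()[:12]


def run_step(name: str, func: Callable[[Rows], Rows], rows: Rows, lineage: list[dict]) -> Rows:
    """Run one transformation and append a lineage record describing it."""
    output = func(rows)
    lineage.append(
        {
            "step": name,
            "input_version": dataset_version(rows),
            "output_version": dataset_version(output),
        }
    )
    return output


def strip_names(rows: Rows) -> Rows:
    """Example transformation: trim whitespace from the 'name' field."""
    return [{**row, "name": row["name"].strip()} for row in rows]


lineage: list[dict] = []
raw = [{"id": 1, "name": " Ada "}]
cleaned = run_step("strip_names", strip_names, raw, lineage)
print(lineage)
```

Each lineage record links an input version to an output version, so chaining the records reconstructs how a final dataset was derived and which upstream version it depends on.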

Data Modeling and Architecture

Beyond the core technical skills, a data engineer’s ability to design and architect effective data systems is essential. This section explores crucial aspects of data modeling and architecture:

10. Data Quality and Governance

Data quality refers to the accuracy, completeness, and consistency of data. Data governance establishes policies and processes to ensure data quality and manage data access.

Importance: Data quality is paramount for reliable data analysis and decision-making. Data governance ensures data security and compliance with regulations.

Top Topics to Learn:

- Data quality checks and data cleansing techniques

- Data governance frameworks and best practices

- Implementing data quality checks into data pipelines
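
Quality checks of this kind are often small functions that a pipeline runs before loading data. As a minimal sketch, the function below tests for completeness (required fields present) and uniqueness (no duplicate keys). The function name, the rules, and the sample rows are assumptions for illustration.

```python
def check_quality(rows: list[dict], required: list[str], unique_key: str) -> list[str]:
    """Return a list of human-readable issues; an empty list means all checks passed."""
    issues = []
    seen = set()
    for index, row in enumerate(rows):
        # Completeness: every required field must be present and non-empty.
        for field in required:
            if row.get(field) in (None, ""):
                issues.append(f"row {index}: missing {field}")
        # Uniqueness: the key must not have appeared in an earlier row.
        key = row.get(unique_key)
        if key in seen:
            issues.append(f"row {index}: duplicate {unique_key}={key}")
        seen.add(key)
    return issues


rows = [
    {"id": 1, "email": "a@example.com"},
    {"id": 1, "email": ""},
    {"id": 2, "email": "b@example.com"},
]
print(check_quality(rows, required=["id", "email"], unique_key="id"))
```

A pipeline could fail the run, quarantine the offending rows, or only log a warning when the returned list is non-empty, depending on how strict the governance policy is.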

11. Data Visualization Libraries

Data visualization tools, such as the Python library Matplotlib or the BI platform Tableau, allow data engineers to create informative charts, graphs, and dashboards that communicate data insights effectively.

Importance: Clear and concise data visualization is crucial for presenting data analysis findings to both technical and non-technical audiences.

Top Topics to Learn:

- Popular data visualization tools (Matplotlib, Tableau)

- Data visualization best practices and design principles

- Creating interactive dashboards and reports for data communication

12. Data APIs & Data Streaming

Data APIs (Application Programming Interfaces) provide a controlled way for other applications to access and interact with data stored in data warehouses or data lakes. Data streaming refers to the continuous flow of real-time data that needs to be integrated and processed.

Importance: Data APIs enable data sharing and collaboration across various applications within an organization. Data streaming is essential for handling and analyzing real-time data feeds.

Top Topics to Learn:

- Designing and building data APIs

- Data streaming concepts and technologies (Apache Kafka)

- Integrating data streaming pipelines with data platforms
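
To illustrate what a data API involves at its simplest, here is a read-only JSON endpoint built with Python's standard `http.server` module. It is a sketch under several assumptions: the in-memory `DATASETS` dictionary stands in for a warehouse query, the routing is reduced to a single lookup, and there is no authentication, which any real data API would need.

```python
import json
from http.server import BaseHTTPRequestHandler, HTTPServer

# Hypothetical in-memory data standing in for a warehouse or data lake query.
DATASETS = {"orders": [{"id": 1, "amount": 50.0}]}


def lookup(path: str) -> tuple[int, dict]:
    """Map a URL path such as '/orders' to an HTTP status and a JSON body."""
    name = path.strip("/")
    if name in DATASETS:
        return 200, {"data": DATASETS[name]}
    return 404, {"error": f"unknown dataset: {name}"}


class DataHandler(BaseHTTPRequestHandler):
    """Serve GET requests by delegating to lookup()."""

    def do_GET(self) -> None:
        status, body = lookup(self.path)
        payload = json.dumps(body).encode("utf-8")
        self.send_response(status)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(payload)))
        self.end_headers()
        self.wfile.write(payload)


def serve(port: int = 8000) -> None:
    """Start the server on localhost; this call blocks until interrupted."""
    HTTPServer(("127.0.0.1", port), DataHandler).serve_forever()
```

Calling `serve()` and then requesting `http://127.0.0.1:8000/orders` would return the orders as JSON. Keeping the routing in the plain `lookup` function means it can be tested without starting a server.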

13. Cloud Computing Fundamentals

Cloud computing platforms like AWS, Azure, and GCP offer scalable and cost-effective solutions for deploying and managing data infrastructure. Understanding cloud fundamentals equips you to leverage these platforms effectively.

Importance: Cloud adoption is prevalent in data engineering. Familiarity with cloud platforms allows you to build and manage data pipelines in a scalable and elastic manner.

Top Topics to Learn:

- Cloud computing concepts (IaaS, PaaS, SaaS)

- Popular cloud platforms (AWS, Azure, GCP)

- Deploying and managing data infrastructure on cloud platforms

14. Machine Learning & AI Fundamentals

While not core data engineering skills, understanding machine learning (ML) and artificial intelligence (AI) fundamentals can be advantageous. Data engineers are increasingly involved in building data pipelines for AI and ML applications.

Importance: Familiarity with ML and AI concepts allows data engineers to design data pipelines that can be used to train and deploy machine learning models.

Top Topics to Learn:

- Machine learning algorithms and concepts (classification, regression)

- Introduction to artificial intelligence

- Building data pipelines for machine learning applications (optional)
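
A typical task in a pipeline that feeds a model is splitting data into training and test sets and scaling features using statistics from the training set only, so that no information leaks from the test data. The sketch below shows that pattern in plain Python; the function names, the fixed seed, and the sample values are assumptions for illustration.

```python
import random


def train_test_split(
    rows: list[float], test_fraction: float = 0.25, seed: int = 42
) -> tuple[list[float], list[float]]:
    """Shuffle reproducibly, then split into (train, test)."""
    shuffled = rows[:]
    random.Random(seed).shuffle(shuffled)
    cut = int(len(shuffled) * (1 - test_fraction))
    return shuffled[:cut], shuffled[cut:]


def fit_min_max(values: list[float]) -> tuple[float, float]:
    """Learn the scaling parameters from the training data only."""
    return min(values), max(values)


def apply_min_max(values: list[float], low: float, high: float) -> list[float]:
    """Scale values using previously fitted parameters."""
    span = high - low
    return [0.0 if span == 0 else (value - low) / span for value in values]


feature = [float(n) for n in range(8)]
train, test = train_test_split(feature)
low, high = fit_min_max(train)
train_scaled = apply_min_max(train, low, high)
test_scaled = apply_min_max(test, low, high)  # reuses the training parameters
```

Test values can fall outside the range 0 to 1 after scaling, because the parameters come from the training set; that is expected and is the price of keeping the test data unseen.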

Soft Skills for Data Engineers

Data engineering isn’t just about technical expertise. Beyond the code and infrastructure, successful data engineers possess strong soft skills that enable them to collaborate effectively, solve complex problems, and communicate their findings clearly. Here are some key soft skills to cultivate:

15. Communication

The ability to bridge the gap between technical data concepts and a wider audience, both technical and non-technical, is essential.

16. Problem-Solving & Critical Thinking

Data engineering is full of challenges. Analytical thinking and the ability to break down complex problems into manageable steps are crucial.

17. Teamwork & Collaboration

Data engineers rarely work in isolation. Collaboration with data scientists, analysts, and software developers is essential for successful project completion.

18. Time Management & Organization

Data engineers frequently handle several projects with different deadlines. Strong time management and organizational skills help them prioritize projects, meet deadlines, and manage their workload.

19. Attention to Detail & Accuracy

Data engineers work with large amounts of data, and even minor errors can have serious effects. A strong eye for detail and a dedication to accuracy are essential for maintaining data integrity and achieving effective project results.

20. Business Acumen

Understanding business goals and how data engineering contributes to them enables data engineers to make more informed decisions and prioritize their work more effectively.

How to Develop Data Engineering Skills?

Equipping yourself with the necessary data engineering skills requires dedication and continuous learning. Here are some effective approaches:

- Formal Education: Consider pursuing a bachelor’s degree in computer science, information technology, or a related field. Data engineering bootcamps or specialized master’s programs can also provide focused training.

- Online Courses and Resources: Numerous online platforms offer comprehensive data engineering courses, tutorials, and certifications. Explore platforms like Scaler, Udemy, and edX, as well as the training sites of cloud providers (AWS, Azure, GCP), for relevant courses.

- Open-Source Projects: Contributing to open-source data engineering projects allows you to gain practical experience, learn from experienced developers, and build your portfolio.

- Personal Projects: Build your own data-driven projects to solidify your learning and showcase your skills. This could involve web scraping a dataset, building a data pipeline for personal data analysis, or creating a data visualization dashboard.

How to Become a Data Engineer in 2024?

The path to becoming a data engineer involves a combination of education, skill development, and practical experience:

- Build a Strong Foundation: Start by acquiring a solid understanding of core programming languages (Python), SQL and NoSQL databases, and data warehousing concepts.

- Expand Your Skillset: Delve deeper into advanced data engineering areas like big data technologies, data pipelines, and cloud computing fundamentals.

- Sharpen Your Soft Skills: Develop strong communication, problem-solving, and teamwork skills, all crucial for collaboration in data-driven projects.

- Gain Practical Experience: Look for opportunities to gain hands-on experience through internships, freelance projects, or contributing to open-source projects.

- Build Your Portfolio: Showcase your skills by creating a portfolio that highlights your data engineering projects, personal data analysis work, and any certifications you’ve earned.

Remember, the data engineering landscape is constantly evolving. Continuous learning and staying updated with the latest trends and technologies will be essential for long-term success in this exciting field.

Conclusion

Data engineering plays a pivotal role in unlocking the power of data. By mastering the essential and advanced skills outlined in this guide, you can position yourself for a rewarding career in this dynamic and in-demand field. Tackle the challenges, hone your skills, and start your journey toward becoming a data engineering rockstar.

Frequently Asked Questions

Is an ETL developer the same as a data engineer?

There’s significant overlap, but data engineers have a broader scope. ETL developers focus on building and maintaining data pipelines using ETL (Extract, Transform, Load) processes. Data engineering encompasses ETL development but also covers data warehousing, data modeling, and working with big data technologies.

What are three key job duties of big data engineers?

- Designing and building big data pipelines: This involves extracting data from various sources, transforming it for analysis, and loading it into storage systems like data lakes.

- Managing and maintaining big data infrastructure: Big data engineers ensure big data frameworks like Hadoop and Spark function smoothly and efficiently.

- Working with big data tools and technologies: They utilize tools like Apache Pig, Hive, and Spark SQL to analyze and process massive datasets.

What is the role of a Data Engineer in SQL?

Data engineers leverage SQL for various tasks:

- Writing queries to extract data from relational databases for data pipelines or analysis.

- Designing and managing data warehouse schemas using SQL to ensure efficient data storage and retrieval.

- Working with data analysts to understand data requirements and translate them into SQL queries for data exploration.

What qualifications or educational background are required to become a data engineer?

There’s no single path. A bachelor’s degree in computer science, information technology, or a related field is a common starting point. However, some data engineers come from non-CS backgrounds with relevant experience and strong technical skills. Certifications and bootcamps can also provide valuable training.

Is a data engineer the same as a data scientist?

No, their roles differ:

- Data engineers: Focus on building and maintaining the infrastructure that processes and stores data. They ensure data is clean, accessible, and usable for analysis.

- Data scientists: Utilize data extracted and prepared by data engineers to build models, conduct statistical analysis, and extract insights to inform business decisions.

Do I need a degree in computer science to become a data engineer?

Not necessarily. While a CS degree provides a strong foundation, it’s not mandatory. Individuals with strong programming abilities, experience with data analysis tools, and a passion for learning can succeed in this field through bootcamps, online courses, and practical experience.

How can I gain experience with big data technologies if I’m new to the field?

- Start with online resources: Numerous online tutorials, courses, and sandbox environments (e.g., Cloudera QuickStart) allow you to experiment with big data technologies like Hadoop and Spark.

- Contribute to open-source projects: Look for open-source projects working with big data frameworks. This provides hands-on experience and the chance to learn from experienced developers.

- Personal projects: Consider building your own big data project using publicly available datasets. This allows you to explore big data tools and showcase your skills in your portfolio.