AI Accountability

Overview

As the use of artificial intelligence (AI) grows, so does the need for accountability. AI is used in applications ranging from autonomous vehicles to financial trading algorithms, and its decisions can significantly affect individuals and society as a whole. Accountability matters because it helps ensure that AI is used responsibly and ethically.

Introduction

AI has become an integral part of modern society. Because it can process vast amounts of data and make decisions based on that data, AI is now used in industries ranging from healthcare to finance. This use also raises concerns about accountability. When AI makes decisions that affect individuals or society as a whole, those decisions must be fair, transparent, and open to scrutiny.

What is AI Accountability?

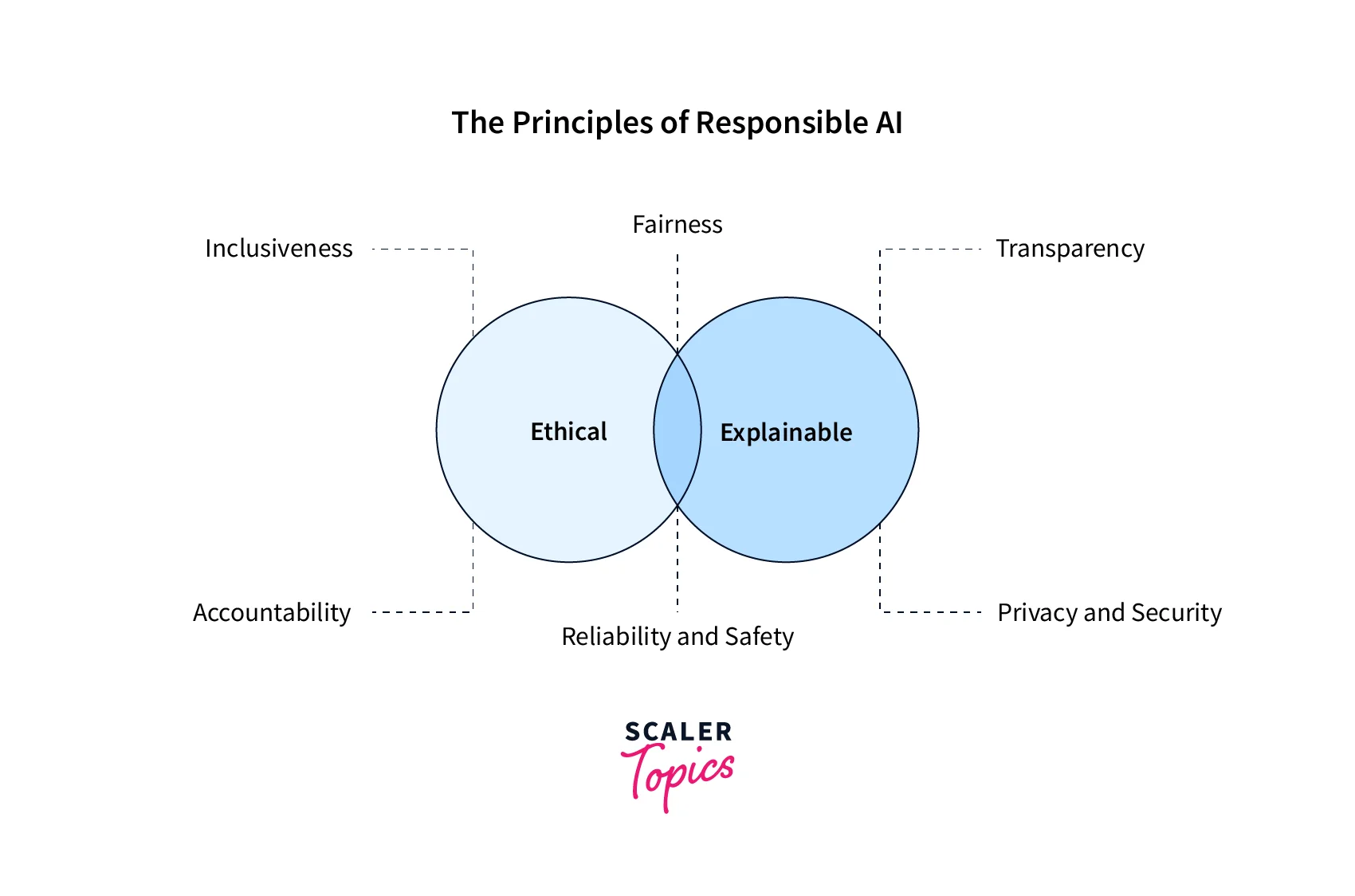

AI accountability refers to the responsibility that individuals and organizations have for the actions and decisions of AI systems. This includes ensuring that AI is used in a way that is transparent, ethical, and in line with legal and regulatory requirements. It also includes ensuring that individuals are able to understand and challenge decisions made by AI systems.
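One practical way to make automated decisions understandable and open to challenge is to keep a record of each one. The Python sketch below is illustrative only. The `DecisionRecord` fields, the `log_decision` and `contest` helpers, and the in-memory list standing in for durable storage are all hypothetical and are not taken from any particular standard or product.

```python
import json
from dataclasses import asdict, dataclass, field
from datetime import datetime, timezone


@dataclass
class DecisionRecord:
    """Hypothetical audit entry describing one automated decision."""
    model_id: str    # which model and version produced the decision
    subject_id: str  # the person or case the decision affects
    inputs: dict     # the features the model actually received
    outcome: str     # the decision that was returned
    reasons: list    # human-readable factors behind the outcome
    timestamp: str = field(
        default_factory=lambda: datetime.now(timezone.utc).isoformat()
    )
    contested: bool = False  # set to True when the decision is challenged


def log_decision(record, log):
    """Append the record to the log as one JSON line."""
    log.append(json.dumps(asdict(record), sort_keys=True))


def contest(log, subject_id):
    """Mark every logged decision about subject_id as contested.

    Returns the affected entries so a human reviewer can examine them.
    """
    flagged = []
    for i, line in enumerate(log):
        entry = json.loads(line)
        if entry["subject_id"] == subject_id:
            entry["contested"] = True
            log[i] = json.dumps(entry, sort_keys=True)
            flagged.append(entry)
    return flagged


# Example: log a credit decision, then record that the applicant disputes it.
audit_log = []
log_decision(
    DecisionRecord("credit-model-v3", "applicant-17", {"income": 42000},
                   "declined", ["income below threshold"]),
    audit_log,
)
for entry in contest(audit_log, "applicant-17"):
    print(entry["outcome"], entry["reasons"])
```

Recording the inputs and reasons alongside the outcome is what lets a reviewer reconstruct why a decision was made. A production system would also need tamper-resistant storage, retention rules, and access controls, none of which this sketch attempts.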

Challenges in AI Accountability

Some of the major challenges in ensuring AI accountability include:

- The "black box" problem, where the decision-making processes of AI systems are opaque and difficult to understand.

- The potential for AI systems to perpetuate and amplify biases, particularly if they are trained on biased data.

- The lack of clear regulatory frameworks and standards for AI accountability.

- The rapidly evolving nature of AI, which can make it difficult to anticipate all of the potential uses and impacts of AI systems.

- The potential for individuals and organizations to circumvent regulations and standards, or to use AI systems in ways that are not transparent or ethical.
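The black box problem can be partly addressed with model-agnostic explanation techniques. One of the simplest is permutation feature importance: shuffle one input feature at a time and measure how much the model's accuracy drops. The sketch below assumes the model is any Python callable that maps a feature row to a label. The function names and the toy data are hypothetical, and scikit-learn provides a maintained implementation as `sklearn.inspection.permutation_importance`.

```python
import random


def accuracy(model, X, y):
    """Fraction of rows where the model's prediction matches the label."""
    return sum(model(row) == label for row, label in zip(X, y)) / len(y)


def permutation_importance(model, X, y, n_repeats=10, seed=0):
    """Average drop in accuracy when each feature column is shuffled.

    A large drop means the model relies heavily on that feature;
    a drop near zero means the feature barely affects its decisions.
    """
    rng = random.Random(seed)
    baseline = accuracy(model, X, y)
    importances = []
    for j in range(len(X[0])):
        drops = []
        for _ in range(n_repeats):
            column = [row[j] for row in X]
            rng.shuffle(column)
            # Rebuild each row with feature j replaced by its shuffled value.
            X_perm = [row[:j] + [v] + row[j + 1:] for row, v in zip(X, column)]
            drops.append(baseline - accuracy(model, X_perm, y))
        importances.append(sum(drops) / n_repeats)
    return importances


def black_box(row):
    """Toy opaque model that, unknown to its users, looks only at feature 0."""
    return int(row[0] > 0.5)


data_rng = random.Random(42)
X = [[data_rng.random(), data_rng.random()] for _ in range(200)]
y = [black_box(row) for row in X]
print(permutation_importance(black_box, X, y))
```

Shuffling feature 0 sharply reduces accuracy while shuffling feature 1 changes nothing, which exposes what the "black box" actually depends on. Permutation importance shows which inputs a model relies on, not why, so it complements rather than replaces other transparency measures.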

Creating AI Accountability

Building accountability into AI systems is a crucial task that requires attention to several areas, each of which helps keep those systems ethical and transparent.

- The first area of focus is functional performance. To produce accurate, fair, and legal decisions, all components of an AI system, including machine learning and natural language processing, must function properly. For example, a credit card fraud detection system must be tuned finely enough to block unauthorized use of customers' cards without declining legitimate purchases. A system that produces too many false positives (legitimate purchases declined) or false negatives (fraud missed) can erode trust and confidence in the system.

- The second area of focus is data. AI systems require a high volume of high-quality data to function effectively. Organizations need to ensure that the data used to train and operate AI systems is clean, unbiased, and protected against unauthorized access and use.

- The third area of focus is preventing unintended biases. Data and algorithms must be designed to avoid producing discriminatory results. For example, if a hiring model is trained on resumes submitted mostly by men, it may learn to favor male candidates, leading to biased decisions. Organizations must be vigilant to prevent unintended biases in their AI systems.

- The fourth area of focus is safeguarding against unintended uses. AI systems are designed for specific use cases, and transferring them to different use cases can lead to incorrect results. Organizations must ensure that their AI systems are used only for their intended purpose.
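One common way to check for the kind of bias described in the hiring example is to compare selection rates across groups. The sketch below computes each group's selection rate and the ratio of the lowest rate to the highest. US employment guidelines treat a ratio below 0.8 (the "four-fifths rule") as a rough signal of possible adverse impact. The function names and sample data are hypothetical, and a single ratio is a screening heuristic, not proof that a model is fair or unfair.

```python
from collections import defaultdict


def selection_rates(decisions, groups):
    """Fraction of positive decisions (1 = selected) within each group."""
    selected = defaultdict(int)
    total = defaultdict(int)
    for decision, group in zip(decisions, groups):
        total[group] += 1
        selected[group] += decision
    return {g: selected[g] / total[g] for g in total}


def disparate_impact_ratio(decisions, groups):
    """Lowest group selection rate divided by the highest."""
    rates = selection_rates(decisions, groups)
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # nobody was selected, so there is no disparity in rates
    return min(rates.values()) / highest


# Hypothetical screening results: 1 = candidate advanced to interview.
decisions = [1, 1, 1, 0, 1, 0, 0, 0]
groups = ["men"] * 4 + ["women"] * 4

print(selection_rates(decisions, groups))  # {'men': 0.75, 'women': 0.25}
ratio = disparate_impact_ratio(decisions, groups)
print(f"{ratio:.2f}", "review needed" if ratio < 0.8 else "ok")  # 0.33 review needed
```

Running a check like this before deployment, and again as new decisions accumulate, gives an organization an early, concrete signal that a model may be reproducing bias from its training data.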

Organizations that are attentive to all of these areas are more likely to have AI-based systems that produce accurate and fair results. Such organizations are also more likely to detect early when their systems are not working well, which gives them a higher level of accountability than less attentive organizations.
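Detecting problems early usually means tracking error rates on decisions whose true outcomes later become known. Continuing the fraud detection example, the sketch below computes the false-positive rate (legitimate purchases flagged as fraud) and the false-negative rate (fraud that slipped through) for a labeled batch and raises an alert when either exceeds a limit. The thresholds `max_fpr` and `max_fnr` are hypothetical placeholders; real limits depend on the costs an organization assigns to each kind of error.

```python
def error_rates(predicted, actual):
    """False-positive and false-negative rates for binary labels (1 = fraud)."""
    fp = sum(p == 1 and a == 0 for p, a in zip(predicted, actual))
    fn = sum(p == 0 and a == 1 for p, a in zip(predicted, actual))
    negatives = sum(a == 0 for a in actual)
    positives = sum(a == 1 for a in actual)
    fpr = fp / negatives if negatives else 0.0
    fnr = fn / positives if positives else 0.0
    return fpr, fnr


def check_batch(predicted, actual, max_fpr=0.02, max_fnr=0.10):
    """Return alert messages for any error rate above its (hypothetical) limit."""
    fpr, fnr = error_rates(predicted, actual)
    alerts = []
    if fpr > max_fpr:
        alerts.append(f"false-positive rate {fpr:.1%} exceeds {max_fpr:.1%}")
    if fnr > max_fnr:
        alerts.append(f"false-negative rate {fnr:.1%} exceeds {max_fnr:.1%}")
    return alerts


predicted = [1, 0, 0, 1, 0, 0, 0, 0, 1, 0]  # model output, 1 = flagged as fraud
actual = [1, 0, 0, 0, 0, 0, 0, 0, 0, 1]  # outcome confirmed later
for alert in check_batch(predicted, actual):
    print(alert)
```

Tracking the two rates separately matters because they fail different people: false positives inconvenience legitimate customers, while false negatives leave fraud victims unprotected.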

No Guarantee of AI Accountability

Even with ethical AI practices and accountability frameworks in place, there is no guarantee that AI systems will always produce accurate and fair results. There are several reasons for this:

- Firstly, AI systems are only as good as the data and algorithms used to train them. If the data used to train an AI system is biased or incomplete, the system may produce biased or inaccurate results. For example, if a facial recognition system is trained on a dataset that includes mostly white faces, it may struggle to recognize people of other races.

- Secondly, AI systems can be vulnerable to attacks that give outsiders unauthorized access to the system and its data, compromising the accuracy and fairness of its decisions. AI systems can also be manipulated deliberately, for example through specially crafted inputs or tampered training data, further undermining their accountability.

- Thirdly, a lack of transparency in AI decision-making can make errors and biases difficult to identify and correct. AI systems often operate as black boxes that offer little insight into how they arrive at a particular decision, so humans struggle to trace where the system's decision-making process went wrong.

- Fourthly, legal and regulatory frameworks may not be adequate to address issues related to AI accountability. Current legal frameworks may not fully account for the unique challenges presented by AI systems, leaving gaps in accountability and regulation.

- Finally, as AI continues to advance, new ethical and accountability challenges may arise that require ongoing attention and effort to address. This means that AI accountability frameworks will need to evolve alongside the technology itself, in order to ensure that AI systems are used ethically and responsibly.
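Problems like the facial recognition example often become visible only when performance is measured separately for each group rather than as one overall number. The sketch below reports accuracy per group and the gap between the best-served and worst-served groups. The function names, identity labels, and group names are hypothetical; in practice the groups and an acceptable gap would need to be defined for the specific application.

```python
from collections import defaultdict


def accuracy_by_group(predicted, actual, groups):
    """Accuracy computed separately for each group label."""
    correct = defaultdict(int)
    total = defaultdict(int)
    for p, a, g in zip(predicted, actual, groups):
        total[g] += 1
        correct[g] += int(p == a)
    return {g: correct[g] / total[g] for g in total}


def accuracy_gap(predicted, actual, groups):
    """Difference between the highest and lowest group accuracy."""
    rates = accuracy_by_group(predicted, actual, groups)
    return max(rates.values()) - min(rates.values())


# Hypothetical identity matches from a face recognition system.
predicted = ["ann", "bo", "cy", "di", "unknown", "ann", "gus", "hal"]
actual = ["ann", "bo", "cy", "di", "eve", "fay", "gus", "hal"]
groups = ["group A"] * 4 + ["group B"] * 4

print(accuracy_by_group(predicted, actual, groups))  # {'group A': 1.0, 'group B': 0.5}
print(accuracy_gap(predicted, actual, groups))  # 0.5
```

Overall accuracy in this example is 75%, a figure that hides the fact that the system is always right for group A but only half the time for group B.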

Regulations for Accountable AI

Governments and organizations around the world are developing regulations and guidelines to promote accountable AI. Here are some examples of regulations for accountable AI:

- European Union's General Data Protection Regulation (GDPR): The GDPR sets rules for data protection and privacy, which are particularly relevant to AI systems that rely on personal data. Its Article 22 also restricts decisions based solely on automated processing, including profiling, that produce legal or similarly significant effects on individuals.

- Algorithmic Accountability Act: This proposed legislation in the United States would require large companies to assess their automated decision systems for impacts such as bias and discrimination.

- Ethics Guidelines for Trustworthy AI: This set of guidelines, developed by the European Commission's High-Level Expert Group on AI, provides a framework for ethical and trustworthy AI. The guidelines emphasize transparency, accountability, and human oversight of AI systems.

- Singapore Model AI Governance Framework: The Singapore government developed this framework to promote the responsible and ethical use of AI. The framework includes guidelines for AI decision-making, data management, and human oversight of AI systems.

- Montreal Declaration for Responsible AI: This declaration, developed at the Université de Montréal with input from AI researchers, experts, and the public, sets out ten principles for responsible AI development and use, including well-being, respect for autonomy, equity, democratic participation, and responsibility.

Conclusion

- AI accountability refers to the responsibility that individuals and organizations have for the actions and decisions of AI systems. It includes ensuring that AI is used in a way that is transparent, ethical, and in line with legal and regulatory requirements.

- Challenges in ensuring AI accountability include the "black box" problem, the potential for AI systems to perpetuate and amplify biases, the lack of clear regulatory frameworks, the rapidly evolving nature of AI, and the potential for individuals and organizations to circumvent regulations and standards.

- Creating AI accountability requires attention to functional performance, data, preventing unintended biases, and safeguarding against unintended uses.

- However, there is no guarantee that AI systems will always produce accurate and fair results, due to issues such as biased data, vulnerability to attacks and manipulation, lack of transparency, inadequate legal and regulatory frameworks, and evolving ethical and accountability challenges.

- Governments and organizations are developing regulations and guidelines for accountable AI to address these challenges and ensure that AI systems are used ethically and responsibly.