Understanding Bidirectional RNN

Overview

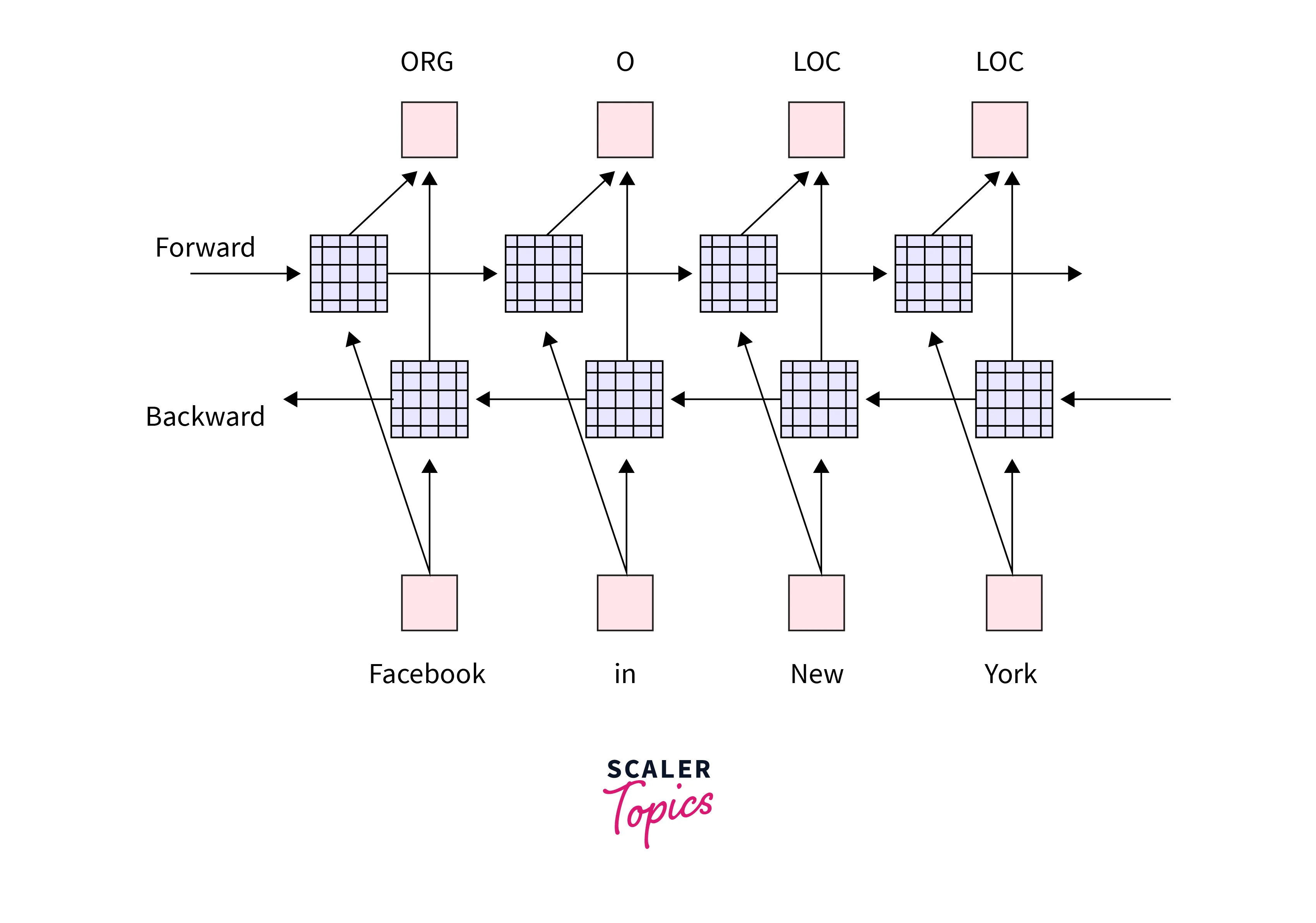

Bi-directional recurrent neural networks (Bi-RNNs) are artificial neural networks that process input data in both the forward and backward directions. They are often used in natural language processing tasks, such as language translation, text classification, and named entity recognition. In addition, they can capture contextual dependencies in the input data by considering past and future contexts. Bi-RNNs consist of two separate RNNs that process the input data in opposite directions, and the outputs of these RNNs are combined to produce the final output.

What is Bidirectional RNN?

A bi-directional recurrent neural network (Bi-RNN) is a type of recurrent neural network (RNN) that processes input data in both forward and backward directions. The goal of a Bi-RNN is to capture the contextual dependencies in the input data by processing it in both directions, which can be useful in various natural language processing (NLP) tasks.

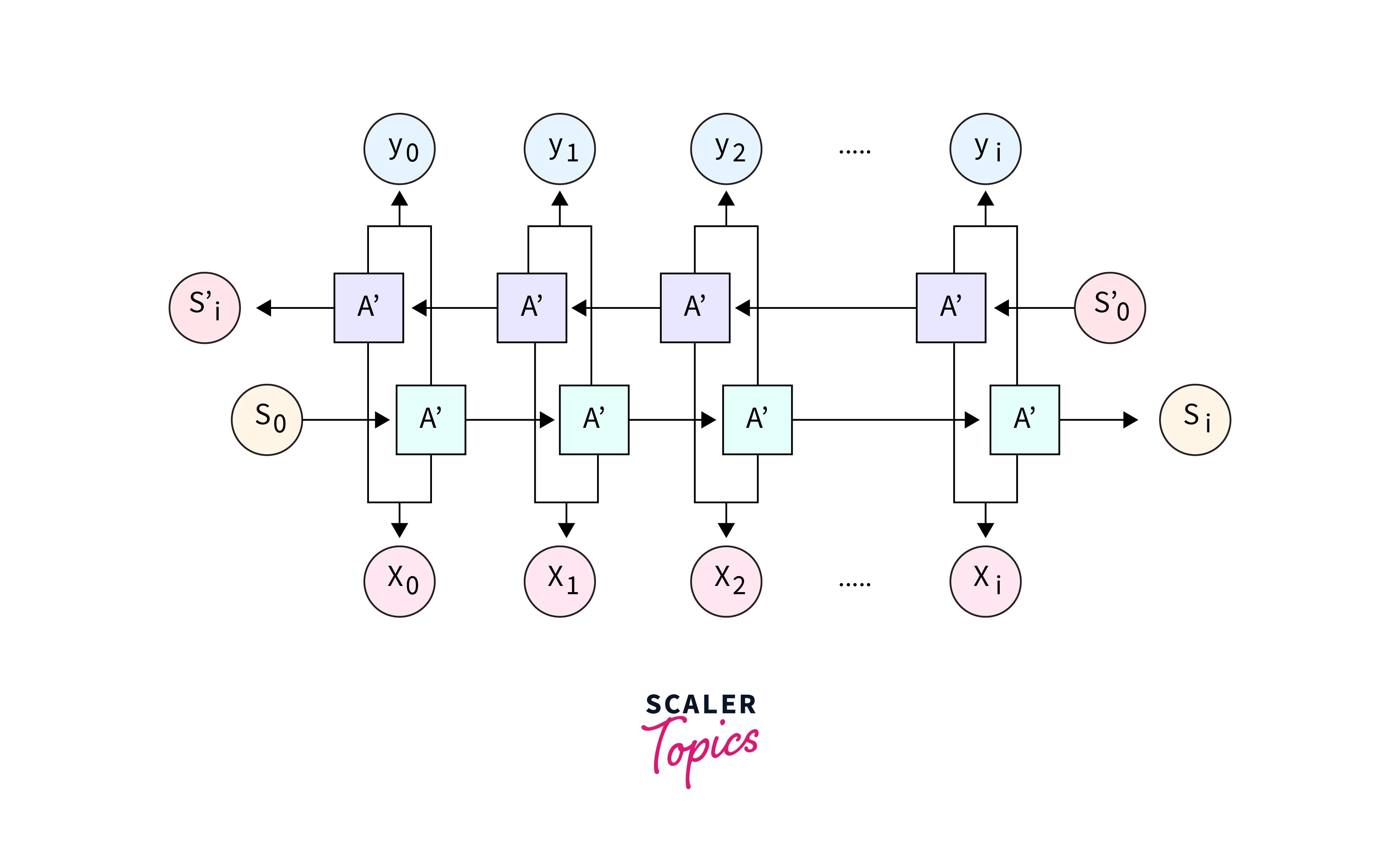

In a Bi-RNN, the input data is passed through two separate RNNs: one processes the data in the forward direction, while the other processes it in the reverse direction. The outputs of these two RNNs are then combined in some way to produce the final output.

One common way to combine the outputs of the forward and reverse RNNs is to concatenate them, but other methods, such as element-wise addition or multiplication, can also be used. The choice of combination method can depend on the specific task and the desired properties of the final output.

Need for Bi-directional RNNs

- A uni-directional recurrent neural network (RNN) processes input sequences in a single direction, either from left to right or right to left.

- This means the network can only use information from earlier time steps when making predictions at later time steps.

- This can be limiting, as the network may not capture important contextual information relevant to the output prediction.

- For example, in natural language processing tasks, a uni-directional RNN may not accurately predict a missing word in a sentence if the words that come after it provide important context for that word.

Consider using a recurrent network to predict the masked word in each of the following sentences.

- Apple is my favorite _____.

- Apple is my favorite _____, and I work there.

- Apple is my favorite _____, and I am going to buy one.

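In the first sentence, the answer could be fruit, company, or phone. In the second and third sentences, however, it cannot be a fruit: the words after the blank ("I work there" and "I am going to buy one") point to a company and a phone, respectively.

A recurrent neural network that processes the input only from left to right reaches the blank before it has read those words, so it may not predict the right answer for these sentences.

To perform well on tasks like this, the model must be able to use the context on both sides of the blank, which means processing the sequence in both directions.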
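
The argument can be made concrete by listing which tokens each direction has read when it reaches the blank in the second sentence. This is a minimal sketch: the whitespace tokenization and the `context_at` helper are illustrative assumptions, not part of any library.

```python
def context_at(tokens, position):
    """Return the tokens each direction has read on reaching `position`."""
    forward_seen = tokens[: position + 1]   # left to right: start .. position
    backward_seen = tokens[position:]       # right to left: position .. end (read in reverse)
    return forward_seen, backward_seen


# Whitespace tokenization of the second example; "_____" marks the masked word.
sentence = "Apple is my favorite _____ , and I work there .".split()
blank = sentence.index("_____")
fwd, bwd = context_at(sentence, blank)
print("forward RNN has read: ", fwd)
print("backward RNN has read:", bwd)
```

Only the backward direction has read "work there" by the time it reaches the blank, and that phrase is the clue that rules out "fruit".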

Bi-directional RNNs

- A bidirectional recurrent neural network (RNN) is a type of recurrent neural network (RNN) that processes input sequences in both forward and backward directions.

- This allows the RNN to capture information from the input sequence that may be relevant to the output prediction but would be unavailable to a traditional RNN that processes the input sequence in only one direction.

- This allows the network to consider information from both the past and the future when making predictions, rather than relying only on the current and earlier time steps.

- This can be useful for tasks such as language processing, where understanding the context of a word or phrase can be important for making accurate predictions.

- In general, bidirectional RNNs can help improve a model's performance on various sequence-based tasks.

This means that the network has two separate RNNs:

- One that processes the input sequence from left to right

- Another that processes the input sequence from right to left

These two RNNs are typically called forward and backward RNNs, respectively.

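During the forward pass, the forward RNN processes the input sequence in the usual way, taking the input at each time step and using it to update its hidden state. The backward RNN does the same, but starts at the last time step and moves toward the first. At each time step, the hidden states of the two RNNs are combined, and the combined state is used to predict the output.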
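
The forward pass described above can be sketched in plain Python. This is a minimal illustration, assuming simple Elman (tanh) cells, zero initial states, and small random weights; the helper names (`rnn_step`, `run_rnn`, `bidirectional_rnn`, `random_params`) are hypothetical and not taken from any library. It shows two key details: the backward RNN reads the reversed sequence, and its states are flipped back so that each time step concatenates the forward and backward states for the same input position.

```python
import math
import random


def matvec(matrix, vector):
    """Multiply a matrix (a list of rows) by a vector (a list)."""
    return [sum(w * v for w, v in zip(row, vector)) for row in matrix]


def rnn_step(x, h_prev, W_x, W_h, bias):
    """One Elman RNN update: h_t = tanh(W_x x_t + W_h h_{t-1} + bias)."""
    pre = [p + q + r for p, q, r in zip(matvec(W_x, x), matvec(W_h, h_prev), bias)]
    return [math.tanh(z) for z in pre]


def run_rnn(inputs, params, hidden_size):
    """Run one RNN over `inputs` in the order given; return every hidden state."""
    h = [0.0] * hidden_size
    states = []
    for x in inputs:
        h = rnn_step(x, h, *params)
        states.append(h)
    return states


def bidirectional_rnn(inputs, fwd_params, bwd_params, hidden_size):
    """Concatenate forward and backward hidden states at every time step."""
    forward_states = run_rnn(inputs, fwd_params, hidden_size)
    # The backward RNN reads the reversed sequence; flipping its states back
    # makes index t in both lists refer to the same input position.
    backward_states = run_rnn(inputs[::-1], bwd_params, hidden_size)[::-1]
    return [fw + bw for fw, bw in zip(forward_states, backward_states)]


def random_params(input_size, hidden_size, rng):
    """Small random weights for one direction (hypothetical initialization)."""
    W_x = [[rng.uniform(-0.5, 0.5) for _ in range(input_size)] for _ in range(hidden_size)]
    W_h = [[rng.uniform(-0.5, 0.5) for _ in range(hidden_size)] for _ in range(hidden_size)]
    return W_x, W_h, [0.0] * hidden_size


rng = random.Random(0)
input_size, hidden_size, steps = 3, 2, 4
sequence = [[rng.uniform(-1, 1) for _ in range(input_size)] for _ in range(steps)]
fwd_params = random_params(input_size, hidden_size, rng)
bwd_params = random_params(input_size, hidden_size, rng)  # not shared with fwd

outputs = bidirectional_rnn(sequence, fwd_params, bwd_params, hidden_size)
print(len(outputs), len(outputs[0]))  # 4 4: one output per step, 2 * hidden_size wide
```

The two directions have separate weights and never feed into each other; they only meet when their states are concatenated.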

Backpropagation through time (BPTT) is a widely used algorithm for training recurrent neural networks (RNNs). It is a variant of the backpropagation algorithm designed to handle the temporal nature of RNNs, where the hidden state at each time step depends on the input at that step and on the hidden state carried over from the previous time step.

In the case of a bidirectional RNN, BPTT runs through two separate chains of hidden states: one for the forward RNN and one for the backward RNN. During the forward pass of training, both RNNs process the input sequence (the forward RNN from the first time step to the last, the backward RNN from the last to the first), and their combined states are used to predict the output sequence. These predictions are then compared to the target output sequence to compute the error.

During the backward pass, the error is propagated from the outputs into both chains. In the forward RNN, gradients flow from the last time step back toward the first; in the backward RNN, they flow from the first time step toward the last. In each case, the gradients travel in the reverse of the order in which that RNN read the sequence.

Once both chains have been processed, the weights of the forward RNN, the backward RNN, and the output layer are updated using the computed gradients. This process is repeated for many iterations until the model converges.

This allows the bidirectional RNN to consider information from past and future time steps when making predictions, which can improve the model's accuracy on tasks where future context matters.
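
To see how the gradients flow through the two chains, the sketch below uses a deliberately tiny bidirectional RNN: scalar inputs, a scalar tanh hidden state in each direction, an output y_t = w*h_t + v*g_t that combines them, and a squared-error loss. All parameter names (a, b, c, d, w, v) and values are hypothetical. The `bptt` function walks the forward chain from the last time step to the first and the backward chain from the first to the last, and the result is checked against finite-difference gradients.

```python
import math


def forward(params, xs):
    """Forward pass: both RNNs run over xs, then their states are combined."""
    a, b, c, d, w, v = params
    T = len(xs)
    h = [0.0] * T            # forward RNN states, filled for t = 0 .. T-1
    prev = 0.0
    for t in range(T):
        prev = math.tanh(a * xs[t] + b * prev)
        h[t] = prev
    g = [0.0] * T            # backward RNN states, filled for t = T-1 .. 0
    nxt = 0.0
    for t in reversed(range(T)):
        nxt = math.tanh(c * xs[t] + d * nxt)
        g[t] = nxt
    ys = [w * h[t] + v * g[t] for t in range(T)]
    return h, g, ys


def loss(params, xs, targets):
    """Squared-error loss summed over time steps."""
    _, _, ys = forward(params, xs)
    return 0.5 * sum((y - z) ** 2 for y, z in zip(ys, targets))


def bptt(params, xs, targets):
    """Analytic gradients of the loss w.r.t. (a, b, c, d, w, v)."""
    _, b, _, d, w, v = params
    h, g, ys = forward(params, xs)
    T = len(xs)
    err = [ys[t] - targets[t] for t in range(T)]       # dL/dy_t
    dw = sum(err[t] * h[t] for t in range(T))
    dv = sum(err[t] * g[t] for t in range(T))

    # Forward RNN: h_t feeds h_{t+1}, so gradients flow from t = T-1 down to 0.
    da = db = 0.0
    carry = 0.0
    for t in reversed(range(T)):
        dpre = (err[t] * w + carry) * (1.0 - h[t] ** 2)
        da += dpre * xs[t]
        db += dpre * (h[t - 1] if t > 0 else 0.0)
        carry = dpre * b                                # passed on to h_{t-1}

    # Backward RNN: g_t feeds g_{t-1}, so gradients flow from t = 0 up to T-1.
    dc = dd = 0.0
    carry = 0.0
    for t in range(T):
        dpre = (err[t] * v + carry) * (1.0 - g[t] ** 2)
        dc += dpre * xs[t]
        dd += dpre * (g[t + 1] if t < T - 1 else 0.0)
        carry = dpre * d                                # passed on to g_{t+1}

    return [da, db, dc, dd, dw, dv]


def numeric_grad(params, xs, targets, eps=1e-6):
    """Central finite differences, used only to check bptt()."""
    grads = []
    for i in range(len(params)):
        up, down = list(params), list(params)
        up[i] += eps
        down[i] -= eps
        grads.append((loss(up, xs, targets) - loss(down, xs, targets)) / (2 * eps))
    return grads


params = [0.5, -0.3, 0.8, 0.4, 1.2, -0.7]   # a, b, c, d, w, v
xs = [0.1, -0.4, 0.9, 0.3]
targets = [0.2, 0.0, -0.5, 0.4]
max_err = max(abs(p - q) for p, q in zip(bptt(params, xs, targets),
                                          numeric_grad(params, xs, targets)))
print(f"largest gradient mismatch: {max_err:.2e}")
```

A tiny mismatch confirms that each chain was unrolled in the right time order; a large one would point to a mistake in one of the two loops.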

Merge Modes in Bidirectional RNN

In a bidirectional recurrent neural network (RNN), two separate RNNs process the input data in opposite directions (forward and backward). The outputs of these two RNNs are then combined, or "merged," in some way to produce the final output of the model.

There are several ways in which the outputs of the forward and backward RNNs can be merged, depending on the specific needs of the model and the task it is being used for. Some common merge modes include:

- Concatenation: In this mode, the outputs of the forward and backward RNNs are concatenated along the feature dimension, so the merged output at each time step has twice as many features as the output of either RNN.

- Sum: In this mode, the outputs of the forward and backward RNNs are added together element-wise, so the merged output has the same shape as the output of each RNN.

- Average: In this mode, the outputs of the forward and backward RNNs are averaged element-wise, so the merged output has the same shape as the output of each RNN.

- Maximum: In this mode, the element-wise maximum of the forward and backward outputs is taken at each time step, so the merged output has the same shape as the output of each RNN.

Which merge mode to use will depend on the specific needs of the model and the task it is being used for. Concatenation is generally a good default choice and works well in many cases, but other merge modes may be more appropriate for certain tasks.
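
A minimal sketch of the merge modes for a single time step, using plain Python lists as the two directions' outputs. The mode strings "concat", "sum", "mul", and "ave" borrow the names Keras uses for the `merge_mode` argument of its `Bidirectional` wrapper; "max" has no Keras equivalent and is included only because it is described above. The `merge` function itself is hypothetical.

```python
def merge(forward_out, backward_out, mode="concat"):
    """Combine the forward and backward outputs for one time step."""
    if len(forward_out) != len(backward_out):
        raise ValueError("both directions must produce outputs of the same size")
    if mode == "concat":
        return forward_out + backward_out               # twice as many features
    pairs = list(zip(forward_out, backward_out))
    if mode == "sum":
        return [f + b for f, b in pairs]
    if mode == "mul":
        return [f * b for f, b in pairs]
    if mode == "ave":
        return [(f + b) / 2 for f, b in pairs]
    if mode == "max":
        return [max(f, b) for f, b in pairs]
    raise ValueError(f"unknown merge mode: {mode!r}")


forward_out = [0.5, -1.0, 2.0]
backward_out = [1.5, 0.0, -2.0]
for mode in ("concat", "sum", "mul", "ave", "max"):
    print(mode, merge(forward_out, backward_out, mode))
```

With three features per direction, "concat" returns six values while every element-wise mode returns three, which is the shape difference described in the list above.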

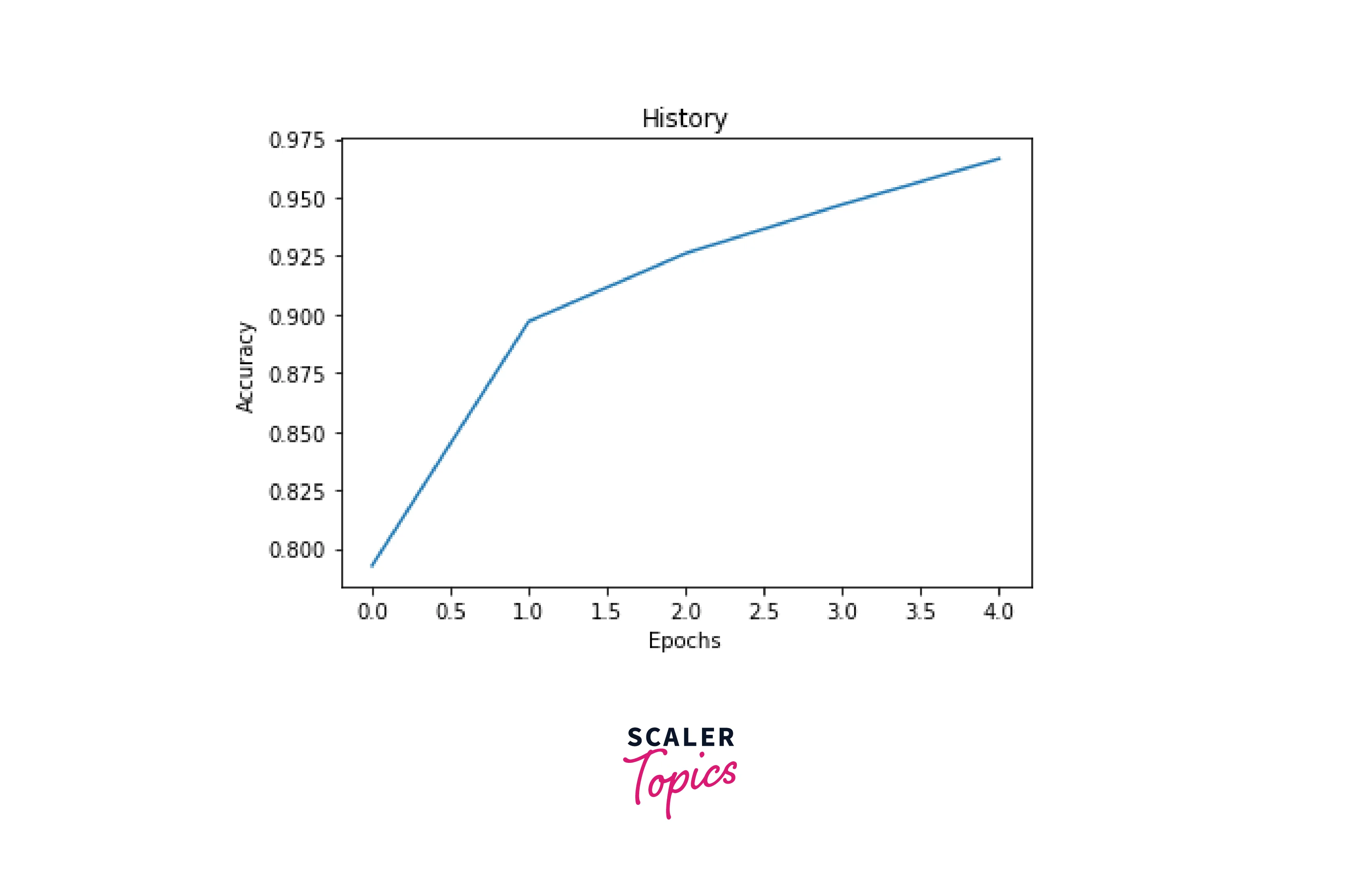

Simple Bidirectional RNN for Sentiment Analysis

This simple Bi-RNN model for sentiment analysis can take in text data as input, process it in both forward and backward directions, and output a probability score indicating the sentiment of the text.

Import

Import the required libraries and load the dataset for the sentiment analysis task.

Padding

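Padding is a common technique used in natural language processing (NLP) to ensure all input sequences have the same length. This is often necessary because many NLP models, including neural networks trained on batches of data, require fixed-length input sequences: shorter sequences are padded with a placeholder value (typically 0), and longer ones are truncated.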
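
As an illustration of what padding does, here is a standard-library sketch of a padding helper. The function name and its defaults (padding and truncating at the start of each sequence, padding with 0) are modeled on Keras's `pad_sequences`, but this is a hypothetical reimplementation, not the Keras function, and the token ids are made up.

```python
def pad_sequences(sequences, maxlen=None, padding="pre", truncating="pre", value=0):
    """Pad or truncate integer sequences to a common length.

    "pre" acts on the start of each sequence, "post" on the end.
    """
    if maxlen is None:
        maxlen = max((len(s) for s in sequences), default=0)
    padded = []
    for seq in sequences:
        seq = list(seq)
        if len(seq) > maxlen:
            # Keep the last maxlen tokens ("pre") or the first maxlen ("post").
            seq = seq[len(seq) - maxlen:] if truncating == "pre" else seq[:maxlen]
        fill = [value] * (maxlen - len(seq))
        padded.append(fill + seq if padding == "pre" else seq + fill)
    return padded


reviews = [[12, 7, 3], [5, 9], [8, 1, 4, 6, 2]]   # hypothetical token ids
print(pad_sequences(reviews, maxlen=4))
# [[0, 12, 7, 3], [0, 0, 5, 9], [1, 4, 6, 2]]
```

Padding at the start keeps the real tokens next to the final time step, which is where a forward RNN's last hidden state is read from.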

Build the Model
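
A model like this is usually built with a deep learning framework, for example from Keras `Embedding`, `Bidirectional`, `SimpleRNN`, and `Dense` layers. As a framework-free stand-in, the hypothetical class below shows the same architecture: token embeddings, a forward and a backward RNN with separate weights, a concatenation of their final hidden states, and a sigmoid output giving a probability of positive sentiment. The weights are random and untrained, so the output only demonstrates the data flow.

```python
import math
import random


class TinyBiRNNClassifier:
    """Embedding -> bidirectional Elman RNN -> sigmoid output (untrained sketch)."""

    def __init__(self, vocab_size, embed_dim, hidden_size, seed=0):
        rng = random.Random(seed)

        def init(rows, cols):
            return [[rng.uniform(-0.1, 0.1) for _ in range(cols)] for _ in range(rows)]

        self.hidden_size = hidden_size
        self.embedding = init(vocab_size, embed_dim)   # one vector per token id
        # Each direction gets its own (input, recurrent) weights; biases omitted.
        self.fwd = (init(hidden_size, embed_dim), init(hidden_size, hidden_size))
        self.bwd = (init(hidden_size, embed_dim), init(hidden_size, hidden_size))
        self.out_w = init(1, 2 * hidden_size)[0]       # reads concatenated states
        self.out_b = 0.0

    def _final_state(self, vectors, weights):
        """Run one RNN over `vectors` and return its last hidden state."""
        W_x, W_h = weights
        h = [0.0] * self.hidden_size
        for x in vectors:
            h = [
                math.tanh(
                    sum(w * xi for w, xi in zip(W_x[i], x))
                    + sum(u * hj for u, hj in zip(W_h[i], h))
                )
                for i in range(self.hidden_size)
            ]
        return h

    def predict_proba(self, token_ids):
        """Return the (untrained) model's probability that the text is positive."""
        vectors = [self.embedding[t] for t in token_ids]
        h_fwd = self._final_state(vectors, self.fwd)         # left to right
        h_bwd = self._final_state(vectors[::-1], self.bwd)   # right to left
        features = h_fwd + h_bwd                             # "concat" merge
        logit = sum(w * f for w, f in zip(self.out_w, features)) + self.out_b
        return 1.0 / (1.0 + math.exp(-logit))


model = TinyBiRNNClassifier(vocab_size=20, embed_dim=4, hidden_size=3)
p = model.predict_proba([0, 0, 5, 9])   # a padded review of hypothetical token ids
print(f"P(positive) = {p:.3f}")
```

A real model would also mask padding tokens so they do not affect the hidden states; this sketch treats token 0 like any other token.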

Train

Train the model defined above on the imported data.

Accuracy

Evaluate the model's accuracy.
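
Accuracy for a binary sentiment model is usually computed by thresholding each predicted probability at 0.5 and counting how many resulting labels match the true labels. A minimal sketch, assuming labels 1 (positive) and 0 (negative); the helper names and the example probabilities and labels are hypothetical:

```python
def predict_labels(probabilities, threshold=0.5):
    """Turn P(positive) scores into 0/1 labels; scores above threshold are positive."""
    return [1 if p > threshold else 0 for p in probabilities]


def accuracy(predicted, actual):
    """Fraction of predictions that match the true labels."""
    if len(predicted) != len(actual):
        raise ValueError("predicted and actual must have the same length")
    return sum(p == a for p, a in zip(predicted, actual)) / len(actual)


probs = [0.91, 0.12, 0.55, 0.40]   # hypothetical model outputs
labels = [1, 0, 0, 0]              # hypothetical ground truth
print(accuracy(predict_labels(probs), labels))  # 0.75
```

Here the third review is misclassified as positive, so three of the four predictions are correct.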

Predict

What’s the Difference Between BRNN and Recurrent Neural Network?

Unlike standard recurrent neural networks, BRNNs are trained using inputs from both the positive and negative directions of time simultaneously.

| Characteristic | BRNN | RNN |
|---|---|---|
| Definition | Bidirectional Recurrent Neural Networks. | Recurrent Neural Networks. |
| Purpose | Process input sequences in both forward and backward directions. | Process input sequences in a single direction. |
| Output | Output at each time step depends on the past and future inputs. | Output at each time step depends only on the past inputs. |
| Training | Trained on both forward and backward sequences. | Trained on a single sequence. |
| Examples | Natural language processing tasks, speech recognition. | Time series prediction, language translation. |

BRNNs split the neurons of a regular RNN into two directions: one for the forward states (positive time direction) and one for the backward states (negative time direction). The outputs of the forward states are not connected to the inputs of the backward states, and vice versa. By using both time directions simultaneously, input data from the past and the future of the current time frame can be used to calculate the same output. A standard recurrent network, by contrast, can only include future information by delaying its output until those future inputs have been read.

Applications of Bi-directional RNNs

Bidirectional recurrent neural networks (RNNs) can outperform traditional RNNs on various tasks, particularly those involving sequential data processing. Some examples of tasks where bidirectional RNNs have been shown to outperform traditional RNNs include:

- Natural language processing tasks, such as language translation and sentiment analysis, where understanding the context of a word or phrase can be important for making accurate predictions.

- Time series forecasting tasks, such as predicting stock prices or weather patterns, where the sequence of past data can provide important clues about future trends.

- Audio processing tasks, such as speech recognition or music generation, where the information in the audio signal can be complex and non-linear.

In general, bidirectional RNNs can be useful for any task where the input data has a temporal structure and where understanding the context of the data is important for making accurate predictions.

Advantages and Disadvantages of Bi-directional RNNs

Advantages:

Bidirectional Recurrent Neural Networks (RNNs) have several advantages over traditional RNNs. Some of the key advantages of bidirectional RNNs include the following:

- Improved performance on tasks that involve processing sequential data. Because bidirectional RNNs can consider information from past and future time steps when making predictions, they can outperform traditional RNNs on tasks such as natural language processing, time series forecasting, and audio processing.

- The ability to capture long-term dependencies in the data. Traditional RNNs can struggle to capture relationships between data points that are far apart, because gradients tend to vanish as they are propagated back through many time steps. Bidirectional RNNs do not remove this problem, but they give every position access to context from both ends of the sequence, which can help when the relevant information lies after the current position.

- Better handling of complex input sequences. Bidirectional RNNs can capture complex patterns in the input data that may be difficult for traditional RNNs to model because they can consider both the past and future context of the data.

Disadvantages:

However, Bidirectional RNNs also have some disadvantages. Some of the key disadvantages of bidirectional RNNs include the following:

- Increased computational complexity. Because bidirectional RNNs have two separate RNNs (one for each direction), they can require more computational resources to train and evaluate than traditional RNNs. With two RNNs of the same size, the work per sequence roughly doubles, which makes them slower at run time.

- More work to optimize. Because bidirectional RNNs have more parameters (due to the two separate RNNs), they can be more difficult to optimize. This can make finding the right set of weights for the model challenging and lead to slower convergence during training.

- The need for the complete input sequence. Because the backward RNN starts from the last time step, a bidirectional RNN needs the entire input sequence before it can produce any output. This can be a disadvantage for real-time or streaming tasks, and for tasks such as next-word prediction, where future inputs are not available when the prediction has to be made.
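
The parameter doubling mentioned above can be made concrete. Assuming plain Elman cells with an input kernel, a recurrent kernel, and one bias vector (the way a Keras `SimpleRNN` layer is parameterized), a bidirectional layer with the same settings has exactly twice as many weights, because the forward and backward RNNs do not share parameters. The helper names are hypothetical:

```python
def simple_rnn_params(input_dim, units):
    """Weights in one Elman RNN layer: input kernel + recurrent kernel + bias."""
    return units * input_dim + units * units + units


def bidirectional_params(input_dim, units):
    """Two independent RNNs with the same configuration, one per direction."""
    return 2 * simple_rnn_params(input_dim, units)


print(simple_rnn_params(128, 64))     # 12352
print(bidirectional_params(128, 64))  # 24704
```

For 128-dimensional inputs and 64 units, that is 8,192 input weights, 4,096 recurrent weights, and 64 biases per direction.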

Limitations of Bidirectional RNN

While bidirectional RNNs have proven to be effective in a wide range of natural language processing tasks, they also have some limitations that you should be aware of:

- Computational complexity: Bidirectional RNNs are more computationally complex than their uni-directional counterparts, as they need to process the input sequence twice, once in each direction. This can make them more resource-intensive to train and deploy.

- Memory requirements: Bidirectional RNNs require more memory to store the internal states of the forward and backward RNNs. This can be an issue when working with long input sequences or running the model on devices with limited memory.

- Limited context: While bidirectional RNNs can capture contextual information from past and future input, in practice their inputs are usually padded or truncated to a fixed maximum length, and information from distant time steps fades as it passes through many recurrent updates. This means they may be unable to capture very long-range dependencies or context that lies outside the input sequence.

- Training difficulty: Training bidirectional RNNs can be more difficult than training uni-directional RNNs, because gradients must flow through long chains of time steps in both directions, where they are prone to vanishing or exploding. This can make the model harder to optimize and may require special techniques, such as gradient clipping to keep the gradients from exploding.

While bidirectional RNNs are a powerful tool for modeling sequential data, it is important to consider their limitations carefully and whether they are the appropriate model for a given task.

Conclusion

- Bidirectional RNNs have higher computational complexity than uni-directional RNNs because they contain two separate RNNs.

- Bidirectional RNNs are commonly used for sequence encoding and for estimating observations given bidirectional context.

- Bidirectional RNNs can be costly to train because of their long gradient chains.