Fashion-MNIST Clothing Classification Using TensorFlow

Overview

Fashion-MNIST is a benchmark dataset for computer vision and deep learning, built around a clothing classification problem. Provided by Zalando, it is a collection of images of the company's fashion articles. Despite its simplicity, the dataset is useful for learning how to develop, evaluate, and apply deep convolutional neural networks for image classification.

What are we Building?

This article builds a deep learning model with the TensorFlow framework to solve an image classification problem. More specifically, we will learn how to create a classification model for a dataset made up of images rather than plain numeric features.

Problem Statement

The online fashion market is constantly expanding. An artificial intelligence system that recognizes and categorizes clothing can help businesses increase sales and better understand their users. An algorithm that identifies clothing can help companies in the clothing sales industry understand the profile of potential customers and target sales at particular niches.

Pre-requisites

You should be familiar with deep learning models and frameworks, or at least have a conceptual understanding of how they work. To solve this problem, we will use the Fashion MNIST dataset available in TensorFlow 2.0, which uses Keras as its high-level API, to recognize clothing from images.

How are we Going to Build this?

We use the Fashion MNIST dataset from the Keras API to build a feedforward deep learning model, trained with backpropagation, whose layers learn features from the dataset and predict the class to which each image belongs.

Final Output

The finished model predicts the class to which a given image belongs.

Requirements

This article guides you through building a deep learning model for the Fashion MNIST image classification problem end to end with the Python TensorFlow library. In addition, we use the NumPy and Matplotlib libraries to handle arrays and create visualizations. Make sure you have recent versions of these libraries installed before running the code in this article.

Fashion-MNIST Clothing Classification Using TensorFlow

The Fashion-MNIST dataset, released in 2017, consists of Zalando's article images. It has a training set of 60,000 examples and a test set of 10,000 examples. Each example is a 28 x 28 grayscale image paired with a label drawn from one of ten classes. Zalando intends Fashion-MNIST to be a simple drop-in replacement for the original MNIST dataset when benchmarking machine learning methods.

Fashion MNIST's class labels are:

| Index | Class Name |
|---|---|
| 0 | T-shirt/top |
| 1 | Trouser |
| 2 | Pullover |
| 3 | Dress |
| 4 | Coat |
| 5 | Sandal |
| 6 | Shirt |
| 7 | Sneaker |
| 8 | Bag |
| 9 | Ankle boot |

As the table above shows, the target comprises 10 class labels (0 for T-shirt/top, 1 for Trouser, ..., 9 for Ankle boot). We must assign each article to one of these groups based on its image, so this is a multi-class classification problem.

Import Dataset

Import the libraries needed to carry out this classification and load the dataset.
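
As a minimal sketch of this step, the snippet below imports TensorFlow and wraps the Keras dataset loader in a small helper. The helper name `load_fashion_mnist` and the `class_names` list are our own additions for illustration (the names are copied from the class table above); `tf.keras.datasets.fashion_mnist.load_data()` is the Keras call that fetches the data.

```python
import tensorflow as tf

# Class names in label order, copied from the class table above.
class_names = [
    "T-shirt/top", "Trouser", "Pullover", "Dress", "Coat",
    "Sandal", "Shirt", "Sneaker", "Bag", "Ankle boot",
]


def load_fashion_mnist():
    """Return ((train_images, train_labels), (test_images, test_labels)).

    The first call downloads the dataset, so it needs network access.
    """
    return tf.keras.datasets.fashion_mnist.load_data()
```

Calling `(train_images, train_labels), (test_images, test_labels) = load_fashion_mnist()` returns NumPy arrays whose `.shape` attributes can then be printed to inspect the data.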

Explore the Data

The Fashion-MNIST train and test datasets are loaded, and their shapes are printed. We can see that the training dataset contains 60,000 samples, whereas the test dataset contains 10,000. We can also see that the images are square, measuring 28 x 28 pixels.

Output:

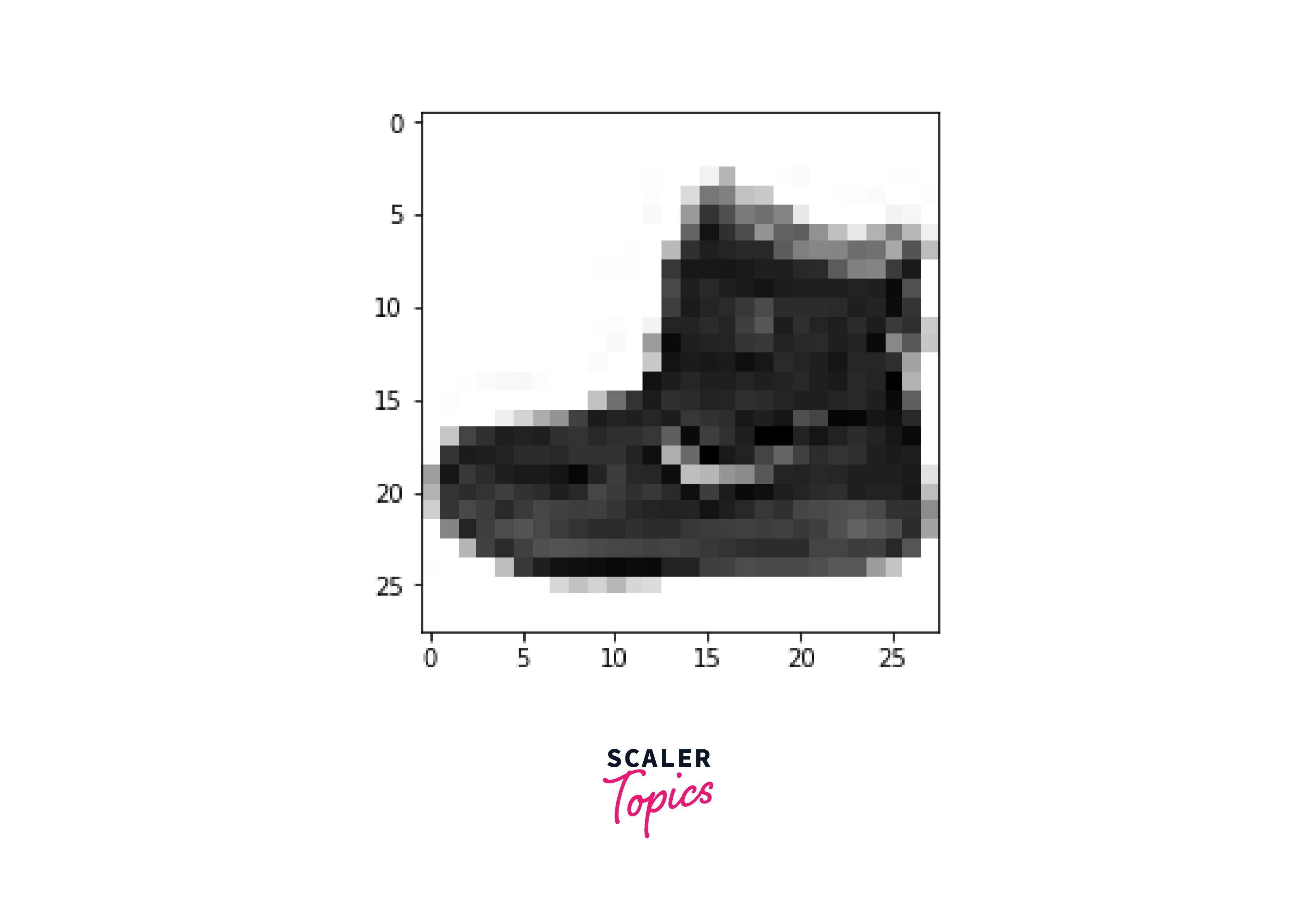

We'll now plot an input image to see what it looks like.
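
One possible way to plot a single image with Matplotlib is sketched below. The helper `show_image` is hypothetical, the `Agg` backend is chosen only so the sketch also runs without a display, and the gradient image is a stand-in for a real sample such as `train_images[0]`.

```python
import matplotlib
matplotlib.use("Agg")  # assumption: headless backend, so no display is required
import matplotlib.pyplot as plt
import numpy as np


def show_image(image, title=""):
    """Plot one 28 x 28 grayscale image with a colorbar and return the figure."""
    fig, ax = plt.subplots()
    im = ax.imshow(image, cmap="gray", vmin=0, vmax=255)
    fig.colorbar(im, ax=ax)  # shows the 0-255 pixel value scale
    ax.set_title(title)
    ax.grid(False)
    return fig


# Stand-in image (a horizontal gradient); replace it with train_images[0].
demo_image = np.tile(np.linspace(0, 255, 28, dtype=np.uint8), (28, 1))
fig = show_image(demo_image, title="stand-in image")
```

In an interactive session you would drop the `matplotlib.use("Agg")` line and call `plt.show()` to display the figure.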

Preprocess the Data

Each image in the collection has unsigned integer pixel values between 0 (black) and 255 (white). Although we do not yet know the best way to scale the pixel values for modeling, we know that some scaling will be needed.

A good starting point is to normalize the pixel values of the grayscale images, for example by rescaling them to the [0, 1] range. To do this, we first convert the data type from unsigned integers to floats and then divide the pixel values by the maximum value, 255.
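
The rescaling described above can be sketched in a few lines of NumPy. The function name `normalize_pixels` is our own, and the random batch is a stand-in with the same dtype and image size as the real data.

```python
import numpy as np


def normalize_pixels(images):
    """Convert uint8 pixel values to float32 and rescale them to [0, 1]."""
    return images.astype("float32") / 255.0


# Stand-in batch with the dataset's dtype and image size (hypothetical data).
rng = np.random.default_rng(0)
raw = rng.integers(0, 256, size=(4, 28, 28), dtype=np.uint8)
scaled = normalize_pixels(raw)
print(scaled.dtype, scaled.min(), scaled.max())
```

The same function should be applied to both the training and the test images so that the model sees identically scaled inputs.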

Build Model

Let's move on and construct a straightforward neural network model to predict our apparel categories.

Keras is another widely used deep learning package, created by François Chollet at Google. It is known for quick prototyping and ease of model construction. However, because it is a high-level library, it does not carry out low-level operations such as convolution itself; for that, it relies on a backend engine such as TensorFlow. TensorFlow 2.0 adopts the Keras API, available as tf.keras, as its main API.

Building a model in Keras involves four key steps:

- Defining the model

- Compiling the model

- Fitting the model

- Evaluating the model

Defining the Model

Keras provides two APIs for defining the model:

- The Sequential API

- The Functional API

The first layer of this network, a Flatten layer, changes the format of the images from a two-dimensional array (28 by 28 pixels) to a one-dimensional array (28 * 28 = 784 pixels). Think of this layer as unstacking the rows of pixels in the image and lining them up. It only restructures the Fashion MNIST data and has no parameters to learn. After the pixels are flattened, the network consists of a sequence of two tf.keras.layers.Dense layers.

These layers are fully (densely) connected. The first Dense layer has 128 nodes (or neurons). The second (and final) layer returns a logits array with 10 elements. Each node holds a score indicating how strongly the current image belongs to one of the ten classes.
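
To make the shapes concrete, here is a rough NumPy sketch of one forward pass through such a network. The weights are random placeholders rather than trained values, and the ReLU on the hidden layer is an assumption, since the text does not name the hidden activation.

```python
import numpy as np

rng = np.random.default_rng(0)
image = rng.random((28, 28)).astype("float32")  # stand-in for one scaled image

# Flatten: line up the 28 rows of 28 pixels into one vector of 784 values.
flat = image.reshape(-1)

# First dense layer: 784 inputs -> 128 units, followed by ReLU (assumed).
w1 = rng.normal(0.0, 0.05, size=(784, 128))
b1 = np.zeros(128)
hidden = np.maximum(flat @ w1 + b1, 0.0)

# Second dense layer: 128 inputs -> 10 logits, one score per class.
w2 = rng.normal(0.0, 0.05, size=(128, 10))
b2 = np.zeros(10)
logits = hidden @ w2 + b2

# Only the dense layers carry parameters; flattening has none.
n_params = w1.size + b1.size + w2.size + b2.size
print(flat.shape, hidden.shape, logits.shape, n_params)
```

The parameter count works out to 784 * 128 + 128 = 100,480 for the first dense layer and 128 * 10 + 10 = 1,290 for the second, 101,770 in total.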

We define the model with the Sequential API, stacking the Flatten layer and the two Dense layers described above in order.
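
A minimal sketch of this definition with `tf.keras.Sequential` might look as follows. The helper name `build_model` is ours, and the ReLU activation on the hidden layer is an assumption; the final layer has no activation so that it returns raw logits, as described above.

```python
import tensorflow as tf


def build_model():
    """Flatten -> Dense(128) -> Dense(10 logits), following the description above."""
    return tf.keras.Sequential([
        tf.keras.Input(shape=(28, 28)),
        tf.keras.layers.Flatten(),                      # 28 x 28 -> 784, no parameters
        tf.keras.layers.Dense(128, activation="relu"),  # ReLU is an assumption
        tf.keras.layers.Dense(10),                      # raw logits, one per class
    ])


model = build_model()
model.summary()
```

`model.summary()` prints each layer with its output shape and parameter count, which is a quick check that the architecture matches what you intended.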

Activation Functions

Activation functions are important in a neural network because they determine whether a neuron should be activated. Using mathematical operations, they decide whether the input to a layer matters for the prediction. In general, activation functions are of two types:

- Linear activation functions: the output of the function is not constrained to any range.

- Non-linear activation functions: the output follows a curve rather than a straight line, which helps the model generalize and adapt to a variety of data.

Non-linear activation functions are mainly divided based on their range or curve shape:

- Sigmoid or Logistic Activation Function.

- Softmax Activation Function.

- Tanh or hyperbolic tangent Activation Function.

- ReLU (Rectified Linear Unit) Activation Function.

- Leaky ReLU.
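
For reference, several of the functions listed above can be written in a few lines of NumPy. This is an illustrative sketch rather than how Keras implements them, and the `alpha=0.01` slope for Leaky ReLU is just a commonly used value chosen here as an assumption.

```python
import numpy as np


def linear(x):
    return x  # unbounded output


def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))  # output in (0, 1)


def tanh(x):
    return np.tanh(x)  # output in (-1, 1)


def relu(x):
    return np.maximum(x, 0.0)  # negative inputs become 0


def leaky_relu(x, alpha=0.01):
    return np.where(x > 0, x, alpha * x)  # small slope for negative inputs


x = np.array([-2.0, 0.0, 2.0])
for fn in (linear, sigmoid, tanh, relu, leaky_relu):
    print(fn.__name__, fn(x))
```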

Softmax Activation Function

The activation function we use for our problem is the softmax activation function. It converts the neural network's raw outputs (logits) into a vector of probabilities, that is, a probability distribution over the output classes. For example, in a multiclass classification task with N classes, softmax returns an output vector with N entries, each between 0 and 1 and together summing to 1.
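
As a small illustration, softmax divides the exponential of each score by the sum of the exponentials of all scores. The NumPy sketch below subtracts the maximum score first, a standard trick for numerical stability that does not change the result; the three input scores are made up for the example.

```python
import numpy as np


def softmax(logits):
    """Numerically stable softmax over the last axis."""
    shifted = logits - np.max(logits, axis=-1, keepdims=True)
    exps = np.exp(shifted)
    return exps / np.sum(exps, axis=-1, keepdims=True)


logits = np.array([2.0, 1.0, 0.1])  # hypothetical raw scores for N = 3 classes
probs = softmax(logits)
print(probs, probs.sum())
```

The largest logit always receives the largest probability, so taking the index of the maximum gives the same class before and after softmax.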

Compile the Model

After the model has been defined, the next step is to compile it. In this stage, we configure the model's learning process by specifying three parameters:

- The optimizer: the optimization algorithm we want to use, in this instance stochastic gradient descent.

- The loss function: the objective function we are trying to minimize, such as mean squared error for regression or cross-entropy loss for classification. Here it is the cross-entropy over the ten classes.

- The metrics: the measures used to evaluate the model's performance, for example accuracy.

With these three settings in place, the model can be compiled.
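
A hedged sketch of the compile step is shown below. It assumes integer class labels (hence the sparse variant of categorical cross-entropy) and a final layer that returns logits (hence `from_logits=True`); the hidden-layer ReLU is likewise an assumption.

```python
import tensorflow as tf

model = tf.keras.Sequential([
    tf.keras.Input(shape=(28, 28)),
    tf.keras.layers.Flatten(),
    tf.keras.layers.Dense(128, activation="relu"),
    tf.keras.layers.Dense(10),  # logits
])

model.compile(
    optimizer="sgd",  # stochastic gradient descent
    loss=tf.keras.losses.SparseCategoricalCrossentropy(from_logits=True),
    metrics=["accuracy"],
)
```

If the labels were one-hot encoded instead of integers, `tf.keras.losses.CategoricalCrossentropy` would be the matching loss.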

Train the Model

We have now defined and compiled the model, so we can start training it. Training is done with the model's fit function, to which we pass our features, labels, the number of training epochs we want, and the batch size.
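
The call to `fit` could look roughly like this. To keep the sketch runnable offline, it trains on random arrays with the Fashion-MNIST image shape; in practice you would pass the scaled images and labels returned by `tf.keras.datasets.fashion_mnist.load_data()`. The epoch count and batch size are arbitrary illustrative values.

```python
import numpy as np
import tensorflow as tf

# Stand-in data with the dataset's image shape (hypothetical, not real images).
rng = np.random.default_rng(0)
train_images = rng.random((256, 28, 28)).astype("float32")
train_labels = rng.integers(0, 10, size=256)

model = tf.keras.Sequential([
    tf.keras.Input(shape=(28, 28)),
    tf.keras.layers.Flatten(),
    tf.keras.layers.Dense(128, activation="relu"),
    tf.keras.layers.Dense(10),
])
model.compile(
    optimizer="sgd",
    loss=tf.keras.losses.SparseCategoricalCrossentropy(from_logits=True),
    metrics=["accuracy"],
)

# features, labels, number of epochs, batch size
history = model.fit(train_images, train_labels, epochs=2, batch_size=32, verbose=0)
print(history.history["loss"])
```

The returned `History` object records the loss and each metric once per epoch, which is handy for plotting learning curves.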

Evaluate the Accuracy

After training, we assess the model on the test set. To see the training accuracy as well, we can also evaluate the model on the training set itself.
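
Evaluation on both sets might be sketched as follows. Again, random stand-in arrays replace the real dataset so the example runs offline, which means the accuracies printed here are meaningless; only the calls are of interest.

```python
import numpy as np
import tensorflow as tf

# Stand-in data (hypothetical); use the real, scaled arrays in practice.
rng = np.random.default_rng(0)
train_images = rng.random((256, 28, 28)).astype("float32")
train_labels = rng.integers(0, 10, size=256)
test_images = rng.random((64, 28, 28)).astype("float32")
test_labels = rng.integers(0, 10, size=64)

model = tf.keras.Sequential([
    tf.keras.Input(shape=(28, 28)),
    tf.keras.layers.Flatten(),
    tf.keras.layers.Dense(128, activation="relu"),
    tf.keras.layers.Dense(10),
])
model.compile(
    optimizer="sgd",
    loss=tf.keras.losses.SparseCategoricalCrossentropy(from_logits=True),
    metrics=["accuracy"],
)
model.fit(train_images, train_labels, epochs=1, batch_size=32, verbose=0)

# evaluate returns the loss followed by the compiled metrics.
test_loss, test_acc = model.evaluate(test_images, test_labels, verbose=0)
train_loss, train_acc = model.evaluate(train_images, train_labels, verbose=0)
print(f"test accuracy:  {test_acc:.3f}")
print(f"train accuracy: {train_acc:.3f}")
```

A training accuracy that is much higher than the test accuracy is a common sign of overfitting.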

Make Predictions

Once the model has been trained, you can use it to make predictions about images. Applying the softmax function converts the model's linear outputs (logits) into probabilities, which are easier to interpret.
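
One way to turn logits into probabilities is to pass the output of `predict` through `tf.nn.softmax`, as in the sketch below. The model here is untrained and the input images are random stand-ins, so only the shapes and the row sums are meaningful.

```python
import numpy as np
import tensorflow as tf

model = tf.keras.Sequential([
    tf.keras.Input(shape=(28, 28)),
    tf.keras.layers.Flatten(),
    tf.keras.layers.Dense(128, activation="relu"),
    tf.keras.layers.Dense(10),  # logits
])

# Stand-in test images (hypothetical); use the real test set in practice.
rng = np.random.default_rng(0)
test_images = rng.random((5, 28, 28)).astype("float32")

logits = model.predict(test_images, verbose=0)          # shape (5, 10), raw scores
probabilities = tf.nn.softmax(logits, axis=-1).numpy()  # each row sums to 1
print(probabilities.shape, probabilities.sum(axis=1))
```

An alternative with the same effect is to append a `tf.keras.layers.Softmax()` layer to the trained model and call `predict` on the combined model.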

Verify Predictions

Here, the model has predicted a label for each image in the test set. Let's map the prediction index to a class name using a class_names array, starting with the first prediction.
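
Mapping a prediction to a class name amounts to taking the index of the largest probability and looking it up in `class_names`. The probability vector below is made up for illustration and is not a real model output.

```python
import numpy as np

# Class names in label order, copied from the class table.
class_names = [
    "T-shirt/top", "Trouser", "Pullover", "Dress", "Coat",
    "Sandal", "Shirt", "Sneaker", "Bag", "Ankle boot",
]

# Hypothetical probability vector for one test image.
prediction = np.array([0.01, 0.0, 0.0, 0.0, 0.0, 0.03, 0.0, 0.06, 0.0, 0.90])

predicted_index = int(np.argmax(prediction))  # index of the most likely class
print(predicted_index, class_names[predicted_index])
```

Comparing `predicted_index` with the corresponding entry of the test labels tells you whether the prediction was correct.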

The model is most confident that this image is an ankle boot, class_names[9]. Examining the test label shows that this classification is correct:

Output:

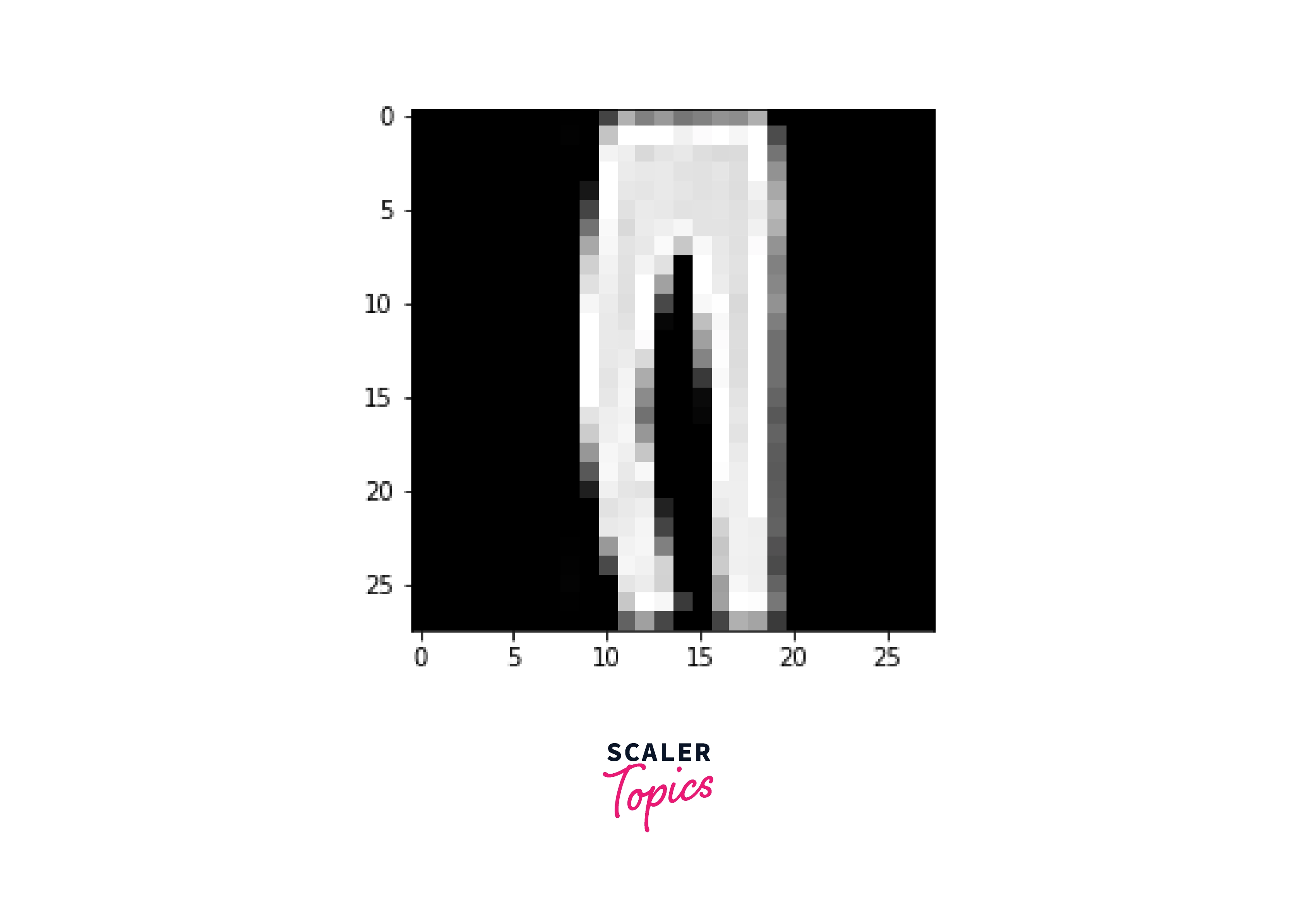

Let's visualize a particular Fashion MNIST image from the test dataset.

Develop an Improved Model

To improve the performance of our existing model, we could restructure its layers as a convolutional neural network (CNN). These networks are designed specifically to handle image data. A CNN grows in complexity with each layer, and each layer responds to larger areas of the image. Early layers pick out basic elements such as colors and edges. As the visual data moves through the CNN layers, larger features and shapes of the object are recognized, until eventually the intended object is identified.
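
As a rough sketch of what such a model could look like, the snippet below stacks two convolution and pooling stages in front of the dense layers. The layer sizes (32 and 64 filters, a 64-unit dense layer) are illustrative assumptions, not values taken from this article.

```python
import tensorflow as tf

cnn = tf.keras.Sequential([
    tf.keras.Input(shape=(28, 28, 1)),                 # grayscale: one channel
    tf.keras.layers.Conv2D(32, 3, activation="relu"),  # early layer: edges, simple patterns
    tf.keras.layers.MaxPooling2D(),
    tf.keras.layers.Conv2D(64, 3, activation="relu"),  # later layer: larger shapes
    tf.keras.layers.MaxPooling2D(),
    tf.keras.layers.Flatten(),
    tf.keras.layers.Dense(64, activation="relu"),
    tf.keras.layers.Dense(10),                         # logits for the ten classes
])
cnn.summary()
```

Convolution layers expect a channel axis, so the 28 x 28 images would need to be reshaped to 28 x 28 x 1 (for example with `images[..., None]`) before training. The model can then be compiled and trained in the same way as the dense network.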

Conclusion

- In this article, you learned how to use Keras to train a simple deep learning model on the Fashion MNIST dataset. We also saw that convolutional neural networks could be used to improve the model's performance, since they are especially good at recognizing image patterns such as items, faces, and scenes.

- Even though the Fashion MNIST dataset is more complicated than the MNIST digit recognition dataset, a model trained on it cannot be used for real-world fashion classification tasks unless you preprocess your images the same way the Fashion MNIST images were prepared (segmentation, thresholding, grayscale conversion, resizing, etc.).