ONNX Model

Overview

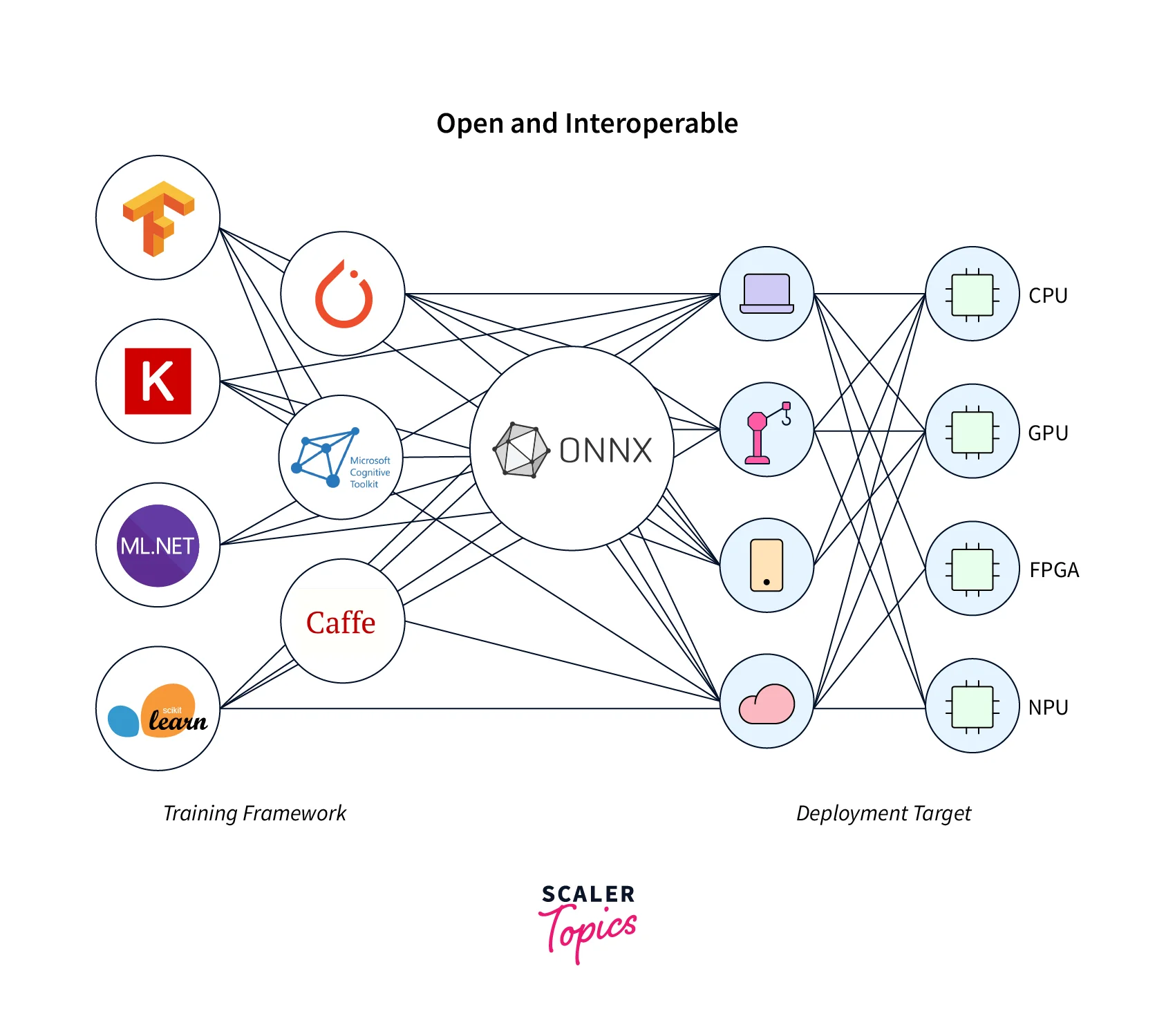

ONNX (Open Neural Network Exchange) is an open format for representing deep learning models that enables model interoperability and sharing between different frameworks, such as PyTorch, TensorFlow, and Caffe2.

ONNX models can also be produced and consumed from several programming languages, including Python, C++, C#, and Java (ONNX Runtime, for example, provides APIs for all four). This makes it easy for developers working in different languages to share models and use each other's work.

Introduction

ONNX, or Open Neural Network Exchange, is an open-source format for deep learning models. It allows for the seamless interchangeability and sharing of models between deep learning frameworks such as PyTorch, TensorFlow, and Caffe2.

ONNX allows developers to move a trained model between frameworks without retraining it or rewriting it from scratch. This makes it easier to optimize and accelerate models on a variety of hardware platforms, such as CPUs, GPUs, and specialized accelerators, and it lets researchers share their models with others in a common format.

The ONNX ecosystem also includes tools for working with models in the ONNX format, such as ONNX Runtime, a high-performance inference engine for executing models, and converter tools that turn models trained in other frameworks into ONNX files.

ONNX is an actively developed project with contributions from Microsoft, Facebook, and other companies in the AI community. It is supported by many deep learning frameworks, libraries, and hardware partners, such as NVIDIA and Intel, and by cloud providers such as AWS, Microsoft Azure, and Google Cloud.

Overall, ONNX provides interoperability between deep learning libraries. Teams can train a model in one framework and deploy it with another without being locked into a single toolchain.

What is ONNX?

ONNX (Open Neural Network Exchange) is a standard format for representing deep learning models. It enables interoperability between deep learning frameworks, such as TensorFlow, PyTorch, Caffe2, and more. With ONNX, developers can more easily transfer models between different frameworks and run them on different platforms, such as a desktop, a cloud service, or an edge device.

ONNX provides a common representation of the computation graph. This is a data flow graph that defines the operations (or "nodes") that make up a model and the connections (or "edges") between them. The graph is serialized with Protocol Buffers (protobuf), a language- and platform-neutral data format. ONNX also defines a set of standard data types, operators, and attributes that describe the computations performed by the graph and its input and output tensors.

ONNX is an open format that was originally developed by Facebook and Microsoft and is now governed as a community project under the LF AI & Data Foundation. It continues to evolve: new releases add features, and new operator set (opset) versions add support for new deep learning operations.

How to Convert?

To convert a PyTorch model to ONNX, you need the trained model and the source code that defines its architecture. You load the model in Python with PyTorch, create dummy input values for all of its inputs, and run the ONNX exporter, which runs the model on those inputs to record its computation graph as an ONNX model. Keep four things in mind when converting a model to ONNX:

1. Training the Model

To use ONNX for model conversion, you first train a model in a framework such as TensorFlow, PyTorch, or Caffe2. You then convert the trained model to ONNX format so it can be used in other frameworks or environments.

2. Input & Output Names

Before converting a model to ONNX format, it's important to ensure that the input and output tensors of the model have unique, descriptive names. ONNX uses the tensors' names to identify the model's inputs and outputs. Descriptive names help ensure the correct tensors are used when working with the model in other frameworks or environments.

3. Dynamic Axes

In ONNX, a tensor dimension can be declared dynamic (a dynamic axis), meaning its size is not fixed when the model is exported. Dynamic axes are typically used for a model's batch size or sequence length. When converting a model to ONNX format, decide which axes should be dynamic. Otherwise the exported model may only accept inputs with exactly the shapes of the example input used during export, which limits how it can be used in other frameworks or environments.

4. Conversion Evaluation

After a model has been converted to ONNX format, it's a good idea to evaluate the converted model to ensure it's equivalent to the original one. We can do this by comparing the outputs of the original model and the converted model for a set of input data. The comparison should allow a small numerical tolerance, since floating-point results can differ slightly between runtimes.
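The comparison can be sketched as a small NumPy helper that checks each pair of outputs for matching shapes and closeness within a tolerance. The function name `outputs_match` and the default tolerances `rtol=1e-3`, `atol=1e-5` are hypothetical choices; suitable values depend on the model and data types.

```python
import numpy as np

def outputs_match(reference, converted, rtol=1e-3, atol=1e-5):
    """Return True if every pair of output arrays has the same shape and agrees within tolerance."""
    if len(reference) != len(converted):
        return False
    return all(
        r.shape == c.shape and np.allclose(r, c, rtol=rtol, atol=atol)
        for r, c in zip(reference, converted)
    )

ref = [np.array([1.0, 2.0, 3.0], dtype=np.float32)]
conv = [ref[0] + 1e-7]                      # tiny floating-point drift
print(outputs_match(ref, conv))             # True
print(outputs_match(ref, [ref[0] + 0.5]))   # False
```

In practice you would run both models on several inputs and call the helper once per input.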

Tools to Convert your Model into ONNX

There are several tools for converting models to ONNX format and working with the result, including:

- ONNX libraries:

The onnx Python package provides APIs for loading, building, checking, and saving models in ONNX format. Framework-specific converters produce ONNX files from trained models: torch.onnx is built into PyTorch, while tf2onnx and skl2onnx handle TensorFlow/Keras and scikit-learn models.

- ONNX Runtime:

ONNX Runtime is an open-source inference engine for executing ONNX models, with APIs for Python, C++, C#, and Java. It can use NVIDIA TensorRT as an execution provider for inference on NVIDIA GPUs. Converting an ONNX model into a standalone TensorRT engine is handled by separate tools, such as onnx2trt from the onnx-tensorrt project.

- Netron:

Netron is an open-source viewer for neural network models that can be used to inspect and visualize ONNX models. It is a viewer only: it displays models in many formats, including TensorFlow Lite and Core ML, but does not convert between them.

- ONNX-TensorFlow:

ONNX-TensorFlow is a library that imports ONNX models into TensorFlow so they can be run or exported as TensorFlow models.

- Model Optimizer:

A command-line tool from Intel's OpenVINO toolkit that converts a trained model (including an ONNX model) into OpenVINO's Intermediate Representation (IR) format, which can be loaded and executed by the OpenVINO Inference Engine.

- ONNXmIzer:

A tool that converts various neural network representations to the ONNX format. It is developed by Microsoft, and the current version supports PyTorch, TensorFlow, Core ML, and several other frameworks.

You can choose one based on the framework you are using to train your model and the target framework you want to use for inferencing.

How to Convert PyTorch Model to ONNX?

Here we will build a simple neural network with 10 inputs and 10 outputs using PyTorch's nn module. We then convert it to ONNX format with PyTorch's built-in torch.onnx exporter and check the result with the onnx library.

Step - 1:

First, import PyTorch (including its torch.nn module) and the onnx library.

Step - 2:

Next, we define the model architecture, which in this example is a simple feed-forward network. Then we create an instance of the model and define an example (dummy) input that the exporter will pass through the model.
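The following sketch shows one possible model for this step. The class name `SimpleNet` and the hidden size of 32 are illustrative assumptions; only the 10 inputs and 10 outputs come from the description above.

```python
import torch
import torch.nn as nn

class SimpleNet(nn.Module):
    """A small feed-forward network: 10 input features -> 10 outputs."""

    def __init__(self):
        super().__init__()
        self.fc1 = nn.Linear(10, 32)   # hidden size 32 is an arbitrary choice
        self.relu = nn.ReLU()
        self.fc2 = nn.Linear(32, 10)

    def forward(self, x):
        return self.fc2(self.relu(self.fc1(x)))

model = SimpleNet()
model.eval()                       # switch layers such as dropout/batch norm to inference mode before export
dummy_input = torch.randn(1, 10)   # a batch of 1 sample with 10 features
```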

Step - 3:

We use the torch.onnx.export() function to export the model to ONNX format and save it to a file named "mymodel.onnx".

Step - 4:

Once the model is exported, you can load it with onnx.load() and use onnx.checker to validate that it is well-formed, meaning it is a valid graph that conforms to the ONNX specification.

The onnx.checker.check_model() function will raise an exception if there's something wrong with the model. Otherwise, it will return None.

Step - 5:

You can also compare the output of the original model and the ONNX-converted model to ensure that they are equivalent:

Note that you need the onnxruntime package installed to compare the outputs. Also, inputs to ONNX Runtime must be NumPy arrays, so convert PyTorch tensors with .numpy() first.


Conclusion

- ONNX is a format for model interoperability. A model trained in one framework can be converted to ONNX and then used with other frameworks, libraries, or runtime environments without retraining.

- It is important to ensure that the input and output tensors of the model have unique, descriptive names when converting a model to ONNX format, as ONNX uses these names to identify the inputs and outputs of the model.

- When converting a model to ONNX, it is important to consider how dynamic axes are handled, as we can use them to represent things like batch size or sequence length of a model.

- Various open-source tools are available for converting models to ONNX format and working with them, such as the ONNX libraries and converters, ONNX Runtime, Netron, ONNX-TensorFlow, and the Model Optimizer. Each tool has its own strengths and supports different source and target frameworks.