Mobile Deployment Using TfLite

Overview

Machine learning (ML) has rapidly evolved in recent years, and its applications have spread across various domains. One of the most exciting areas of ML application is on-device machine learning, where ML models are deployed within mobile or embedded applications. This enables a wide range of use cases, such as image classification, object detection, and natural language processing, to be performed locally on a user's device without requiring a round-trip to a server.

Prerequisites

To be able to convert your model to TensorFlow Lite, you will need the following:

- A TensorFlow model (a Keras model in .h5 format, or a TensorFlow SavedModel, which is a directory containing a saved_model.pb file).

- TensorFlow and TensorFlow Lite installed on your machine.

- Working knowledge of TensorFlow and the deep learning workflow.

Introduction

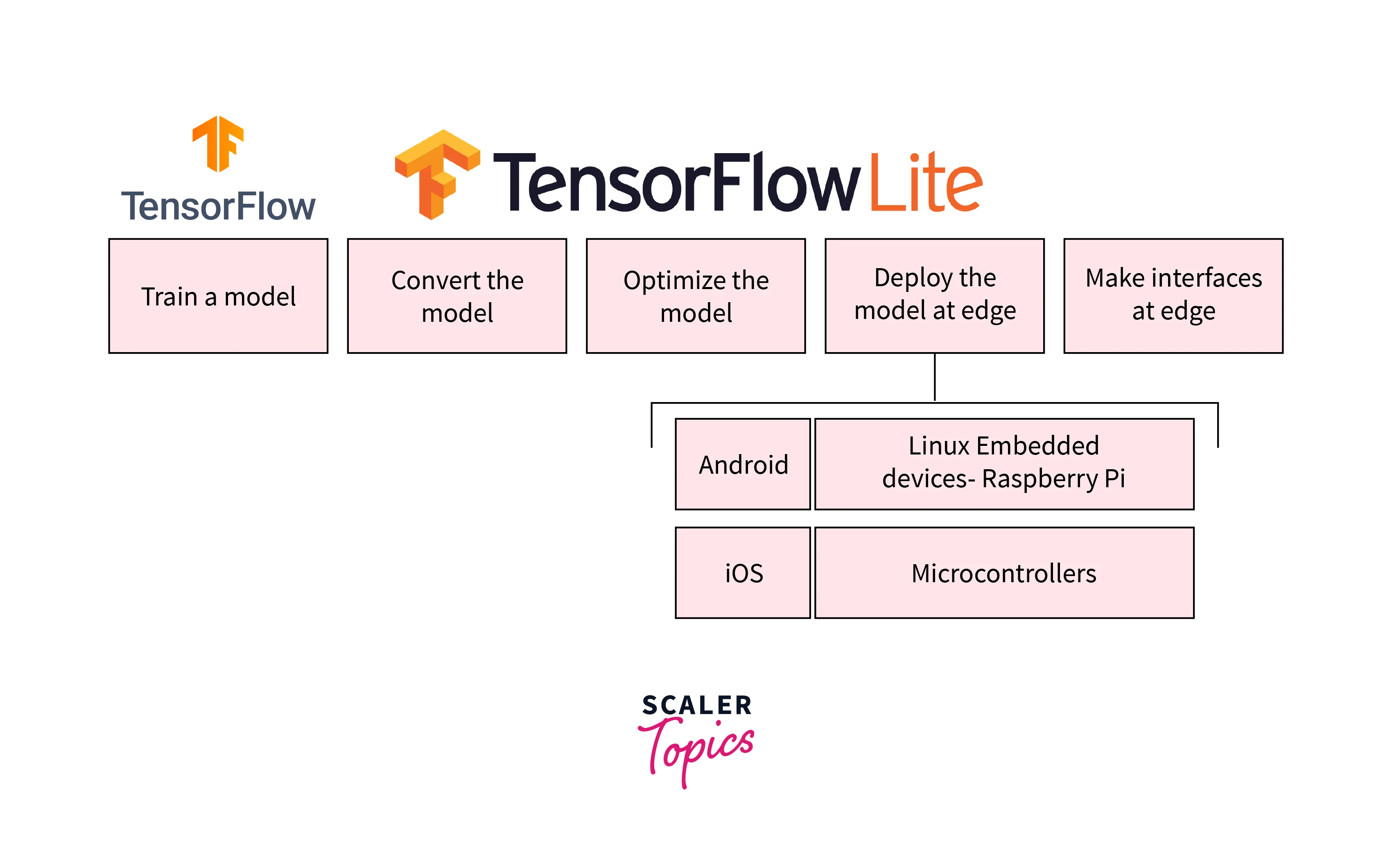

TensorFlow Lite is a lightweight version of TensorFlow that is specifically designed for mobile and embedded devices. It allows developers to run their models on a variety of devices, including smartphones and tablets running Android and iOS, as well as embedded Linux systems and microcontrollers.

TensorFlow Lite addresses five key constraints for on-device machine learning: latency, privacy, connectivity, size, and power consumption.

For example, it reduces model and binary size to save storage space and memory, and it lowers power consumption through efficient inference and by avoiding network connections.

What Does It Mean to Have an ML Model Deployed in an App?

Deploying an ML model in an Android app means incorporating a pre-trained machine learning model into the mobile application. This allows the app to perform various ML tasks on the user's device, such as image classification, object detection, and natural language processing, without requiring a round-trip to a server.

For example, face recognition on a mobile phone happens locally, without the need to send the image to a server. This means that the application can still function even if there is no internet connection.

When an ML model is deployed in an Android app, it is included as part of its codebase and runs locally on the device. The app can then use the model to make predictions or classifications based on input data, such as an image or text, without sending the data to a remote server. This can provide many benefits, such as reducing latency, improving privacy, and eliminating the need for internet connectivity.

Beyond on-device inference itself, deploying an ML model in an Android app enables scenarios that were not possible before. The app does not rely on internet connectivity, user data doesn't leave the device, users don't need to sign in or grant any additional access, and the app keeps working in areas with poor connectivity. Furthermore, running the model locally allows real-time processing, so the Android app can respond immediately to user input or sensor data.

What is TensorFlow Lite?

TensorFlow Lite is a set of tools that enables on-device machine learning by helping developers run their models on mobile, embedded, and edge devices. TFLite provides a simple API, which makes it easy to integrate machine learning models into mobile apps. On Android, it lets developers run tasks such as image classification, object detection, and natural language processing locally on the user's device, taking advantage of the computational power of modern devices while reducing latency, improving privacy, and removing the need for internet connectivity. It works with Android Studio and supports common Android CPU architectures such as ARM and x86; a separate variant targets microcontrollers. With TensorFlow Lite, developers can deploy powerful ML models on mobile devices, enabling new use cases and improving the user experience.

Advantages of Using TfLite

- Reduced size and footprint: TensorFlow Lite is designed to run efficiently on mobile and embedded devices, which typically have limited processing power, memory, and storage.

- Improved performance: TensorFlow Lite provides several optimizations for running machine learning models on mobile devices, such as quantization and pruning, that can significantly improve performance.

- Cross-platform compatibility: TensorFlow Lite is designed to run on a wide range of platforms, including Android, iOS, and Raspberry Pi, making it a versatile solution for deploying machine learning models on mobile devices.

- Ease of integration: TensorFlow Lite provides a simple API for integrating machine learning models into mobile applications, making it easy for developers to add machine learning capabilities to their apps.
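To make the quantization point above more concrete, here is a minimal, framework-free sketch of 8-bit affine quantization, the general idea behind storing weights as small integers. The function names (`quantize`, `dequantize`) and the sample weights are illustrative assumptions, not TensorFlow Lite APIs, and the real converter uses more elaborate schemes than this single scale and zero point.

```python
from typing import List, Tuple


def quantize(values: List[float]) -> Tuple[List[int], float, int]:
    """Map floats to int8 codes using one scale and one zero point."""
    # The represented range must include 0.0 so that zero has an exact code.
    lo = min(min(values), 0.0)
    hi = max(max(values), 0.0)
    scale = (hi - lo) / 255.0
    if scale == 0.0:
        scale = 1.0  # constant all-zero input: any scale works
    zero_point = round(-128 - lo / scale)  # int8 code that represents 0.0
    codes = [max(-128, min(127, round(v / scale) + zero_point)) for v in values]
    return codes, scale, zero_point


def dequantize(codes: List[int], scale: float, zero_point: int) -> List[float]:
    """Recover approximate floats from int8 codes."""
    return [(c - zero_point) * scale for c in codes]


weights = [-1.0, -0.25, 0.0, 0.5, 1.0]  # hypothetical float32 weights
codes, scale, zero_point = quantize(weights)
restored = dequantize(codes, scale, zero_point)

# Each weight now fits in 1 byte instead of 4, at the cost of a rounding error.
max_error = max(abs(w - r) for w, r in zip(weights, restored))
print(codes, scale, zero_point, max_error)
```

Storing one byte per weight instead of four is where the roughly fourfold size reduction of 8-bit quantization comes from; the price is the small reconstruction error printed at the end.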

How to Convert Your Model to a TensorFlow Lite Model?

Converting a TensorFlow model to a TensorFlow Lite model is a straightforward process. Here are the general steps:

-

First, you must obtain a pre-trained TensorFlow model (in .h5 or .pb format).

-

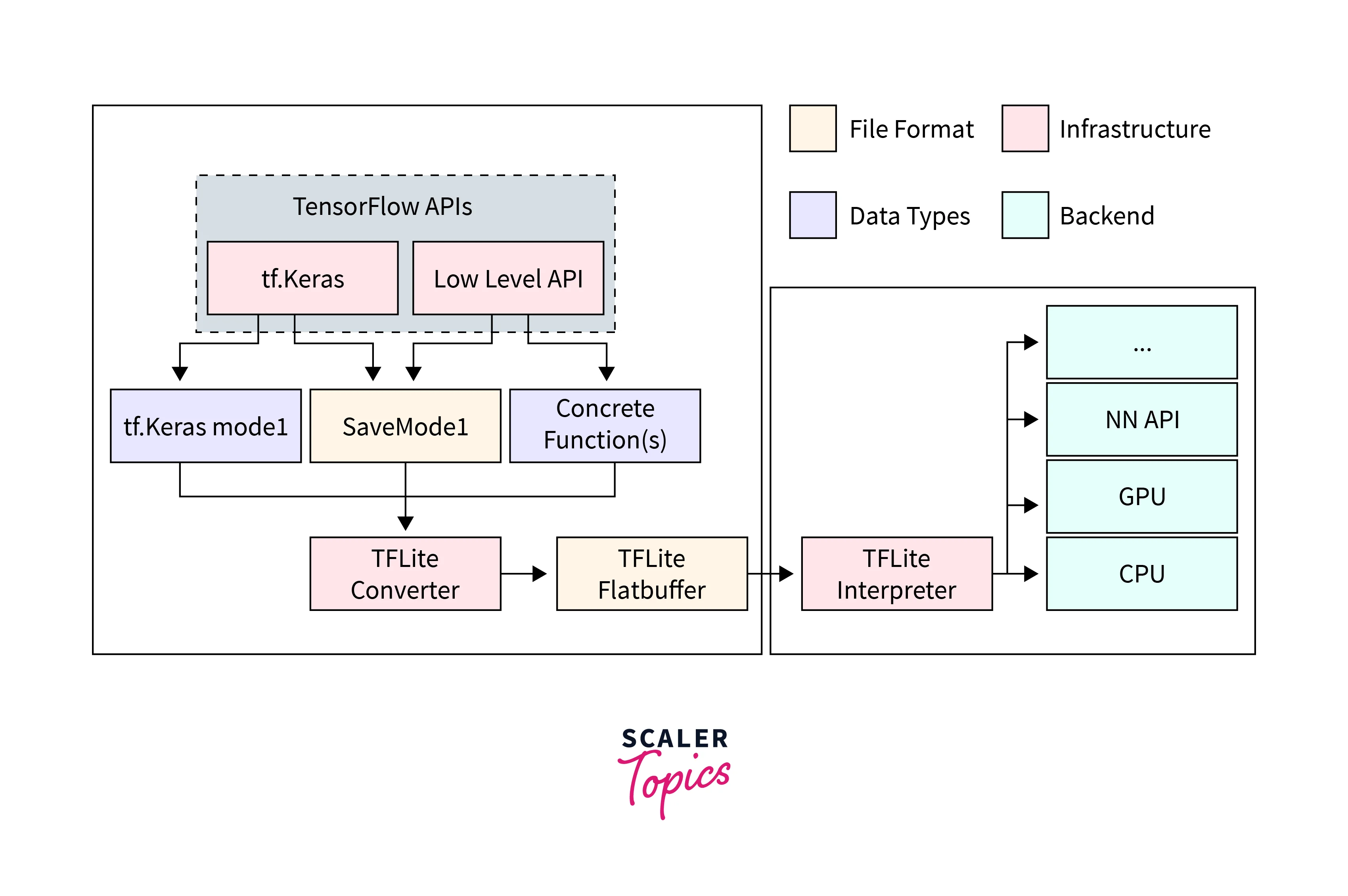

Next, you must convert the TensorFlow model into a format that TensorFlow Lite can use. This can be done using the TensorFlow Lite converter.

-

The TensorFlow Lite converter can also apply optimization, such as quantization, to the model to reduce its size and improve inference performance.

-

Finally, the TensorFlow Lite model can be used for on-device inferences on your Android device.

To give you an idea of how the conversion process might look in code, here is an example of how you might convert a TensorFlow model to a TensorFlow Lite model in Python:

Requirements

Before starting, the following libraries must be installed using pip install <library>.

- TensorFlow/Keras: This is the main library for working with machine learning models in TensorFlow. It provides functionality for training, evaluating, and deploying models.

- TensorFlow Lite: This is the part of TensorFlow for working with TensorFlow Lite models. It provides functionality for converting TensorFlow models to TensorFlow Lite format and for running inference with TensorFlow Lite models. It is available as the tf.lite module of the tensorflow package.

Model Conversion Using TFLite
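The conversion steps described earlier can be sketched in Python roughly as follows. This is a minimal sketch assuming TensorFlow 2.x; `convert_to_tflite` and `is_saved_model` are hypothetical helper names introduced for illustration, while `tf.lite.TFLiteConverter` and `tf.lite.Optimize.DEFAULT` are the converter entry points that ship with TensorFlow.

```python
from pathlib import Path


def is_saved_model(source: str) -> bool:
    """A SavedModel is a directory that contains a saved_model.pb file."""
    return (Path(source) / "saved_model.pb").is_file()


def convert_to_tflite(source: str, output_path: str, quantize: bool = True) -> int:
    """Convert a Keras .h5 file or a SavedModel directory to a .tflite file.

    Returns the size of the written file in bytes.
    """
    # Imported here so this file can be loaded where TensorFlow is not installed.
    import tensorflow as tf

    if is_saved_model(source):
        converter = tf.lite.TFLiteConverter.from_saved_model(source)
    else:
        # Otherwise assume a Keras model file such as a .h5 file.
        model = tf.keras.models.load_model(source)
        converter = tf.lite.TFLiteConverter.from_keras_model(model)

    if quantize:
        # The default optimization set enables post-training quantization.
        converter.optimizations = [tf.lite.Optimize.DEFAULT]

    tflite_model = converter.convert()  # the serialized model, as bytes
    return Path(output_path).write_bytes(tflite_model)
```

A call such as `convert_to_tflite("model.h5", "model.tflite")`, where both file names are placeholders, would write the converted model next to the script. Comparing the returned size with and without `quantize=True` is a quick way to see what the optimization step buys for a particular model.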

How to Use a TfLite Model on Android?

Android is one of the most popular mobile operating systems, with a large user base, making it an ideal platform for deploying machine learning models to a wide audience. Android devices come with a wide range of hardware, including GPUs and DSPs, which can be used to accelerate TFLite models for better performance. Also, Android Studio provides a comprehensive set of tools for developers to create and deploy mobile apps, including support for TFLite.

Here is an example of how you might load a TensorFlow Lite model and perform inference on Android in Java:

- In this example, the loadModelFile() method is used to load the TensorFlow Lite model file, which should be present in the assets folder of the Android project. The Interpreter class is then used to create an interpreter for the model.

- The input and output tensors are defined as 4-dimensional arrays for image classification models.

- The run method of the interpreter is used to perform inference with the input tensor and store the results in the output tensor.

- Finally, the argmax function is used to find the index of the highest value in the output tensor, which corresponds to the predicted class of the input image.
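The same steps (load the model, create an interpreter, run it, take the argmax) can also be tried on a desktop machine before the model is bundled into the Android project. The sketch below uses TensorFlow's Python `tf.lite.Interpreter` rather than the Java API the points above describe. The helper names `classify` and `argmax`, and the assumption of a single image input and a single score-vector output, are illustrative only.

```python
from typing import Sequence


def argmax(scores: Sequence[float]) -> int:
    """Return the index of the highest score, i.e. the predicted class."""
    return max(range(len(scores)), key=lambda i: scores[i])


def classify(model_path: str, image) -> int:
    """Run one image through a .tflite model and return the top class index.

    `image` is assumed to be a NumPy array that already has the shape and
    dtype the model expects, e.g. [1, height, width, channels].
    """
    # Imported here so argmax() stays usable without TensorFlow installed.
    import tensorflow as tf

    interpreter = tf.lite.Interpreter(model_path=model_path)
    interpreter.allocate_tensors()  # reserve memory for input/output tensors

    input_info = interpreter.get_input_details()[0]
    output_info = interpreter.get_output_details()[0]

    interpreter.set_tensor(input_info["index"], image)
    interpreter.invoke()  # plays the role of the interpreter's run method

    scores = interpreter.get_tensor(output_info["index"])[0]  # drop batch axis
    return argmax(scores.tolist())
```

If the class index returned on the desktop matches what the app later reports for the same image, any remaining discrepancy is more likely to come from preprocessing on the device than from the converted model.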

Conclusion

In this article, we have seen what TfLite is and how to convert TensorFlow models using TfLite. We learned the following:

- TensorFlow Lite is a tool for deploying machine learning models on mobile and embedded devices.

- It enables low latency, improved privacy, and offline inferencing capabilities.

- TensorFlow models can be converted to TensorFlow Lite format using the TensorFlow Lite Converter.

- TensorFlow Lite models are highly optimized for on-device inferencing and can be used with the TensorFlow Lite Interpreter.

- It supports further optimizations, such as quantization and pruning, as well as hardware acceleration.

- TensorFlow Lite opens up many possibilities and use cases for AI on the edge.