Kubernetes Architecture Explained with Diagrams

Overview

Kubernetes is a container orchestration platform built on a control plane/worker architecture (historically described as master-worker or master-slave). The control plane, traditionally run on one or more master nodes, hosts the API server, etcd, scheduler, and controller manager. Multiple worker nodes form the cluster's data plane and run the containerized applications that the control plane manages. Continuous communication between the control plane and the worker nodes lets Kubernetes schedule, deploy, and scale applications in a resilient, automated way.

What is Kubernetes Architecture?

Kubernetes is a powerful open-source platform for managing containerized applications. It automates deployment, scaling, and orchestration across a cluster of machines and provides a robust, flexible framework for running microservices and other complex applications.

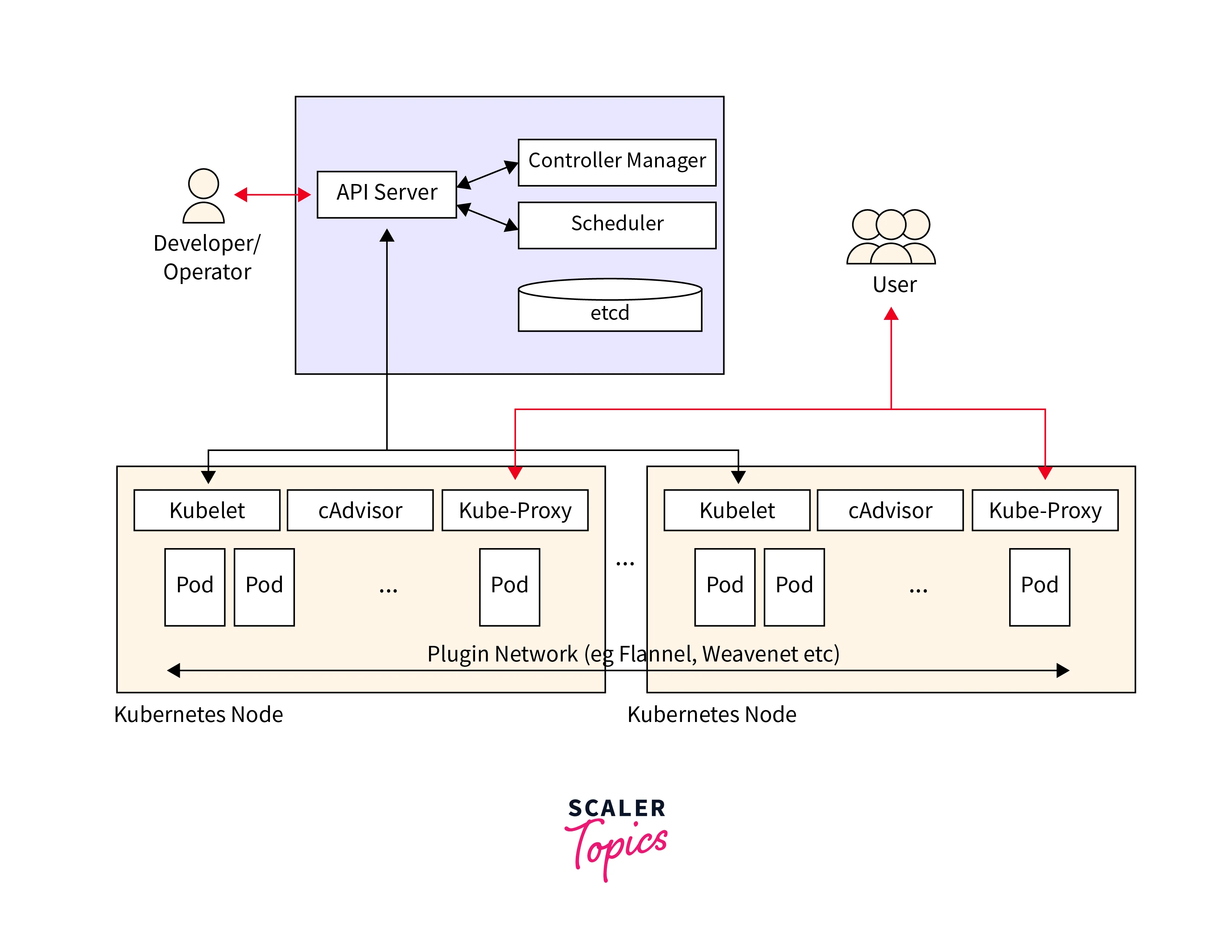

Kubernetes Architecture Diagram

Kubernetes is built upon a master-worker architecture that allows for the management of containerized applications across a cluster of machines. A few important points associated with Kubernetes Architecture are:

- Kubernetes follows a master-worker architecture, where the master node controls the cluster, and worker nodes run the application containers.

- The master node comprises several components, including the API server, etcd, scheduler, and controller manager, which collectively manage the cluster's control plane.

- The worker nodes host the application containers and include the kubelet, container runtime, and kube-proxy to manage containers and networking.

Workflow of Requests in Kubernetes

In the Kubernetes architecture, a request flows from the control plane to the worker nodes roughly as follows:

- API Request Initiation: A user (for example, with kubectl) or an external system sends a request to the API server.

- API Server Validation: The API server authenticates the request, checks that the caller is authorized, and runs admission control.

- Desired State Storage: The API server persists the validated object, which describes the desired state, in etcd.

- Processing by Controllers: Controllers in the controller manager watch the API server, notice the new or changed object, and create any dependent objects, such as the ReplicaSet and Pods that a Deployment needs.

- Scheduling Decision: The scheduler picks a suitable worker node for each Pod that has not yet been assigned one.

- Pod Binding: The scheduler records its decision through the API server, binding the Pod to the chosen node.

- Pod Assignment to Nodes: The Kubelet on the chosen worker node, which watches the API server for Pods bound to its node, picks up the Pod definition.

- Container Runtime Invocation: The Kubelet asks the container runtime to pull the images and start the containers.

- Networking and Load Balancing: A CNI plugin gives the Pod its network interface and IP address, and kube-proxy updates its Service rules so that traffic sent to a matching Service can reach the Pod.

- Reporting to Control Plane: The Kubelet reports the status of the Pod and its containers back to the API server.

- Eventual Consistency: The control plane continuously compares the actual state of the cluster with the desired state and takes corrective action whenever they drift apart.
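The workflow above can be sketched as a toy, single-process simulation. This is only an illustration under heavy assumptions: a plain dict stands in for etcd, and the functions `api_server_create`, `controller_manager`, `scheduler`, and `kubelet` are hypothetical stand-ins for the real components (no Kubernetes client library is used). Authentication, the ReplicaSet layer, and networking are deliberately left out.

```python
# Toy, single-process walk-through of the request workflow.
# All names are hypothetical: a dict stands in for etcd, and plain
# functions stand in for the API server, controllers, scheduler, and kubelet.

store = {}    # "etcd": object name -> object (a dict)
events = []   # ordered log of what each component did


def api_server_create(obj):
    # Accept a request and persist the object as desired state.
    # (Authentication, authorization, and admission are omitted here.)
    store[obj["name"]] = obj
    events.append(f"api-server: stored {obj['kind']} {obj['name']}")


def controller_manager():
    # Turn each Deployment's replica count into Pod objects
    # (a real Deployment goes through a ReplicaSet first).
    for obj in list(store.values()):
        if obj["kind"] == "Deployment":
            for i in range(obj["replicas"]):
                name = f"{obj['name']}-{i}"
                if name not in store:
                    api_server_create({"kind": "Pod", "name": name,
                                       "node": None, "phase": "Pending"})


def scheduler(nodes):
    # Bind each unscheduled Pod to the node currently running the fewest Pods.
    # (A real scheduler writes a Binding through the API server.)
    pending = [o for o in store.values() if o["kind"] == "Pod" and o["node"] is None]
    for pod in pending:
        load = {n: sum(1 for o in store.values() if o.get("node") == n) for n in nodes}
        pod["node"] = min(nodes, key=load.get)
        events.append(f"scheduler: bound {pod['name']} to {pod['node']}")


def kubelet(node):
    # Start Pods bound to this node and report their status.
    # (A real kubelet asks the container runtime, via CRI, to start containers.)
    for obj in store.values():
        if obj.get("node") == node and obj["phase"] == "Pending":
            obj["phase"] = "Running"
            events.append(f"kubelet@{node}: {obj['name']} Running")


nodes = ["node-a", "node-b"]
api_server_create({"kind": "Deployment", "name": "web", "replicas": 3})
controller_manager()
scheduler(nodes)
for n in nodes:
    kubelet(n)
print("\n".join(events))
```

With the trivial "fewest Pods" placement rule used here, web-0 and web-2 land on node-a and web-1 on node-b, and the event log shows the same order of responsibilities as the list above: store, create, bind, run.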

Components of Kubernetes Architecture

Kubernetes architecture consists of several components that work together to manage and orchestrate containerized applications across a cluster of machines. Below are the key components of Kubernetes architecture:

Master Node Components:

- API Server: The API server is the front end of the Kubernetes control plane. It exposes the Kubernetes API, which users and other components use to interact with the cluster. Each API request is authenticated, authorized, and passed through admission control before the API server processes it and updates the cluster's desired state.

- etcd: etcd is a distributed key-value store that serves as the cluster's primary data store. It holds the configuration data that represents the desired state of the cluster, such as pods, services, deployments, and namespaces, along with cluster-wide status information.

- Scheduler: The scheduler assigns pods (the smallest deployable units in Kubernetes) to available worker nodes. It considers resource requirements, node constraints, and affinity/anti-affinity rules to distribute workloads efficiently across the cluster.

- Controller Manager: The controller manager runs several controllers that monitor the cluster's state and work to reach and maintain the desired state. Examples include the ReplicaSet controller, which keeps the desired number of pod replicas running, and the Node controller, which responds to node events such as failures or additions.

Worker Node Components:

- Kubelet: The Kubelet is an agent that runs on each worker node and manages the containers on that node. It receives pod definitions from the control plane, makes sure the containers run as those definitions specify, and reports container status back to the control plane.

- Container Runtime: The container runtime is the software that runs containers on the worker nodes. Kubernetes works with any runtime that implements the Container Runtime Interface (CRI), such as containerd and CRI-O.

- Kube-proxy: Kube-proxy maintains network rules on each node that implement Services, forwarding and load-balancing traffic sent to a Service across the pods behind it.

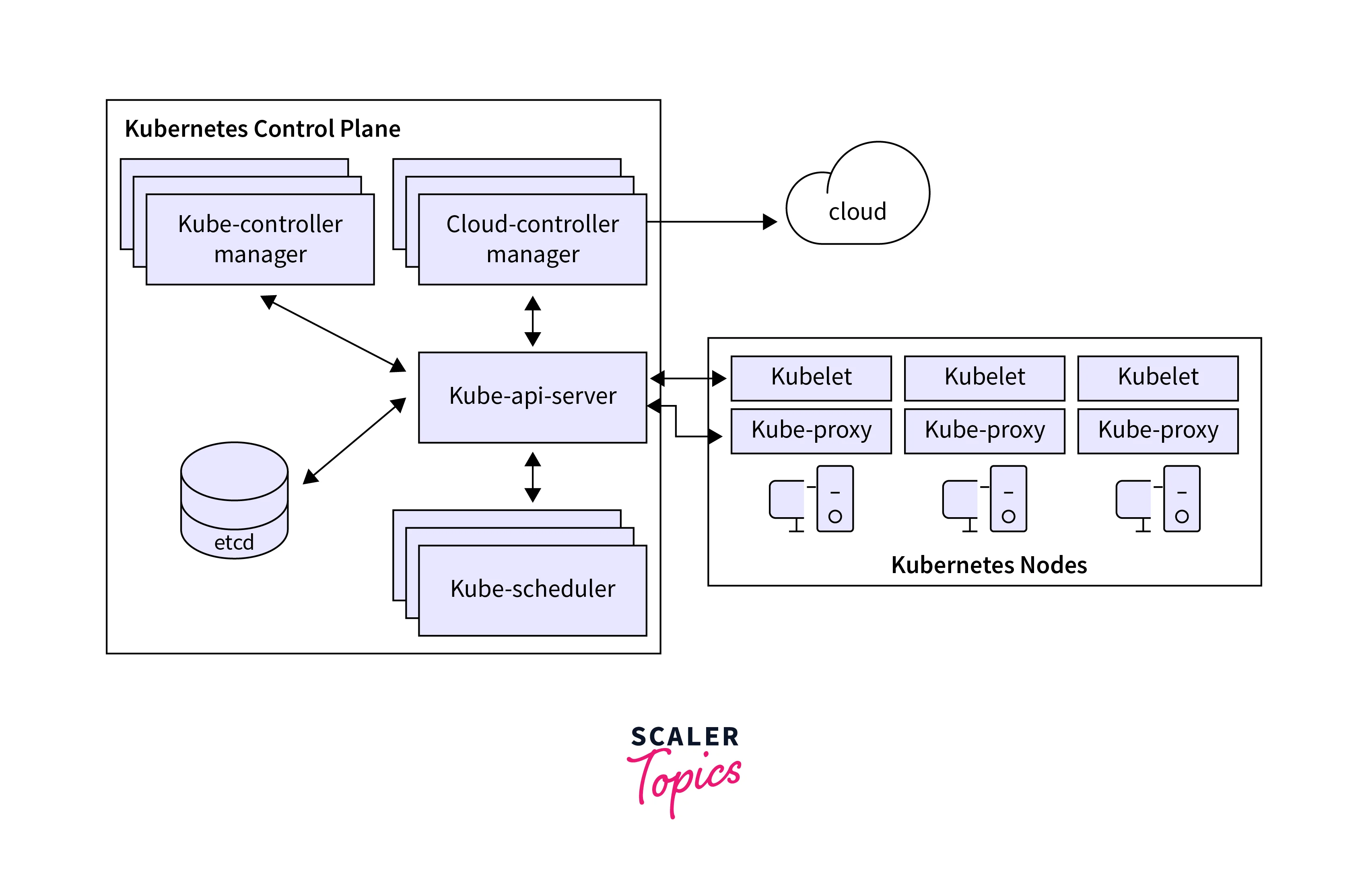

Kubernetes Control Plane

In Kubernetes, the Control Plane is the central set of components responsible for managing the overall state of the cluster and making global decisions. It acts as the brain of the Kubernetes cluster, ensuring that the desired state defined by users or applications is continuously maintained and reconciled with the actual state of the system.

The Control Plane components run on the Master Node(s) and collectively provide the API server, scheduling, and controller functionalities. Here's a brief overview of the key Control Plane components:

API Server

The API Server is the central component of the Control Plane. It exposes the Kubernetes API, the primary interface through which users, administrators, and other components interact with the cluster. It processes RESTful API requests and enforces authentication, authorization, and admission control policies. Every cluster operation goes through the API server: the scheduler, controllers, kubelets, and command-line tools such as kubectl all read and write cluster state through it, and it is the only component that talks to etcd directly.
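The order of checks described above (authentication, then authorization, then admission control, then persistence) can be sketched as a small request handler. Everything here is a hypothetical illustration: the token table, the permission triples, the single admission rule, and the `handle` function are invented, and the 400 status used for admission rejection is illustrative rather than what a real cluster necessarily returns. The storage key layout is only loosely modeled on the `/registry/...` prefix Kubernetes uses in etcd.

```python
from dataclasses import dataclass

# Hypothetical sketch of the API server's request pipeline:
# authenticate -> authorize -> admission control -> persist.
# The token table, permission table, and admission rule are invented.

TOKENS = {"token-alice": "alice", "token-bob": "bob"}   # bearer token -> user
PERMISSIONS = {("alice", "create", "pods")}            # (user, verb, resource)
storage = {}                                            # key -> object; stands in for etcd


@dataclass
class Request:
    token: str
    verb: str
    resource: str
    body: dict


class APIError(Exception):
    def __init__(self, code: int, message: str):
        super().__init__(f"{code} {message}")
        self.code = code


def handle(req: Request) -> str:
    # 1. Authentication: who is making the call?
    user = TOKENS.get(req.token)
    if user is None:
        raise APIError(401, "Unauthorized")
    # 2. Authorization: may this user perform this verb on this resource?
    if (user, req.verb, req.resource) not in PERMISSIONS:
        raise APIError(403, f"{user} cannot {req.verb} {req.resource}")
    # 3. Admission control: mutate (default the namespace), then validate.
    req.body.setdefault("namespace", "default")
    if req.resource == "pods" and not req.body.get("containers"):
        raise APIError(400, "rejected by admission: no containers")  # illustrative code
    # 4. Persist the object under a /registry/<resource>/<namespace>/<name> key.
    key = f"/registry/{req.resource}/{req.body['namespace']}/{req.body['name']}"
    storage[key] = req.body
    return key


print(handle(Request("token-alice", "create", "pods", {"name": "web", "containers": ["nginx"]})))
# /registry/pods/default/web
for bad in (Request("no-such-token", "create", "pods", {"name": "x"}),
            Request("token-bob", "create", "pods", {"name": "x", "containers": ["nginx"]})):
    try:
        handle(bad)
    except APIError as err:
        print(err)   # "401 Unauthorized", then "403 bob cannot create pods"
```

The point of the ordering is that cheap identity and permission checks reject a request before any admission logic runs or any state is written.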

etcd

etcd is a distributed, consistent, and highly available key-value store that acts as the persistent data store for Kubernetes. It stores the cluster's configuration data and state, including objects such as nodes, pods, services, ConfigMaps, and Secrets. Only the API server reads from and writes to etcd directly; the other Control Plane components access cluster state through the API server, which keeps state management consistent across the cluster.
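Two properties of etcd matter most to Kubernetes: clients can watch a key prefix for changes, and every write gets a revision number that supports compare-and-swap updates. The class below is a hypothetical in-memory stand-in, not etcd's real API: `ToyKV`, `watch`, `put`, and `compare_and_put` are invented names used only to show the idea. Kubernetes' resourceVersion-based update conflicts rest on the same optimistic-concurrency principle.

```python
# Hypothetical, in-memory stand-in for etcd (not its real API): every write
# bumps a global revision, watchers on a key prefix are notified of changes,
# and compare_and_put gives optimistic concurrency control.

class ToyKV:
    def __init__(self):
        self.revision = 0
        self.data = {}        # key -> (value, revision of last modification)
        self.watchers = []    # (key prefix, callback)

    def watch(self, prefix, callback):
        self.watchers.append((prefix, callback))

    def put(self, key, value):
        self.revision += 1
        self.data[key] = (value, self.revision)
        for prefix, callback in self.watchers:
            if key.startswith(prefix):
                callback(self.revision, "PUT", key, value)
        return self.revision

    def get(self, key):
        # Returns (value, mod_revision), or None if the key is absent.
        return self.data.get(key)

    def compare_and_put(self, key, value, expected_rev):
        # Write only if nobody modified the key since the caller read it.
        current = self.data.get(key)
        if current is None or current[1] != expected_rev:
            return False
        self.put(key, value)
        return True


kv = ToyKV()
seen = []
kv.watch("/registry/pods/", lambda rev, event, key, value: seen.append((rev, event, key)))

kv.put("/registry/pods/default/web", {"phase": "Pending"})    # revision 1
kv.put("/registry/services/default/web", {"port": 80})        # revision 2, not a pod
kv.put("/registry/pods/default/web", {"phase": "Running"})    # revision 3

stale = kv.compare_and_put("/registry/pods/default/web", {"phase": "Failed"}, expected_rev=1)
value, rev = kv.get("/registry/pods/default/web")
fresh = kv.compare_and_put("/registry/pods/default/web", {"phase": "Succeeded"}, expected_rev=rev)
print(stale, fresh, seen)
```

The stale update is refused because the key changed after revision 1, while the update based on the freshly read revision succeeds; the pod watcher never sees the Service write.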

Scheduler

The Scheduler decides where pods run. Whenever a pod has no node assigned, for example a newly created pod or an extra replica added when a workload scales up, the Scheduler selects a worker node for it. It first filters out nodes that cannot run the pod and then ranks the remaining ones, taking into account factors such as resource requests, hardware constraints, and affinity/anti-affinity rules, so that resources are distributed and utilized well.
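A filter-then-score decision can be sketched in a few lines. This is a simplified illustration, not the real kube-scheduler: the `Node`/`PodSpec` fields, the `feasible`/`score`/`schedule` helpers, and the scoring rule (prefer the node left with the most free CPU and memory, loosely resembling a "least allocated" strategy) are all assumptions made for the example. Only resource requests and a node selector are checked; affinity rules and taints are omitted.

```python
from __future__ import annotations

from dataclasses import dataclass, field

# Hypothetical two-phase scheduler sketch: filter out nodes that cannot run the
# Pod, then score the rest. The scoring rule is a simplified, illustrative choice.

@dataclass
class Node:
    name: str
    cpu_cap: int    # allocatable CPU, millicores
    cpu_used: int   # CPU already requested by Pods on this node
    mem_cap: int    # allocatable memory, MiB
    mem_used: int   # memory already requested, MiB
    labels: dict = field(default_factory=dict)


@dataclass
class PodSpec:
    name: str
    cpu: int        # requested millicores
    mem: int        # requested MiB
    node_selector: dict = field(default_factory=dict)


def feasible(node: Node, pod: PodSpec) -> bool:
    # Filter: enough free resources, and every node_selector label matches.
    fits = (node.cpu_cap - node.cpu_used >= pod.cpu
            and node.mem_cap - node.mem_used >= pod.mem)
    return fits and all(node.labels.get(k) == v for k, v in pod.node_selector.items())


def score(node: Node, pod: PodSpec) -> float:
    # Score: average fraction of CPU and memory still free after placement.
    cpu_free = (node.cpu_cap - node.cpu_used - pod.cpu) / node.cpu_cap
    mem_free = (node.mem_cap - node.mem_used - pod.mem) / node.mem_cap
    return (cpu_free + mem_free) / 2


def schedule(pod: PodSpec, nodes: list[Node]) -> str | None:
    candidates = [n for n in nodes if feasible(n, pod)]
    if not candidates:
        return None  # no node fits: the Pod stays Pending
    best = max(candidates, key=lambda n: score(n, pod))
    best.cpu_used += pod.cpu   # account for the new Pod's requests
    best.mem_used += pod.mem
    return best.name


nodes = [
    Node("node-a", 4000, 3000, 8192, 6144, {"disk": "ssd"}),
    Node("node-b", 4000, 1000, 8192, 2048, {"disk": "hdd"}),
    Node("node-c", 2000, 1900, 4096, 1024),
]
placements = [schedule(p, nodes) for p in (
    PodSpec("api", cpu=500, mem=512),
    PodSpec("db", cpu=500, mem=1024, node_selector={"disk": "ssd"}),
    PodSpec("batch", cpu=8000, mem=512),
)]
print(placements)  # ['node-b', 'node-a', None]
```

Here "api" avoids node-c (too little free CPU) and prefers the emptier node-b, "db" is restricted to the SSD node by its selector, and "batch" fits nowhere, so it would stay Pending.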

Controller Manager

The Controller Manager includes several built-in controllers responsible for maintaining the desired state of the cluster. Each controller continuously watches the state of specific resources and takes corrective actions to ensure the actual state matches the desired state. Some examples of controllers are:

- ReplicaSet Controller: Ensures that the desired number of pod replicas is running and maintained. It supersedes the older ReplicationController.

- Node Controller: Handles node-related events and monitors node health.

- EndpointSlice Controller: Watches Services and pods and keeps each Service's list of backend endpoints up to date.
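Each of these controllers follows the same pattern: observe the actual state, compare it with the desired state, and act on the difference. The sketch below applies that pattern to replica counts in the style of a ReplicaSet controller. It is a hypothetical simplification: `reconcile`, `sync`, and the `web-N` naming scheme are invented, and real controllers rank Pods carefully before choosing which ones to delete.

```python
import itertools

# Hypothetical sketch of a ReplicaSet-style control loop: compare the desired
# replica count with the Pods actually observed and act on the difference.

def reconcile(desired, observed, make_name):
    """Return (pods_to_create, pods_to_delete) for one reconciliation pass."""
    diff = desired - len(observed)
    if diff > 0:
        return [make_name() for _ in range(diff)], []
    if diff < 0:
        # Real controllers rank Pods before choosing victims; here we just
        # drop the most recently listed ones.
        return [], observed[diff:]
    return [], []


def sync(desired, pods, make_name):
    """Apply one pass and return the new list of Pods."""
    create, delete = reconcile(desired, pods, make_name)
    return [p for p in pods if p not in delete] + create


names = (f"web-{i}" for i in itertools.count(1))


def next_name():
    return next(names)


pods = sync(3, [], next_name)          # ['web-1', 'web-2', 'web-3']
pods.remove("web-2")                   # a node fails and takes web-2 with it
pods = sync(3, pods, next_name)        # ['web-1', 'web-3', 'web-4']
pods = sync(1, pods, next_name)        # scaled down: ['web-1']
print(pods)
```

Because the loop only looks at the gap between desired and observed state, the same code handles initial creation, recovery from a lost Pod, and scaling down.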

Kubernetes Worker Nodes

In Kubernetes, the Worker Node is where the actual containerized applications, known as pods, are deployed and executed. Worker nodes are responsible for running the workload assigned to them by the master node and providing the necessary resources for running containers. They form the foundation of a Kubernetes cluster and execute the application workloads while ensuring the desired state of the cluster is maintained.

Kubelet

The Kubelet is an agent that runs on each worker node and communicates with the Kubernetes API server. It manages the containers on its node and ensures that the pods assigned to it are running and healthy as defined in their desired configuration. Working through the container runtime, the Kubelet pulls container images and starts and stops containers, and it reports the current status of its pods back to the API server.
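One pass of the Kubelet's work can be sketched as a sync function that makes a container runtime match the pods assigned to the node and then reports status. This is a hypothetical illustration: `FakeRuntime` and `sync_pods` are invented, each Pod is simplified to a single container keyed by the Pod's name, and a real Kubelet talks to runtimes such as containerd over CRI rather than calling methods directly. The "ErrImagePull" status string is borrowed from the waiting reason Kubernetes shows when an image cannot be pulled.

```python
# Hypothetical sketch of one kubelet sync pass against a fake container
# runtime. A Pod is simplified to a single container identified by its name.

class FakeRuntime:
    def __init__(self, registry):
        self.registry = set(registry)  # images the (pretend) registry can serve
        self.pulled = set()            # images already cached on this node
        self.running = {}              # pod name -> image

    def pull(self, image):
        if image not in self.registry:
            return False
        self.pulled.add(image)
        return True

    def start(self, pod, image):
        self.running[pod] = image

    def stop(self, pod):
        self.running.pop(pod, None)


def sync_pods(assigned, runtime):
    """Make the runtime match `assigned` ({pod: image}) and return a status
    report of the kind a kubelet sends back to the API server."""
    status = {}
    for pod, image in assigned.items():
        if runtime.running.get(pod) == image:
            status[pod] = "Running"
            continue
        if image not in runtime.pulled and not runtime.pull(image):
            status[pod] = "ErrImagePull"
            continue
        runtime.start(pod, image)      # start, or restart with the desired image
        status[pod] = "Running"
    for pod in list(runtime.running):
        if pod not in assigned:        # no longer bound to this node
            runtime.stop(pod)
    return status


rt = FakeRuntime(registry={"nginx:1.25", "redis:7"})
rt.start("old-job", "busybox")         # left over from a Pod that was deleted
report = sync_pods({"web": "nginx:1.25", "cache": "redis:7", "typo": "ngnix:1.25"}, rt)
print(report)              # {'web': 'Running', 'cache': 'Running', 'typo': 'ErrImagePull'}
print(sorted(rt.running))  # ['cache', 'web']
```

The misspelled image cannot be pulled, so that Pod is reported with an error instead of Running, and the leftover container from a deleted Pod is stopped.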

Container Runtime

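Kubernetes supports any container runtime that implements the Container Runtime Interface (CRI), such as containerd and CRI-O. Docker Engine was once supported directly through the built-in dockershim, which was removed in Kubernetes 1.24; it can still be used through the external cri-dockerd adapter. The container runtime pulls container images from a container registry and runs the containers with the resources and configuration the Kubelet specifies.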

Kube-proxy

Kube-proxy runs on each worker node and implements Kubernetes Services. It maintains network rules on the node (for example, iptables or IPVS rules) so that traffic sent to a Service's virtual IP address and port is routed and load-balanced to the pods backing that Service.
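In iptables mode, kube-proxy spreads a Service's traffic by writing one rule per endpoint and evaluating them in order, each matching with a probability chosen so that every endpoint receives an equal share. The sketch below reproduces that probability chain in plain Python; it does not generate real iptables rules, and the Service address, endpoint IPs, and helper names (`build_rules`, `pick`) are made up for the example. IPVS and other proxy modes balance traffic differently.

```python
import random
from collections import Counter

# Simplified sketch of how kube-proxy's iptables mode spreads Service traffic:
# one rule per endpoint, evaluated in order, where rule i (0-based) matches with
# probability 1/(n - i) among the packets that reach it. The last rule always
# matches, and each endpoint ends up with probability 1/n overall.

def build_rules(endpoints):
    n = len(endpoints)
    return [(1.0 / (n - i), ep) for i, ep in enumerate(endpoints)]


def pick(rules, rng):
    if not rules:
        return None                      # no ready endpoints: nothing to forward to
    for probability, endpoint in rules:
        if rng.random() < probability:
            return endpoint
    return rules[-1][1]                  # not reached: the last probability is 1.0


# Made-up Service virtual IP:port and backing Pod endpoints.
services = {("10.96.0.10", 80): ["10.244.1.5:8080", "10.244.2.7:8080", "10.244.2.9:8080"]}
rules = build_rules(services[("10.96.0.10", 80)])
print([round(p, 3) for p, _ in rules])   # [0.333, 0.5, 1.0]

rng = random.Random(42)
counts = Counter(pick(rules, rng) for _ in range(30_000))
print(counts)                            # roughly 10,000 per endpoint
```

The arithmetic behind the chain: the second endpoint is chosen with probability (2/3)(1/2) = 1/3 and the third with (2/3)(1/2)(1) = 1/3, matching the first endpoint's 1/3.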

CNI (Container Network Interface) Plugins

CNI plugins manage the network connectivity of pods. They set up each pod's network interface and IP address and provide the routing or overlay that lets pods communicate with each other across nodes. Various CNI plugins are available, offering different networking solutions to suit the cluster's requirements.
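The Kubernetes network model requires every pod to get its own IP address, so one job a CNI setup must handle is IP address management (IPAM). The sketch below shows one common scheme, in the spirit of the host-local IPAM plugin: the cluster CIDR is split into one subnet per node, and each node hands out pod IPs from its own subnet. The `NodeIPAM` class, the CIDR values, and the convention of reserving the first address for a bridge gateway are assumptions for illustration; real plugins persist allocations and vary in their details.

```python
import ipaddress

# Hypothetical sketch of per-node Pod IP allocation: the cluster CIDR is split
# into one subnet per node, and each node hands out Pod IPs from its subnet.

class NodeIPAM:
    def __init__(self, pod_cidr):
        self.network = ipaddress.ip_network(pod_cidr)
        # Reserve the first usable address for the node's bridge/gateway
        # (a common convention, not a CNI requirement).
        self.gateway = next(self.network.hosts())
        self.allocated = {}  # pod name -> IP address

    def allocate(self, pod):
        if pod in self.allocated:        # idempotent: same Pod, same IP
            return self.allocated[pod]
        in_use = set(self.allocated.values()) | {self.gateway}
        for ip in self.network.hosts():
            if ip not in in_use:
                self.allocated[pod] = ip
                return ip
        raise RuntimeError(f"pod CIDR {self.network} is exhausted")

    def release(self, pod):
        self.allocated.pop(pod, None)


# Example values only: a /16 cluster CIDR carved into /24 subnets per node.
subnets = ipaddress.ip_network("10.244.0.0/16").subnets(new_prefix=24)
ipam = {node: NodeIPAM(str(next(subnets))) for node in ("node-a", "node-b")}

print(ipam["node-a"].allocate("web-0"))  # 10.244.0.2
print(ipam["node-a"].allocate("web-1"))  # 10.244.0.3
print(ipam["node-b"].allocate("db-0"))   # 10.244.1.2
```

Because each node owns a distinct subnet, nodes can assign addresses independently without coordinating, and the routing or overlay part of the CNI setup only needs to know which subnet lives on which node.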

Managed Services in Kubernetes

Managed services in Kubernetes refer to services and resources that are provided and maintained by cloud service providers or third-party platforms. These managed services simplify the deployment and management of specific functionalities within a Kubernetes cluster, allowing developers and operations teams to focus on their core applications rather than the underlying infrastructure.

Some common managed services for Kubernetes include:

Azure Kubernetes Service (AKS)

- Provided by: Microsoft Azure.

- Cluster Management: AKS simplifies the deployment, management, and scaling of Kubernetes clusters on Azure by abstracting away the underlying infrastructure.

- Integration: Integrates with Azure services such as Microsoft Entra ID (formerly Azure Active Directory), Azure Monitor, and Azure Policy.

- Security: Built-in security features include Microsoft Entra ID integration, Role-Based Access Control (RBAC), and managed identities.

- Monitoring: Integration with Azure Monitor allows monitoring of cluster health, performance, and diagnostics.

Oracle Kubernetes Engine (OKE)

- Provided by: Oracle Cloud Infrastructure (OCI).

- Cluster Management: OKE offers fully managed Kubernetes clusters on Oracle Cloud, abstracting away the complexity of cluster management.

- High Availability: OKE supports multi-master clusters for high availability and resilience.

- Network Flexibility: Integration with Oracle Cloud Networking allows for easy setup and configuration.

- Cluster Autoscaling: OKE can automatically adjust the number of nodes in the cluster based on workload demands.

Elastic Kubernetes Service (EKS)

- Provided by: Amazon Web Services (AWS).

- Cluster Management: EKS provides managed Kubernetes clusters on AWS and operates the underlying infrastructure and control plane.

- High Availability: EKS runs the control plane across multiple Availability Zones (AZs) for high availability.

- Security: Integrates with AWS Identity and Access Management (IAM) for fine-grained access control.

- Persistent Storage: Integrates with AWS storage services such as Amazon Elastic Block Store (EBS) and Amazon Elastic File System (EFS) for persistent storage.

Google Kubernetes Engine (GKE)

- Provided by: Google Cloud Platform (GCP).

- Cluster Management: GKE is a fully managed Kubernetes service that automates cluster provisioning, scaling, and maintenance.

- Google Cloud Integration: Tight integration with Google Cloud services such as Identity and Access Management (IAM), Cloud Monitoring (formerly Stackdriver), and Cloud Logging for centralized logging.

- Autopilot Mode: GKE Autopilot offers a serverless-style experience in which Google manages the nodes, simplifying deployment.

- Preemptible Nodes: Supports preemptible VMs and their successor, Spot VMs, to reduce costs for fault-tolerant, non-critical workloads.

Container Deployment in Kubernetes

Let's start by covering traditional and virtualized deployments, and then move on to container deployment.

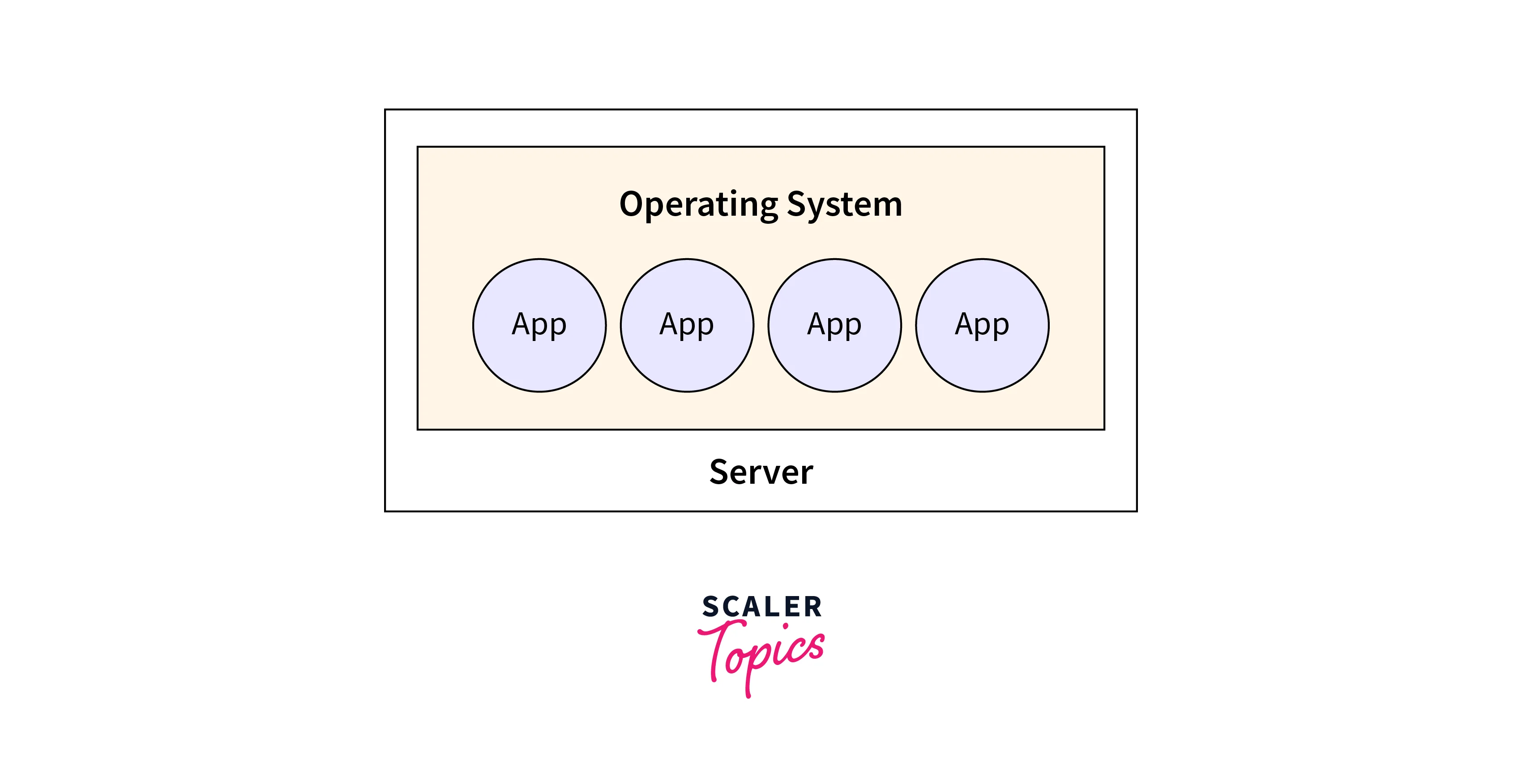

Traditional Deployment

In a traditional deployment model, applications are installed and run directly on physical servers. Each application is deployed with its required dependencies, libraries, and configurations, which ties it tightly to the underlying operating system and hardware. This tight coupling makes applications hard to manage and scale, because changes or updates to one application can affect others sharing the same system.

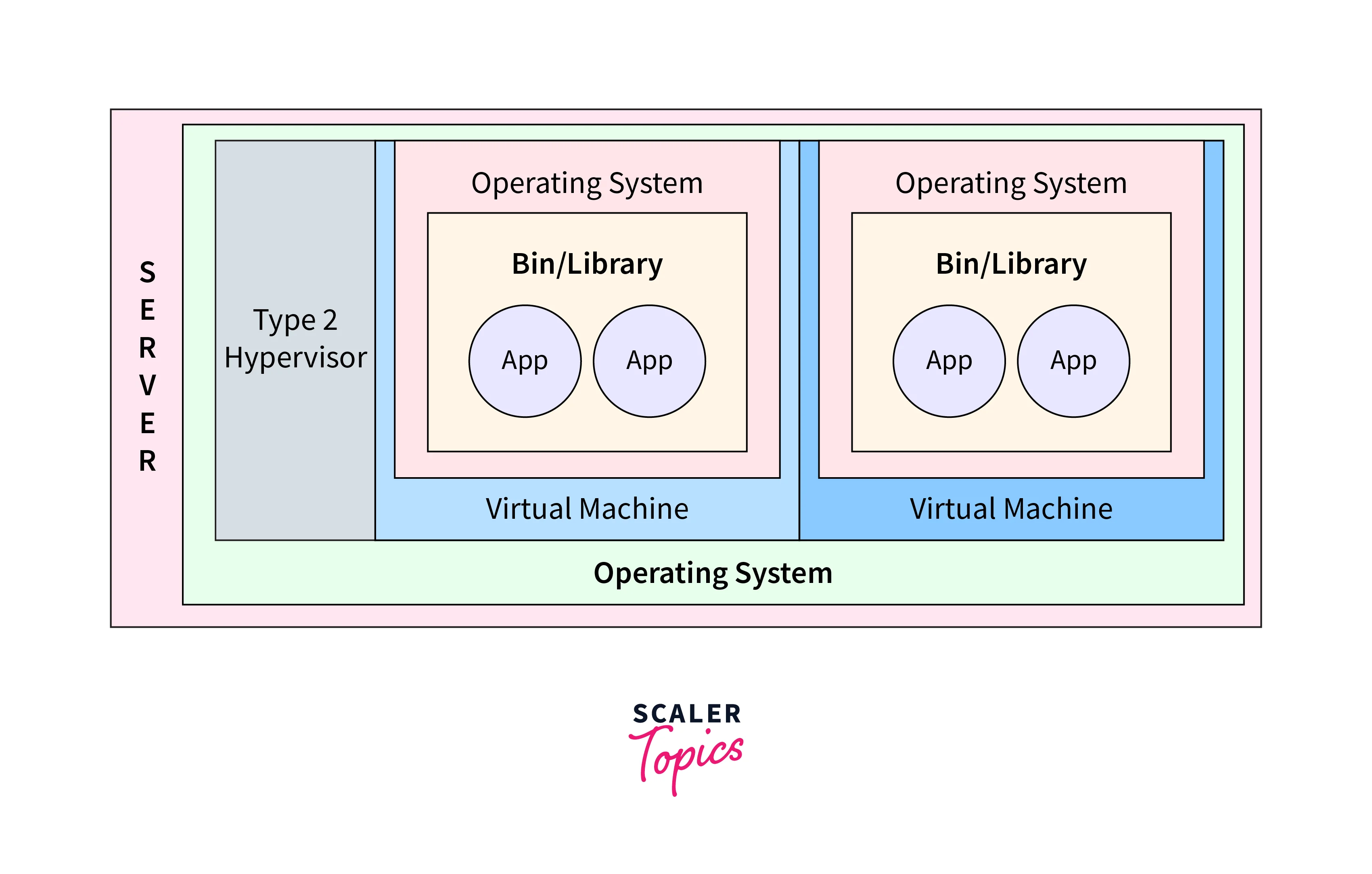

Virtualized Deployment

Virtualized deployment uses virtualization technologies to run multiple virtual machines on a single physical server. Each virtual machine is a self-contained unit running its own operating system and applications. Virtualization improves resource utilization and isolates applications from one another, since each VM can have its own dependencies and configurations. However, VMs are still relatively heavyweight: they are slower to provision and carry more resource overhead than containers.

In both traditional and virtualized deployment models, applications are not inherently designed for portability. Moving applications from one environment to another can be challenging due to differences in configurations, dependencies, and library versions. This is where container deployment comes in.

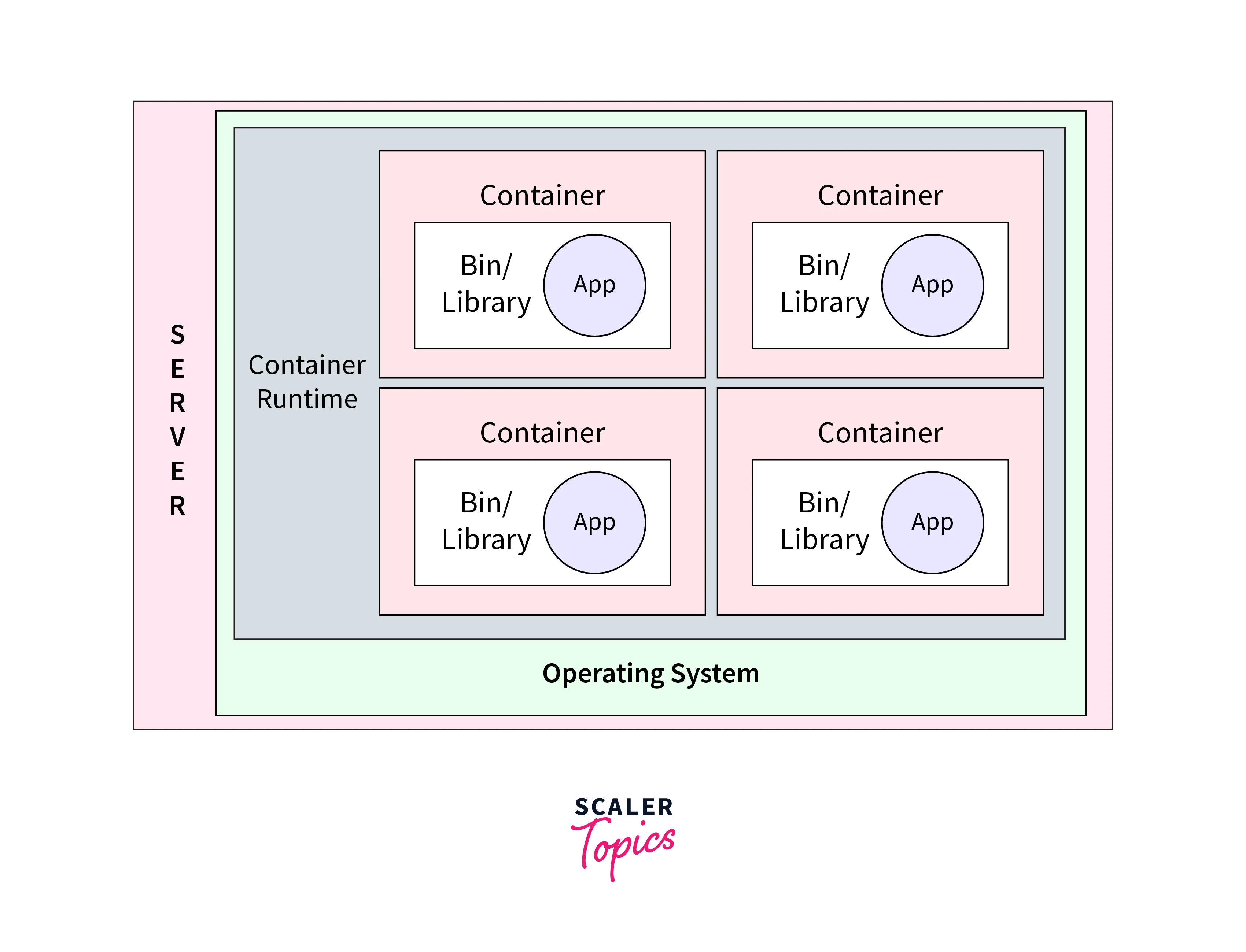

Container Deployment

Container deployment is a method of packaging, distributing, and running applications along with their dependencies, libraries, and configurations in a consistent and isolated environment called a container.

Containers provide a standardized unit for software delivery. Because an application travels together with its dependencies in an isolated runtime environment, it is highly portable and runs consistently as it moves from development through testing to production.

FAQs

Q. What is Kubernetes and its architecture?

A. Kubernetes is a powerful open-source platform for managing containerized applications that automates deployment, scaling, and orchestration across a cluster of machines. Its architecture consists of a control plane (running on one or more master nodes) and worker nodes, where the containers run, providing a scalable and flexible framework for running microservices.

Q. What is a pod in Kubernetes?

A. In Kubernetes, a pod is the smallest deployable unit. It represents one or more containers that are deployed together on a single node. Pods group tightly coupled containers that need to share resources: containers in the same pod share a network namespace, so they can reach each other on localhost, and they can share storage volumes.

Q. What is the difference between a pod and a node?

A. In Kubernetes, a pod is the smallest deployable unit that can contain one or more containers, while a node is an individual worker machine within the cluster where pods are scheduled and run. Pods are used to encapsulate and manage containers, while nodes provide the physical or virtual resources for running those containers.

Conclusion

- Kubernetes is a powerful open-source platform for managing containerized applications, enabling automated deployment, scaling, and efficient orchestration across a cluster of machines.

- It follows a master-worker architecture, where the master node controls the cluster, and worker nodes run the application containers.

- Key components of the master node include API Server, etcd, Scheduler, and Controller Manager.

- Worker node components include Kubelet, Container Runtime, and Kube-proxy.

- Managed services in Kubernetes are provided by cloud service providers to simplify deployment and management tasks. Examples include Azure Kubernetes Service (AKS), Oracle Kubernetes Engine (OKE), Elastic Kubernetes Service (EKS), and Google Kubernetes Engine (GKE).

- Container Deployment in Kubernetes:

  - Container deployment involves packaging and running applications with their dependencies in isolated containers.

  - Containers provide portability, consistency, scalability, and efficient resource utilization.

  - Container orchestration platforms like Kubernetes automate container deployment, management, and scaling.