Bagging in Machine Learning

Overview

There are several techniques in Machine Learning that help us build a model and improve its performance. One such technique is Ensemble Learning, in which multiple individual models, called base models, are combined to form a single, better-performing prediction model. Ensemble learning has changed the way many practitioners approach an ML problem. This article focuses on bagging, an ensemble learning technique.

What is Bagging in Machine Learning?

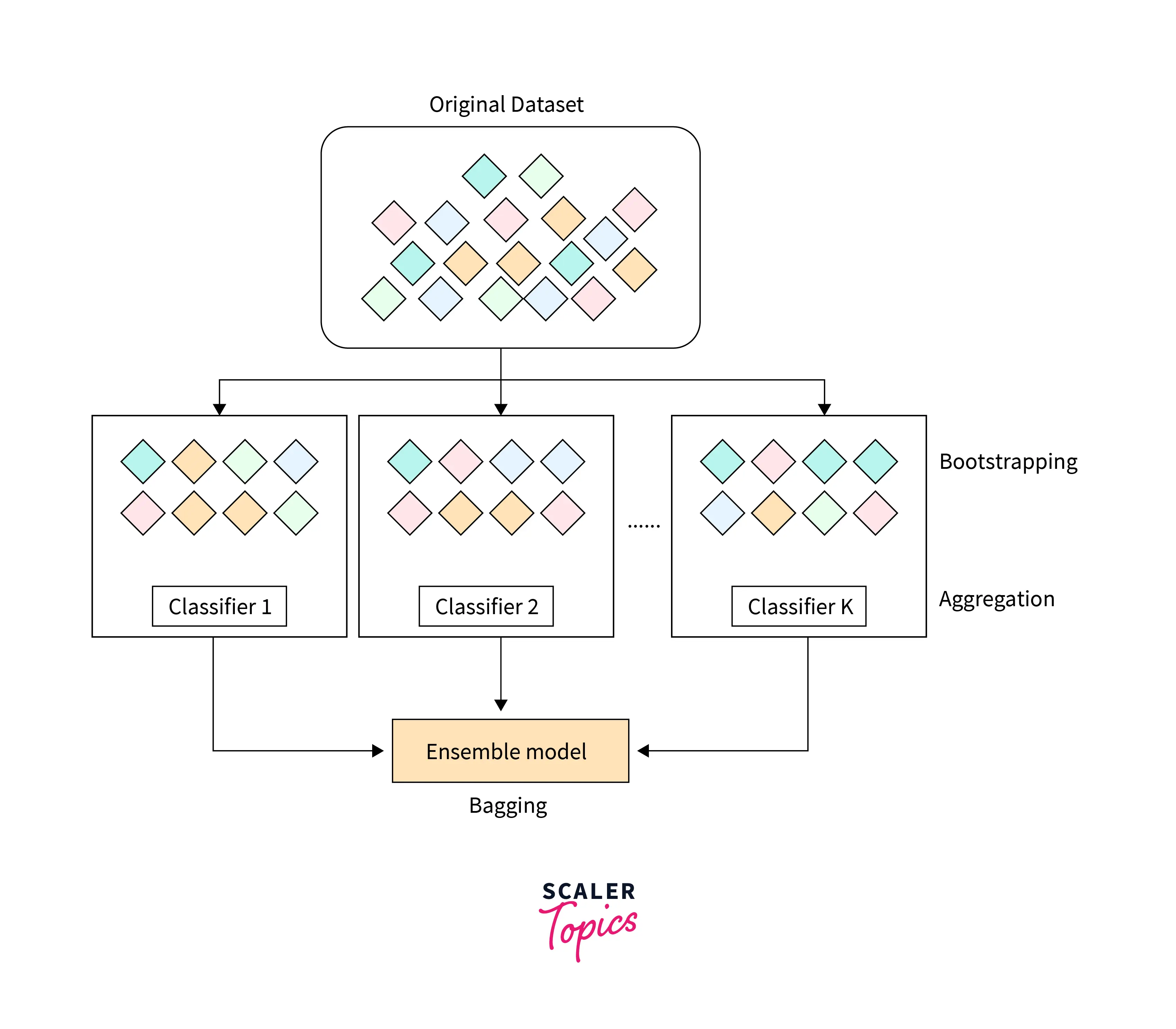

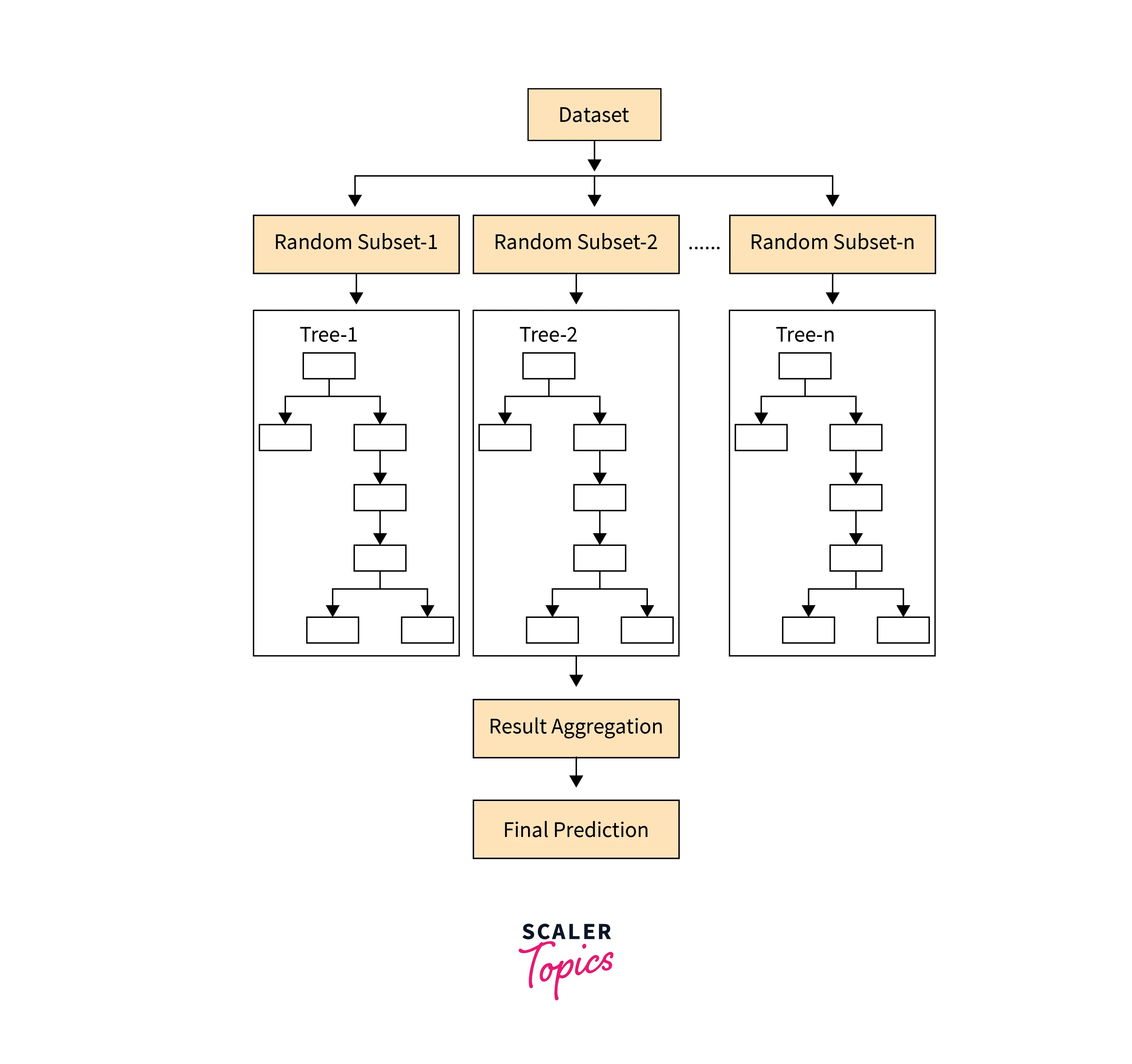

Bagging, also called Bootstrap Aggregation, is an ensemble learning method that helps improve the performance and accuracy of an ML model. Primarily, it reduces variance and so helps to overcome overfitting, in both classification and regression. The process draws random subsets of a dataset, with replacement, and trains a classifier or a regressor on each subset. The predictions of the individual models are then combined, through a majority vote for classification or averaging for regression, which typically increases the prediction accuracy.
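The two ingredients described above, sampling with replacement and aggregating predictions, can be sketched in a few lines of plain Python. This is only an illustration: the helper names `bootstrap_sample`, `majority_vote` and `average_prediction` are hypothetical and not part of any library.

```python
import random
from collections import Counter
from statistics import mean


def bootstrap_sample(data, rng):
    """Draw len(data) items from data with replacement (a bootstrap sample)."""
    return [rng.choice(data) for _ in data]


def majority_vote(labels):
    """Aggregate class predictions: return the most common label."""
    return Counter(labels).most_common(1)[0][0]


def average_prediction(values):
    """Aggregate regression predictions: return their mean."""
    return mean(values)


rng = random.Random(0)          # fixed seed so the sketch is repeatable
data = list(range(10))          # toy dataset of 10 observations
sample = bootstrap_sample(data, rng)

# Same size as the original data; some items usually repeat, others are left out.
print(len(sample), len(set(sample)))

print(majority_vote(["red", "white", "red"]))   # classification
print(average_prediction([1.0, 2.0, 3.0]))      # regression
```

Because items are drawn with replacement, a bootstrap sample usually contains duplicates and omits some of the original observations, which is what makes the individual models differ from one another.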

Steps to Perform Bagging

- Let's take a training dataset with x observations and y features. The first task is to select a random sample of observations from the training dataset with replacement (a bootstrap sample).

- Then we create a model from the sampled observations, using a randomly chosen subset of the y features.

- Of these candidate features, the one offering the best split is used to split each node of the decision tree, and the tree is grown in this way so that every node uses the best available split.

- The above steps are repeated n times, and the outputs of the individual decision trees are aggregated to give the final prediction.
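The steps above can be sketched by hand with NumPy and scikit-learn decision trees. This is a minimal sketch under stated assumptions, not a reference implementation: the wine dataset, the 75/25 split, the seed, `n_trees = 25`, and `max_features="sqrt"` for the random feature subset are all choices made here for illustration.

```python
import numpy as np
from sklearn.datasets import load_wine
from sklearn.model_selection import train_test_split
from sklearn.tree import DecisionTreeClassifier

X, y = load_wine(return_X_y=True)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.25, random_state=22  # assumed split, for illustration
)

rng = np.random.default_rng(22)
n_trees = 25  # the "n" in the steps above (assumed value)
trees = []

for _ in range(n_trees):
    # Step 1: bootstrap sample of the training rows, drawn with replacement.
    idx = rng.integers(0, len(X_train), size=len(X_train))

    # Steps 2-3: the tree considers a random subset of features at each split
    # and uses the best split among them.
    tree = DecisionTreeClassifier(
        max_features="sqrt", random_state=int(rng.integers(1_000_000))
    )
    tree.fit(X_train[idx], y_train[idx])
    trees.append(tree)

# Step 4: aggregate the individual trees by majority vote.
all_preds = np.stack([t.predict(X_test) for t in trees])  # (n_trees, n_test)
majority = np.array([np.bincount(col).argmax() for col in all_preds.T])

accuracy = float((majority == y_test).mean())
print(f"Hand-rolled bagging test accuracy: {accuracy:.3f}")
```

Choosing a random feature subset at each split is what random forests add on top of plain bagging; dropping `max_features` gives ordinary bagged trees.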

Evaluating a Base Classifier

To visualize the importance of bagging, we first need to see how a base classifier performs on a dataset.

We are going to use sklearn's wine dataset to classify different types of wine using a decision tree model.

Let's see the code:-
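A minimal sketch of such a baseline might look like the following. The 75/25 train/test split and the `random_state` value of 22 are assumptions chosen for illustration, so the exact accuracy you see may differ from the figure quoted in this article.

```python
from sklearn.datasets import load_wine
from sklearn.metrics import accuracy_score
from sklearn.model_selection import train_test_split
from sklearn.tree import DecisionTreeClassifier

# Load the wine dataset as a feature matrix X and a label vector y.
X, y = load_wine(return_X_y=True)

# Hold out a quarter of the data for testing (assumed split).
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.25, random_state=22
)

# Fit a single decision tree as the base classifier.
dtree = DecisionTreeClassifier(random_state=22)
dtree.fit(X_train, y_train)

train_acc = accuracy_score(y_train, dtree.predict(X_train))
test_acc = accuracy_score(y_test, dtree.predict(X_test))

print("Train data accuracy:", train_acc)
print("Test data accuracy:", test_acc)
```

A large gap between the training and test accuracy is the sign of overfitting that bagging is meant to reduce.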

Here's what the dataset looks like:-

Output:-

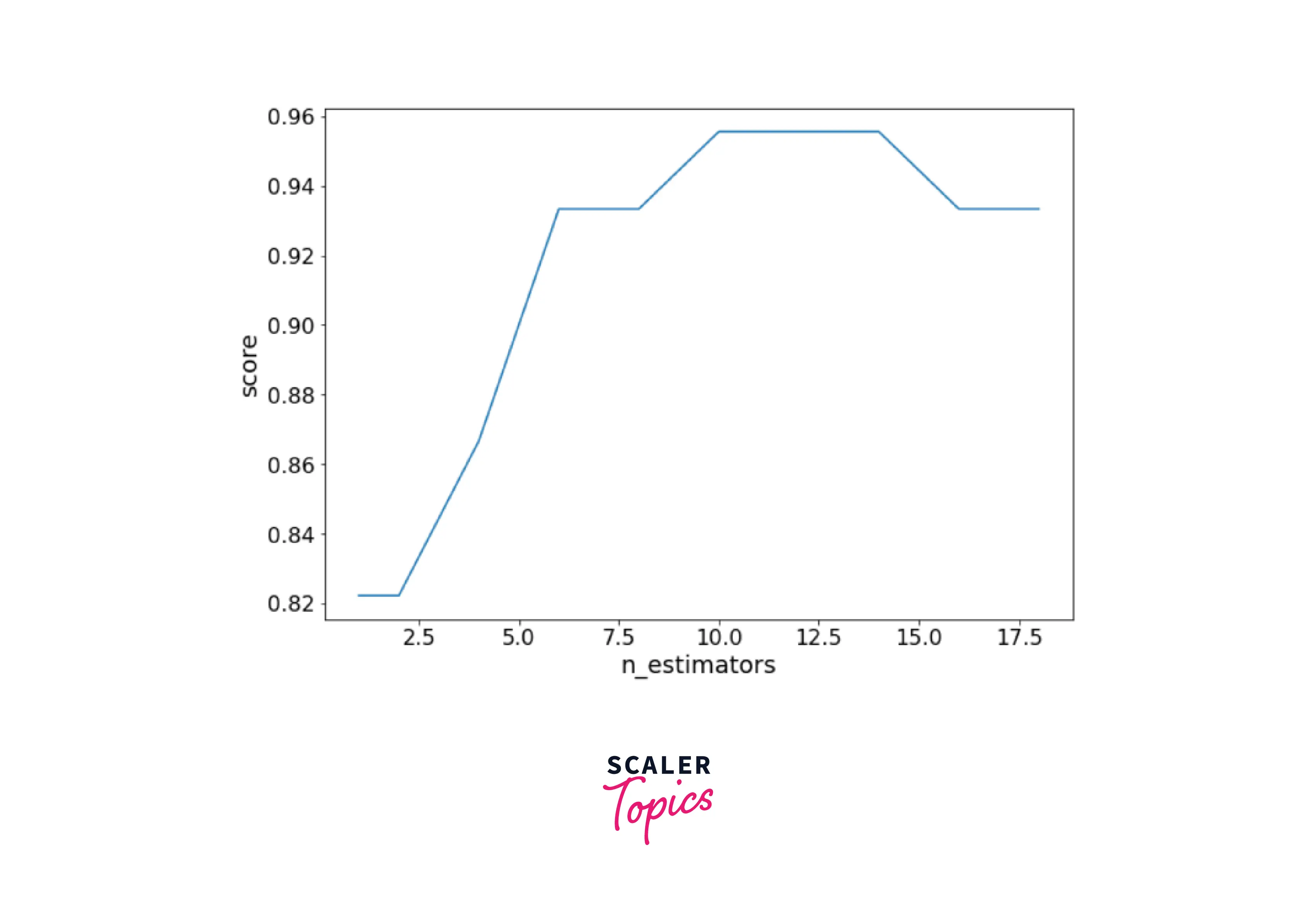

So the single decision tree reaches about 82% accuracy on the test dataset with the available parameters. Let's see how the Bagging Classifier compares with a single Decision Tree Classifier. For bagging, we need to set the hyperparameter n_estimators, which is the number of base classifiers that the model aggregates.
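As a rough sketch, a bagged model can be fitted with scikit-learn's `BaggingClassifier`. The value `n_estimators=12`, the split, and the seed are assumptions for illustration; the base estimator is left at its default, which is a decision tree.

```python
from sklearn.datasets import load_wine
from sklearn.ensemble import BaggingClassifier
from sklearn.metrics import accuracy_score
from sklearn.model_selection import train_test_split

X, y = load_wine(return_X_y=True)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.25, random_state=22  # assumed split
)

# n_estimators is the number of base classifiers to aggregate.
# With no base estimator given, BaggingClassifier uses a decision tree.
clf = BaggingClassifier(n_estimators=12, random_state=22)
clf.fit(X_train, y_train)

bagging_acc = accuracy_score(y_test, clf.predict(X_test))
print("Bagging test accuracy:", bagging_acc)
```

The fitted base models are available afterwards in `clf.estimators_`, which can be handy for inspecting individual trees.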

Now, let's plot the data for visualization.
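One way to produce such a plot is to sweep `n_estimators`, record the test accuracy for each value, and draw the result with matplotlib. The sweep range, the split, the seed, and the output file name `bagging_accuracy.png` are all assumptions made for this sketch.

```python
import matplotlib

matplotlib.use("Agg")  # non-interactive backend: write the figure to a file
import matplotlib.pyplot as plt
from sklearn.datasets import load_wine
from sklearn.ensemble import BaggingClassifier
from sklearn.metrics import accuracy_score
from sklearn.model_selection import train_test_split

X, y = load_wine(return_X_y=True)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.25, random_state=22  # assumed split
)

estimator_range = list(range(2, 22, 2))  # assumed sweep: 2, 4, ..., 20
scores = []

for n_estimators in estimator_range:
    clf = BaggingClassifier(n_estimators=n_estimators, random_state=22)
    clf.fit(X_train, y_train)
    scores.append(accuracy_score(y_test, clf.predict(X_test)))

# Plot test accuracy against the number of base classifiers.
fig, ax = plt.subplots(figsize=(9, 6))
ax.plot(estimator_range, scores, marker="o")
ax.set_xlabel("n_estimators")
ax.set_ylabel("Test accuracy")
ax.set_title("Bagging accuracy vs. number of base classifiers")
fig.savefig("bagging_accuracy.png")  # hypothetical output file name
```

In an interactive session you could drop the `matplotlib.use("Agg")` line and call `plt.show()` instead of saving the figure.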

Output:-

It's visible from the graph that, over a certain range of n_estimators, the accuracy rises above 95%, which is a good improvement on the base decision tree classifier.

Benefits and Challenges of Bagging

Benefits

- It reduces overfitting by lowering the variance of the model.

- Bagging improves the model’s accuracy.

- It deals with higher dimensional data with higher efficiency than base models.

Challenges

- It introduces a loss of interpretability of a model.

- It can be computationally expensive.

- Bagging works particularly well with less stable (high-variance) algorithms, so it offers less benefit when the base model is already stable.

Applications of Bagging

Bagging has a broad spectrum of applications. To illustrate this, here are a few examples:-

- Healthcare:- Ensemble methods such as bagging have been used for a series of bioinformatics problems, such as gene or protein selection to identify a specific trait of interest.

- Technology:- Bagging can improve the precision and accuracy of IT systems, such as network intrusion detection systems.

- Finance:- Bagging has been combined with deep learning models to help with fraud detection, credit risk evaluation, and option pricing problems, improving their accuracy.

Bagged Trees

Bagging, or Bootstrap Aggregation, constructs B regression trees using B bootstrapped training sets and averages the resulting predictions. The trees are grown deep and are not pruned. The trees built this way are called bagged trees.
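The description above can be sketched as follows. The synthetic noisy-sine data, the seed, and `B = 50` are assumptions made purely for illustration; `DecisionTreeRegressor` with its default settings grows each tree fully and applies no pruning.

```python
import numpy as np
from sklearn.tree import DecisionTreeRegressor

rng = np.random.default_rng(0)

# Synthetic regression data (assumed for this sketch): a noisy sine curve.
X = np.sort(rng.uniform(0, 6, size=200)).reshape(-1, 1)
y = np.sin(X).ravel() + rng.normal(scale=0.3, size=200)

B = 50  # number of bootstrapped training sets, and therefore of trees
trees = []
for _ in range(B):
    # Bootstrapped training set: rows drawn with replacement.
    idx = rng.integers(0, len(X), size=len(X))
    # Default settings: no depth limit and no pruning, so the tree grows deep.
    tree = DecisionTreeRegressor()
    trees.append(tree.fit(X[idx], y[idx]))

# Average the B individual predictions to get the bagged prediction.
X_new = np.linspace(0, 6, 5).reshape(-1, 1)
per_tree = np.stack([t.predict(X_new) for t in trees])  # shape (B, 5)
bagged = per_tree.mean(axis=0)

print(bagged)
```

Each deep tree on its own has low bias but high variance; averaging over the B trees is what brings the variance down.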


Conclusion

The key takeaways from this article are:-

- Bagging is an ensemble learning method.

- It helps in improving the performance and accuracy of an ML model.

- Random subsets of a dataset are drawn with replacement, and a classifier or regressor is trained on each subset. The final result is obtained by aggregating the individual predictions.

- Bagging also reduces overfitting.

- A Python programming example has also been provided in the article to help readers develop a better understanding.