Ensemble Methods in Machine Learning

Overview

An ensemble method is a technique in which the outputs of multiple predictors are combined to produce a more accurate outcome than a single predictor would typically give. The intuition is that different models make different errors, so combining them lets some of those errors cancel out. This article covers some basic yet popular ensemble methods in machine learning. More advanced techniques, such as bagging and boosting, will be covered in subsequent articles.

Prerequisites

- Learners must know the basics of supervised machine learning techniques.

- Familiarity with basic optimization techniques and with the mean, median, and mode will be helpful.

Introduction:

Ensemble methods in machine learning consolidate the outputs of multiple models to produce a better result for a predictive problem. These individual models are called base learners. A model's prediction error can be decomposed into bias, variance, and irreducible noise. Ensembling can reduce the first two (averaging mainly reduces variance, while methods such as boosting mainly reduce bias), making the model more accurate and robust; irreducible noise, by definition, cannot be removed. There are countless ensemble techniques one could devise. This article discusses some of the most widespread yet powerful ensemble methods in machine learning: mode, mean, weighted average, stacking, and blending.

Popular Ensemble Methods

Mode

The mode technique is also called max voting (or majority voting). Consider a model that classifies an image as a cat or a dog. Suppose we have three base learners: two classify the image as a cat, and the third classifies it as a dog. With max voting, the final outcome is the class that received the most votes, which here is cat.
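A minimal sketch of max voting in plain Python, assuming each base learner has already produced a class label. The function names `max_vote` and `max_vote_batch` are hypothetical, chosen only for illustration, and the three-image labels are made up.

```python
from collections import Counter


def max_vote(predictions):
    """Return the label predicted by the most base learners.

    Counter.most_common keeps first-seen order among equal counts,
    so a tie goes to the label that appears first in `predictions`.
    """
    return Counter(predictions).most_common(1)[0][0]


def max_vote_batch(per_model_predictions):
    """Apply max voting sample by sample.

    per_model_predictions[i][j] is model i's label for sample j.
    zip(*...) regroups the votes so that each tuple holds every
    model's label for one sample.
    """
    return [max_vote(votes) for votes in zip(*per_model_predictions)]


# The cat/dog example: two learners say "cat", one says "dog".
print(max_vote(["cat", "cat", "dog"]))  # cat

# Hypothetical labels from three learners on three images.
model_outputs = [
    ["cat", "dog", "dog"],
    ["cat", "cat", "dog"],
    ["dog", "cat", "cat"],
]
print(max_vote_batch(model_outputs))  # ['cat', 'cat', 'dog']
```

The tie-breaking rule here is an arbitrary choice; an odd number of learners avoids ties in binary problems. scikit-learn's `VotingClassifier` with `voting="hard"` performs the same kind of majority vote over fitted estimators.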

Mean

Here, the ensemble's output is simply the average of the base learners' predictions, which makes this technique a natural fit for regression problems. Consider an example where one has to predict the rent of a real estate property. Suppose three base learners return 32K, 35K, and 23K; the final outcome is (32K + 35K + 23K) / 3 = 30K.
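The rent example can be reproduced with a tiny helper. This is a sketch; `mean_ensemble` is a hypothetical name, and the values are the 32K, 35K, and 23K from the example written out in full.

```python
def mean_ensemble(predictions):
    """Average the base learners' numeric predictions (equal weights)."""
    if not predictions:
        raise ValueError("need at least one prediction")
    return sum(predictions) / len(predictions)


# 32K, 35K and 23K from the rent example above.
rent_predictions = [32_000, 35_000, 23_000]
print(mean_ensemble(rent_predictions))  # 30000.0
```

Averaging is sensitive to a single wildly wrong learner; taking the median of the predictions instead is a more outlier-robust alternative. scikit-learn's `VotingRegressor` averages the predictions of fitted regressors in the same way.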

Weighted Average

Here, instead of assigning equal weights to all base learners, each learner's weight is set according to some criterion, such as its performance on validation data. The weighted average technique reduces the influence of weaker learners and increases the influence of stronger ones to produce more accurate outcomes.

Each model's prediction is multiplied by a fixed weight, and the weighted predictions are summed and then divided by the sum of the weights. The challenge is choosing weights for which the ensemble performs better than every contributing model and better than an ensemble that uses equal weights.
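One simple way to choose the weights (an assumption here, not the only option) is an exhaustive grid search on a held-out validation set, keeping the combination with the lowest error. Because the grid below contains the equal-weight combination and every single-model combination, the selected weights can never do worse on that validation set than either baseline, which is the criterion described above. The validation data is made up, and all helper names are hypothetical.

```python
from itertools import product


def weighted_average(predictions, weights):
    """Sum of weight * prediction, divided by the sum of the weights."""
    return sum(p * w for p, w in zip(predictions, weights)) / sum(weights)


def mse(y_true, y_pred):
    """Mean squared error between two equal-length sequences."""
    return sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)


def ensemble_mse(per_model_preds, y_true, weights):
    # Regroup so that each tuple holds every model's prediction for one sample.
    combined = [weighted_average(col, weights) for col in zip(*per_model_preds)]
    return mse(y_true, combined)


def search_weights(per_model_preds, y_true, grid=(0.0, 0.25, 0.5, 0.75, 1.0)):
    """Try every weight combination from `grid`; keep the lowest validation MSE."""
    best_weights, best_err = None, float("inf")
    for weights in product(grid, repeat=len(per_model_preds)):
        if sum(weights) == 0:
            continue  # all-zero weights would divide by zero
        err = ensemble_mse(per_model_preds, y_true, weights)
        if err < best_err:
            best_weights, best_err = weights, err
    return best_weights, best_err


# Hypothetical validation targets and predictions from three models.
y_val = [10.0, 12.0, 9.0, 15.0, 11.0]
val_preds = [
    [10.5, 11.5, 9.2, 14.6, 11.3],   # model A: fairly accurate
    [12.0, 14.0, 11.0, 17.0, 13.0],  # model B: systematically 2 too high
    [8.0, 13.5, 7.5, 16.5, 10.0],    # model C: noisy
]

best_w, best_err = search_weights(val_preds, y_val)
equal_err = ensemble_mse(val_preds, y_val, (1.0, 1.0, 1.0))
single_errs = [mse(y_val, preds) for preds in val_preds]
print("best weights:", best_w, "MSE:", round(best_err, 4))
print("equal-weight MSE:", round(equal_err, 4))
print("single-model MSEs:", [round(e, 4) for e in single_errs])
```

The weights should be tuned on validation data rather than on the test set, otherwise the reported test error is optimistic. A grid grows exponentially with the number of models, so for more than a handful of models a numerical optimizer is usually more practical.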

Let's take an example. Suppose three models have learned (or fixed) weights of 0.75, 0.84, and 0.91, and their predictions for the task are 100, 93, and 97. The final outcome of the weighted-average ensemble is then:

(100 × 0.75 + 93 × 0.84 + 97 × 0.91) / (0.75 + 0.84 + 0.91) = 241.39 / 2.5 = 96.556
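The arithmetic above can be checked with a small helper. This is a sketch, and `weighted_average` is a hypothetical name. Dividing by the sum of the weights means the weights do not have to add up to 1.

```python
def weighted_average(predictions, weights):
    """Weighted mean: sum(w_i * p_i) / sum(w_i)."""
    if len(predictions) != len(weights):
        raise ValueError("need exactly one weight per prediction")
    total_weight = sum(weights)
    if total_weight == 0:
        raise ValueError("weights must not sum to zero")
    return sum(p * w for p, w in zip(predictions, weights)) / total_weight


predictions = [100, 93, 97]
weights = [0.75, 0.84, 0.91]
print(round(weighted_average(predictions, weights), 3))  # 96.556
```

With NumPy installed, `numpy.average(predictions, weights=weights)` computes the same weighted mean.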

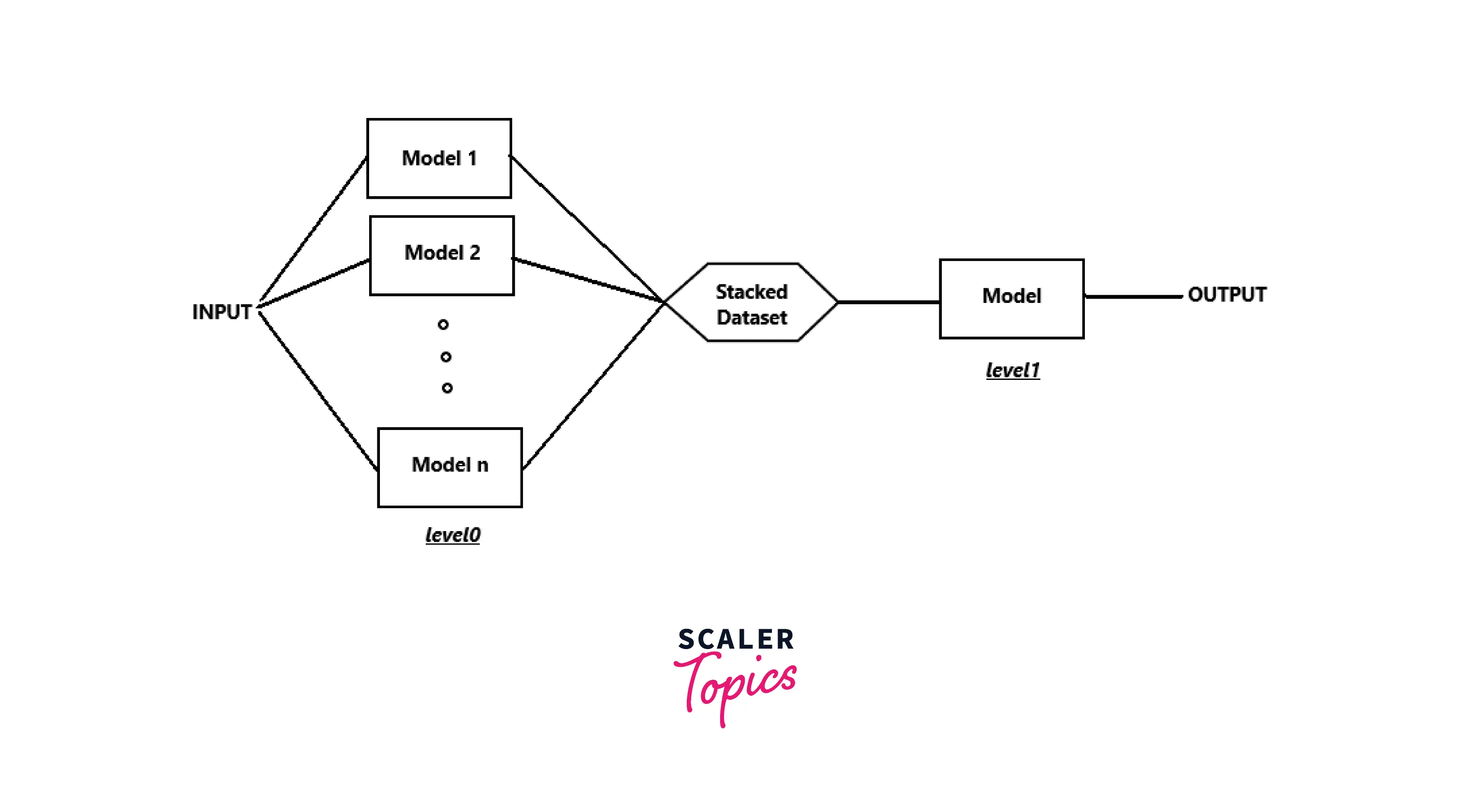

Stacking

The stacking ensemble method, also known as stacked generalization, combines base learners by training an additional model on their predictions. This additional model is called the meta-learner, or the "final estimator" in scikit-learn's terminology. Instead of using a fixed rule such as voting or averaging, stacking learns how to weight and combine the base learners so as to reduce their overall error. To avoid leaking training labels into the meta-learner, it is usually trained on out-of-fold base-model predictions obtained through cross-validation. Sometimes, the base model predictions are concatenated with the original features before training the final estimator.
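A short sketch using scikit-learn's `StackingClassifier`. The synthetic dataset, the choice of a decision tree and k-nearest neighbours as base learners, and logistic regression as the final estimator are all assumptions for illustration; nothing here guarantees that stacking beats the base learners on this particular data.

```python
from sklearn.datasets import make_classification
from sklearn.ensemble import StackingClassifier
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import train_test_split
from sklearn.neighbors import KNeighborsClassifier
from sklearn.tree import DecisionTreeClassifier

# Synthetic binary classification data (for illustration only).
X, y = make_classification(n_samples=1000, n_features=20, random_state=0)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.25, random_state=0
)

# Base learners as (name, estimator) pairs.
base_learners = [
    ("tree", DecisionTreeClassifier(max_depth=5, random_state=0)),
    ("knn", KNeighborsClassifier(n_neighbors=7)),
]

stack = StackingClassifier(
    estimators=base_learners,
    final_estimator=LogisticRegression(),  # the meta-learner
    cv=5,  # meta-learner is trained on 5-fold out-of-fold predictions
    passthrough=False,  # True would also feed the original features to it
)
stack.fit(X_train, y_train)

accuracy = stack.score(X_test, y_test)
print(f"stacking test accuracy: {accuracy:.3f}")

# For comparison, fit and score each base learner on its own.
for name, model in base_learners:
    single = model.fit(X_train, y_train).score(X_test, y_test)
    print(f"{name} test accuracy: {single:.3f}")
```

Setting `passthrough=True` corresponds to the variant mentioned above, where the base model predictions are concatenated with the original features. `StackingRegressor` follows the same pattern for regression.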

Blending

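Blending is a close, simpler variant of stacking: instead of training the final model on cross-validated (out-of-fold) predictions, it trains the final model on predictions made on a single held-out validation set. The steps for the blending ensemble technique are as follows: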

- First, the training data is divided into training and validation sets.

- Base models are fit on the training set.

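- The base models make predictions on the validation set and on the test set.

- The validation-set predictions, together with the original validation features, are used as input features to train a final model.

- The final model makes the final predictions on the test data, using the base models' test-set predictions (and the test features) as its inputs.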
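The blending steps above can be sketched by hand with scikit-learn estimators. The synthetic data, split sizes, and choice of models are assumptions for illustration. Following the description, the final model sees the original features plus the base models' predicted class-1 probabilities.

```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import train_test_split
from sklearn.neighbors import KNeighborsClassifier
from sklearn.tree import DecisionTreeClassifier

# Synthetic data; hold out a test set first.
X, y = make_classification(n_samples=1000, n_features=20, random_state=0)
X_rest, X_test, y_rest, y_test = train_test_split(
    X, y, test_size=0.2, random_state=0
)

# Step 1: divide the training data into training and validation sets.
X_train, X_val, y_train, y_val = train_test_split(
    X_rest, y_rest, test_size=0.25, random_state=0
)

# Step 2: fit the base models on the training set only.
base_models = [
    DecisionTreeClassifier(max_depth=5, random_state=0),
    KNeighborsClassifier(n_neighbors=7),
]
for model in base_models:
    model.fit(X_train, y_train)

# Step 3: predict on the validation and test sets
# (one column of class-1 probabilities per base model).
val_preds = np.column_stack([m.predict_proba(X_val)[:, 1] for m in base_models])
test_preds = np.column_stack([m.predict_proba(X_test)[:, 1] for m in base_models])

# Step 4: validation features + validation predictions train the final model.
meta_X_val = np.hstack([X_val, val_preds])
meta_X_test = np.hstack([X_test, test_preds])
blender = LogisticRegression(max_iter=1000)
blender.fit(meta_X_val, y_val)

# Step 5: the final model predicts on the test data.
final_preds = blender.predict(meta_X_test)
accuracy = (final_preds == y_test).mean()
print(f"blending test accuracy: {accuracy:.3f}")
```

Compared with cross-validated stacking, the final model here learns from only the validation rows, which is simpler and faster but uses less data.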

Applications of Ensemble Methods in Machine Learning

- Ensemble techniques can be applied to classification, regression, feature selection, and other tasks.

- An ensemble usually performs better than the average performance of its contributing members, and often better than the best single member, especially when the members make different errors. Hence, ensembling is a favorite choice among data science practitioners for solving real-life problems.

- Ensembles are generally harder to interpret than a single simple model, but post-hoc explainable AI methods can still be used to explain their predictions. This makes them usable in applications where an explanation is required.

- Ensembling tends to reduce the spread in a model's performance, making results more stable across different training runs and data samples.

- The mechanism for improved performance with ensembles is often the reduction in the variance component of prediction errors made by the contributing models.
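The variance-reduction mechanism in the last point can be illustrated with a small simulation. It assumes an idealized setting with made-up numbers: every model is unbiased and its error is Gaussian with standard deviation sigma = 5. Averaging n models with independent errors divides the error variance by n; when the errors are correlated with coefficient rho, the variance of the average is rho * sigma^2 + (1 - rho) * sigma^2 / n, so the benefit shrinks as the models become more alike.

```python
import numpy as np

rng = np.random.default_rng(0)

true_value = 30.0   # quantity every model tries to predict (made up)
n_models = 10
n_trials = 100_000  # repeated experiments, used to estimate variances
sigma = 5.0         # std of each model's error (assumption)

# Case 1: each model's error is independent of the others.
independent = true_value + rng.normal(0.0, sigma, size=(n_trials, n_models))

# Case 2: errors correlated with coefficient rho, modelled as a shared
# component plus an individual one (each model's variance stays sigma**2).
rho = 0.5
shared = rng.normal(0.0, sigma * np.sqrt(rho), size=(n_trials, 1))
individual = rng.normal(0.0, sigma * np.sqrt(1 - rho), size=(n_trials, n_models))
correlated = true_value + shared + individual  # shared broadcasts across models

single_var = independent[:, 0].var()
avg_var_independent = independent.mean(axis=1).var()
avg_var_correlated = correlated.mean(axis=1).var()

print(f"single model variance:         {single_var:.2f}")           # close to 25
print(f"average of 10, independent:    {avg_var_independent:.2f}")  # close to 2.5
print(f"average of 10, correlated 0.5: {avg_var_correlated:.2f}")   # close to 13.75
```

This is one reason ensembles benefit from diverse base learners: the less correlated their errors, the more averaging helps.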

Conclusion:

- In this article, we have learned about ensemble methods in machine learning.

- We discussed several popular yet potent ensemble learning techniques: mode, mean, weighted average, stacking, and blending.

- Next, you will learn about bagging and boosting.