Feature Scaling in Machine Learning

Overview

Building a good machine learning model requires us to find out the perfect composition or blend of features. Think of it like this, when you make a mixed fruit juice, you don't want the taste of a particular fruit to dominate over others, in the same way, in an ML model, we don't want a feature to have a better standing than others. We need to scale the features so that a feature with a large magnitude does not dominate over the others.

Introduction

Feature Scaling also known as data normalization is a data preprocessing step. It is a method used to normalize the range of independent variables or features of data. Independent variables are of different types. The numerical ones can be of type age(0-100 years), salary(in the range of 1000s), dimensions(decimal points), and many more. We don't want our machine learning model to confuse a feature with a larger magnitude as a better one. Feature scaling in Machine Learning would help all the independent variables to be in the same range, for example- centered around a particular number(0) or in the range (0,1), depending on the scaling technique.

Why Feature Scaling?

The machine learning models assign weights to the independent variables according to their data points and conclusions for output. In that case, if the difference between the data points is high, the model will need to provide more significant weight to the farther points, and in the final results, the model with a large weight value assigned to undeserving features is often unstable. This means the model can produce poor results or can perform poorly during learning.

When to do Scaling?

Feature Scaling in Machine Learning is recommended while using most of the basic algorithms like linear and logistic regression, artificial neural networks, clustering algorithms with k value, etc. The features must be of different ranges to be brought down to a common field.

Gradient Descent Algorithm

The gradient descent function works by sliding through the data set while applied to the data set step by step. It is a first-order iterative optimization algorithm for finding a local minimum of a different function. The difference in the ranges of features will cause different step sizes for each feature. Here's the formula:

Where theta is the feature, x is the input, h(x) is the model's output and y is the actual output. Feature scaling helps the gradient descent converge more quickly towards the minima.

Distance Based-Algorithm

Machine learning algorithms, such as KNN, K-means, SVM, etc., use distance-based algorithms to go about their business. They use distances between data points to determine their similarity. Hence these algorithms are most affected by the range of features. There is a need for rescaling the data so the data is well spread in the data space and algorithms can learn better from it.

Tree-based Algorithms

Feature scaling in Machine Learning does not have a lot of effect on Tree based algorithms such as decision trees and random forests. This is because a decision tree splits a node based on a single feature, and this split on a feature is not influenced by other features.

Techniques to Perform Feature Scaling

Min-Max Normalization (or Min-Max scaling)

This technique rescales a feature or observation value with a distribution value between 0 and 1.

Formula:

Here, x is the feature, and min() and max() are the minima and maximum values of the feature, respectively.

Standardization

This technique rescales a feature value so that it has a distribution with 0 mean value and variance equal to 1.

Formula:

Here, σ is the standard deviation of the feature vector, and x̄ is the average of the feature vector.

Log Scaling

Log scaling calculates the log of the feature's values to reduce a wide range to a small range. Formula:

Where x is the concerned feature.

Normalization or Standardization

There is no correct answer as to whether normalization or standardization should be preferred.

Here are the conditions when normalization is preferred:-

- When the distribution of data does not follow a Gaussian distribution.

- While doing image processing, where pixel intensities have to be normalized to fit within a certain range (i.e., 0 to 255 for the RGB color range) or any similar use cases which require a specific range.

When to use standardization:-

- When the distribution of data follows a Gaussian distribution.

- While performing clustering or principal component analysis(PCA).

Implementation of Feature Scaling on Data

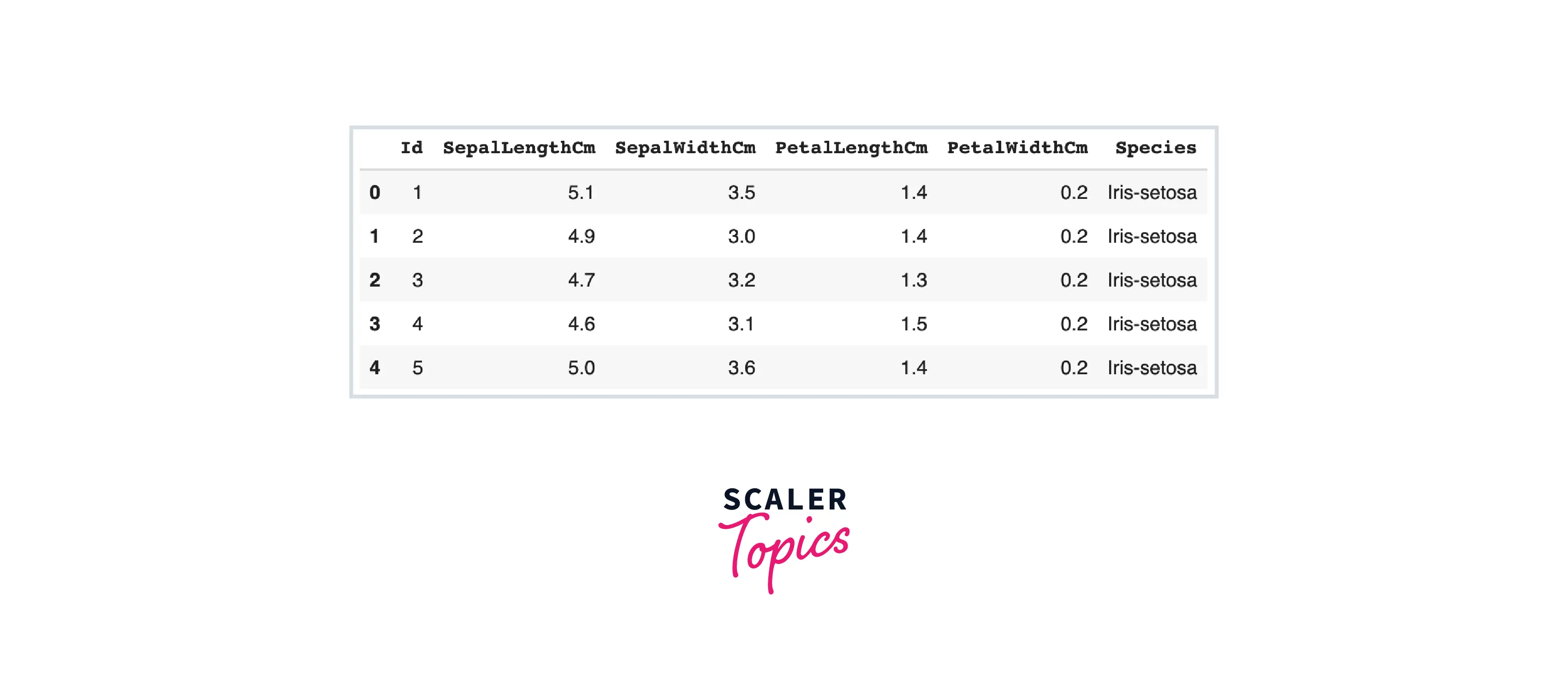

Implementation of Feature scaling in python is simple and quick. We use the sklearn's preprocessing.MinMaxScaler and Standardisation for normalization and standardization. Code example:- We use Kaggle's Iris dataset for this example.

Let us load the dataset first:-

Output:-

The features of sepal length, width, petal length, and width are of different ranges. Then we select the features that need to be scaled:-

Output:-

Next, we perform min-max scaling on the selected features.

Output:-

Now we see how standardization is done.

Output:-

Elevate your machine learning proficiency with our Maths for Machine Learning Certification Course. Get started today!

Conclusion

The key takeaways from this article are:-

- Feature scaling in Machine Learning is a method used to normalize the range of independent variables or features of data.

- Gradient descent and distance-based algorithms are heavily impacted by the range of features.

- Standardization and normalization are two primary ways to apply feature scaling in Machine Learning.

- We use the sklearn's preprocessing.MinMaxScaler and Standardisation for normalization and standardization