Grid Search in Machine Learning

Overview

When it comes to Computer Science, it is hard to ignore Machine Learning. One of the fastest-growing and most sought-after fields, Machine Learning aims to make a computer learn on its own from past data. Image recognition, speech recognition, email filtering, and recommender systems are some of the many tasks it is used for.

In this article, we will talk about Grid Search, a method used to improve the accuracy of Machine Learning models by tuning their hyperparameters.

Pre-Requisites

To get a better understanding of this article, the reader should:

- Be familiar with basic Machine Learning algorithms like Linear and Logistic Regression.

Introduction

As mentioned above, Grid Search is a technique used for hyperparameter tuning. It trains and evaluates the model once for each combination of hyperparameter values we ask it to try, and reports the combination that gives the best result.

Apart from returning the best combination, Grid Search also records an evaluation score for every combination it tries, which gives us better insight into how our machine-learning model responds to its hyperparameters.

Without further ado, let us look at why Grid Search is needed in Machine Learning optimization.

Types of Optimization in Machine Learning

A standard approach in Machine Learning is to try several models and compare them to see which algorithm gives the best accuracy. This is a good method, but we can certainly optimize it.

Optimizing the Choice of the Best Machine Learning Model

Gathering the correct dataset is an integral part of Machine Learning, as a dataset provides more than just data. By looking at a simple dataset, we can often figure out the relationships between the dependent and independent variables. This does not apply to complex datasets, though, where those relationships are much harder to decode.

When the relationship between variables is reasonably clear, we can pick a suitable Machine Learning algorithm directly. If we see a linear relationship between the variables and the number of variables is small, we can simply use the Linear Regression algorithm.

For a robust and well-fitting model, we need to separate the data into train and test data, fit several models on the training data, and test each one on the test data to find a model that fits the data best. We will keep the model with the lowest error on the test data.
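The procedure described above could be sketched as follows. This is only an illustration: the Iris dataset, the three candidate classifiers, and the 75/25 split are assumptions made for the example and are not prescribed by this article.

```python
from sklearn.datasets import load_iris
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import train_test_split
from sklearn.neighbors import KNeighborsClassifier
from sklearn.tree import DecisionTreeClassifier

# Assumption: Iris is used purely as a small, built-in example dataset.
X, y = load_iris(return_X_y=True)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.25, random_state=0, stratify=y
)

# Assumption: these three models are arbitrary candidates for illustration.
candidates = {
    "logistic_regression": LogisticRegression(max_iter=1000),
    "decision_tree": DecisionTreeClassifier(random_state=0),
    "k_nearest_neighbors": KNeighborsClassifier(),
}

# Fit every candidate on the training data and measure its test error.
test_error = {}
for name, model in candidates.items():
    model.fit(X_train, y_train)
    test_error[name] = 1.0 - model.score(X_test, y_test)

# Keep the model with the lowest error on the test data.
best_name = min(test_error, key=test_error.get)
print(test_error)
print("Lowest test error:", best_name)
```

Each candidate here runs with its default hyperparameters, so the comparison only tells us which model works best out of the box.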

Optimize a Model's Fit using Hyperparameter Tuning

As mentioned above, choosing a Machine Learning model by analyzing the dataset is a great first step. However, we can go further by optimizing the hyperparameters of the chosen model. Hyperparameters are the configuration options that we set before training, as opposed to the parameters the model learns from the data. They have a great influence on the accuracy of a Machine Learning model.

Therefore, hyperparameter tuning can further reduce the error on the testing dataset. Grid Search allows us to find the best set of hyperparameters among the values we try, which in turn increases the accuracy and decreases the error of our Machine Learning models.

Here are some of the hyperparameters of the Decision Tree classifier:

DecisionTreeClassifier(ccp_alpha=0.0, class_weight=None, criterion='gini',
                       max_depth=None, max_features=None, max_leaf_nodes=None,
                       min_impurity_decrease=0.0, min_impurity_split=None,
                       min_samples_leaf=1, min_samples_split=2,
                       min_weight_fraction_leaf=0.0, presort='deprecated',
                       random_state=None, splitter='best')
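A listing like the one above can be produced for whichever scikit-learn version is installed by calling `get_params()` on an estimator. This is a small sketch. The exact set of names depends on the version, so older entries such as `presort` or `min_impurity_split` may be absent in newer releases.

```python
from sklearn.tree import DecisionTreeClassifier

# get_params() returns the estimator's hyperparameters and their current values.
params = DecisionTreeClassifier().get_params()

for name, value in sorted(params.items()):
    print(f"{name} = {value!r}")
```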

What is Grid Search?

Now that we understand why hyperparameters need tuning, let us look at Grid Search in detail.

The "Search" in Grid Search

The "Search" in Grid Search refers to the problem that Grid Search solves: finding the combination of hyperparameters that minimizes the error and maximizes the accuracy of our model. Grid Search looks for that combination by trying out many different combinations of hyperparameter values.

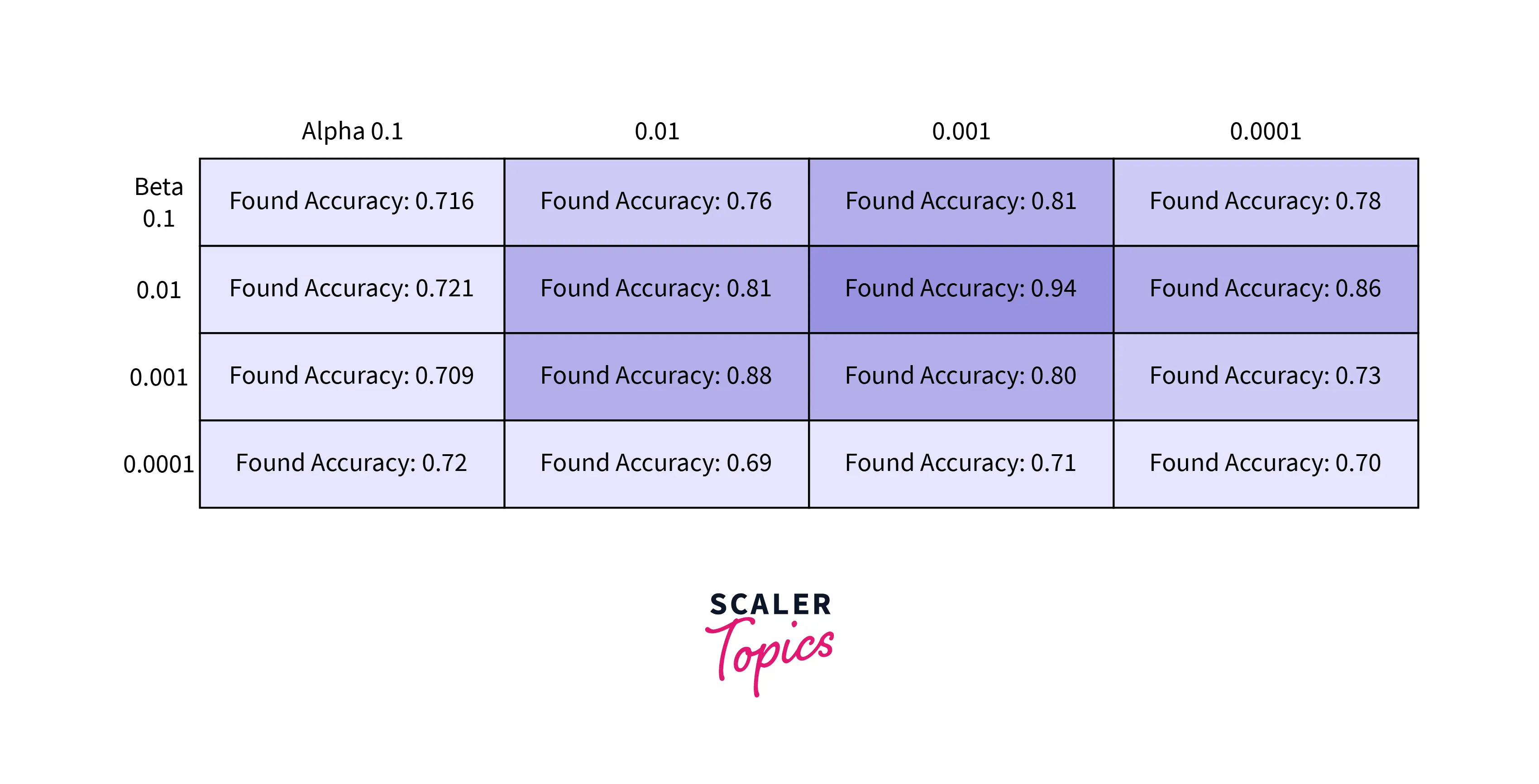

The "Grid" in Grid Search

Now that we know the goal of Grid Search, note that it is computationally infeasible to test every conceivable combination of hyperparameter values and choose the optimal one, since many hyperparameters can take an unlimited range of values. To solve this problem, we create a Grid.

The Grid helps us to define the limits for testing, and the Grid Search only tries the various combinations in the said range. This Grid specifies which values should be tried for each hyperparameter.
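As a minimal sketch of what such a Grid looks like, the snippet below enumerates every combination from a small dictionary of candidate values using only the Python standard library. The hyperparameter names and values in `param_grid` are assumptions chosen for illustration.

```python
from itertools import product

# Assumption: an illustrative grid for a decision tree; each key maps to
# the list of values that should be tried for that hyperparameter.
param_grid = {
    "max_depth": [2, 4, 6],
    "min_samples_split": [2, 5],
    "criterion": ["gini", "entropy"],
}

# The Cartesian product of the value lists gives every combination in the grid.
names = list(param_grid)
combinations = [
    dict(zip(names, values))
    for values in product(*(param_grid[name] for name in names))
]

print(len(combinations))  # 3 * 2 * 2 = 12 combinations
print(combinations[0])
```

Grid Search only ever evaluates the combinations listed in this grid, so the best result it can report is limited by the values we choose to include.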

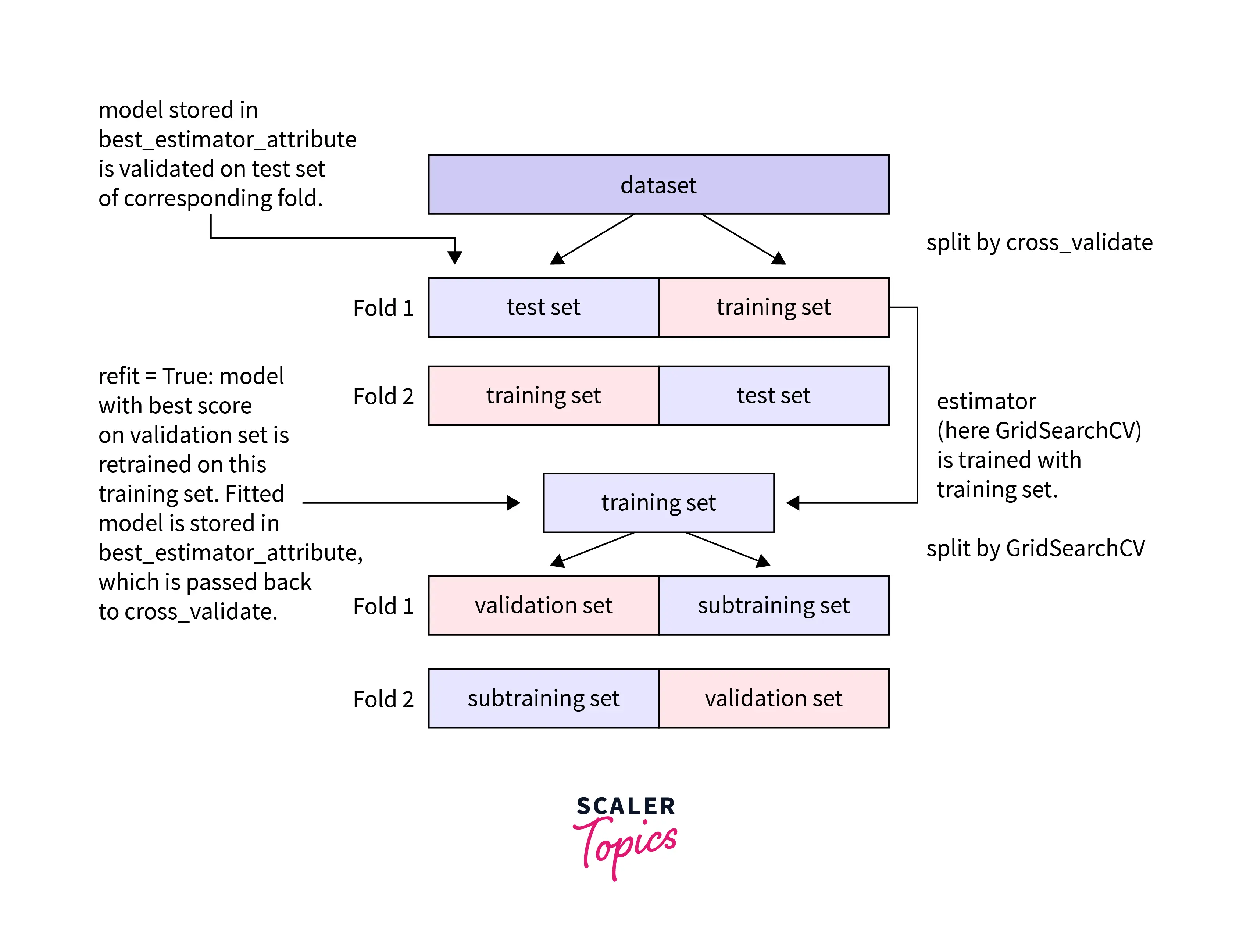

The "Cross-Validation" in Grid Search

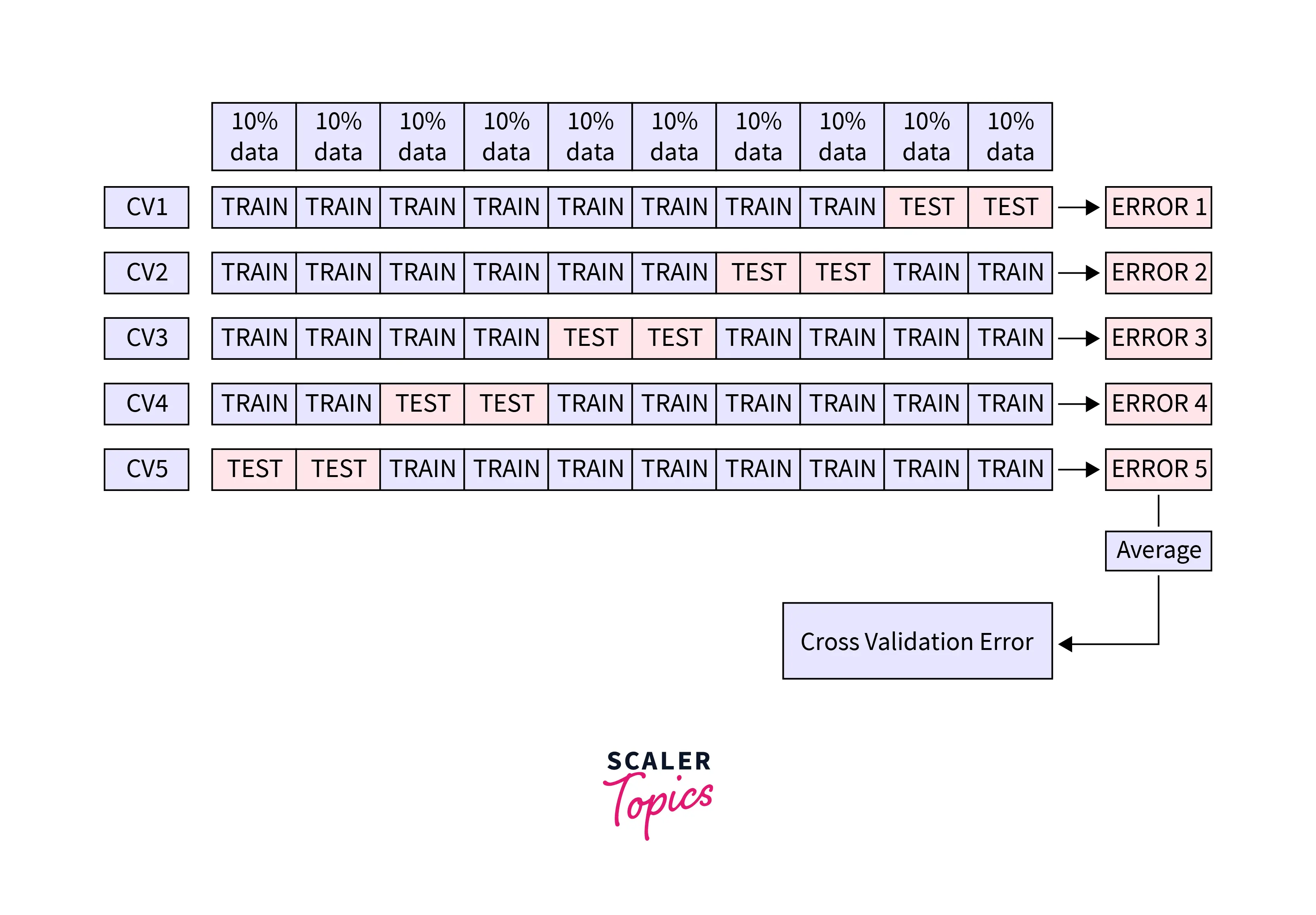

In Machine Learning, every combination of hyperparameters carries the risk of overfitting: it might work well on the training data but perform poorly on unseen test data. To guard against this, Grid Search scores each combination by its cross-validation error rather than by its training error.

We split the dataset into K parts, for example K = 5. Then, we fit the model K times, each time leaving a different part out (one-fifth of the data when K = 5). The part that was left out is used to calculate the error and measure performance for that fit.

The average of the K errors is the cross-validation error. Because every data point is used for evaluation exactly once, this estimate is more reliable than a single train/test split and increases the dependability of the final choice.
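The mechanics can be sketched in plain Python. To keep the example self-contained, the "model" here is a deliberately trivial one that predicts the mean of its training targets, and the data is a made-up list. Both are assumptions for illustration, as are the helper names `k_fold_indices` and `cross_validation_error`.

```python
from statistics import mean


def k_fold_indices(n_samples, k):
    """Split range(n_samples) into k nearly equal, contiguous folds."""
    sizes = [n_samples // k + (1 if i < n_samples % k else 0) for i in range(k)]
    folds, start = [], 0
    for size in sizes:
        folds.append(list(range(start, start + size)))
        start += size
    return folds


def cross_validation_error(y, k):
    """Return the average held-out squared error and the per-fold errors."""
    fold_errors = []
    for held_out in k_fold_indices(len(y), k):
        held_out_set = set(held_out)
        train = [y[i] for i in range(len(y)) if i not in held_out_set]
        prediction = mean(train)  # "fit" the toy model on the other k - 1 folds
        fold_errors.append(mean((y[i] - prediction) ** 2 for i in held_out))
    return mean(fold_errors), fold_errors


# Assumption: toy target values; real data would normally be shuffled first.
y = [1.0, 2.0, 3.0, 4.0, 5.0, 6.0, 7.0, 8.0, 9.0, 10.0]
cv_error, fold_errors = cross_validation_error(y, k=5)
print(len(fold_errors), cv_error)
```

In Grid Search, this whole procedure is repeated once for every combination of hyperparameters in the grid.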

What makes Grid Search so Important?

Automation is a concept that developers use on a daily basis. It makes their lives a whole lot easier. Grid Search allows developers to automate the process of finding the perfect combination of hyperparameters to get the best Machine Learning model possible.

This does not change the fact that choosing the error metric, selecting the type of Machine Learning model, and preparing the data are still left for us to do. But the repetitive work of trying out various combinations of hyperparameters is handled effectively by Grid Search.

Implementation of Grid Search in Python

Now that we know the basics of Grid Search, let us implement it using GridSearchCV provided by sklearn.
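A minimal sketch of such an implementation is shown below. The Iris dataset, the decision tree estimator, the values in `param_grid`, and the choice of 5-fold cross-validation are all assumptions made for this example.

```python
from sklearn.datasets import load_iris
from sklearn.model_selection import GridSearchCV, train_test_split
from sklearn.tree import DecisionTreeClassifier

# Assumption: Iris is used as a small, built-in example dataset.
X, y = load_iris(return_X_y=True)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.25, random_state=0, stratify=y
)

# The grid: the values to try for each hyperparameter (illustrative choices).
param_grid = {
    "criterion": ["gini", "entropy"],
    "max_depth": [2, 3, 4, 5, None],
    "min_samples_split": [2, 5, 10],
}

# The search: every combination is scored with 5-fold cross-validation.
grid_search = GridSearchCV(
    estimator=DecisionTreeClassifier(random_state=0),
    param_grid=param_grid,
    scoring="accuracy",
    cv=5,
)
grid_search.fit(X_train, y_train)

print("Best parameters:", grid_search.best_params_)
print("Best cross-validation accuracy:", grid_search.best_score_)
print("Accuracy on held-out test data:", grid_search.score(X_test, y_test))
```

The test split is kept out of the search on purpose, so the final accuracy is measured on data that played no part in choosing the hyperparameters.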

Output

After execution, we can see the best parameters we can use and the best score we can achieve on our model.

Limitations of Grid Search

Now that we have learned how Grid Search works and how to implement it, let us go through some of its limitations.

- Grid Search is slow. The model has to be trained and evaluated once for every combination in the grid and for every cross-validation fold, so the total number of fits is the number of combinations multiplied by K.

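- Grid Search scales poorly with dimensionality. Each additional hyperparameter multiplies the number of combinations, so a grid over many hyperparameters can take an impractically long time to finish. A large, high-dimensional dataset compounds the problem, because every individual fit also takes longer.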
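To get a feel for how quickly the cost grows, the following sketch counts the number of model fits a grid requires. The helper name `number_of_fits` and both grids are hypothetical and only serve to illustrate the arithmetic.

```python
from math import prod


def number_of_fits(param_grid, k_folds):
    """Combinations in the grid multiplied by the number of CV folds."""
    return prod(len(values) for values in param_grid.values()) * k_folds


# Assumption: two made-up grids, one small and one with six hyperparameters
# that each have ten candidate values.
small_grid = {"max_depth": [2, 4, 6], "min_samples_split": [2, 5]}
large_grid = {f"hyperparameter_{i}": list(range(10)) for i in range(6)}

print(number_of_fits(small_grid, k_folds=5))  # 3 * 2 * 5 = 30
print(number_of_fits(large_grid, k_folds=5))  # 10**6 * 5 = 5,000,000
```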

Conclusion

- In this article, we learned about Grid Search, a technique that enables us to identify the best set of hyperparameters among the values we try, which improves the accuracy and lowers the error of our machine learning models.

- We also learned why we need the Grid Search to find the perfect hyperparameters for our Machine Learning model.

- Apart from this, we also learned about the three ideas behind Grid Search: the search, the grid, and cross-validation.

- Finally, we looked at the limitations that Grid Search presents.