Validation Using Forward Chaining

Overview

Machine learning can identify underlying cycles and patterns in a dataset in addition to forecasting values. Using time-series analysis, we can forecast quantities such as stock prices, fashion trends, and home values.

In this article, we discuss cross-validation and forward chaining, two underrated techniques in time-series analysis.

Pre-requisites

For maximum benefit from this article, the reader should:

- Be aware of the basics of time-series analysis, as we won't cover them in depth.

- Ideally know the basics of Python.

Introduction

Time series analysis is a way of examining a sequence of data points collected over an interval of time. Instead of capturing data points intermittently or arbitrarily, analysts record them at regular intervals over a predetermined length of time.

Time series analysis is valuable to organizations that want to understand the underlying causes of trends or systemic patterns over time.

In this article, we will look at two techniques that help us get better at time series analysis: cross-validation and forward chaining.

What is Cross-Validation?

Cross-validation (CV) is a well-known method for fine-tuning hyperparameters and obtaining accurate measures of model performance. It helps us select and fine-tune our hyperparameters, which in turn helps us create better and more robust models.

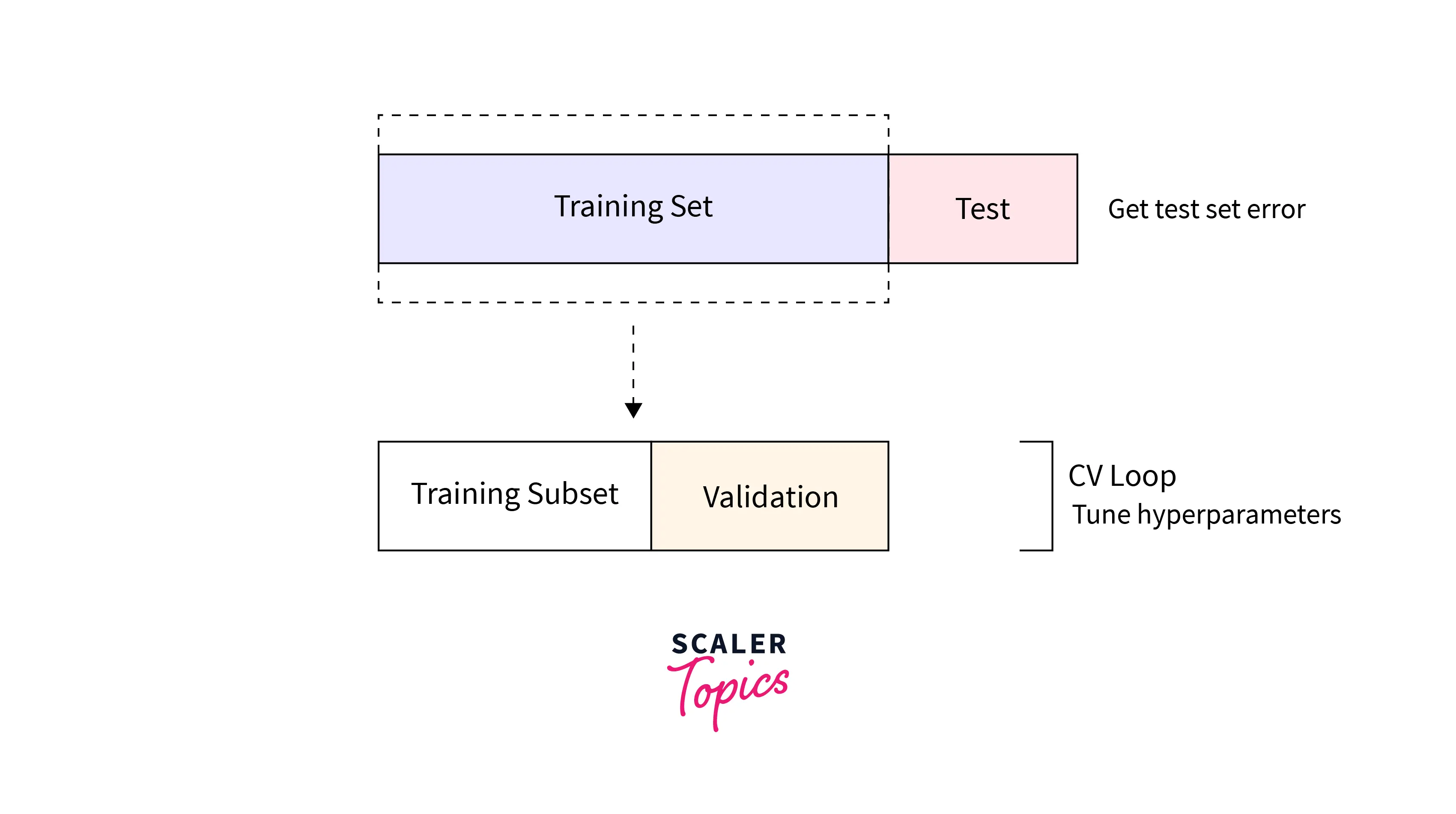

The dataset is first divided into two subsets: the training set and the test set. If any parameters need to be fine-tuned, we further divide the training set into a training subset and a validation set. The model is trained on the training subset, and the parameters that minimize error on the validation set are selected. The model is then trained on the entire training set using the selected parameters, and the error on the test set is recorded.
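The procedure above can be sketched in a few lines of plain Python. This is only an illustration under stated assumptions: the toy data, the one-parameter model `fit_slope`, and the candidate values for its hyperparameter `lam` are all invented for the example and do not come from any particular library.

```python
# Toy data (illustrative values only): y is roughly 2 * x with small deviations.
xs = list(range(1, 21))
noise = [0.3, -0.2, 0.1, -0.4, 0.2] * 4
ys = [2 * x + e for x, e in zip(xs, noise)]

def fit_slope(xs, ys, lam):
    """Fit y ~ slope * x with a ridge-style penalty; lam is the hyperparameter."""
    return sum(x * y for x, y in zip(xs, ys)) / (sum(x * x for x in xs) + lam)

def mae(slope, xs, ys):
    """Mean absolute error of the fitted line on the given points."""
    return sum(abs(y - slope * x) for x, y in zip(xs, ys)) / len(xs)

# 1. Split the dataset into a training set and a test set.
train_x, test_x = xs[:16], xs[16:]
train_y, test_y = ys[:16], ys[16:]

# 2. Split the training set into a training subset and a validation set.
sub_x, val_x = train_x[:12], train_x[12:]
sub_y, val_y = train_y[:12], train_y[12:]

# 3. Train on the subset and keep the hyperparameter with the lowest validation error.
candidates = [0.0, 1.0, 10.0, 100.0]
val_errors = {lam: mae(fit_slope(sub_x, sub_y, lam), val_x, val_y) for lam in candidates}
best_lam = min(val_errors, key=val_errors.get)

# 4. Retrain on the entire training set and record the error on the test set.
final_slope = fit_slope(train_x, train_y, best_lam)
test_error = mae(final_slope, test_x, test_y)
print(best_lam, round(test_error, 3))
```

The test set is touched only once, at the very end, so it plays no part in choosing `lam`.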

Why is Cross-Validation Different for Time Series?

People often apply standard cross-validation to time series data without any changes. This is a mistake, because time series data does not behave like the independent observations that standard cross-validation assumes. Here are the reasons why time series needs different treatment:

- Temporal Dependencies

Data leakage must be avoided when splitting time series data, so special care is required. The forecaster must withhold any information about events that occur chronologically after the events used for model fitting in order to accurately imitate the "actual world forecasting context, in which we stand in the present and forecast the future" (Tashman, 2000). So, for time series data, we use hold-out cross-validation instead of k-fold cross-validation: a portion of the data (split temporally) is set aside for verifying model performance.
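A temporal hold-out split can be sketched as follows. The helper name `temporal_holdout` and the 20% test fraction are assumptions made for this example; the essential point is that the split happens along the time axis, with no shuffling.

```python
from datetime import date, timedelta

def temporal_holdout(series, test_fraction=0.2):
    """Split (timestamp, value) pairs so that every test point is later
    than every training point. Hypothetical helper for illustration."""
    ordered = sorted(series, key=lambda pair: pair[0])
    n_test = round(len(ordered) * test_fraction)
    split = len(ordered) - n_test
    return ordered[:split], ordered[split:]

# 100 daily observations with placeholder values.
start = date(2020, 1, 1)
series = [(start + timedelta(days=i), float(i)) for i in range(100)]

train, test = temporal_holdout(series)
print(train[-1][0], test[0][0])  # last training day, first test day
```

Because the model never sees anything dated after the last training point, the evaluation mimics forecasting the future from the present.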

- Arbitrary Choice of Test Set

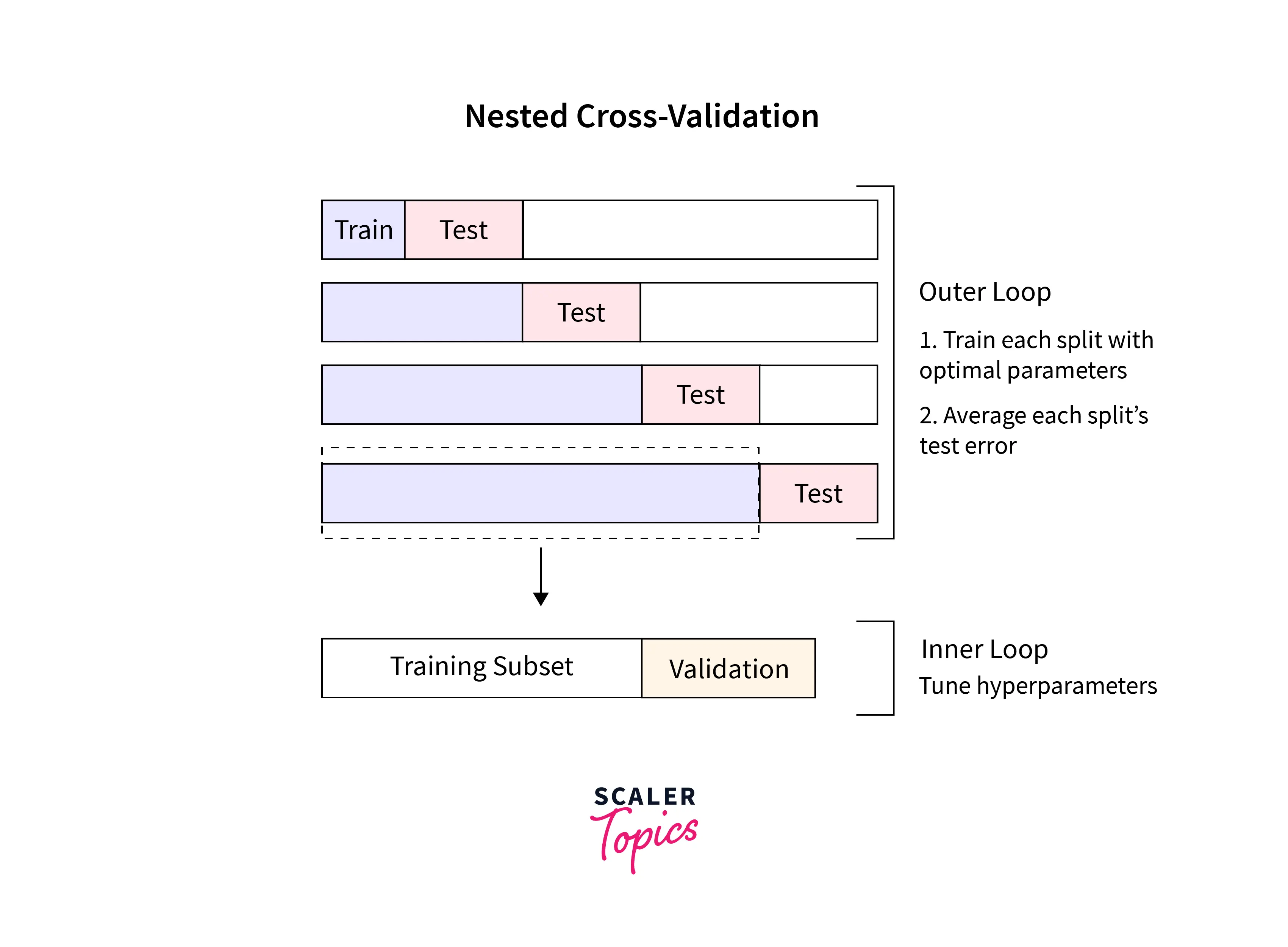

You may have noticed that the test set used for cross-validation was chosen quite arbitrarily. As a result, our test set error may not be an accurate representation of the error on an independent test set. We use a technique called nested cross-validation to address this. Nested CV has an inner loop for parameter tuning and an outer loop for error estimation. The inner loop operates exactly as described earlier: the training set is divided into a training subset and a validation set, the model is trained on the training subset, and the parameters that minimize error on the validation set are chosen.

However, now we add an outer loop that splits the dataset into multiple different training and test sets, and the error on each split is averaged to compute a robust estimate of model error. Here's an image depicting Nested CV (Cross Validation).
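The two loops can be sketched in plain Python as below. Everything here is an assumption made for illustration: the toy data, the one-parameter model `fit_slope`, its hyperparameter `lam`, and the choice of four contiguous outer blocks. The outer splits in this sketch ignore time order, so it shows generic nested CV rather than a variant that is safe for time series.

```python
# Toy data (illustrative values only): y is roughly 2 * x with small deviations.
xs = list(range(1, 21))
ys = [2 * x + e for x, e in zip(xs, [0.3, -0.2, 0.1, -0.4, 0.2] * 4)]

def fit_slope(xs, ys, lam):
    """Fit y ~ slope * x with a ridge-style penalty; lam is the hyperparameter."""
    return sum(x * y for x, y in zip(xs, ys)) / (sum(x * x for x in xs) + lam)

def mae(slope, xs, ys):
    return sum(abs(y - slope * x) for x, y in zip(xs, ys)) / len(xs)

def tune(xs, ys, candidates, val_size):
    """Inner loop: pick lam by error on the last val_size training points."""
    sub_x, val_x = xs[:-val_size], xs[-val_size:]
    sub_y, val_y = ys[:-val_size], ys[-val_size:]
    return min(candidates, key=lambda lam: mae(fit_slope(sub_x, sub_y, lam), val_x, val_y))

def nested_cv(xs, ys, candidates, n_outer=4, val_size=3):
    """Outer loop: each block serves once as the test set; errors are averaged."""
    fold = len(xs) // n_outer
    errors = []
    for k in range(n_outer):
        lo, hi = k * fold, (k + 1) * fold
        test_x, test_y = xs[lo:hi], ys[lo:hi]
        train_x, train_y = xs[:lo] + xs[hi:], ys[:lo] + ys[hi:]
        best_lam = tune(train_x, train_y, candidates, val_size)
        slope = fit_slope(train_x, train_y, best_lam)  # retrain on the full training set
        errors.append(mae(slope, test_x, test_y))
    return sum(errors) / len(errors), errors

average_error, fold_errors = nested_cv(xs, ys, candidates=[0.0, 1.0, 10.0, 100.0])
print(round(average_error, 3), [round(e, 3) for e in fold_errors])
```

Averaging over several outer splits makes the error estimate less dependent on any single, arbitrarily chosen test set.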

What is Forward Chaining?

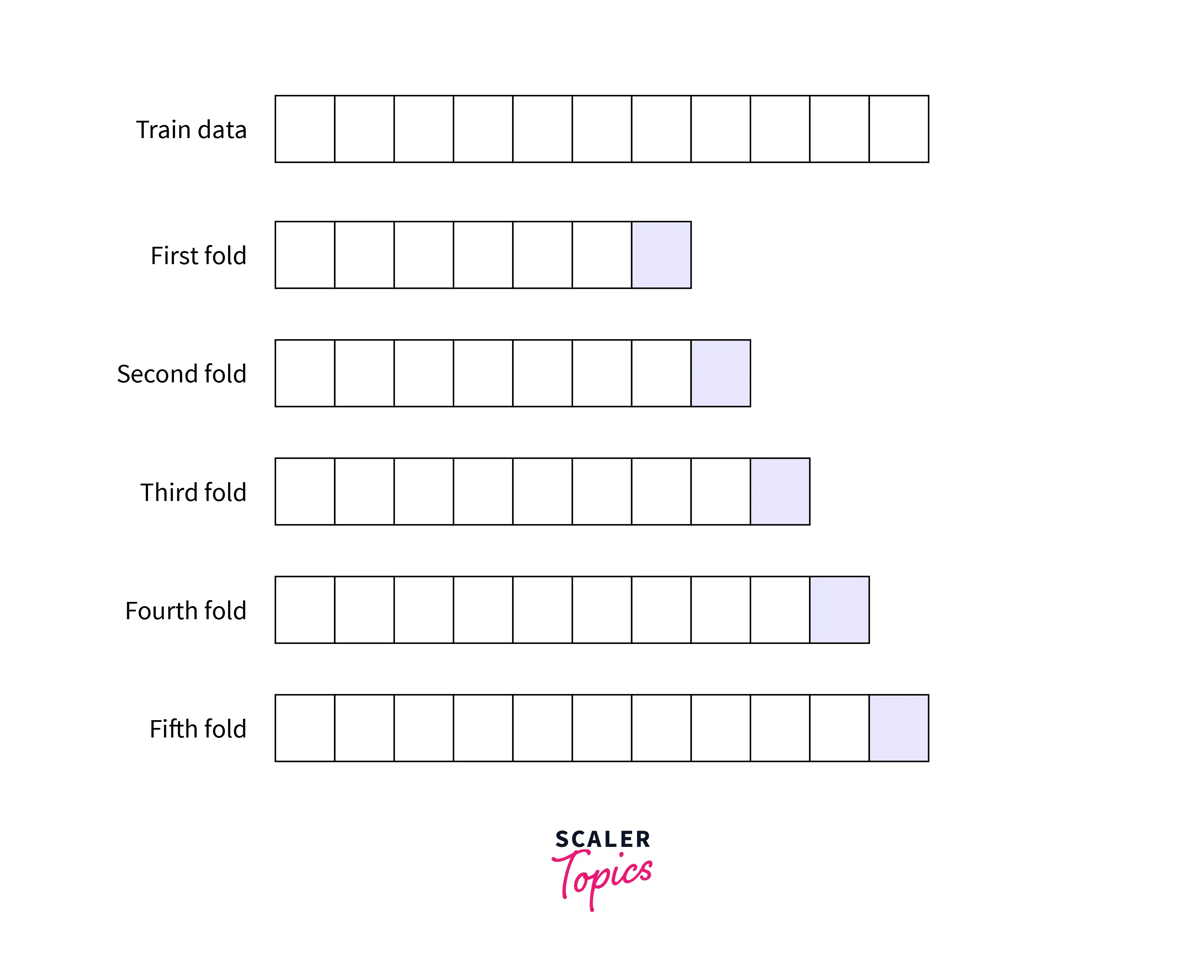

Forward-chaining cross-validation, also known as rolling-origin cross-validation, resembles k-fold cross-validation but is better suited to sequential data such as time series. The data is not shuffled at the start, and a test batch may be set aside. For example, if each fold represents 10% of your data (as it would in 10-fold cross-validation), then your test set is the last 10% of your data range.

With the remaining data, you first choose how many folds to train on initially (say, five folds), then evaluate the model on the sixth fold and record that performance statistic. Next, you retrain on the first six folds and evaluate on the seventh. You continue until all folds have been used, then average the recorded performance measures. This would look something like this:
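A minimal sketch of this scheme in plain Python follows. The generator name `forward_chaining_splits`, the placeholder series, and the naive "repeat the last training value" forecast are assumptions chosen to keep the example self-contained; any real model could take the forecast's place.

```python
def forward_chaining_splits(n_samples, n_folds=10, initial_folds=5, holdout_folds=1):
    """Yield (train_indices, eval_indices) pairs in time order.
    The last holdout_folds folds are reserved as the final test set."""
    fold = n_samples // n_folds
    for k in range(initial_folds, n_folds - holdout_folds):
        yield range(0, k * fold), range(k * fold, (k + 1) * fold)

# Placeholder series: 100 points following a steady upward trend.
values = [float(i) for i in range(100)]

scores = []
for train_idx, eval_idx in forward_chaining_splits(len(values)):
    forecast = values[train_idx[-1]]  # naive model: repeat the last training value
    errors = [abs(values[i] - forecast) for i in eval_idx]
    scores.append(sum(errors) / len(errors))

average_score = sum(scores) / len(scores)
print(len(scores), average_score)  # 4 splits; mean absolute error 5.5 on this toy series
```

The training window only ever grows forward in time, and each evaluation fold lies strictly after the data used for training.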

How to Perform Validation Using Forward Chaining?

One way to perform validation using forward chaining in Python is the prophet library. Prophet is a forecasting method implemented in R and Python. It is fast and produces fully automated forecasts that data scientists and analysts can adjust manually.

Explanation

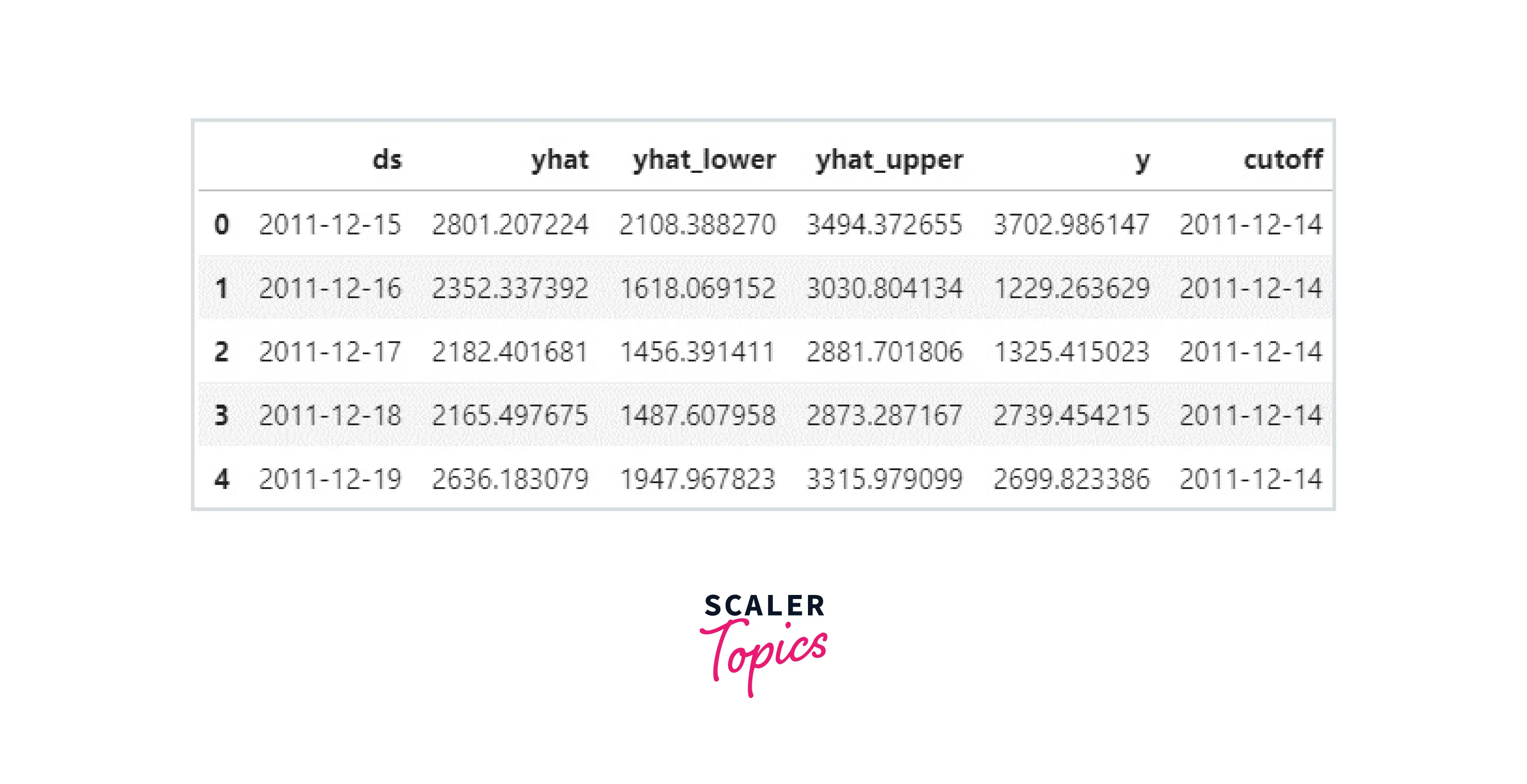

The cross_validation function takes two required arguments: the fitted model and horizon. The period and initial arguments are optional. If left at their defaults, period is half of the horizon and initial is three times the horizon. The function returns the cross-validation DataFrame, which we call df_validation.

We begin training with an initial period of 2 years, written as '730 days'. We set horizon='90 days' to evaluate each forecast over a 90-day forecast horizon. Finally, we set period='30 days', so the model is retrained and re-evaluated every 30 days. This results in a total of 10 forecasts to compare with the final year of data.

If we inspect our newly created df_validation DataFrame, it looks like this:
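The settings above can be sketched in code. Two assumptions are worth stating: the dataset is taken to be three years of daily observations (which is consistent with "2 years of initial training plus a final year"), and the cutoffs are assumed to be placed by stepping back from the last date minus the horizon in steps of period, for as long as at least initial days of training data remain. The helper `expected_cutoffs` and the wrapper `run_prophet_cv` are hypothetical names for this example; the wrapper requires the prophet package and a DataFrame with `ds` and `y` columns, and is defined here without being called.

```python
def expected_cutoffs(n_days, initial, horizon, period):
    """Day offsets (0 = first observation) of the cutoffs under the
    stated assumptions; all arguments are counted in days."""
    cutoff = (n_days - 1) - horizon  # latest cutoff that still leaves a full horizon
    cutoffs = []
    while cutoff >= initial:         # keep at least `initial` days for training
        cutoffs.append(cutoff)
        cutoff -= period
    return sorted(cutoffs)

cutoffs = expected_cutoffs(3 * 365, initial=730, horizon=90, period=30)
print(len(cutoffs))  # 10

def run_prophet_cv(df):
    """Fit Prophet and run forward-chaining cross-validation.
    df: pandas DataFrame with columns 'ds' (dates) and 'y' (values)."""
    from prophet import Prophet
    from prophet.diagnostics import cross_validation

    model = Prophet()
    model.fit(df)
    return cross_validation(
        model,
        initial='730 days',  # first training window
        period='30 days',    # spacing between successive cutoffs
        horizon='90 days',   # length of each forecast
    )
```

With a real dataset, `df_validation = run_prophet_cv(df)` would produce the DataFrame discussed in this section.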

Output

As we can see, the cutoff column in df_validation comes from the forward chaining technique: it marks the last date of training data used for each forecast.
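Since each cutoff corresponds to one forward-chaining fold, the error can be computed per cutoff and then averaged. The sketch below uses plain Python on a list of row dictionaries so that it runs without any dependencies; the rows are made-up placeholders, not real model output, and `mae_per_cutoff` is a hypothetical helper. With a real result, the rows could be obtained via `df_validation.to_dict('records')`.

```python
from collections import defaultdict

def mae_per_cutoff(rows):
    """Group rows by 'cutoff' and return the mean absolute error of
    'yhat' against 'y' for each group."""
    grouped = defaultdict(list)
    for row in rows:
        grouped[row['cutoff']].append(abs(row['y'] - row['yhat']))
    return {cutoff: sum(errs) / len(errs) for cutoff, errs in grouped.items()}

# Placeholder rows mimicking the 'cutoff', 'y' and 'yhat' columns (invented numbers).
rows = [
    {'cutoff': '2019-01-01', 'y': 10.0, 'yhat': 9.0},
    {'cutoff': '2019-01-01', 'y': 12.0, 'yhat': 13.0},
    {'cutoff': '2019-01-31', 'y': 11.0, 'yhat': 11.5},
    {'cutoff': '2019-01-31', 'y': 14.0, 'yhat': 13.5},
]

per_cutoff = mae_per_cutoff(rows)
overall = sum(per_cutoff.values()) / len(per_cutoff)
print(per_cutoff, overall)
```

Prophet also ships a `performance_metrics` function in `prophet.diagnostics` that summarizes errors from the cross-validation DataFrame.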

Conclusion

- In this article, we talked about cross-validation, a model evaluation technique that helps us fine-tune hyperparameters, which in turn helps us create better and more robust models.

- We also explained why standard cross-validation must be adapted for time series data.

- To conclude, we looked at a Python implementation of the forward chaining method for cross-validation.