Memory Management in Operating Systems

What is Main Memory?

Main memory, commonly referred to as RAM (Random Access Memory), is the computer's primary temporary storage for actively processed data. Unlike permanent storage such as hard drives, RAM is volatile and loses its contents when the computer powers down. It is organized into addressable cells, each typically holding one byte. Efficient memory management, involving allocation and deallocation, is essential for optimal performance. The amount of RAM directly affects how many programs can run at once and how large they can be, making it a critical factor in overall system performance.

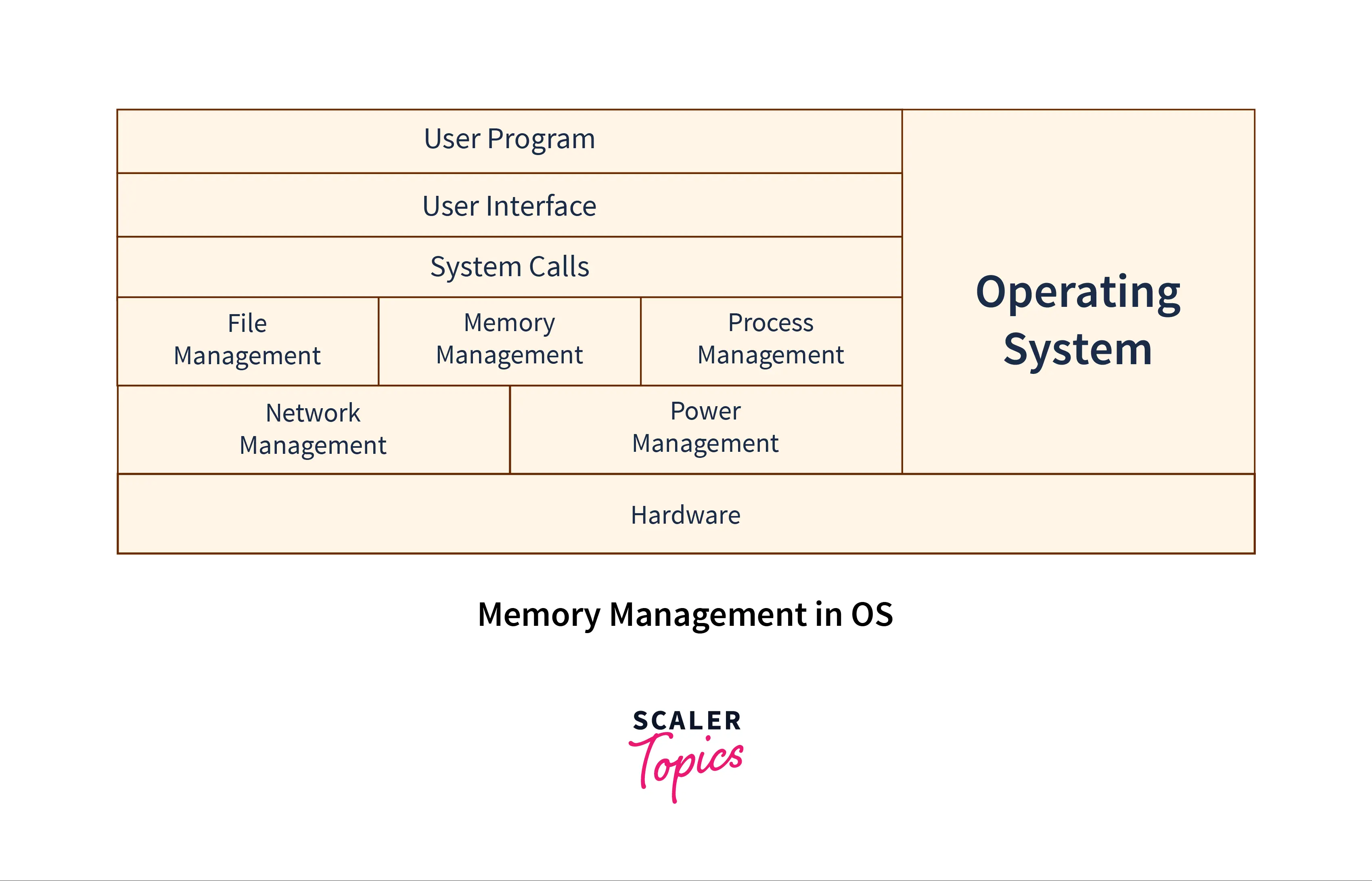

What is Memory Management in an Operating System?

Memory management in an OS is the set of techniques for controlling and coordinating the use of random access memory (primary memory). It is used to achieve better concurrency, system performance, and memory utilization.

Memory management moves processes between primary memory and secondary memory. It also keeps track of which memory is allocated and which is free.

Why use Memory Management in OS?

- Memory management keeps track of the status of each memory location, whether it is allocated or free.

- Memory management enables computer systems to run programs that require more main memory than the amount of free main memory available on the system. This is achieved by moving data between primary and secondary memory.

- Memory management abstracts the system's primary memory so that each program running on the system perceives a large block of memory allocated to it.

- It is the job of memory management to protect the memory allocated to all the processes from being corrupted by other processes. If this is not done, the computer may exhibit unexpected/faulty behavior.

- Memory management enables processes to share memory spaces, so that multiple programs can use the same memory location (although only one at a time).

Logical Address Space and Physical Address Space

In memory management, it is important to distinguish between logical address space and physical address space.

Logical Address Space is the range of addresses that a CPU can generate for a program. This is the perspective from which a program "sees" its memory. For instance, on a computer with 32-bit addresses and 4 GB of RAM, the logical address space spans from 0 to 2^32 - 1 (about 4 billion addresses). Its size is set by the width of the addresses, not by the amount of RAM installed, so this space is not necessarily backed entirely by physical memory. Instead, it serves as a convenient, abstract representation.

Physical Address Space, on the other hand, is the set of actual locations in the memory hardware where data is stored. A physical address is the tangible address of a storage cell in RAM. In our previous example, if the RAM modules consist of 4 billion cells, the physical address space would correspond to each of these individual cells.

The Memory Management Unit (MMU) plays a pivotal role in this interplay. It acts as an intermediary, translating logical addresses to physical addresses. This enables programs to operate in a seemingly large logical address space, while efficiently utilizing the available physical memory.

Example: Consider a scenario where a program attempts to access memory address 'x' in its logical address space. The MMU translates this to the corresponding physical address 'y' and retrieves the data from the actual RAM location. This abstraction allows for efficient multitasking and memory allocation.
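The translation from 'x' to 'y' can be sketched with one of the simplest schemes, a base (relocation) register plus a limit register. This is a minimal illustration, not how any particular MMU is built; the class name `SimpleMMU`, the exception `AddressError`, and the numbers used are assumptions made for the example.

```python
class AddressError(Exception):
    """Raised when a logical address falls outside the process's space."""


class SimpleMMU:
    """Toy MMU using a base (relocation) register and a limit register."""

    def __init__(self, base, limit):
        self.base = base    # physical address where the process starts
        self.limit = limit  # size of the process's logical address space

    def translate(self, logical_address):
        # Protection check: reject addresses outside the logical address space.
        if not 0 <= logical_address < self.limit:
            raise AddressError(f"logical address {logical_address} out of range")
        # Physical address y = base + logical address x.
        return self.base + logical_address


mmu = SimpleMMU(base=14000, limit=3000)
print(mmu.translate(346))  # 14346
```

The limit check is also what keeps one process from reaching into memory that belongs to another.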

Static and Dynamic Loading

Static Loading involves loading all the necessary program components into the main memory before the program's execution begins. This means that both the executable code and data are loaded into predetermined memory locations. This allocation is fixed and does not change during the program's execution. While it ensures direct access to all required resources, it may lead to inefficiencies in memory usage, especially if the program doesn't utilize all the loaded components.

Dynamic Loading, on the other hand, takes a more flexible approach. In this scheme, a program's components are loaded into the main memory only when they are specifically requested during execution. This results in a more efficient use of memory resources as only the necessary components occupy space. Dynamic loading is particularly advantageous for programs with extensive libraries or functionalities that may not be used in every session.
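As a rough analogy in Python (not a model of an operating system's loader), a module can be imported only when the code path that needs it actually runs. The helper names `load_on_demand` and `export_report` are hypothetical; `importlib.import_module` is the standard-library call doing the work.

```python
import importlib


def load_on_demand(module_name):
    """Import a module only when it is first requested (hypothetical helper)."""
    return importlib.import_module(module_name)


def export_report(data, as_json=False):
    """Render a report; the JSON component is loaded only if it is needed."""
    if as_json:
        json = load_on_demand("json")  # loaded here, not at program start
        return json.dumps(data, sort_keys=True)
    return ", ".join(f"{key}={value}" for key, value in sorted(data.items()))


print(export_report({"pages": 3, "frames": 8}))                # frames=8, pages=3
print(export_report({"pages": 3, "frames": 8}, as_json=True))  # {"frames": 8, "pages": 3}
```

A run that never asks for JSON output never pays for loading that component, which is the memory saving dynamic loading aims for.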

Static and Dynamic Linking

Static Linking involves incorporating all necessary libraries and modules into the final executable at link time, when the program is built. This means that the code from libraries is copied into the final executable file. The result is a self-contained executable that doesn't rely on external libraries during runtime. While this makes the program easier to distribute, since it does not depend on the libraries installed on the target system, it can lead to larger file sizes and potential redundancy if multiple programs use the same libraries.

Dynamic Linking, on the other hand, defers this step. In this method, the necessary libraries are not included in the final executable. Instead, the program links to the required libraries at runtime. This means that multiple programs can share a single copy of a library, reducing redundancy and conserving memory. However, it does introduce a dependency on the availability of the required libraries at runtime.

Example: Consider a scenario where multiple programs use a common math library. With static linking, each program would contain its own copy of the library, potentially leading to larger file sizes. With dynamic linking, all programs can use the same shared instance of the library, saving disk space and memory.

Swapping

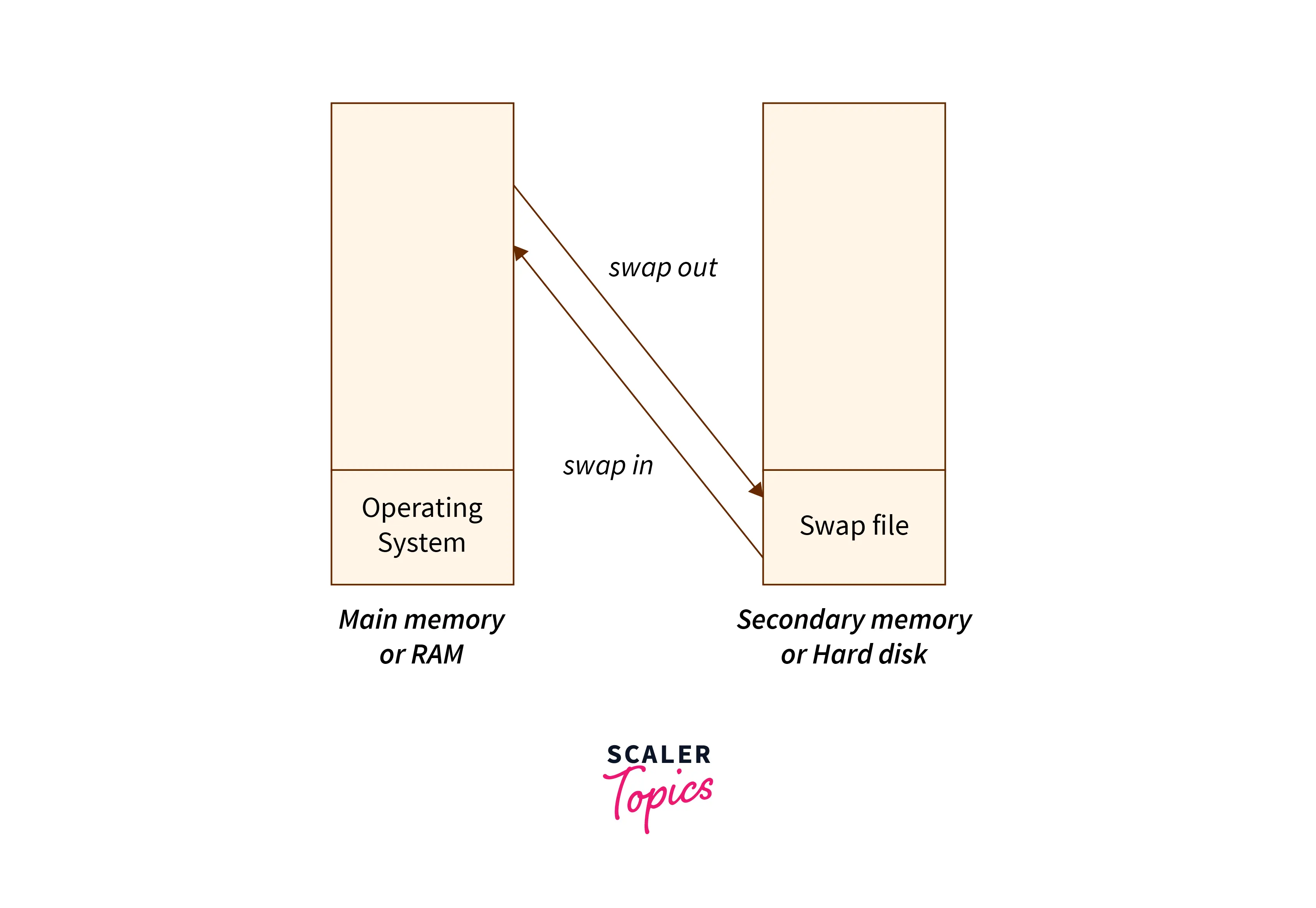

Swapping is a technique used in an operating system for efficient management of the memory of the computer system. Swapping includes two tasks, swapping in and swapping out. Swapping in means bringing blocks or pages of data from secondary memory into primary memory. Swapping out means moving blocks or pages of data from main memory to secondary memory (for example, a disk). Swapping is useful when a large program has to be executed or some operation has to be performed on a large file.
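The two tasks can be sketched with a toy simulator that moves whole processes between RAM and a backing store. The class `SwapSimulator`, the sizes, and the choice to evict the oldest resident process first are all assumptions made for illustration; real systems use more elaborate policies.

```python
class SwapSimulator:
    """Toy model of swapping whole processes between RAM and a backing store."""

    def __init__(self, ram_capacity):
        self.ram_capacity = ram_capacity
        self.ram = {}            # resident processes: name -> size
        self.backing_store = {}  # swapped-out processes: name -> size

    def used(self):
        return sum(self.ram.values())

    def swap_out(self, name):
        # Move a process from main memory to secondary memory.
        self.backing_store[name] = self.ram.pop(name)

    def swap_in(self, name, size):
        # Bring a process into main memory, evicting the oldest residents if needed.
        if size > self.ram_capacity:
            raise ValueError(f"{name} can never fit in RAM")
        self.backing_store.pop(name, None)
        while self.used() + size > self.ram_capacity:
            oldest = next(iter(self.ram))  # dicts preserve insertion order
            self.swap_out(oldest)
        self.ram[name] = size


sim = SwapSimulator(ram_capacity=100)
sim.swap_in("A", 40)
sim.swap_in("B", 50)
sim.swap_in("C", 30)              # does not fit, so A is swapped out first
print(sorted(sim.ram))            # ['B', 'C']
print(sorted(sim.backing_store))  # ['A']
```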

Contiguous Memory Allocation

Contiguous memory allocation is a memory management technique in which each process is allocated a single contiguous block of main memory large enough for everything it needs to execute. This means that the process is loaded into one continuous chunk of memory. While it's straightforward and efficient in terms of execution, it can lead to issues with fragmentation, where smaller blocks of memory remain unused between allocated processes.

Memory Allocation

Memory allocation is the process of reserving a portion of the computer's memory for a specific application or program. It's a crucial aspect of memory management, ensuring that each running process has enough space to execute efficiently. Effective memory allocation strategies are essential for optimizing system performance.

First Fit

First Fit is a memory allocation algorithm that allocates the first available block of memory that is large enough to accommodate a process. It scans the memory from the beginning and selects the first block that meets the size requirements. While it is relatively simple to implement, it can lead to fragmentation over time.
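A minimal sketch of First Fit, assuming free memory is represented as a plain list of block sizes (the function name `first_fit` and the sample sizes are illustrative):

```python
def first_fit(free_blocks, request):
    """Return the index of the first free block that can hold `request`, or None."""
    for index, size in enumerate(free_blocks):
        if size >= request:
            return index  # stop at the first block that is large enough
    return None


free_blocks = [100, 500, 200, 300, 600]
print(first_fit(free_blocks, 212))  # 1 (the 500-unit block)
```

The scan stops as soon as a fit is found, which is why First Fit is usually the fastest of the three strategies.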

Best Fit

The Best Fit algorithm searches the entire memory space to find the smallest block that can accommodate a process. This helps in minimizing wastage of memory, as it selects the smallest available block that fits. However, it has to examine every free block, and the small leftover pieces it creates are often too small to be reused, which can leave memory more fragmented than other allocation strategies.
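A minimal sketch of Best Fit, assuming free memory is represented as a plain list of block sizes (the function name `best_fit` and the sample sizes are illustrative):

```python
def best_fit(free_blocks, request):
    """Return the index of the smallest free block that can hold `request`, or None."""
    best_index = None
    for index, size in enumerate(free_blocks):
        # Keep the block only if it fits and is smaller than the best seen so far.
        if size >= request and (best_index is None or size < free_blocks[best_index]):
            best_index = index
    return best_index


free_blocks = [100, 500, 200, 300, 600]
chosen = best_fit(free_blocks, 212)
print(chosen)                     # 3 (the 300-unit block)
print(free_blocks[chosen] - 212)  # 88 units left over
```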

Worst Fit

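Worst Fit, as the name implies, allocates the largest available block of memory to a process. The idea is that the portion left over from a large block is more likely to be big enough to be useful for another process. However, this approach quickly breaks up the largest blocks, so later requests from larger processes may fail, and it can result in more fragmentation compared to First Fit or Best Fit strategies.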
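A minimal sketch of Worst Fit, assuming free memory is represented as a plain list of block sizes (the function name `worst_fit` and the sample sizes are illustrative):

```python
def worst_fit(free_blocks, request):
    """Return the index of the largest free block that can hold `request`, or None."""
    worst_index = None
    for index, size in enumerate(free_blocks):
        # Keep the block only if it fits and is larger than the largest seen so far.
        if size >= request and (worst_index is None or size > free_blocks[worst_index]):
            worst_index = index
    return worst_index


free_blocks = [100, 500, 200, 300, 600]
chosen = worst_fit(free_blocks, 212)
print(chosen)                     # 4 (the 600-unit block)
print(free_blocks[chosen] - 212)  # 388 units left over
```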

What is Fragmentation?

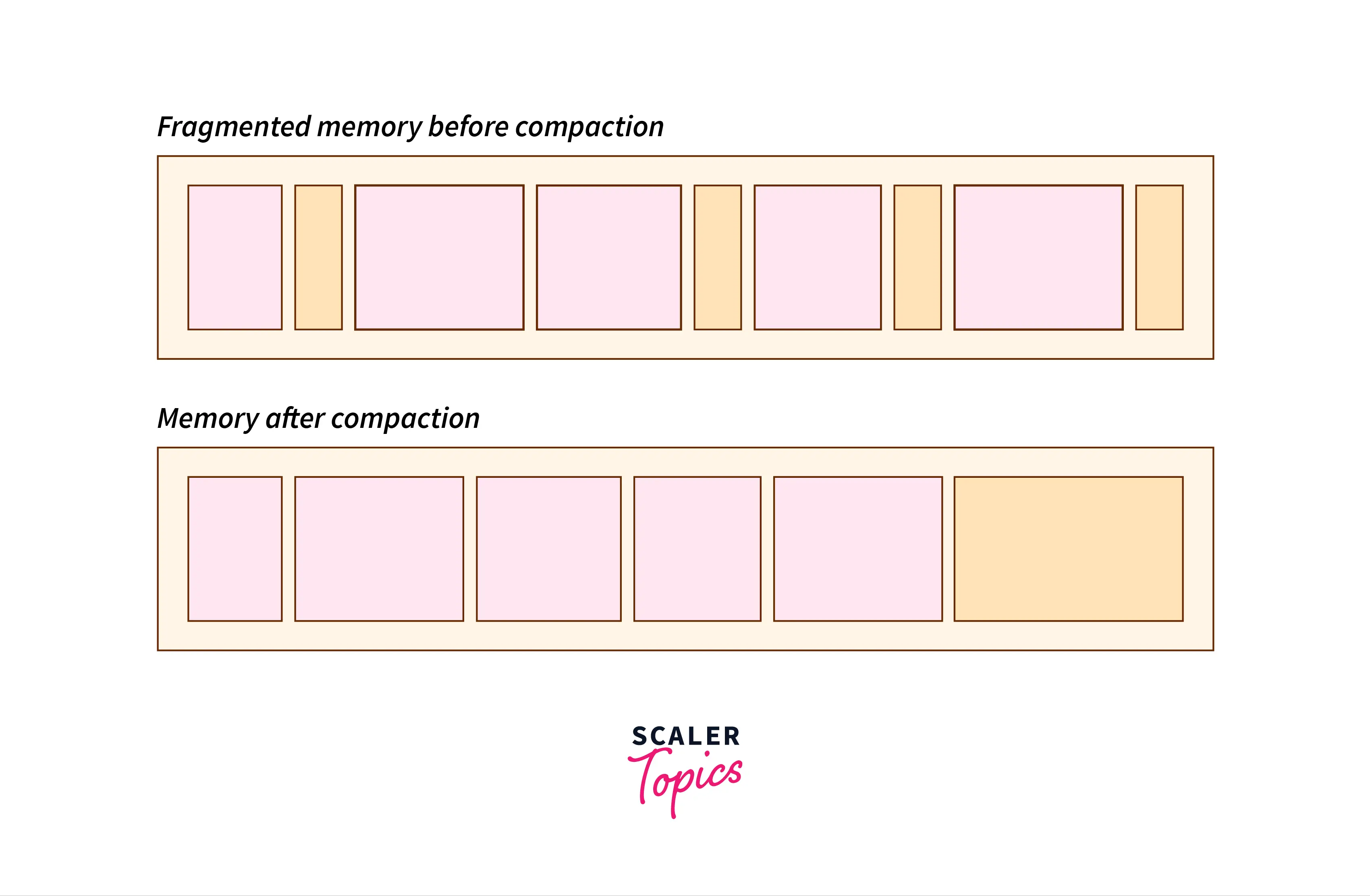

When processes are loaded into and removed from main memory, the available free space in primary memory is broken into smaller pieces. Over time, memory cannot be allocated to a process because each free piece is smaller than the amount of memory that the process requires. Such blocks of memory stay unused. This issue is called fragmentation.

Fragmentation is of the following two types:

1. External Fragmentation:

The total amount of free primary memory is sufficient to hold a process, but it cannot be used because it is non-contiguous. External fragmentation can be decreased by compaction, that is, shuffling data in memory so that all free memory blocks are arranged together to form one larger free memory block.

2. Internal Fragmentation:

Internal fragmentation occurs when the memory block assigned to the process is larger than the amount of memory required by the process. In such a situation, part of the block is left unutilized because it cannot be used by any other process. Internal fragmentation can be decreased by assigning the smallest partition of free memory that is large enough to hold the process.
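The two kinds of fragmentation come down to simple arithmetic, sketched below. The function names and the sample sizes are assumptions made for illustration.

```python
def internal_fragmentation(block_size, process_size):
    """Unused space inside a block that is allocated to a single process."""
    if process_size > block_size:
        raise ValueError("process does not fit in the block")
    return block_size - process_size


def is_externally_fragmented(free_holes, request):
    """True when total free memory suffices but no single hole is large enough."""
    return sum(free_holes) >= request and max(free_holes, default=0) < request


def compact(free_holes):
    """Compaction gathers all free holes into one contiguous hole."""
    return [sum(free_holes)]


print(internal_fragmentation(4096, 3000))      # 1096 bytes wasted inside the block

holes = [50, 30, 40]                           # 120 units free in total
print(is_externally_fragmented(holes, 100))    # True: the largest hole is only 50
print(is_externally_fragmented(compact(holes), 100))  # False: one hole of 120
```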

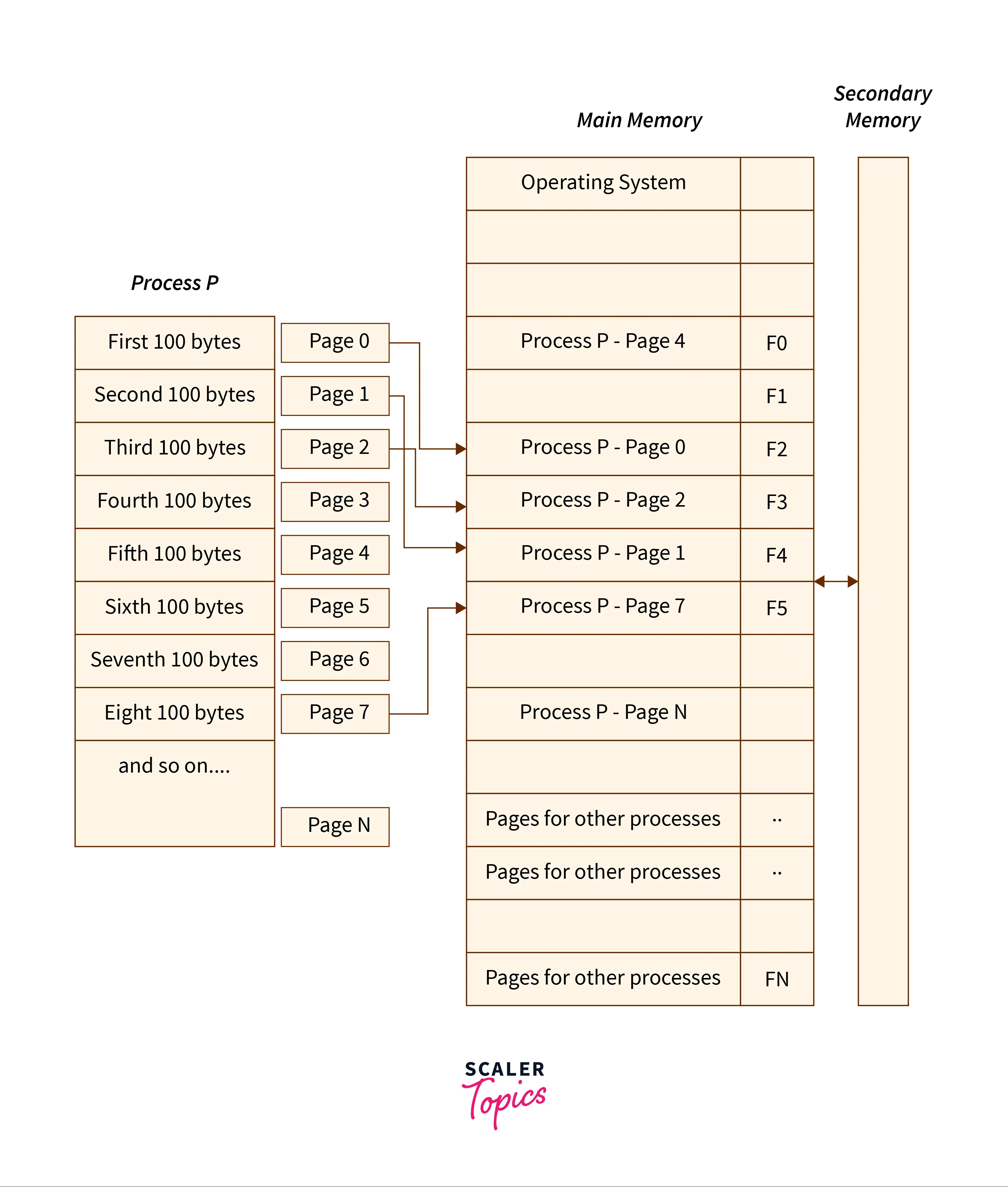

What is Paging?

A computer system can address and utilize more memory than the size of the memory present in the computer hardware. This extra memory is referred to as virtual memory. With virtual memory, part of secondary memory is used as an extension of primary memory. Paging has a vital role in the implementation of virtual memory.

The process address space is the set of logical addresses that a process refers to in its code. Paging is a technique of memory management in which the process address space is broken into blocks. All the blocks are of the same size and are referred to as “pages”. The size of a page is a power of 2, typically ranging from 512 bytes to 8192 bytes, with 4096 bytes being a common choice. The size of a process is measured in terms of the number of pages.

Main memory is similarly divided into blocks of fixed size. These blocks are known as “frames”, and the size of a frame is the same as that of a page to achieve optimum usage of the primary memory and to avoid external fragmentation.
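Address translation under paging can be sketched as follows. The page size of 4096 bytes, the page table contents, and the names `translate`, `pages_needed`, and `PageFault` are assumptions chosen for the example, not values from any specific system.

```python
PAGE_SIZE = 4096  # bytes; an assumed page size (a power of 2), equal to the frame size


class PageFault(Exception):
    """Raised when a page has no frame assigned in the page table."""


def translate(logical_address, page_table):
    """Map a logical address to a physical address through a page table."""
    # Split the logical address into a page number and an offset within the page.
    page_number, offset = divmod(logical_address, PAGE_SIZE)
    if page_number not in page_table:
        raise PageFault(f"page {page_number} is not in main memory")
    frame_number = page_table[page_number]
    # The offset is the same within the frame as it was within the page.
    return frame_number * PAGE_SIZE + offset


def pages_needed(process_size):
    """Number of pages a process of `process_size` bytes occupies (rounded up)."""
    return -(-process_size // PAGE_SIZE)


page_table = {0: 5, 1: 2, 2: 7}      # page number -> frame number
print(translate(4100, page_table))   # 8196: page 1, offset 4 -> frame 2
print(pages_needed(10000))           # 3
```

Because every page fits any frame, the frames assigned to a process do not need to be adjacent, which is how paging sidesteps external fragmentation. The last page of a process may still be only partly used.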

Following are some Advantages of Paging:

- Paging decreases external fragmentation.

- Paging is easy to implement.

- Paging adds to memory efficiency.

- Since the size of the frames is the same as that of pages, swapping becomes quite simple.

- Paging allows fast access to data.

FAQs

Q. What is Fragmentation and How Does it Affect Memory Allocation?

A. Fragmentation occurs when free memory is broken into small, non-contiguous blocks. It leads to inefficient memory usage: a request can fail even though enough memory is free in total (external fragmentation), or an allocated block can contain space that the process never uses (internal fragmentation).

Q. Can a Program Access Memory Outside its Allocated Space?

A. No. Memory protection mechanisms prevent programs from accessing memory locations that haven't been allocated to them. This ensures that programs don't interfere with each other.

Q. How Does Virtual Memory Enhance Memory Management?

A. Virtual memory allows programs to use more memory than is physically available by keeping only the parts currently needed in main memory and the rest in secondary memory. This makes it a significant factor in modern memory management systems.

Q. What is Thrashing in Memory Management?

A. Thrashing occurs when a computer's performance severely degrades due to excessive swapping of data between RAM and disk. This can be caused by an insufficient amount of physical memory for the tasks being performed.

Conclusion

- Memory management in OS is a technique of managing the functionality of primary memory, used for achieving better concurrency, system performance, and memory utilization.

- Memory management keeps track of the status of each memory location, whether it is allocated or free.

- Paging is a technique of memory management in which the process address space is broken into blocks. All the blocks are of the same size and are referred to as “pages”.

- Fragmentation is of two kinds, external and internal.

- Segmentation is the method of dividing a process's address space into multiple blocks. Each block is called a segment and has a specific length.

- Swapping includes two tasks, swapping in and swapping out.