Node.js Streams

Overview

Streams are objects that let developers read data from a source and write data to a destination continuously, piece by piece. In Node.js, a stream is an abstract interface for working with such continuous collections of data. Readable, writable, duplex, and transform are the four main types of streams. Every stream is an EventEmitter instance and emits events as data moves through it.

What are Streams?

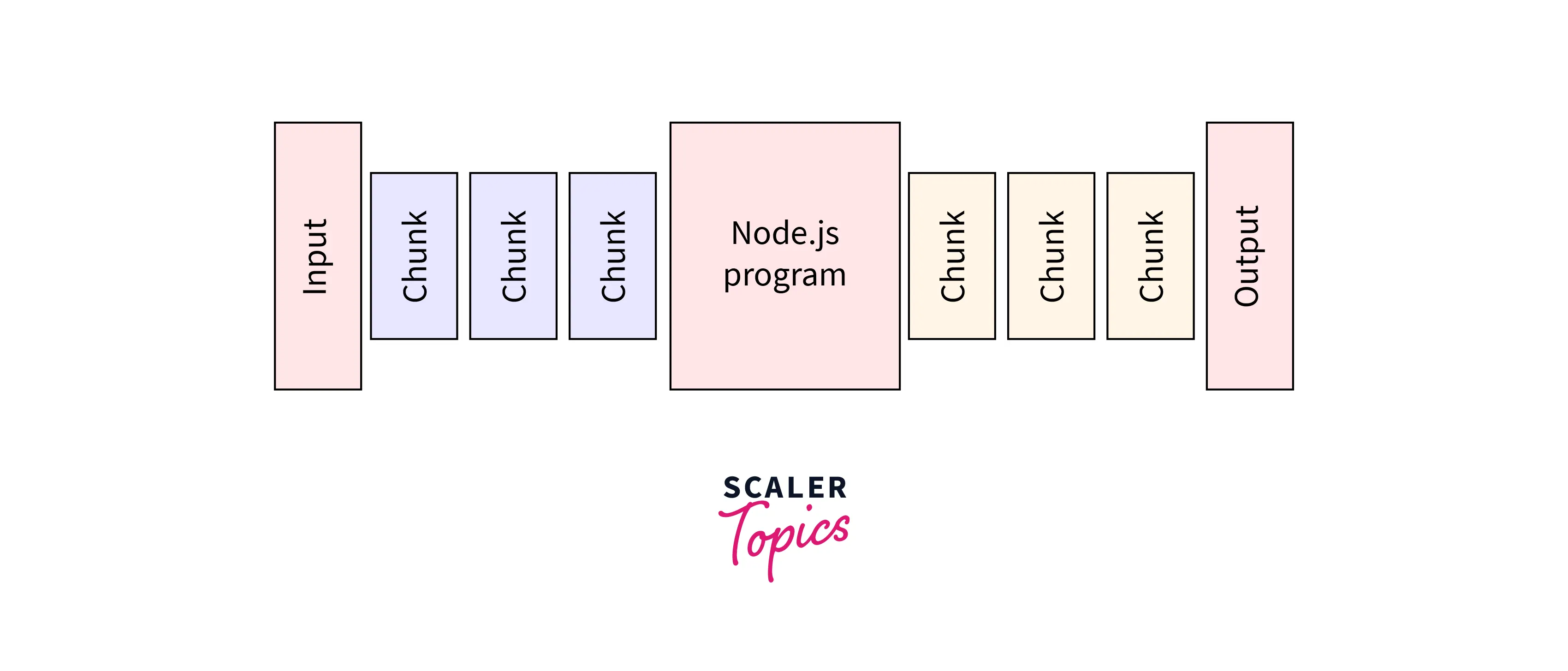

Streams are objects used to efficiently handle file reads and writes, network communication, or any kind of end-to-end information exchange. Streams are collections of data, just like arrays and strings. The difference is that the data in a stream may not be available all at once and does not need to fit in memory. Because of this, streams are well suited to Node.js applications that transfer large amounts of data.

One of the distinguishing features of streams is that, instead of reading all the data into memory at once, a stream reads and processes small pieces of data. In this way, you never have to hold and process the entire file at once.

Streams can be used to build real-world applications such as video streaming applications like YouTube and Netflix. Both offer a streaming service. With this service, you don't have to download the video or audio feed all at once, but you can watch the video and listen to the audio instantly. This is how the browser receives video and audio as a continuous stream of chunks. If these websites waited for the entire video and audio to download before playing, it could take a long time for a video to start.

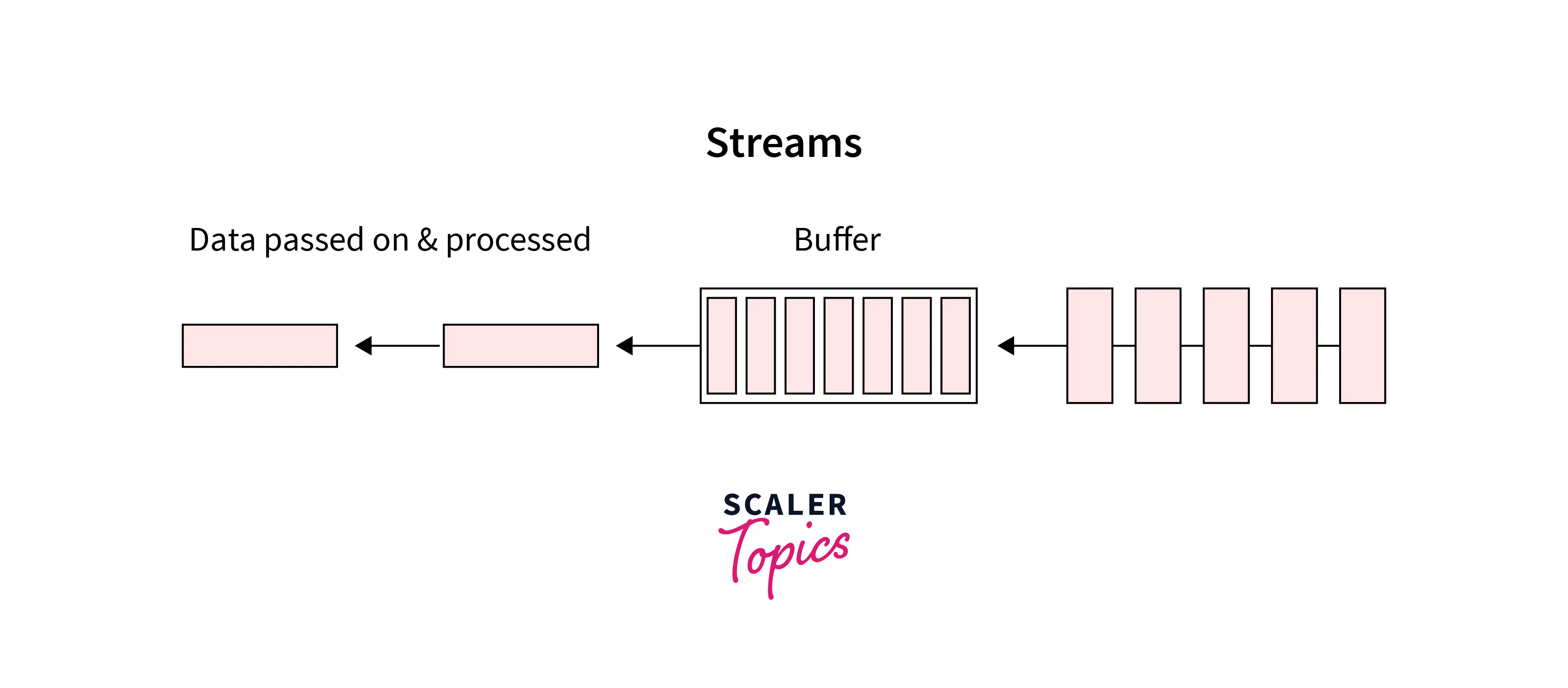

Streams work on a concept called a buffer. A buffer is temporary memory that holds data until the stream's consumer is ready for it.

For streams, the buffering threshold is determined by the highWaterMark option of the stream instance. highWaterMark is a number that represents a total number of bytes for ordinary streams, or a number of objects for streams in object mode. It is a threshold rather than a hard limit: once it is reached, the stream signals that the producer should slow down.

By default, streams operate on strings and Buffer objects. Streams can also carry arbitrary JavaScript values. To do this, set the objectMode option to true when the stream is created.
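As a minimal sketch of these two options, the snippet below creates one byte stream and one object-mode stream and prints the settings they report. The variable names and the chosen sizes are illustrative assumptions, not values from the article.

```javascript
const { Readable, Writable } = require('node:stream');

// A byte stream whose buffering threshold is 1 KiB
// (byteStream and objectStream are illustrative names).
const byteStream = new Writable({
  highWaterMark: 1024,
  write(chunk, encoding, callback) {
    callback();
  },
});

// In object mode, highWaterMark counts objects rather than bytes.
const objectStream = new Readable({
  objectMode: true,
  highWaterMark: 2,
  read() {},
});

console.log(byteStream.writableHighWaterMark); // 1024
console.log(byteStream.writableObjectMode); // false
console.log(objectStream.readableHighWaterMark); // 2
console.log(objectStream.readableObjectMode); // true
```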

Streams in Node.js can provide amazing benefits in terms of memory optimization and efficiency. This concept can seem a little confusing because you have to handle events, but it's very useful.

Advantages of Streams Over Other Data Handling Methods

The main advantages of using streams over other data handling methods are:

- Memory efficiency: Streams process the contents of a file one chunk at a time, rather than holding the entire file in memory at once. Because data is exchanged in small chunks, memory consumption is significantly reduced.

- Time efficiency: Streams in Node.js deliver data in chunks, and each chunk is processed separately. There is no need to wait for all of the data to arrive; you can start processing as soon as the first chunk is received.

- Composability: Streams can be piped together, so that the output of one piece of code becomes the input of the next. This makes it possible to build complex applications out of small, single-purpose parts.

- User experience: Thanks to streaming, YouTube videos start playing almost instantly. YouTube shows the content it already has while it downloads the rest of the video.

- Reduced bandwidth: You can show the initial results of a download to the user and stop early if the results are incorrect. Downloading an entire large file only to find out that it is the wrong file is a waste of bandwidth.

Accessing Streams

Stream is an abstract interface for working with data streams in Node.js. The node:stream module provides an API to implement the stream interface.

Node.js provides many stream objects. For example, a request to an HTTP server and process.stdout are both stream instances.

Streams can be readable, writable, or both. All streams are instances of EventEmitter.

To access the node:stream module:
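A minimal sketch of loading the module, assuming CommonJS (an ES module would use an import statement instead). The destructured names are the four base classes the module exports.

```javascript
// CommonJS form; ES modules can use `import stream from 'node:stream'` instead.
const stream = require('node:stream');

// The four base classes are exposed as properties of the module.
const { Readable, Writable, Duplex, Transform } = stream;

console.log(typeof Readable, typeof Writable, typeof Duplex, typeof Transform);
```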

The node:stream module helps create new types of stream instances. Usually, the node:stream module is not necessary to consume streams in Node.js.

Types of Streams in Node.js

There are four fundamental types of streams in Node.js:

- Readable: streams from which data can be read. A readable stream produces data for a consumer, but the consumer cannot write data to it. Data pushed into a readable stream is buffered until the consumer starts reading it. fs.createReadStream() returns a readable stream for the contents of a file. Examples: process.stdin, fs read streams, HTTP responses on the client, HTTP requests on the server, etc.

- Writable: streams to which data can be written. A writable stream accepts data, but data cannot be read back from it. fs.createWriteStream() returns a writable stream that writes to a file. Examples: HTTP requests on the client, HTTP responses on the server, fs write streams, process.stdout, process.stderr, etc.

- Duplex: streams that implement both the readable and the writable interface in a single object. The two sides are independent, and the stream does not itself transform the data that passes through it. Example: a TCP socket connection (net.Socket).

- Transform: duplex streams whose output is computed from their input, so data is converted as it is written and read. Examples: zlib streams that compress or decompress data, and crypto streams that encrypt or decrypt it.

Practical Example Demonstrating the Streams

Let's look at an example that shows how streams in Node.js can reduce memory consumption.

Consider a server that reads a file from disk with the Node.js fs module and serves it over HTTP every time a new connection is made to the HTTP server.

readFile() reads the entire content of the file and invokes a callback function after reading the data.

res.end(data) inside the callback returns the contents of the file to the HTTP client.
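The readFile() approach described above might look like the following sketch. The helper name createBufferedServer, the file name, and the port are hypothetical placeholders chosen for illustration.

```javascript
const fs = require('node:fs');
const http = require('node:http');

// Hypothetical helper: serves one file by buffering it fully with readFile().
function createBufferedServer(filePath) {
  return http.createServer((req, res) => {
    // The callback runs only after the whole file has been read into memory.
    fs.readFile(filePath, (err, data) => {
      if (err) {
        res.statusCode = 500;
        res.end('Could not read file');
        return;
      }
      res.end(data);
    });
  });
}

// Usage (file name and port are placeholders):
// createBufferedServer('./big-file.txt').listen(3000);
```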

If the file is large, the above method takes a long time and holds the whole file in memory for every request. To reduce the problem, let's write the same code using stream methods.
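A streaming version could be sketched as follows, using fs.createReadStream() and pipe(). As before, the helper name createStreamingServer, the file name, and the port are hypothetical.

```javascript
const fs = require('node:fs');
const http = require('node:http');

// Hypothetical helper: serves one file by streaming it chunk by chunk.
function createStreamingServer(filePath) {
  return http.createServer((req, res) => {
    const fileStream = fs.createReadStream(filePath);

    // pipe() does not forward errors, so handle a failed read explicitly.
    fileStream.on('error', () => {
      res.statusCode = 500;
      res.end('Could not read file');
    });

    // Each chunk is written to the response as soon as it is read.
    fileStream.pipe(res);
  });
}

// Usage (file name and port are placeholders):
// createStreamingServer('./big-file.txt').listen(3000);
```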

Now you have more control. As soon as a chunk of data has been read from disk, it is streamed to the HTTP client. This is very useful when working with large files, since you don't need to wait for the file to be read completely.

Implementing a Writable Stream

There are two main tasks when talking about streams in Node.js.

- Task of implementing

- Task of consuming

To implement a writable stream, we use the Writable constructor of the stream module.

Writable streams can be implemented in several ways. For example, you can extend the Writable class.
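A minimal sketch of the subclass form, assuming a stream that simply prints what it receives. The class name ConsoleWriter is illustrative.

```javascript
const { Writable } = require('node:stream');

// Subclass form: the write logic lives in the _write() method.
// The class name is illustrative.
class ConsoleWriter extends Writable {
  _write(chunk, encoding, callback) {
    console.log(chunk.toString());
    callback(); // signal that this chunk has been handled
  }
}

const writer = new ConsoleWriter();
writer.write('hello');
writer.end('world');
```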

However, the simplified constructor approach is more concise. We can create an object directly from the Writable constructor and pass it some options. The only required option is the write function, which receives each chunk of data to be written.

The write function takes three arguments:

- Chunk: the data to be written. It is usually a Buffer, unless the stream is configured not to decode strings. In object mode, the chunk can be any value other than null.

- Encoding: the character encoding of the chunk if the chunk is a string; otherwise it can be ignored.

- Callback: a function that must be called once the chunk has been processed. Calling it with no argument signals success; passing an Error signals that the write failed.

In outStream, we simply console.log the chunk and then call the callback without an error to indicate that the write succeeded.
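The outStream described above can be sketched like this. The final write() call is only there to show the stream doing something and is an addition for illustration.

```javascript
const { Writable } = require('node:stream');

// Simplified constructor form: pass the write function as an option.
const outStream = new Writable({
  write(chunk, encoding, callback) {
    // chunk is a Buffer by default, so convert it before printing.
    console.log(chunk.toString());
    callback(); // no argument means the write succeeded
  },
});

// Illustrative direct write; any readable stream could be piped in instead.
outStream.write('hello stream');
```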

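To consume the stream, we can pipe process.stdin, which is a readable stream, into it: process.stdin.pipe(outStream).

We can simplify this further by using process.stdout, which is a built-in writable stream. Piping stdin to stdout gives the same echo behavior in a single line: process.stdin.pipe(process.stdout).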

Implementing a Readable Stream

To implement a readable stream, we construct an object from the Readable interface and implement the read() method in the configuration parameter.

There is an easy way to implement readable streams: just push the data you want consumers to consume.
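A minimal sketch of this push-based approach. The name inStream follows the text; the alphabet strings are sample data chosen for illustration.

```javascript
const { Readable } = require('node:stream');

// A no-op read() is enough here because the data is pushed from outside.
const inStream = new Readable({
  read() {},
});

inStream.push('ABCDEFGHIJKLM');
inStream.push('NOPQRSTUVWXYZ');
inStream.push(null); // no more data

inStream.pipe(process.stdout);
```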

Pushing null signals that there is no more data in the stream.

To use this simple readable stream, we can pipe it into the process.stdout writable stream with inStream.pipe(process.stdout).

Doing so reads all the data from inStream and echoes it to standard output. This is very simple, but not very efficient, because all the data is pushed into the stream before it is piped to process.stdout. A much better way is to push data on demand, when the consumer asks for it. We can do this by implementing the read() method in the configuration object:
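A sketch of the on-demand version, assuming the stream should emit the letters A to Z. The currentCharCode property is attached to the stream instance purely for this example.

```javascript
const { Readable } = require('node:stream');

const inStream = new Readable({
  read(size) {
    // Push one letter per call, then move on to the next character code.
    this.push(String.fromCharCode(this.currentCharCode++));
    if (this.currentCharCode > 90) {
      this.push(null); // "Z" (char code 90) was the last letter
    }
  },
});

inStream.currentCharCode = 65; // char code of "A"
inStream.pipe(process.stdout);
```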

When the read method is called on a readable stream, the implementation can push partial data to the queue. For example, you can push one letter at a time and increment its character code with each push.

While the consumer is reading the readable stream, the read method continues to fire and push more letters. This cycle has to stop somewhere, so once currentCharCode is greater than 90 (the character code of Z), we push null.

The Pipe Method

The pipe method is the simplest way to consume streams. It is generally best to either use the pipe method or consume streams with events, but to avoid mixing the two.

A single call of the form source.pipe(destination) pipes the output of a readable stream (the source) into the input of a writable stream (the destination). The source must be a readable stream and the destination must be a writable stream. Of course, either can also be a duplex or transform stream. When piping into a duplex stream, you can chain pipe calls:
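As a minimal sketch of chaining, the snippet below pipes an in-memory source through a transform stream into a collecting sink. All three streams and their names are illustrative assumptions.

```javascript
const { Readable, Transform, Writable } = require('node:stream');

// Illustrative streams: a source, a transform that upper-cases text,
// and a sink that collects what it receives.
const source = Readable.from(['a', 'b', 'c']);

const upperCase = new Transform({
  transform(chunk, encoding, callback) {
    callback(null, chunk.toString().toUpperCase());
  },
});

const received = [];
const sink = new Writable({
  write(chunk, encoding, callback) {
    received.push(chunk.toString());
    callback();
  },
});

// pipe() returns its destination, so calls can be chained left to right.
const last = source.pipe(upperCase).pipe(sink);

sink.on('finish', () => {
  console.log(last === sink); // true
  console.log(received.join('')); // ABC
});
```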

The pipe method returns the destination stream, which is what makes this kind of chaining possible.

Generally, you don't need to use events if you're using the pipe method, but if you need to use the stream in different and customized ways, using events is recommended.

Chaining the Streams

Chaining is a mechanism to connect the output of one stream to another and create a chain of operations for multiple streams. It is usually used in piping. Let's take an example where we will use piping and chaining to compress and decompress a file.

First, we will compress the input.txt file using the following code:
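A sketch of the compression step, assuming the zlib module's gzip stream. So that it can run on its own, the snippet creates a small sample input.txt when none exists; that step is an addition for illustration.

```javascript
const fs = require('node:fs');
const zlib = require('node:zlib');

// Create a small sample file if none exists, so the sketch can run on its own.
if (!fs.existsSync('input.txt')) {
  fs.writeFileSync('input.txt', 'Some text to compress.\n'.repeat(100));
}

// Read input.txt, gzip it, and write the result to input.txt.gz.
const compressed = fs
  .createReadStream('input.txt')
  .pipe(zlib.createGzip())
  .pipe(fs.createWriteStream('input.txt.gz'));

// 'finish' fires once all compressed data has been written.
compressed.on('finish', () => {
  console.log('Compressed!!');
});
```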

Output:

Compressed!!

Executing the above code will compress input.txt and an input.txt.gz file will be created. Let's decompress the same file using the code below:
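The decompression step can be sketched the same way with a gunzip stream. The snippet creates a sample input.txt.gz when none exists, again only so that it is runnable by itself.

```javascript
const fs = require('node:fs');
const zlib = require('node:zlib');

// Create a sample archive if none exists, so the sketch can run on its own.
if (!fs.existsSync('input.txt.gz')) {
  fs.writeFileSync('input.txt.gz', zlib.gzipSync('Some text to compress.\n'));
}

// Read input.txt.gz, gunzip it, and write the result back to input.txt.
const decompressed = fs
  .createReadStream('input.txt.gz')
  .pipe(zlib.createGunzip())
  .pipe(fs.createWriteStream('input.txt'));

decompressed.on('finish', () => {
  console.log('Decompressed!!');
});
```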

Output:

Decompressed!!

Stream Events

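In addition to reading from a readable source and writing to a writable destination, the pipe method automatically handles a few things along the way. For example, it ends the destination when the source ends, and it manages the case where one stream is slower or faster than the other. Note that pipe does not forward errors from one stream to the next, so each stream still needs its own error handler.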

However, streams can also be consumed directly by events. Let's take an example:
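A minimal sketch using the data and end events, with an in-memory source and destination standing in for real streams. The stream names and sample chunks are illustrative.

```javascript
const { Readable, Writable } = require('node:stream');

// Illustrative in-memory source and destination.
const readable = Readable.from(['first ', 'second ', 'third']);
const chunks = [];
const writable = new Writable({
  write(chunk, encoding, callback) {
    chunks.push(chunk.toString());
    callback();
  },
});

// Roughly what readable.pipe(writable) does, without backpressure handling:
readable.on('data', (chunk) => {
  writable.write(chunk);
});
readable.on('end', () => {
  writable.end();
});

writable.on('finish', () => {
  console.log(chunks.join('')); // first second third
});
```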

This is a simplified, event-based equivalent of what the pipe method does to read and write data.

The most important events in a readable stream are:

- data event: it is emitted every time a stream sends a chunk of data to a consumer.

- end event: it is emitted when there is no more data to consume from the stream.

The most important events in a writable stream are:

- drain event: it is a signal that a writable stream can receive more data.

- finish event: it is emitted when all data has been transferred to the underlying system.

Events and actions can be combined to customize and optimize the use of streams. We can use the pipe/unpipe or read/unshift/resume methods to consume a readable stream. To consume a writable stream, we can make it a pipe/unpipe target, or simply write to it using the write method and call the end method when we are done.
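As one hedged illustration of combining methods and events on a writable stream, the sketch below writes with write(), waits for the drain event whenever write() returns false, and finishes with end(). The slow destination, its tiny highWaterMark, and the writeAll helper are assumptions made up for this example.

```javascript
const { Writable } = require('node:stream');

// A deliberately slow destination with a tiny buffer (values are illustrative).
const slow = new Writable({
  highWaterMark: 4,
  write(chunk, encoding, callback) {
    setTimeout(callback, 10);
  },
});

let drains = 0;
slow.on('drain', () => {
  drains += 1;
});

// Hypothetical helper: writes every item, pausing whenever the buffer is full.
function writeAll(items, done) {
  let i = 0;
  function next() {
    while (i < items.length) {
      // write() returns false once the buffered data reaches highWaterMark.
      const ok = slow.write(items[i++]);
      if (!ok) {
        slow.once('drain', next); // wait before writing more
        return;
      }
    }
    slow.end(done);
  }
  next();
}

writeAll(['aaaa', 'bbbb', 'cccc'], () => {
  console.log(`finished after ${drains} drain event(s)`);
});
```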

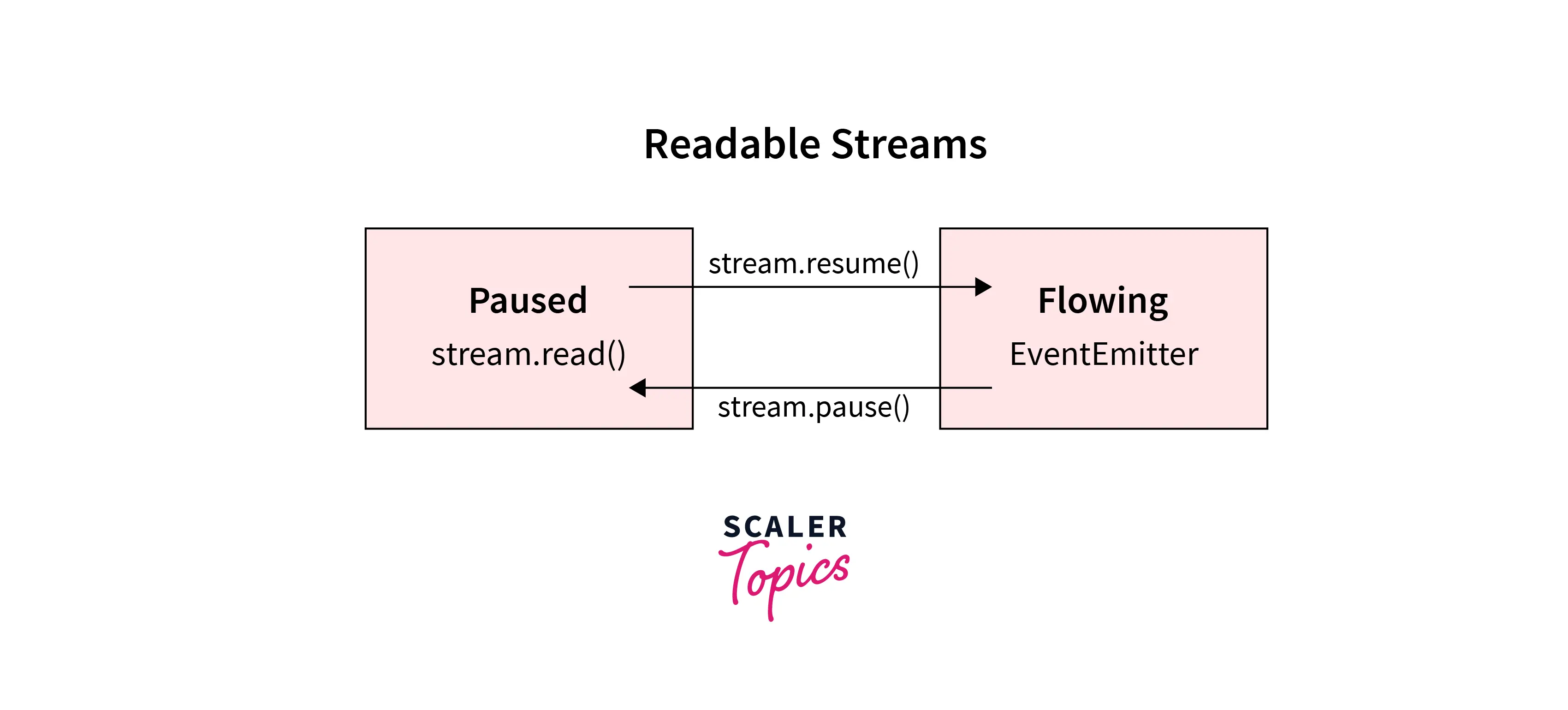

Paused and Flowing Modes of Readable Streams

A readable stream can operate in two modes:

- Flowing readable stream (flowing mode).

- Paused readable stream (paused mode).

These modes are sometimes called push and pull modes.

Flowing Readable Stream:

In flowing mode, data is read from the underlying source automatically and delivered to the application as quickly as possible through events on the EventEmitter interface.

The events most relevant to a readable stream in flowing mode are:

- Data event - emitted whenever a chunk of data is available to read from the stream.

- End event - emitted when the stream reaches the end of its data and there is nothing more to read.

- Error event - emitted whenever an error occurs while reading from the stream.

- Finish event - emitted by the writable destination, not by the readable stream itself, when all data has been transferred to the underlying system.

Paused Readable Stream:

In paused mode, the read() method is used to get the next piece of data from the stream. read() must be called explicitly, because the stream does not deliver data on its own in paused mode.
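A minimal sketch of paused-mode consumption, assuming an in-memory source. The readable event announces that data is available, and read() is then called explicitly until it returns null.

```javascript
const { Readable } = require('node:stream');

// Illustrative in-memory source.
const readable = Readable.from(['one', 'two', 'three']);
const pulled = [];

// 'readable' fires when data can be read; the stream stays in paused mode.
readable.on('readable', () => {
  let chunk;
  // read() returns null once the internal buffer is empty.
  while ((chunk = readable.read()) !== null) {
    pulled.push(chunk);
  }
});

readable.on('end', () => {
  console.log(pulled); // [ 'one', 'two', 'three' ]
});
```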

All readable streams start in paused mode by default and can be switched to flowing mode in any one of the following ways:

- Add a data event handler to the stream.

- Call the stream.resume() method.

- Call the stream.pipe() method to send data to a writable stream.
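The switch between modes can be observed through the readableFlowing property, as in the sketch below. The in-memory source is an illustrative assumption.

```javascript
const { Readable } = require('node:stream');

const readable = Readable.from(['a', 'b']);

// null: no consumer is attached yet.
console.log(readable.readableFlowing); // null

// Attaching a 'data' handler switches the stream to flowing mode.
readable.on('data', (chunk) => {
  console.log('chunk:', chunk);
});
console.log(readable.readableFlowing); // true

// pause() stops the flow; resume() starts it again.
readable.pause();
console.log(readable.readableFlowing); // false
readable.resume();
```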

Streams Object Mode

As discussed earlier, streams work on a concept called a buffer. By default, streams expect buffer or string values. But, there is an objectMode flag that can be set to accept any JavaScript object.

Here's a simple example that demonstrates this. A pair of transform streams maps a string of comma-separated values to a JavaScript object, so "s,c,a,l,e,r" becomes {s: "c", a: "l", e: "r"}.

The input string is passed to commaDivider, which splits it and pushes the resulting array as its readable data. commaDivider needs the readableObjectMode flag because it pushes an object (the array) and not a string.

The array is then piped into the arrToObj stream. arrToObj needs the writableObjectMode flag so that it can accept an object as input.
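The two transform streams described above can be sketched as follows. The names commaDivider and arrToObj come from the text; feeding the input through Readable.from() and collecting the output in a results array are assumptions made to keep the example self-contained.

```javascript
const { Readable, Transform } = require('node:stream');

// Splits a comma-separated string into an array.
// Its output is an object, hence readableObjectMode.
const commaDivider = new Transform({
  readableObjectMode: true,
  transform(chunk, encoding, callback) {
    callback(null, chunk.toString().trim().split(','));
  },
});

// Turns [k1, v1, k2, v2, ...] into { k1: v1, k2: v2, ... }.
// Its input is an object, hence writableObjectMode.
const arrToObj = new Transform({
  readableObjectMode: true,
  writableObjectMode: true,
  transform(chunk, encoding, callback) {
    const obj = {};
    for (let i = 0; i < chunk.length; i += 2) {
      obj[chunk[i]] = chunk[i + 1];
    }
    callback(null, obj);
  },
});

const results = [];
Readable.from(['s,c,a,l,e,r'])
  .pipe(commaDivider)
  .pipe(arrToObj)
  .on('data', (obj) => results.push(obj))
  .on('end', () => console.log(results)); // [ { s: 'c', a: 'l', e: 'r' } ]
```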

Conclusion

- Streams are objects that allow you to read data from a source and write data to a destination.

- Readable, Writable, Duplex, and Transform are four fundamental types of streams in Node.js.

- The pipe method is the simplest and the easiest way to consume streams.

- Chaining is a mechanism to connect the output of one stream to another and create a chain of operations of multiple streams.

- The most important events in a readable stream are the data event and the end event.

- The most important events in a writable stream are the drain event and the finish event.

- A readable stream can operate in flowing mode or paused mode.