Operations on Processes in an OS

Overview

In an operating system, a process is a program in execution. Process operations cover the creation, scheduling, execution, and termination of processes. The OS allocates the resources each process needs, such as CPU time, memory, and I/O devices, and reclaims them when the process ends. Together, these operations manage the process lifecycle, control resource allocation, and support concurrent, responsive computing.

Process Operations

Process operations manage the lifecycle of a process from creation to termination. They let the OS use system resources efficiently, run multiple tasks at once, and stay responsive. The primary process operations include:

- Process Creation

- Process Scheduling

- Context Switching

- Process Execution

- Inter-Process Communication (IPC)

- Process Termination

- Process Synchronization

- Process State Management

- Process Priority Management

- Process Accounting and Monitoring

Creating

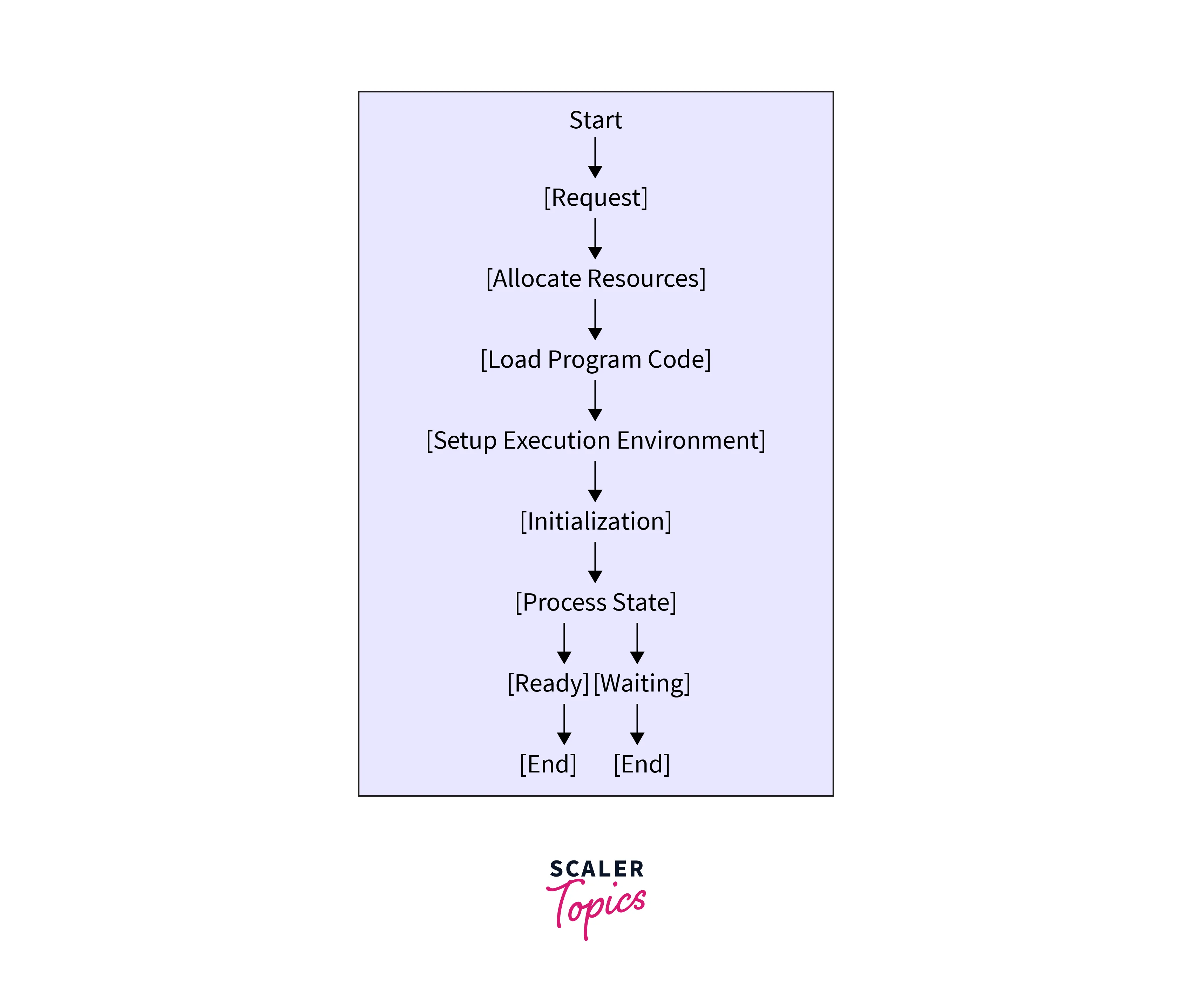

Process creation is a fundamental operation in which the operating system creates and initializes a new process. It is what makes multitasking, resource allocation, and concurrent execution of tasks possible. Process creation typically follows these steps:

- Request: Process creation begins with a request from a user or a system component, such as an application or the operating system itself, to start a new process.

- Allocating Resources: The operating system allocates the resources the new process needs, including memory space, a unique process identifier (PID), a process control block (PCB), and other essential data structures.

- Loading Program Code: The program code and data associated with the process are loaded into the allocated memory space.

- Setting Up Execution Environment: The OS sets up the initial execution environment for the process, such as its initial program counter, stack, and register values.

- Initialization: Any initialization the process requires is performed at this stage. This might involve initializing variables, setting default values, and preparing the process for execution.

- Process State: After the necessary setup, the new process is placed in the "ready" state, indicating that it is prepared for execution but has not yet been given the CPU. A "waiting" state, by contrast, means a process is blocked on some event.
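The creation steps above can be sketched as a toy model. This is a minimal illustration, not how any real kernel is implemented: the `PCB` class, `create_process` function, `process_table`, and `ready_queue` are all hypothetical names invented for this example.

```python
from dataclasses import dataclass, field
from itertools import count

# Hypothetical, heavily simplified process control block (PCB).
@dataclass
class PCB:
    pid: int
    program: str
    state: str = "new"
    program_counter: int = 0
    memory: list = field(default_factory=list)

_next_pid = count(1)   # source of unique PIDs
process_table = {}     # pid -> PCB
ready_queue = []       # PIDs waiting for the CPU

def create_process(program, instructions):
    """Walk through the creation steps for one toy process."""
    pcb = PCB(pid=next(_next_pid), program=program)  # allocate a PID and a PCB
    pcb.memory = list(instructions)                  # "load" the program code
    pcb.program_counter = 0                          # set up the execution environment
    process_table[pcb.pid] = pcb                     # register the process with the "OS"
    pcb.state = "ready"                              # prepared to run, not yet running
    ready_queue.append(pcb.pid)
    return pcb

editor = create_process("editor", ["open", "read", "draw"])
print(editor.pid, editor.state, ready_queue)  # 1 ready [1]
```

The new process ends in the "ready" state and sits in the ready queue until the scheduler selects it.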

Dispatching/Scheduling

Dispatching, or scheduling, is the operation that selects the next process to execute on the central processing unit (CPU). Strictly speaking, the scheduler chooses the process and the dispatcher hands the CPU over to it, but the two are often described together. This operation is a key component of process management and is essential for efficient multitasking and resource allocation. It encompasses the following key steps:

- Process Selection: The dispatching operation selects a process from the pool of ready-to-execute processes. The selection criteria may include factors such as process priority, execution history, and the scheduling algorithm employed by the OS.

- Context Switching: Before executing the selected process, the operating system performs a context switch. This involves saving the state of the currently running process, including the program counter, CPU registers, and other relevant information, into its process control block (PCB).

- Loading New Process: Once the outgoing state is saved, the OS loads the saved state of the selected process from its PCB. This includes restoring the program counter and other CPU registers to the values they had when the process was last preempted or voluntarily yielded the CPU.

- Execution: The CPU begins executing the instructions of the selected process. The process advances through its program logic, using system resources such as memory, I/O devices, and external data.

- Timer Interrupts and Preemption: The OS programs a hardware timer to raise interrupts at regular intervals. When a timer interrupt occurs, the CPU returns control to the operating system, which can then preempt the running process, for example when its time slice has expired.

- Scheduling Algorithms: The dispatching operation relies on scheduling algorithms, such as first-come, first-served, round-robin, or priority scheduling, that determine the order and duration of process execution.

- Resource Allocation: The dispatching operation is responsible for allocating CPU time to processes based on the scheduling algorithm and their priority. This ensures that high-priority or time-sensitive tasks receive appropriate attention.
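To make the dispatch cycle concrete, here is a small simulation of round-robin scheduling, one of several possible algorithms. It is a sketch under simplifying assumptions: the function name `round_robin`, the dictionary standing in for each PCB, and the burst times are all made up for illustration, and a real context switch saves registers rather than a single counter.

```python
from collections import deque

def round_robin(bursts, quantum):
    """Simulate round-robin dispatching.

    bursts:  dict mapping a process name to the CPU time units it needs.
    quantum: time slice granted per dispatch.
    Returns the order in which the processes finish.
    """
    # Each toy "PCB" stores saved progress, a stand-in for the program counter.
    pcbs = {name: {"done": 0, "need": need} for name, need in bursts.items()}
    ready = deque(bursts)       # ready queue, in arrival order
    finished = []
    while ready:
        name = ready.popleft()  # process selection
        pcb = pcbs[name]        # restore the saved state from the PCB
        run = min(quantum, pcb["need"] - pcb["done"])
        pcb["done"] += run      # execute until the timer fires or the work ends
        if pcb["done"] == pcb["need"]:
            finished.append(name)
        else:
            ready.append(name)  # preempted: progress stays saved, back of the queue
    return finished

print(round_robin({"A": 5, "B": 2, "C": 4}, quantum=2))  # ['B', 'C', 'A']
```

With a quantum of 2, the short process B finishes first even though A arrived earlier, which shows how time slicing keeps one long process from holding up the rest.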

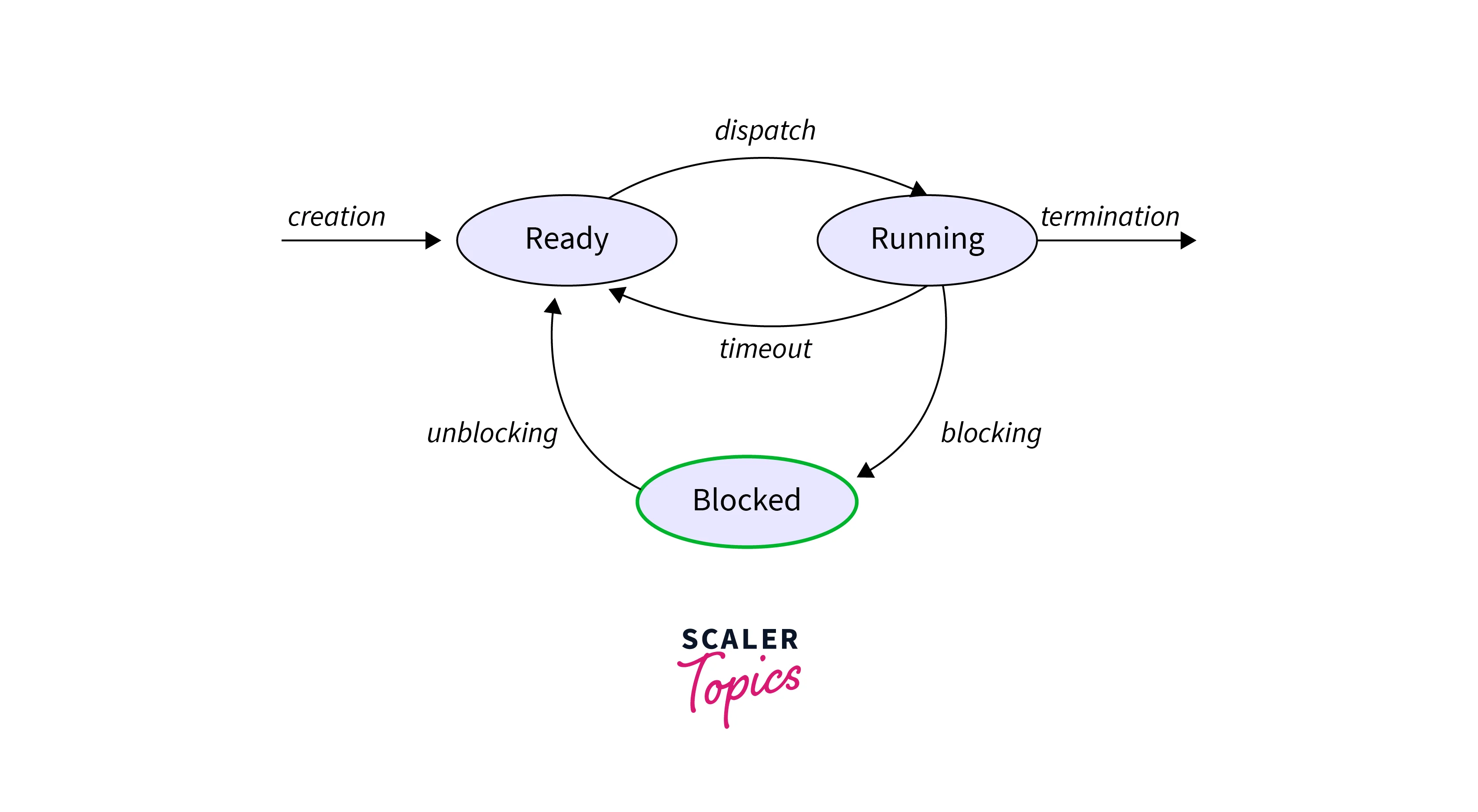

Blocking

In an operating system, a blocking operation is one that temporarily suspends a process, "blocking" it from executing further instructions until a specific event or condition occurs. Typically, the process is waiting for a resource to become available or for a condition to be met before it can proceed. Blocking operations are common when processes need to interact with external resources, such as input/output (I/O) devices, files, or other processes.

When a process initiates a blocking operation, it enters a state known as "blocked" or "waiting." The operating system takes the process off the CPU and places it in a waiting queue associated with the resource or event it is waiting for. The process remains there until the resource becomes available or the condition is satisfied, at which point it returns to the ready state.

Blocking operations are crucial for efficient resource management and coordination among processes. They keep a process from occupying the CPU while it waits for an external event, so the operating system can schedule other processes in the meantime. Common examples of blocking operations include:

- I/O Operations: When a process requests data from an I/O device (such as reading data from a disk or receiving input from a keyboard), it may be blocked until the requested data is ready.

- Synchronization: Processes often wait for synchronization primitives like semaphores or mutexes to achieve mutual exclusion or coordinate their activities.

- Inter-Process Communication: Processes waiting for messages or data from other processes through mechanisms like message queues or pipes may enter a blocked state.

- Resource Allocation: Processes requesting system resources, such as memory or network connections, may be blocked until the resources are allocated.
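Blocking can be observed directly with a short example. The sketch below uses Python threads as a stand-in for processes, which is an assumption made to keep the example small; the names `mailbox`, `consumer`, and `received` are invented for illustration. The consumer blocks on an empty queue until a message arrives.

```python
import queue
import threading
import time

mailbox = queue.Queue()   # message queue shared by both sides
received = []

def consumer():
    # get() blocks the calling thread until an item is available.
    item = mailbox.get()
    received.append(item)

worker = threading.Thread(target=consumer)
worker.start()

time.sleep(0.1)  # give the consumer time to reach get() and block
was_blocked = worker.is_alive() and not received

mailbox.put("data ready")  # the awaited event occurs and the consumer wakes up
worker.join(timeout=5)

print(was_blocked, received)  # True ['data ready']
```

While the consumer waits, it consumes no CPU time, which is the point of blocking: the scheduler is free to run other work until the event arrives.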

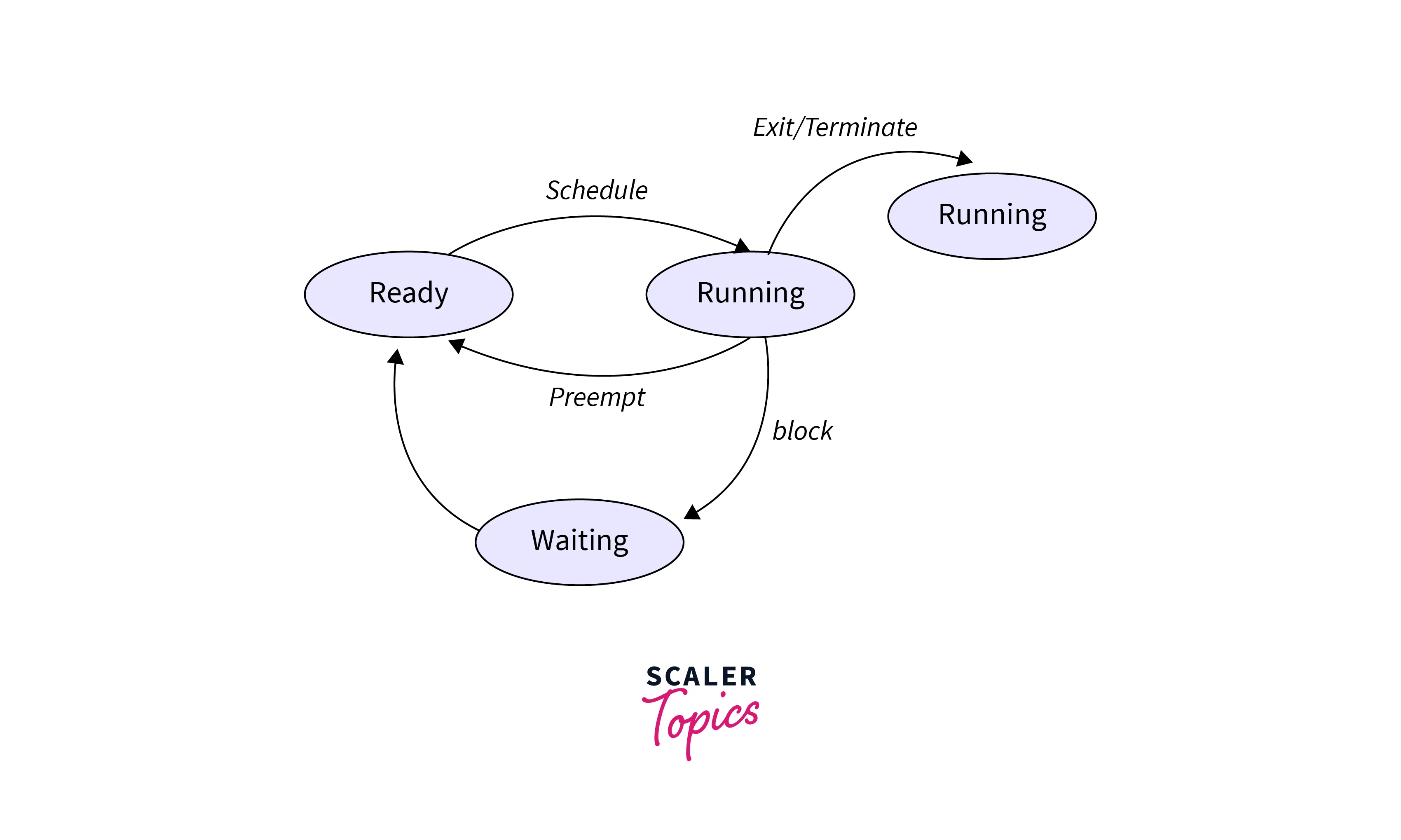

Preemption

Preemption in an operating system refers to the act of temporarily interrupting the execution of a currently running process to allocate the CPU to another process. This interruption is typically triggered by a higher-priority process becoming available for execution or by the expiration of a time slice assigned to the currently running process in a time-sharing environment.

Key aspects of preemption include:

- Priority-Based Preemption: Processes with higher priorities are given preference in execution. When a higher-priority process becomes ready, the OS may preempt the currently running process to allow the higher-priority process to execute.

- Time Sharing: In a time-sharing or multitasking environment, each process is allocated a small time slice (quantum) of CPU time. When the time slice expires, the currently running process is preempted, and the OS selects the next process to run.

- Interrupt-Driven Preemption: Hardware or software interrupts can trigger preemption. For example, when an interrupt from a hardware device or a system call transfers control to the OS, the scheduler may decide to run a different process once the interrupt has been handled.

- Fairness and Responsiveness: Preemption keeps any single process from monopolizing the CPU. Time-slice preemption gives every ready process a regular turn. Under strict priority scheduling, however, low-priority processes can still starve unless the scheduler adds a technique such as aging.

- Real-Time Systems: Preemption is crucial in real-time systems, where tasks have strict timing requirements. If a higher-priority real-time task becomes ready to run, the OS must preempt lower-priority tasks to ensure timely execution.
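Priority-based preemption can be illustrated with a small simulation. This is a sketch under stated assumptions, not a real scheduler: the function `run_with_preemption`, the tuple layout, and the convention that a lower number means higher priority are all choices made for this example. The scheduler re-evaluates the ready set at every time unit and always runs the most urgent process.

```python
import heapq

def run_with_preemption(arrivals):
    """Simulate preemptive priority scheduling, one time unit per step.

    arrivals: list of (arrival_time, priority, name, burst) tuples,
              where a lower priority number means a more urgent process.
    Returns a list naming the process that ran during each time unit.
    """
    pending = sorted(arrivals)  # not yet arrived, ordered by arrival time
    ready = []                  # min-heap of (priority, arrival, name, remaining)
    timeline = []
    t = 0
    while pending or ready:
        # Admit every process that has arrived by time t.
        while pending and pending[0][0] <= t:
            arrival, prio, name, burst = pending.pop(0)
            heapq.heappush(ready, (prio, arrival, name, burst))
        if not ready:
            timeline.append(None)  # CPU idle until the next arrival
            t += 1
            continue
        # Run the most urgent ready process for one time unit.
        prio, arrival, name, remaining = heapq.heappop(ready)
        timeline.append(name)
        t += 1
        if remaining > 1:
            heapq.heappush(ready, (prio, arrival, name, remaining - 1))
    return timeline

print(run_with_preemption([(0, 2, "low", 4), (1, 1, "high", 2)]))
# ['low', 'high', 'high', 'low', 'low', 'low']
```

The process named "low" starts first but loses the CPU as soon as "high" arrives at time 1, then resumes once "high" has finished.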

Termination of Process

Process termination is the orderly, controlled end of a process's execution. It occurs when a process has completed its intended task, when it is no longer needed, or when an error or exception occurs. Termination involves several steps to ensure proper cleanup and resource reclamation:

- Exit Status: When a process terminates, it typically returns an exit status or code that indicates the outcome of its execution. This status shows whether the process completed successfully or encountered an error.

- Resource Deallocation: The OS releases the resources allocated to the process, including memory, file handles, open sockets, and other system resources. This prevents resource leaks and ensures efficient utilization of system components.

- File Cleanup: The OS closes any files the process still has open, releasing the associated file handles. Temporary files that the process created are typically not deleted automatically, so the process itself or a separate cleanup mechanism is expected to remove them.

- Parent Process Notification: In most cases, the parent process (the process that created the terminating process) needs to be informed of the termination and the exit status. On UNIX-like systems, for example, the parent collects the status with a wait call.

- Process Control Block Update: The OS updates the process control block (PCB) of the terminated process, marking it as "terminated" and removing it from the list of active processes. On some systems, the PCB is kept until the parent has collected the exit status.

- Reclamation of System Resources: The OS updates its data structures and internal tables to reflect the availability of system resources that were used by the terminated process.
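Exit status and parent notification can be seen from user space. The following minimal sketch uses Python's standard `subprocess` module to start a child interpreter that deliberately exits with status 3; the status value and the one-line child program are arbitrary choices for illustration.

```python
import subprocess
import sys

# Start a child Python interpreter that terminates with exit status 3.
child = subprocess.run([sys.executable, "-c", "import sys; sys.exit(3)"])

# run() waits for the child to terminate, so by this point the parent
# has been given the child's exit status.
print(child.returncode)  # 3
```

By convention, an exit status of 0 signals success and a nonzero value signals an error, so a parent can branch on `returncode` to decide what to do next.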

FAQs

Q. How does preemption work in process operations?

A. Preemption temporarily interrupts a running process so that the CPU can be given to a higher-priority process or, in a time-sharing system, to the next process once the current time slice expires.

Q. Why is process termination important?

A. Process termination ensures proper resource cleanup, memory deallocation, and orderly cessation of a process's execution.

Q. How does an OS manage process synchronization?

A. Through synchronization mechanisms like locks and semaphores, the OS coordinates concurrent process execution to prevent conflicts.

Q. What role does the process control block (PCB) play?

A. The PCB stores crucial process information, facilitating context switching, state management, and efficient process operation handling.

Conclusion

- Process operations form the backbone of operating system functionality, orchestrating the creation, scheduling, execution, and termination of processes.

- These operations enable efficient multitasking, resource allocation, and responsive computing environments.

- Through context switching and scheduling algorithms, the OS shares CPU time among processes and aims for fair distribution and good performance.

- Blocking lets processes wait for resources without holding the CPU, while preemption supports timely execution and priority-based access.

- Proper process termination safeguards resource integrity and allows for graceful cleanup.
