I/O with Pandas

Overview

In this article, we will learn about the pandas I/O API, which is a collection of top-level reader functions. We will see how to read and write files in different formats like CSV, Excel, HTML, etc. With the help of pandas, we can also work with big data very easily using some techniques like removing unwanted data and splitting the data into chunks so that it can easily readable.

Introduction

The collection of reader functions that can be used with Pandas.read_csv and typically return pandas objects are called the pandas I/O API. Because of it, data can be loaded from files. Using series objects and DataFrame, data can be written on the clipboard. There are several methods for reading pandas files for CSV w have .read_csv, for excel we have .read_excel etc.

What is I/O API in Pandas?

The pandas I/O API is a collection of top-level reader functions that can be used with "pandas.read csv()" and typically return a pandas object. Similar to DataFrame .to_csv, the corresponding writer functions are object methods (). The API for saving the contents of Series and DataFrame objects to various types of objects, including the clipboard and files, is called Pandas IO Tools. It also makes it possible to load data from files, objects, or the clipboard.

Write Files

Data and labels can be written to the clipboard or files using the methods available in Series and DataFrame objects. They have the naming convention . to file-type>(), where file-type> denotes the destination file's type like .to_csv(), .to_excel(), .to_json() etc.

These methods provide parameters that specify the destination file directory where the data and labels were saved. It depends on the situation if this is required or optional. If this option is offered and you decide not to use it, the methods return objects (such as strings or iterables) that include the data from DataFrame instances.

Compression, an optional parameter, controls how the file with the data and labels will be compressed. There are a few additional parameters, but most of them are unique to one or more methods.

Read Files

Pandas functions for reading file contents have names that follow the pattern ".read file-type>()," where "file-type>" denotes the type of the file to be read like read_csv(), read_excel(), read_json(), read_html() etc.

The path to the destination file is specified as a parameter for these functions. Whether on a local machine or in a URL, it can be any valid string that represents the path. Depending on the type of file, more items are also permitted.

The compressed files' type of decompression will be determined by the optional parameter compression. There are additional arguments, but they are exclusive to one or more functions.

Working with Different File Types

The Pandas library provides a variety of options for loading data from files and saving data to files. Let's see how to use JSON, web pages, databases, and Python pickle files, among other file types.

CSV

CSV files(comma-separated files) are a simple way to store big data. Pandas can read CSV files since they are a widely used format that only contains plain text. csv file can be read by using .read_csv(file_path). Using the data.csv file that you gave as the first input, the Pandas read CSV() function returns a new DataFrame containing the information from that file. A valid path, including URLs, may be contained in this string.

Let's look at an example as follows:

Code:

Output:

Explanation: In the above code example, Pandas are imported as pd. Here we use a GitHub file link for data and reading this CSV file using the pd.read_csv() function. .head() is used to read only the starting rows of the data, by default starting 5 rows.

JSON

JSON stands for Javascript object Notation. It indicates that the data is stored and sent using a script file, which is composed of text written in a programming language. A pre-built module called JSON in Python provides support for JSON. The Python script imports the JSON package to make use of this feature. Text in JSON is represented as a quoted string that has the value from a key-value mapping inside. It is comparable to Python's dictionary.

Writing JSON file

Method 1: Using Python's json.dumps() function to write JSON data to a file.

A function called json.dumps() in the Python JSON module facilitates the transformation of a dictionary into a JSON object. There are two parameters:

-

dictionary-The name of the dictionary that needs to be transformed into a JSON object.

-

indent-determines the number of units for indentation

Simply use the "write" function to write the dictionary to a file after it has been converted to a JSON object.

Code:

Output:

Method 2: Using Python's json.dump function to write JSON data to a file ()

JSON can also be written to a file using the json.dump() method. The "dump" function in the JSON package allows for the direct writing of a dictionary to a JSON file without first converting it into a JSON object. There are two parameters:

-

dictionary - name of the dictionary to be transformed into a JSON object.

-

file pointer - The pointer to a file that has been opened in write or append mode.

Code:

Output:

Reading JSON File

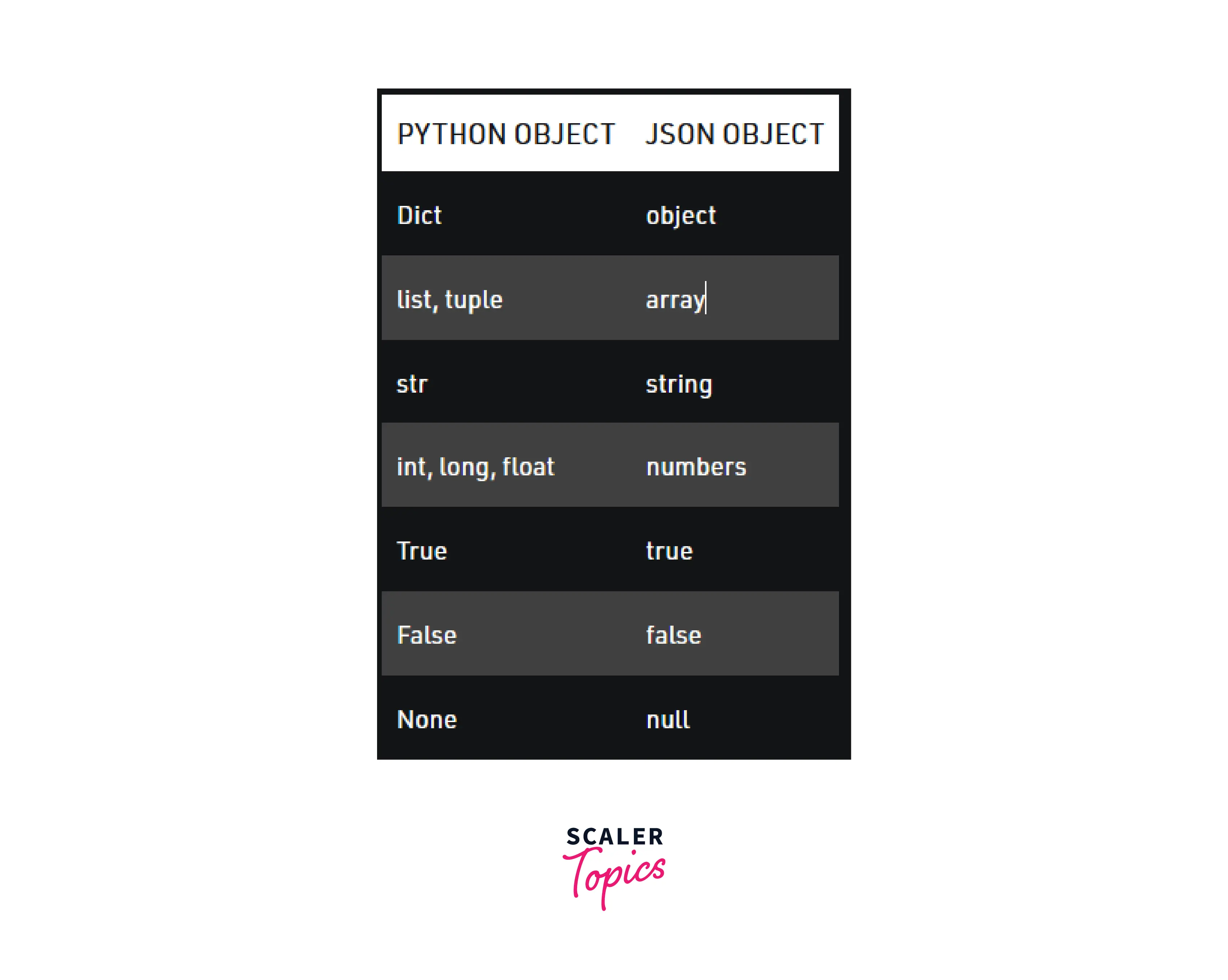

Deserialization, or converting JSON items into their corresponding Python objects, is the opposite of serialization. It uses the load() method to do so. JSON data can be readily deserialized with load(), which is typically used to load from a string; otherwise, the root object is in a list or Dict. This depends on whether you used JSON data from another application or acquired it as a string format of JSON.

Using json.load to read JSON from a file ()

The json.load() function in the JSON package loads the contents of a JSON file into a dictionary. There is only one requirement:

- file pointer - directs users to a JSON file.

Code:

Output:

HTML

The Python language may be used today to make our lives easier and has great applications in practically every sector. Getting the data output in an HTML file is one such Python application.

Creating an Html file and saving the input data into a file.

- Creating an HTML file. Function_Name = open("Complete_File_Name","File_operation")

- Adding input data in HTML format into the file. Function_Name.write("Adding_Input_data_using_HTML_Synatx_separted_by_/n")

- Saving the HTML file. Function_Name.close()

Opening the HTML file from the saved location by using the following code.

Code:

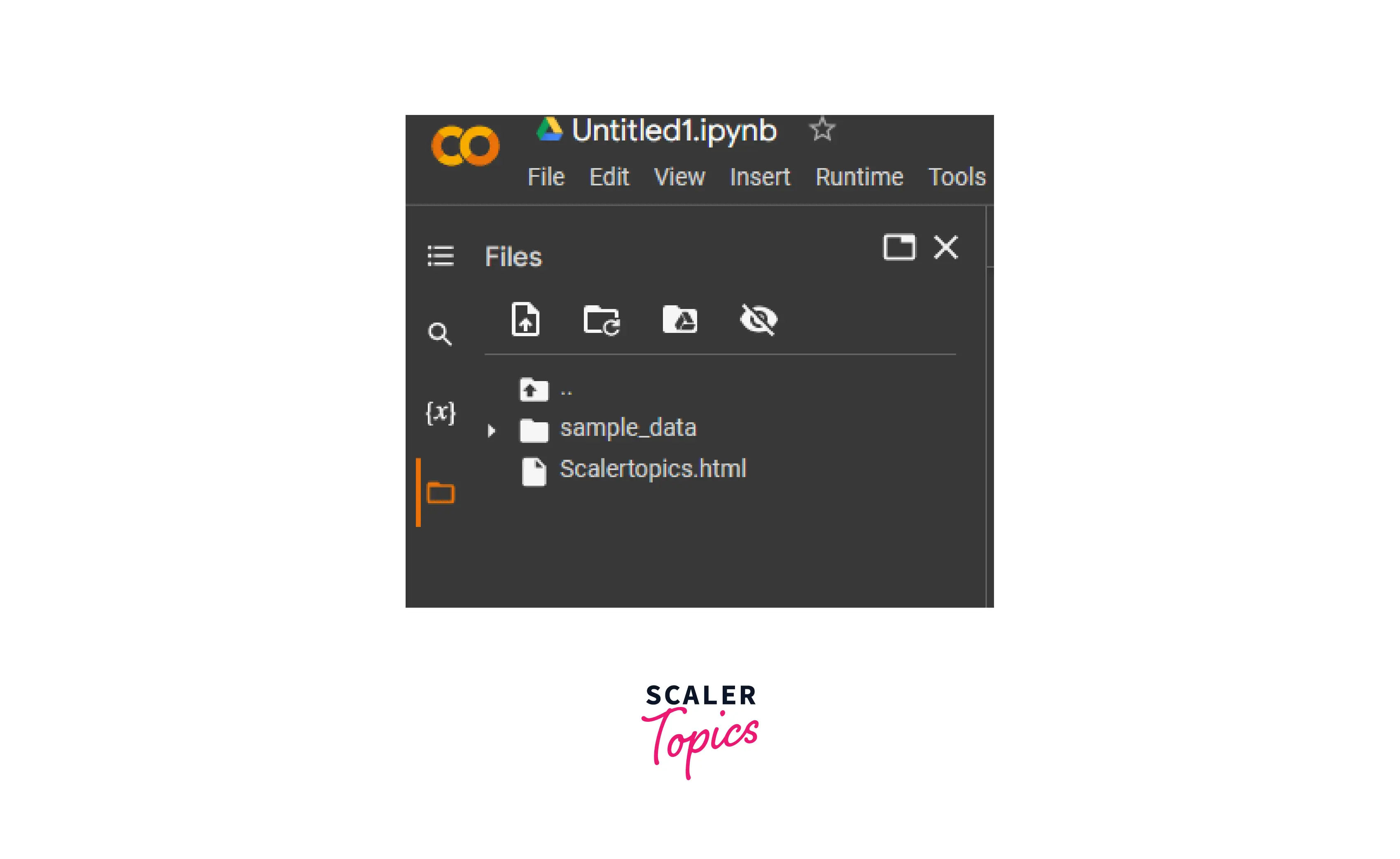

After creating the Html file, it is saved in the directory. I am using google colab here, so the file is saved in google colab. If you are using any of the python environments like pycharm, anaconda, Jupyter notebook, etc. then your created Html file will be stored on your computer.

Output:

Html file looks like:

To read the Html tables we have pandas built-in function .read_html(). Let's see how it works.

Code:

Output:

Explanation In the above code, pandas is imported as pd. An HTML code for creating tables is written and stored in Html_Data.Then using the pd.read_html() function, an HTML file can be read. type(df) is used to check the file type that is created.

SQL

For working with saved data in SQL we have to perform some complex queries. Pandas are specially built for data pre-processing and are more user-friendly than SQL . For this python has an inbuilt function to read the sql database pandas.read_sql. Let's see this with the help of an example.

Code:

Output:

Explanation:

In the above code example, connect is imported from the sqlite3 module of python, and :memory: is used to open the database connection to a database that resides in RAM instead of on disk. Then a DatFrame is created and saved as SQL Database using .to_sql as DataFile. The .read_sql is used to read the SQL data using the names of the columns.

Pickle

Python's pickle package is used to serialize and de-serialize a Python object structure. Python allows for the pickling of any object to enable disc storage. Pickle "serializes" the object before saving it to the file. A Python object (list, dict, etc.) can be turned into a character stream by pickling. According to the theory, this character stream provides all the data required to recreate the object in a different Python script. Unpickling is the inverse process. Pickle files have the extension .pickle or .pkl.

Code:

Output:

Explanation:

In the above code example, pandas are imported as pd and a dictionary is stored in the data variable. Then data is converted into a data frame using pd.Dataframe and stored in the df variable. Using df.to_pickle pandas DataFrame is stored as a pickle file and pd.read_pickle is used to read this pickle file.

Code:

Output:

Explanation:

The pickle module of python is imported and a dictionary is created, which is stored in db as Robert's and Mariee's data. Here, we create two functions, one for pickling i.e. store data(), and the other one loadData() for unpickling the file. To simply open a file open() function is used. Here, the file is opened for writing in binary mode by using wb, w for writing, and b for binary mode. pickle.dump() takes two arguments, the pickled object you want to use, and the file where the pickled object should be saved.pickle.load is used to load the pickled file. Close the file by using the close() function.

How to Work with Big Data with Pandas?

If you are working with very large files for processing and saving, then there are many approaches to help to reduce the space of the disk as:

- Compress files

- only Choose the columns you want

- Omit the rows are not needed

- Force the use of less precise data types

- Split the data into chunks

Let's look at these techniques one by one.

Compress files

Let's see an example to understand how the file is being compressed

Code:

Output:

Explanation:

Here import data function is created which takes the file as an input parameter. I have used This GitHub file link and checked the reduced size of the file.

Note: The above example is only a sample that can tell us that memory size is reduced.

Only Choose the columns you want

Sometimes, we only want specific data from the whole file, we can do so by selecting specific ow or column data.

Code:

Output:

Omit the rows are not needed

Sometimes you don’t need all the rows/features for your analysis. In such situations, you don’t have to load the dataset into a pandas data frame and then delete it. Instead, you can exclude the columns while loading the data frame. This method along with the efficient data type can save reduce the size of the data frame significantly.

Code:

Output:

Explanation

In the above code example, csv file is loaded using .read_csv from the GitHub link.

Code:

Output:

Explanation: Here, 1st parameter inside the range specifies the start value, the middle value is for the last value and the last one is for the difference followed till the last value passed is achieved. According to the given range in the above code, every second row is skipped between the 2nd and 18th row.

Force the use of less precise data types You might be able to save a sizable amount of memory if you're happy with less exact data types. Get the data types with .dtypes first:

Code:

Output:

Explanation: The integer numbers in the columns are 64-bit floats. The int64 data type requires 64 bits, or 8 bytes, for each number.

Code:

Output:

Explanation: We can confirm memory size using .memory usage().

Split the data into chunks The data can also be divided into smaller parts and processed one chunk at a time as another method of handling very large datasets. You can specify the optional parameter chunksize when using read csv(), read json(), or read sql().

Code:

Output:

Explanation: The dataset is read into chunks of data with the specified rows in the example above because we provided a value for the chunksize argument. With the chunksize operation set to three for our dataset, we have five iterators.

Conclusion

- The pandas I/O API is a collection of top-level reader functions that can be used with "pandas. read_csv()" and typically return a pandas object.

- CSV stands for comma-separated files and can be read by using the .read_csv function.

- JSON stands for Javascript object Notation and using .jsons.dumps we can write JSON files and can read files by using the json.load function.

- We can create an HTML file by using a simple .html extension and then using .write with the file we can write the HTML file.

- The pickle package of python is used for serializing and de-serialize. It needs to be imported first before using it.

- Large data takes lots of space so there are many approaches like compressing, removing the unwanted columns, or splitting data into chunks, which can reduce the size of the data so that less disk space is used.