Process Management In Operating System

Overview

Process management in an OS covers a variety of responsibilities, including process creation, scheduling, deadlock handling, and process termination. The operating system oversees every process running on the system, and it manages them by performing tasks such as resource allocation and process scheduling. A running process uses both the machine's memory (RAM) and its CPU, so the operating system must also coordinate the many processes that compete for them.

What is a Process?

A process is a program in execution, and it is the unit of work that the operating system schedules and manages. A process is not the same thing as a thread: a thread is a single path of execution inside a process, and one process may contain several threads.

A process is a running program. When we write a C or C++ program, for example, the compiler generates binary code. Both the source code and the binary code are programs. When we run the binary code, it becomes a process. A process is divided into four sections:

Text: The compiled program code. The current activity is represented by the value of the program counter.

Stack: Local variables, function parameters, return addresses, and other temporary data are stored on the stack.

Data: Global and static variables are stored in the data section.

Heap: Memory that is dynamically allocated to the process at run time.

A Process Control Block (PCB) is the structure the operating system uses to manage a process. It is a data structure that the operating system maintains separately for each process. Each PCB is identified by an integer Process ID (PID), and it holds all of the information needed to keep track of the process.

The contents of the CPU registers, which must be saved when a running process gives up the CPU so that it can later resume where it left off, are also stored in the PCB.

What is Process Management in OS?

A system usually runs several processes that use the same shared resources. As a result, the operating system must manage all of these processes, and the structures that describe them, efficiently.

To preserve consistency, some sections of code may need to be executed by only one process at a time. Otherwise, shared data may be left in an inconsistent state, and poorly coordinated access to resources can also lead to deadlock.
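
The idea of letting only one worker at a time into a critical section can be sketched as follows. This is a minimal illustration, not how an operating system implements it internally: it uses Python threads inside one process rather than separate processes, and the names `counter`, `deposit`, and `run_workers` are hypothetical.

```python
import threading

counter = 0                 # shared resource (hypothetical example data)
lock = threading.Lock()     # guards the critical section below


def deposit(times: int) -> None:
    """Increment the shared counter `times` times."""
    global counter
    for _ in range(times):
        with lock:          # only one thread at a time may run this block
            counter += 1


def run_workers(n_workers: int = 4, times: int = 10_000) -> int:
    """Start several threads that all update the same counter."""
    global counter
    counter = 0
    threads = [threading.Thread(target=deposit, args=(times,))
               for _ in range(n_workers)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()            # wait for every worker to finish
    return counter


if __name__ == "__main__":
    print(run_workers())    # 4 workers x 10_000 increments each
```

Because every increment happens while the lock is held, no update is lost and the final count equals the number of workers multiplied by the number of increments.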

For process management, the operating system is responsible for the following tasks:

- Scheduling processes and threads on the CPU.

- Creating and deleting both user and system processes.

- Suspending and resuming processes.

- Providing synchronization methods for processes.

- Providing communication mechanisms for processes.
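
From a user program's point of view, several of these services can be seen in a few lines. The sketch below asks the operating system to create a child process, passes it a message, reads its reply through a pipe, and waits for it to terminate. It assumes a Python interpreter is available as `sys.executable`; the function name `run_child` and the child's one-line program are hypothetical.

```python
import subprocess
import sys


def run_child(message: str) -> tuple[int, str]:
    """Create a child process, wait for it to exit, return (exit code, output)."""
    # The child is a tiny program that upper-cases its first argument.
    child_code = "import sys; print(sys.argv[1].upper())"
    result = subprocess.run(
        [sys.executable, "-c", child_code, message],
        capture_output=True,   # the child's stdout is connected to a pipe
        text=True,
        check=False,
    )
    return result.returncode, result.stdout.strip()


if __name__ == "__main__":
    code, output = run_child("hello")
    print(code, output)
```

Here `subprocess.run` covers creation, communication (the captured output), and synchronization (it returns only after the child has exited).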

Characteristics of a Process

A process has the following characteristics:

- Process ID: A unique identifier assigned by the operating system.

- Process State: The current state of the process, such as ready, running, or waiting.

- CPU registers: For example, the program counter. CPU registers must be saved and restored when a process is switched off and back onto the CPU.

- Accounting information: Amount of CPU time used for process execution, time limits, execution ID, etc.

- I/O status information: For example, devices allocated to the process, open files, etc.

- CPU scheduling information: For example, priority. Different processes may have different priorities; for example, a shorter process is given higher priority in shortest job first scheduling.

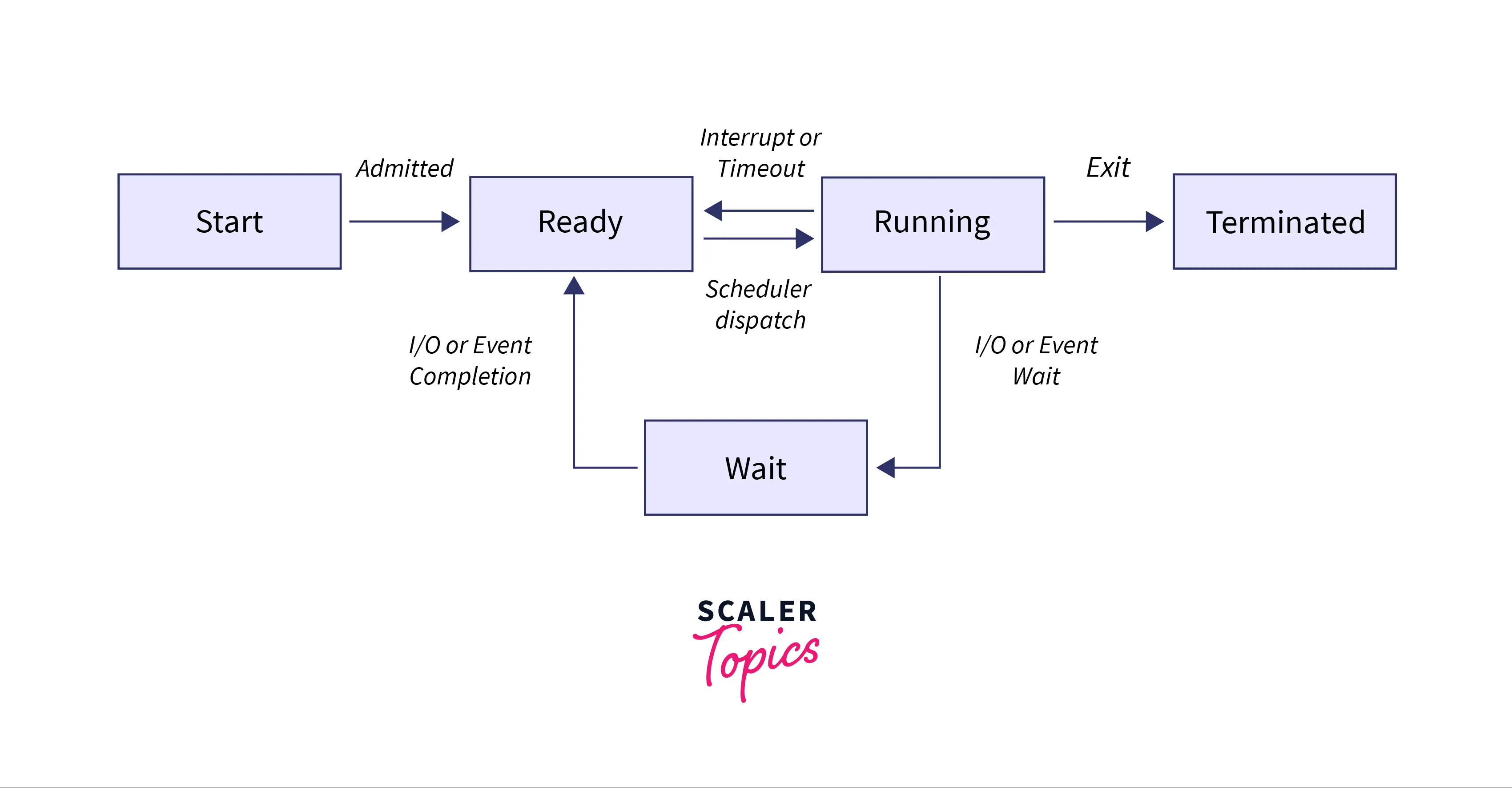
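
The fields listed above can be pictured as one record per process. The following is a simplified sketch of such a record, not the layout any real kernel uses; the class `PCB`, its field names, and the `process_table` dictionary are assumptions made for illustration.

```python
from dataclasses import dataclass, field
from enum import Enum


class State(Enum):
    NEW = "new"
    READY = "ready"
    RUNNING = "running"
    WAITING = "waiting"
    TERMINATED = "terminated"


@dataclass
class PCB:
    pid: int                                  # process ID
    state: State = State.NEW                  # process state
    program_counter: int = 0                  # saved CPU register
    registers: dict[str, int] = field(default_factory=dict)
    priority: int = 0                         # CPU scheduling information
    cpu_time_used: float = 0.0                # accounting information
    open_files: list[str] = field(default_factory=list)  # I/O status


# Hypothetical process table: the OS keeps one PCB per process, keyed by PID.
process_table: dict[int, PCB] = {}


def create_process(pid: int, priority: int = 0) -> PCB:
    """Allocate a PCB for a new process and register it in the table."""
    pcb = PCB(pid=pid, priority=priority)
    process_table[pid] = pcb
    return pcb


if __name__ == "__main__":
    print(create_process(1, priority=5))
```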

Process Life Cycle

A process state is the status of the process at a given point in time.

A process can be in one of the five states listed below at any one moment.

- Start: When a process is first created, it is in this state.

- Ready: The process is waiting for the operating system to assign it a processor so that it can run. A process enters this state after it has been started, or while it is running if the scheduler interrupts it to assign the CPU to another process.

- Running: Once the OS scheduler has allocated a CPU to the process, the process state is set to running and the processor begins to execute its instructions.

- Waiting: When a process needs to wait for a resource, such as user input or a file to become available, it enters the waiting state.

- Terminated or Exit: When a process completes its operation or is terminated by the operating system, it moves to the terminated state and awaits removal from memory.
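
The five states and the moves between them can be written down as a small state machine. This sketch encodes the transitions described in the list above; the names `ProcessState`, `ALLOWED`, and `transition` are hypothetical.

```python
from enum import Enum


class ProcessState(Enum):
    START = "start"
    READY = "ready"
    RUNNING = "running"
    WAITING = "waiting"
    TERMINATED = "terminated"


# Which states each state may move to, following the life cycle above.
ALLOWED = {
    ProcessState.START: {ProcessState.READY},
    ProcessState.READY: {ProcessState.RUNNING},
    ProcessState.RUNNING: {
        ProcessState.READY,        # interrupted by the scheduler
        ProcessState.WAITING,      # needs a resource such as I/O
        ProcessState.TERMINATED,   # finished or killed
    },
    ProcessState.WAITING: {ProcessState.READY},
    ProcessState.TERMINATED: set(),
}


def transition(current: ProcessState, new: ProcessState) -> ProcessState:
    """Return the new state, or raise ValueError if the move is not allowed."""
    if new not in ALLOWED[current]:
        raise ValueError(f"illegal transition: {current.name} -> {new.name}")
    return new


if __name__ == "__main__":
    state = ProcessState.START
    for nxt in (ProcessState.READY, ProcessState.RUNNING,
                ProcessState.WAITING, ProcessState.READY):
        state = transition(state, nxt)
        print(state.name)
```

Note that a waiting process cannot go straight back to running: it first returns to ready and must be chosen by the scheduler again.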

When Does Context Switching Happen?

Context switching is a fundamental concept in operating systems, and is necessary for multitasking and efficient resource management. In a multitasking operating system, multiple processes or threads can be running concurrently. The operating system uses context switching to switch between these processes or threads, so that each one can have a fair share of the CPU's time.

Context switching in OS involves saving and restoring the following information:

- The contents of the CPU's registers, which store the current state of the process or thread.

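- The process or thread's memory map, which maps virtual memory addresses to physical memory addresses. Threads of the same process share one memory map, so it changes only when the switch is between different processes.

- The stack pointer, which locates the process or thread's stack. The stack holds the function call frames and other information needed to resume execution.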
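
The save-and-restore step can be modelled with a toy CPU. This is only a simulation under simplifying assumptions: real context switches are done in kernel code and hardware, and the classes `Context`, `Task`, and `CPU` below are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class Context:
    pc: int = 0                               # program counter
    sp: int = 0                               # stack pointer
    regs: dict[str, int] = field(default_factory=dict)


@dataclass
class Task:
    name: str
    saved: Context = field(default_factory=Context)  # kept in the task's PCB


class CPU:
    def __init__(self) -> None:
        self.pc = 0
        self.sp = 0
        self.regs: dict[str, int] = {}
        self.current: Task | None = None

    def context_switch(self, next_task: Task) -> None:
        # 1. Save the state of the task that is leaving the CPU.
        if self.current is not None:
            self.current.saved = Context(self.pc, self.sp, dict(self.regs))
        # 2. Restore the state of the task that is about to run.
        self.pc = next_task.saved.pc
        self.sp = next_task.saved.sp
        self.regs = dict(next_task.saved.regs)
        self.current = next_task


if __name__ == "__main__":
    cpu = CPU()
    a = Task("A")
    b = Task("B", Context(pc=100, sp=8000, regs={"r0": 7}))
    cpu.context_switch(a)
    cpu.pc, cpu.regs["r0"] = 42, 1            # task A makes some progress
    cpu.context_switch(b)                     # A is saved, B is restored
    cpu.context_switch(a)                     # A resumes where it left off
    print(cpu.pc, cpu.regs)
```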


Context Switch vs User Switch

| Context Switch | User Switch |
|---|---|
| A context switch is the process of saving and restoring the state of a CPU for a different task or process. | A user switch refers to the act of a user manually switching from one task or application to another. |
| Involves the operating system, which manages CPU task scheduling and state switching. | User-initiated action without direct involvement of the operating system. |
| Occurs in response to various events such as timer interrupts, I/O operations, or process scheduling. | Initiated by a user's decision to change the task or application being used, typically through interactions with the desktop or software. |
| Frequent and automatic, as the OS handles context switches as part of multitasking. | User switches occur at the discretion of the user and are less frequent. |
| Necessary for multitasking to allow multiple processes to run concurrently on a single CPU. | Allows users to interact with different applications or tasks, facilitating multitasking from a human perspective. |
| Efficiently managed by the operating system to minimize overhead. | User switches involve a slight delay due to user interaction and application loading. |
| Context switch occurs when an OS switches between running processes to provide multitasking. | User switch occurs when a user toggles between open applications on a computer or mobile device. |

CPU-Bound vs I/O-Bound Processes

| CPU-Bound Process | I/O-Bound Process |
|---|---|
| Primarily involves computation and number crunching tasks that heavily utilize the CPU. | Involves tasks that frequently require waiting for input/output operations, such as reading/writing data from/to disk or network. |
| Intensively uses CPU resources but often leaves I/O resources underutilized. | Relies heavily on I/O resources, while CPU usage may be low during waiting periods. |
| Improved performance comes from CPU optimization, parallel processing, and algorithm enhancements. | Enhanced by optimizing I/O operations, reducing latency, and asynchronous processing. |
| Completion time is dominated by computation rather than by waiting. | Completion time is dominated by I/O wait times. |
| CPU-bound tasks can often be parallelized to take advantage of multi-core processors. | I/O-bound tasks can benefit from asynchronous operations to mitigate wait times. |
| CPU-bound tasks need long CPU bursts, which can delay other processes unless the scheduler preempts them. | I/O-bound tasks depend on efficient I/O operations and may be impacted by disk or network congestion. |
| Examples: scientific simulations, mathematical modeling, encryption/decryption tasks. | Examples: file or database operations, web scraping, network communication, data processing from external sources. |
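
The difference shows up when wall-clock time is compared with CPU time. In the sketch below, `time.sleep` stands in for waiting on a disk or network (an assumption made to keep the example self-contained), and the function names `measure`, `cpu_bound`, and `io_bound` are hypothetical.

```python
import time


def measure(task) -> tuple[float, float]:
    """Run `task` and return (wall-clock seconds, CPU seconds) it took."""
    wall_start = time.perf_counter()
    cpu_start = time.process_time()
    task()
    return (time.perf_counter() - wall_start,
            time.process_time() - cpu_start)


def cpu_bound() -> None:
    # Pure computation: the CPU is busy for the whole duration.
    sum(i * i for i in range(2_000_000))


def io_bound() -> None:
    # Stand-in for an I/O wait: the process is blocked, not computing.
    time.sleep(0.2)


if __name__ == "__main__":
    for task in (cpu_bound, io_bound):
        wall, cpu = measure(task)
        print(f"{task.__name__}: wall={wall:.3f}s cpu={cpu:.3f}s")
```

For the CPU-bound task the two figures are usually close to each other, while for the I/O-bound task the CPU time is a small fraction of the wall-clock time. Exact numbers depend on the machine.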

Scheduling Algorithms

Preemptive Scheduling Algorithms

In these algorithms, the operating system can take the CPU away from a running process. For example, when processes are assigned priorities and a high-priority process arrives, the lower-priority process that has occupied the CPU is preempted. That is, it releases the CPU, and the high-priority process takes the CPU for its execution.

Some of the preemptive algorithms are:

- Shortest Remaining Time First (SRTF): Preemptively selects the process with the shortest remaining execution time, allowing new processes to take over if they are shorter.

- Round Robin (RR): Preemptively allocates fixed time slices to processes, enabling the CPU to switch between tasks.
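
Both algorithms can be simulated in a few lines. The sketch below is a simplified model: the round robin function assumes every process arrives at time 0, the SRTF function advances one time unit per step and assumes positive burst times, and context-switch cost is ignored. The function names and the sample workloads are hypothetical.

```python
from collections import deque


def round_robin(bursts: dict[str, int], quantum: int) -> dict[str, int]:
    """Return the completion time of each process (all arrive at time 0)."""
    remaining = dict(bursts)
    queue = deque(bursts)              # ready queue, in arrival order
    clock = 0
    completion: dict[str, int] = {}
    while queue:
        name = queue.popleft()
        run = min(quantum, remaining[name])
        clock += run
        remaining[name] -= run
        if remaining[name] > 0:
            queue.append(name)         # time slice used up: back of the queue
        else:
            completion[name] = clock
    return completion


def srtf(procs: dict[str, tuple[int, int]]) -> dict[str, int]:
    """procs maps name -> (arrival time, burst time); returns completion times."""
    remaining = {name: burst for name, (_, burst) in procs.items()}
    completion: dict[str, int] = {}
    clock = 0
    while len(completion) < len(procs):
        ready = [n for n in procs
                 if procs[n][0] <= clock and n not in completion]
        if not ready:
            clock += 1                 # CPU idle until the next arrival
            continue
        # Re-evaluated every time unit, so a shorter newcomer preempts.
        name = min(ready, key=lambda n: remaining[n])
        remaining[name] -= 1
        clock += 1
        if remaining[name] <= 0:
            completion[name] = clock
    return completion


if __name__ == "__main__":
    print(round_robin({"P1": 24, "P2": 3, "P3": 3}, quantum=4))
    # → {'P2': 7, 'P3': 10, 'P1': 30}
    print(srtf({"P1": (0, 8), "P2": (1, 4), "P3": (2, 9), "P4": (3, 5)}))
    # → {'P2': 5, 'P4': 10, 'P1': 17, 'P3': 26}
```

In the SRTF run, P1 starts first but is preempted at time 1 when the shorter P2 arrives, which is exactly the behaviour the description above refers to.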

Non-Preemptive Scheduling Algorithms

In these algorithms, we cannot preempt the process. That is, once a process is running on the CPU, it keeps the CPU until it releases it voluntarily, either by terminating or by moving to the waiting state (for example, to wait for I/O). These algorithms are often used when the hardware lacks the support, such as a timer interrupt, that preemption requires.

Some of the non-preemptive algorithms are:

- First-Come, First-Served (FCFS): Executes processes in the order they arrive without interruption.

- Shortest Job First (SJF): Selects the ready process with the shortest execution time and runs it to completion without preempting it.
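
The effect of the two policies on waiting time can be compared with a short simulation. This is a sketch under simplifying assumptions (burst times are known in advance, and switching cost is ignored); the function names and the sample workload are hypothetical.

```python
def fcfs(procs: list[tuple[str, int, int]]) -> dict[str, int]:
    """procs holds (name, arrival, burst); returns waiting time per process."""
    clock = 0
    waiting: dict[str, int] = {}
    for name, arrival, burst in sorted(procs, key=lambda p: p[1]):
        clock = max(clock, arrival)    # CPU may sit idle until the arrival
        waiting[name] = clock - arrival
        clock += burst                 # runs to completion, no preemption
    return waiting


def sjf(procs: list[tuple[str, int, int]]) -> dict[str, int]:
    """Non-preemptive shortest job first; returns waiting time per process."""
    pending = sorted(procs, key=lambda p: p[1])
    clock = 0
    waiting: dict[str, int] = {}
    while pending:
        ready = [p for p in pending if p[1] <= clock]
        if not ready:
            clock = pending[0][1]      # jump ahead to the next arrival
            continue
        job = min(ready, key=lambda p: p[2])   # shortest burst among ready
        pending.remove(job)
        name, arrival, burst = job
        waiting[name] = clock - arrival
        clock += burst                 # runs to completion, no preemption
    return waiting


if __name__ == "__main__":
    jobs = [("P1", 0, 24), ("P2", 0, 3), ("P3", 0, 3)]
    print(fcfs(jobs))   # → {'P1': 0, 'P2': 24, 'P3': 27}
    print(sjf(jobs))    # → {'P2': 0, 'P3': 3, 'P1': 6}
```

With this workload the average waiting time drops from 17 time units under FCFS to 3 under SJF, because the two short jobs no longer queue behind the long one.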


Advantages and Disadvantages Of Processor Management In Operating System

Advantages

- Processor management ensures that the CPU is efficiently utilized by allocating tasks in a manner that minimizes idle time and maximizes throughput.

- By prioritizing interactive or high-priority processes, processor management can enhance system responsiveness, making it more user-friendly.

- Process scheduling algorithms ensure that CPU time is distributed fairly among competing processes, preventing any single process from monopolizing system resources.

- Processor management allows the OS to support multitasking, enabling users to run multiple applications simultaneously, which is essential for modern computing.

- Real-time operating systems use processor management to ensure that critical tasks meet their deadlines, making them suitable for time-sensitive applications like embedded systems or robotics.

Disadvantages

- Managing the CPU efficiently can be complex, especially when dealing with a large number of processes and different scheduling criteria. This complexity can lead to potential errors in scheduling.

- The act of switching between processes (context switching) comes with overhead. Excessive context switching can reduce overall system performance.

- Poor scheduling algorithms can lead to process starvation, where some processes never get CPU time, impacting their execution.

- In some cases, high-priority processes may be delayed due to lower-priority processes holding resources they need, causing priority inversion.

- If not managed effectively, processor management can lead to unpredictable system behavior, making it challenging to ensure consistent and reliable system performance.

- Contentions for CPU resources may occur when multiple processes are competing for CPU time. This can lead to delays and increased response times for some processes.

Conclusion

- This article discussed what a process is and what process management in an OS involves.

- The article covered the process life cycle and the different states a process passes through.

- The article also explains the importance of process management in OS.