Data Augmentations in PyTorch

Overview

Data augmentation is one of the key techniques in modern data science and machine learning. In this article, we will learn various data augmentation techniques and how to apply them using PyTorch.

Introduction

Increasing the amount of training data is one of the easiest ways to boost a model's performance. However, collecting a lot of good, clean data is difficult, especially for niche domains and models. Besides adding data, other approaches such as hyperparameter tuning can also improve performance. But there is a trick for increasing the data itself, called data augmentation, which transforms existing data to create new data. This technique can be applied to many kinds of data, such as speech, images, and text. So, without further ado, let's dive into the details.

What is Data Augmentation?

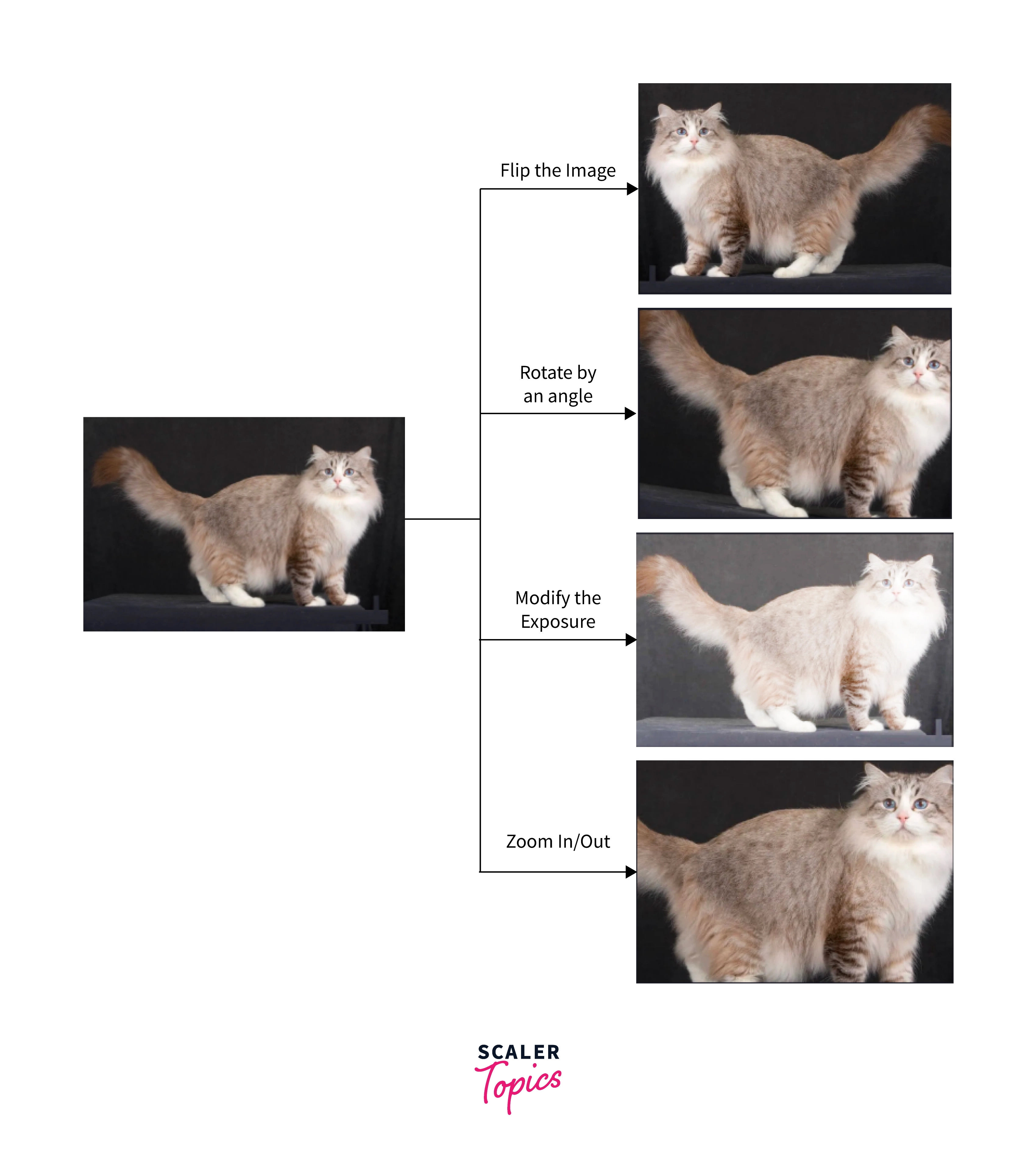

Data augmentation is a technique that increases the amount of data by adding artificial data created as modified versions of existing data. Let's understand this through an example. Consider an image of a cat. By augmenting it, you can create many more versions of the same image. Fig 1 above shows some altered images that can be used for training the model. (One catch is that the augmented images should keep the same dimensions as the original, because most machine learning/deep learning models expect a fixed input image size.)

Need for Data Augmentation

Deep learning models are generally data hungry: the more good data we feed them, the better they tend to perform. Data augmentation creates new data by modifying existing data, which reduces the time and cost of collecting new data. It also gives us a more diverse dataset built from the data we already have, as we can see in Fig 1. Image data, specifically, can be augmented by changing color, exposure, rotation, crop, scale, noise, and so on. We will limit our discussion to image-based augmentation in this article, as it is easier to interpret and learn.

Some Python Libraries for Data Augmentation

Since images are just arrays of pixels, augmentation can be done manually by altering pixel values with a bit of Python scripting. But several libraries handle this for us with ease, so instead of reinventing the wheel, let's explore them.
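To illustrate what "altering pixel values with some scripting" means, here is a minimal sketch using NumPy, assuming the image is stored as an H x W x C `uint8` array. The helper names `manual_horizontal_flip` and `manual_brightness` are hypothetical, and the random array simply stands in for a real photo.

```python
import numpy as np

def manual_horizontal_flip(image):
    """Mirror an H x W x C image left to right by reversing the column order."""
    return image[:, ::-1, :]

def manual_brightness(image, delta):
    """Add a constant to every pixel value and clip back to the valid 0-255 range."""
    shifted = image.astype(np.int16) + delta  # widen the type so values above 255 don't wrap around
    return np.clip(shifted, 0, 255).astype(np.uint8)

rng = np.random.default_rng(0)
image = rng.integers(0, 256, size=(4, 6, 3), dtype=np.uint8)  # stand-in for a real photo

flipped = manual_horizontal_flip(image)
brighter = manual_brightness(image, 40)
print(flipped.shape, brighter.dtype)
```

This works, but every new augmentation (rotation, perspective, cropping with padding) needs its own careful indexing and interpolation code, which is exactly what the libraries below provide.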

1. Albumentations

Albumentations is a fast, flexible, and easy-to-use Python library for image augmentation. See its GitHub repository for further details.

2. Imgaug

Imgaug is a Python library for image augmentation that is useful in data science/machine learning experiments. It offers a wide range of augmentation techniques, lets us combine them and apply them to batches of images in random order, and can run on multiple CPU cores. It can also augment heatmaps, bounding boxes, keypoints (landmarks), and segmentation maps alongside the images. See its GitHub repository for further details.

3. Torchvision

Torchvision is a Python library that is part of the PyTorch project, so it integrates smoothly with PyTorch when training models. It supports a large number of image augmentation operations. See its GitHub repository for further details. In the next section, we will explore most of its functionality and code in detail.

How to Augment Images Using Transforms?

So far, we have covered the basics of augmentation and some Python libraries. Now let's dive into the types of augmentation, implement them with the torchvision library, and visualize the results.

The generic structure of the code to apply the transformations is:

- Define the transformation pipeline

- Use that in dataset/dataloader

First, we will discuss different types of augmentations that are useful in many projects. Next, we will see complete code that applies all the transformations we have learned using PyTorch. Finally, we will use the Albumentations and Torchvision libraries as examples to learn how to augment.

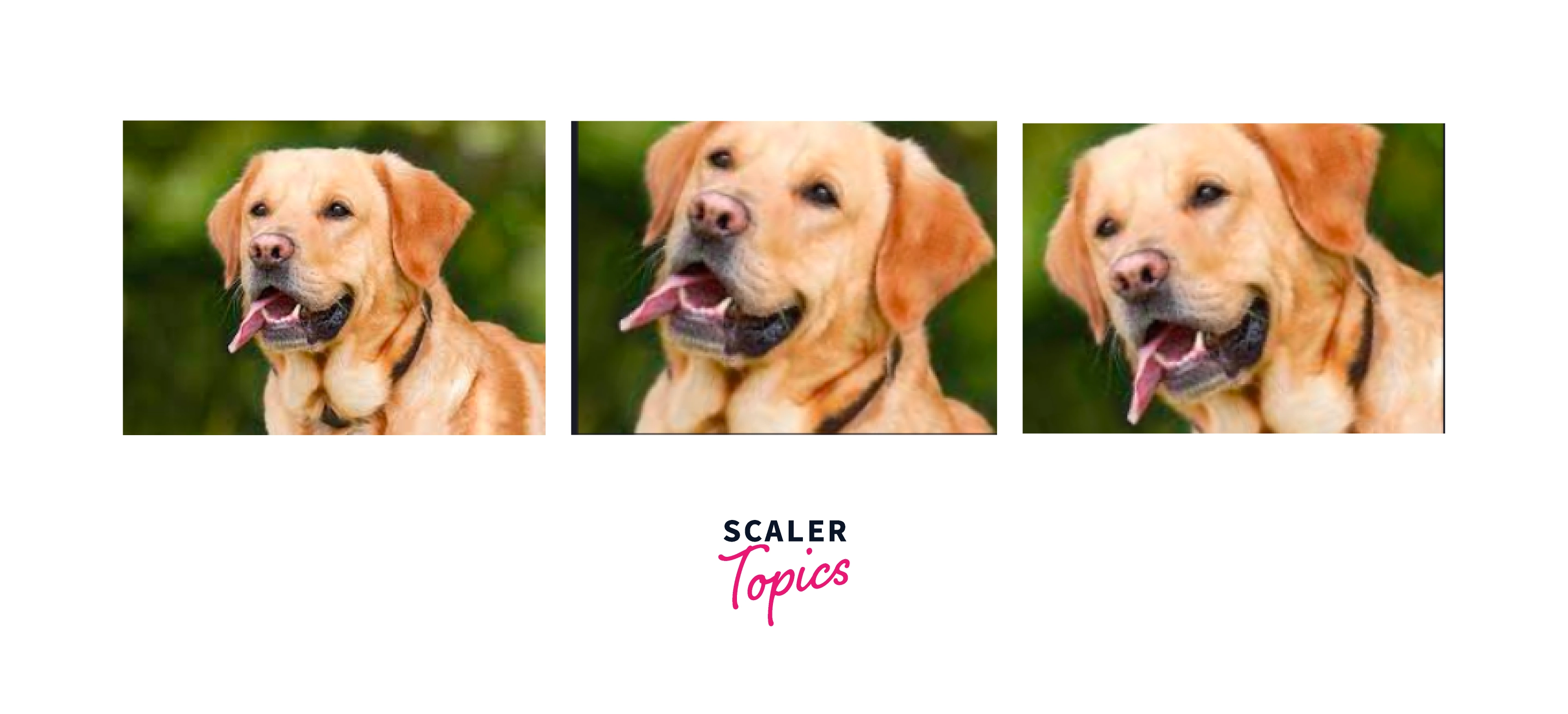

a. RandomCrop

This transformation crops the image at a random location to a given size, specified as (height, width) or as a single int for a square crop. It also takes an optional padding parameter, which pads the image borders (with 0, i.e., black, by default) before cropping. RandomCrop helps the model handle images in which the main subject is only partly captured, as shown in the image below.

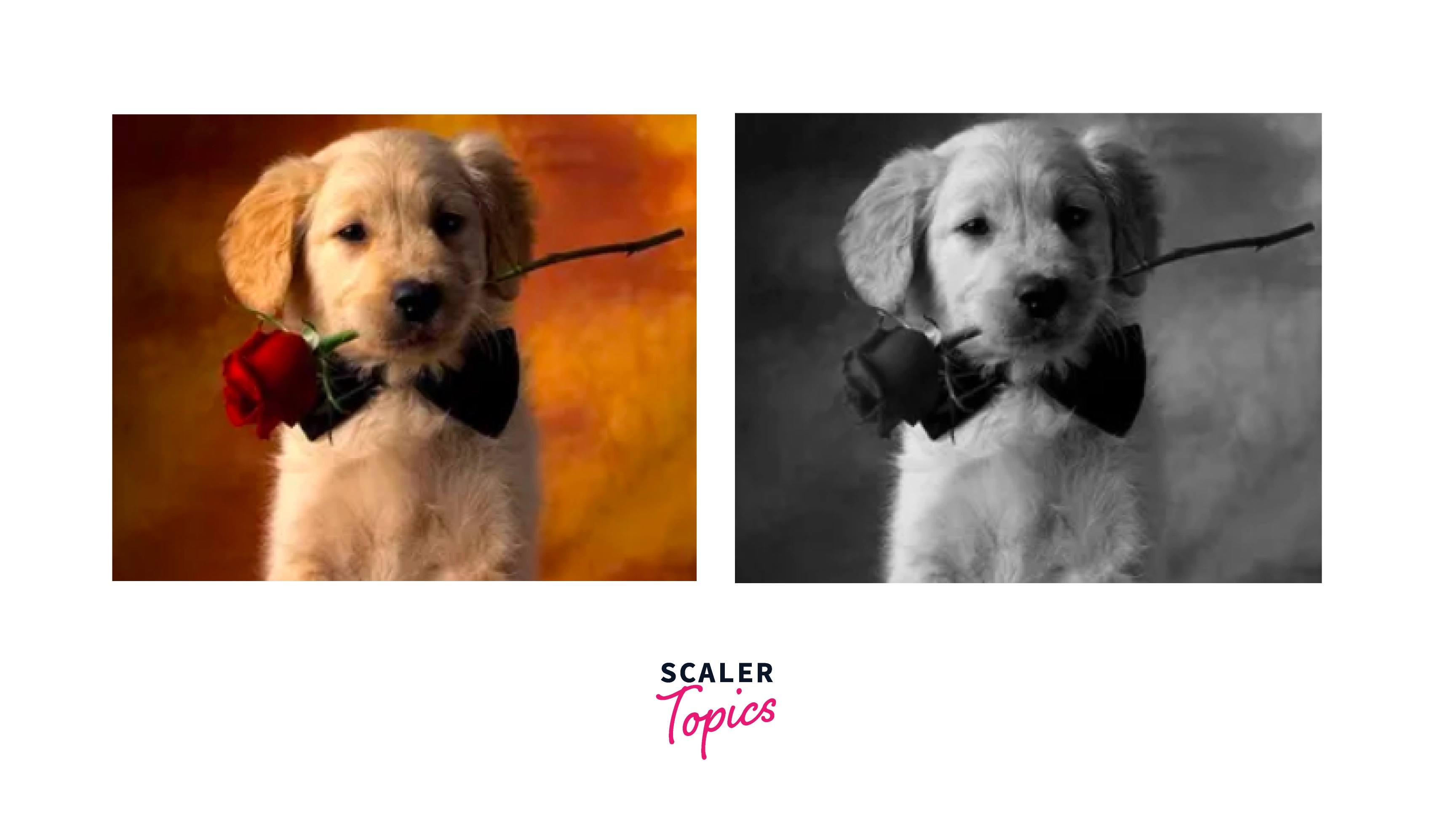

b. RandomGrayscale

RandomGrayscale randomly converts the image to grayscale with probability p (0.1 by default). A 3-channel input stays 3-channel, with all channels holding the same gray values. This helps the model cope with grayscale images and rely less on color.

c. RandomHorizontalFlip

This transformation randomly flips the image horizontally with a given probability (0.5 by default). It reduces the model's bias toward one left/right orientation of objects, since a mirrored cat is still a cat.

d. RandomPerspective

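RandomPerspective applies a random perspective transformation to the image with a given probability p (0.5 by default); the distortion_scale parameter controls how strong the distortion can be. This helps the model see objects from different viewpoints. The empty area left around the warped image is filled with 0 (black) by default.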

e. RandomRotation

RandomRotation rotates the image by a random angle drawn from a given range: degrees=30 means any angle between -30 and +30 degrees. This transformation helps the model learn from images taken at various angles.

f. Resize

Resize resizes the input image to the given size. Most models expect a fixed image size, so this transformation converts images of any size to the required one. (When the size is given as a (height, width) pair, the image can be warped, because its aspect ratio may change.)

g. Scale

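Scale is an approach to increase or decrease the image size. It is generally used when the model does not require a fixed image size. (This doesn't warp the image; it keeps the aspect ratio the same.) Older torchvision releases had a transforms.Scale class for this; it was deprecated in favor of Resize and has since been removed. Passing a single int to Resize gives the same aspect-ratio-preserving behavior.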

h. Normalize

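Normalize standardizes each channel of a float tensor image using the given mean and standard deviation: output[channel] = (input[channel] - mean[channel]) / std[channel]. It does not make the data normally distributed; it only shifts and scales each channel, typically toward zero mean and unit variance. This transformation does not support PIL images, so it must come after ToTensor in a pipeline. Inputs on a consistent, small scale generally make training more stable and faster.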

i. ToTensor

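ToTensor converts a PIL Image or a uint8 numpy.ndarray in H x W x C layout with values in [0, 255] into a float tensor in C x H x W layout with values in [0.0, 1.0]. Because PyTorch models operate on tensors, this conversion (or an equivalent one) is a necessary step before training.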

Let's combine all the things we have learned and apply them to a small dataset. We will be using torchvision in this code.

Next, we train the model with a data loader. The augmentations are applied each time a sample is loaded, so every epoch sees a freshly augmented version of each image.

Conclusion

Here are some takeaways from the article:

- Data augmentation is an approach used to increase the amount of data by adding artificial data.

- Data augmentation reduces the time and cost of collecting new data and diversifies the dataset using the existing data.

- Many libraries support data augmentation; Albumentations and Torchvision are popular ones.

- There are a lot of other approaches to augment the data that can be useful for specific applications. In this article, we have explored the generic techniques used in deep learning projects.