Aurora Auto Scaling in AWS

Overview

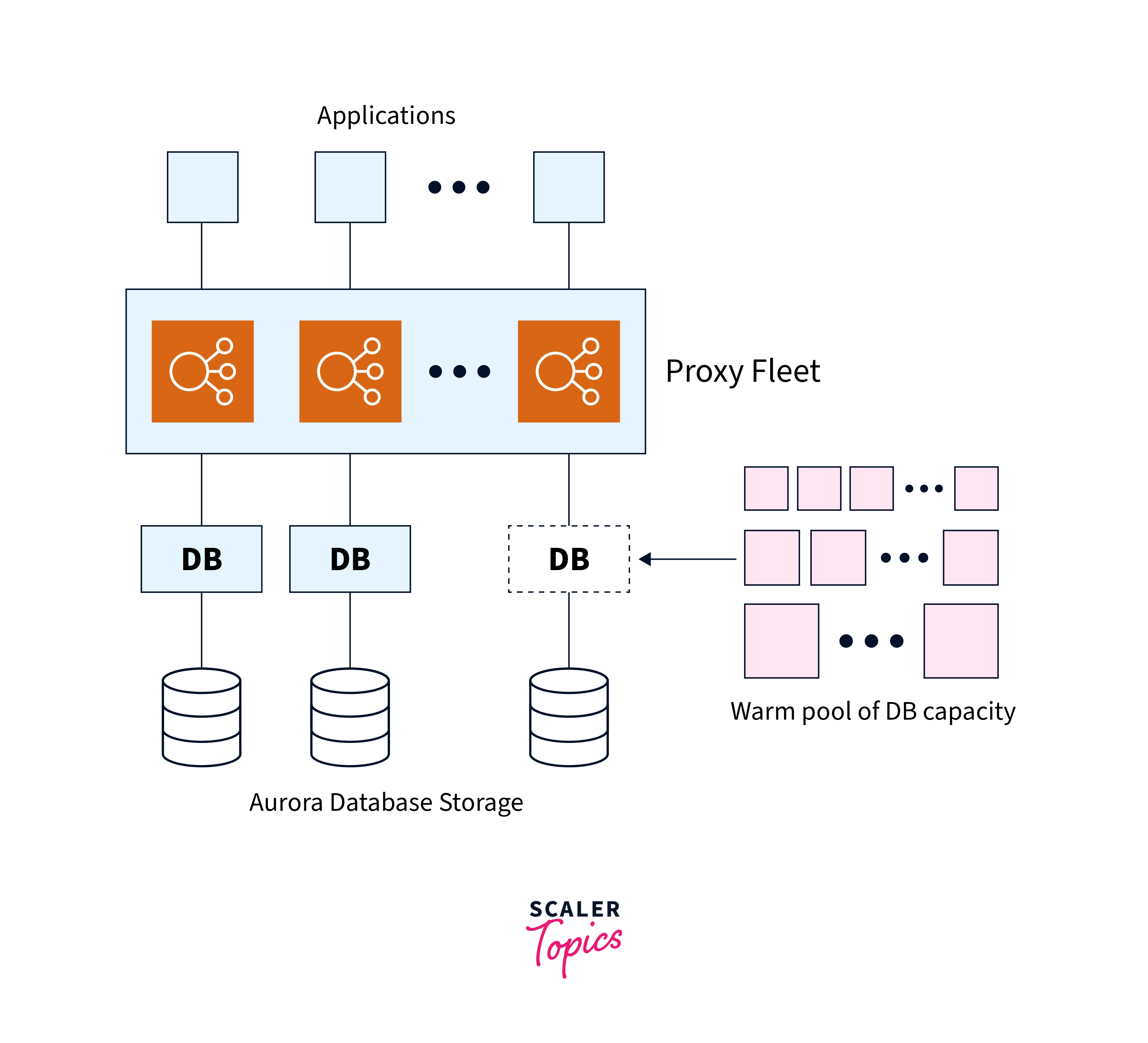

Amazon Aurora Serverless offers a reasonably easy, affordable option for infrequent, intermittent, or unpredictable workloads. It does this by starting up automatically, scaling compute capacity to match your application's usage, and shutting down when it is no longer needed. Aurora Serverless gives you only one endpoint, as opposed to the two endpoints of a standard provisioned Aurora DB cluster. This endpoint is essentially a DNS record that points to a fleet of proxies sitting on top of the database instance. From the MySQL server's perspective, this means connections always appear to come from the proxy fleet.

Introduction to Aurora Auto Scaling

Aurora Auto Scaling dynamically adjusts the number of Aurora Replicas provisioned for an Aurora DB cluster that uses single-master replication, matching it to your connectivity and workload needs. Aurora Auto Scaling is available for both Aurora MySQL and Aurora PostgreSQL. It lets your Aurora DB cluster handle sudden increases in connectivity or workload. When connectivity or workload decreases, Aurora Auto Scaling removes unneeded Aurora Replicas so that you don't pay for unused provisioned DB instances. You define a scaling policy and apply it to an Aurora DB cluster. The scaling policy defines the minimum and maximum number of Aurora Replicas that Aurora Auto Scaling can manage. Based on the policy, Aurora Auto Scaling adjusts the number of Aurora Replicas up or down in response to actual workloads, which are determined by using Amazon CloudWatch metrics and target values.

You can use the AWS Management Console to apply a scaling policy based on a predefined metric. Alternatively, you can use the AWS CLI or the Application Auto Scaling API to apply a scaling policy based on a predefined or custom metric.

At the time of writing, Aurora Serverless supported only MySQL 5.6. To use it, you specify the minimum and maximum capacity units for the DB cluster. Each capacity unit corresponds to a specific compute and memory configuration. As the workload changes, Aurora Serverless scales the cluster's resources up or down within these limits: capacity can increase as far as the maximum and decrease as far as the minimum.

The cluster automatically scales up if either of the following conditions is met:

- CPU utilization is above 70%, OR

- More than 90% of connections are in use.

The cluster automatically scales down only if both of the following conditions are met:

- Less than 40% of connections are in use, AND

- CPU utilization is below 30%.
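The rules above can be expressed as a small decision function. This is a minimal, illustrative model assuming both inputs are percentages between 0 and 100; the function name serverless_scaling_direction is hypothetical, and Aurora Serverless evaluates these conditions internally rather than through any API you call.

```python
def serverless_scaling_direction(cpu_percent: float, connections_used_percent: float) -> str:
    """Hypothetical model of the Aurora Serverless thresholds: "up", "down", or "hold"."""
    # Scale up if EITHER condition holds.
    if cpu_percent > 70 or connections_used_percent > 90:
        return "up"
    # Scale down only if BOTH conditions hold.
    if cpu_percent < 30 and connections_used_percent < 40:
        return "down"
    return "hold"


print(serverless_scaling_direction(75, 20))  # up: CPU above 70%
print(serverless_scaling_direction(20, 35))  # down: both below the scale-down limits
print(serverless_scaling_direction(50, 50))  # hold
```

Note the asymmetry: a single busy signal is enough to scale up, while scaling down needs both signals to be quiet.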

Some important details about how Aurora Serverless scaling works:

- It scales up only when it detects performance issues that scaling up can resolve.

- After scaling up, it cannot scale down for 15 minutes.

- After a scale-down, there is a cooldown of 310 seconds before it can scale down again.

- When there are no connections for five minutes, capacity scales to zero, provided pausing is enabled.
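The timing rules above can be sketched the same way. This is a hypothetical model, assuming timestamps in seconds on a single clock; the constant and function names are illustrative, and the pause check assumes the optional pause setting is turned on.

```python
SCALE_UP_TO_DOWN_COOLDOWN_S = 15 * 60  # no scale-down within 15 minutes of a scale-up
SCALE_DOWN_COOLDOWN_S = 310            # gap between consecutive scale-downs
PAUSE_AFTER_IDLE_S = 5 * 60            # five minutes without connections


def may_scale_down(now_s, last_scale_up_s=None, last_scale_down_s=None):
    """Hypothetical check of the documented cooldowns; None means "never happened"."""
    if last_scale_up_s is not None and now_s - last_scale_up_s < SCALE_UP_TO_DOWN_COOLDOWN_S:
        return False
    if last_scale_down_s is not None and now_s - last_scale_down_s < SCALE_DOWN_COOLDOWN_S:
        return False
    return True


def should_pause(idle_s, pause_enabled=True):
    """Scale to zero only if pausing is enabled and the cluster has been idle long enough."""
    return pause_enabled and idle_s >= PAUSE_AFTER_IDLE_S


print(may_scale_down(now_s=600, last_scale_up_s=0))   # False: only 10 minutes since scale-up
print(may_scale_down(now_s=1000, last_scale_up_s=0))  # True
print(should_pause(idle_s=300))                       # True
```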

By default, Aurora Serverless scales automatically without interrupting any active database connections to the server. To do this, it looks for a scaling point (a point in time at which the database can safely start the scaling operation). However, Aurora Serverless might be unable to find a scaling point in the following cases:

- Long-running queries or transactions are in progress.

- Temporary tables or table locks are in use.

In either of these cases, Aurora Serverless keeps trying to find a scaling point so that it can start the scaling operation, unless 'Force Scaling' is enabled. It keeps doing so for as long as the DB cluster needs to be scaled.

Before you can use Aurora Auto Scaling with an Aurora DB cluster, you must first create the cluster with a primary instance and at least one Aurora Replica. Aurora Auto Scaling manages Aurora Replicas, but the Aurora DB cluster must start with at least one Aurora Replica.

Aurora Auto Scaling scales a DB cluster only when all of its Aurora Replicas are in the available state. If any Aurora Replica is not available, Aurora Auto Scaling waits until the whole DB cluster is ready for scaling.
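A hypothetical readiness check that mirrors these two prerequisites (at least one Aurora Replica, and every replica available) might look like this. It assumes you already have the replicas' status strings, for example from the DBInstanceStatus field returned by the RDS DescribeDBInstances API; the function name is illustrative.

```python
def ready_for_scaling(replica_statuses):
    """Hypothetical check: may Aurora Auto Scaling change the replica count right now?

    replica_statuses holds one status string per Aurora Replica,
    for example ["available", "creating"].
    """
    if not replica_statuses:
        # The cluster must start with at least one Aurora Replica.
        return False
    # Every replica must be "available"; otherwise Aurora Auto Scaling waits.
    return all(status == "available" for status in replica_statuses)


print(ready_for_scaling(["available", "available"]))  # True
print(ready_for_scaling(["available", "creating"]))   # False
```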

When Aurora Auto Scaling adds a new Aurora Replica, the new replica uses the same DB instance class as the primary instance. For more information about DB instance classes, see Aurora DB instance classes. By default, new Aurora Replicas are also assigned the lowest promotion priority, which is tier 15.

As a result, during a failover, a replica with a higher priority, such as one created manually, is promoted first. Aurora Auto Scaling removes only the replicas that it created itself.
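The promotion and removal rules can be illustrated with a small model. The Replica class and its size_rank field are hypothetical stand-ins; the tie-breaker (when tiers are equal, the larger replica is promoted) follows AWS's documented failover behaviour, but this is a sketch, not how RDS actually implements failover.

```python
from dataclasses import dataclass


@dataclass
class Replica:
    name: str
    promotion_tier: int           # 0 = highest priority, 15 = lowest
    size_rank: int                # hypothetical: bigger number = bigger instance size
    created_by_autoscaling: bool


def failover_target(replicas):
    """Replica promoted first: lowest tier wins, then the largest size (simplified)."""
    return min(replicas, key=lambda r: (r.promotion_tier, -r.size_rank))


def removable_on_scale_in(replicas):
    """Aurora Auto Scaling only removes replicas that it created itself."""
    return [r for r in replicas if r.created_by_autoscaling]


replicas = [
    Replica("manual-reader", promotion_tier=1, size_rank=2, created_by_autoscaling=False),
    Replica("autoscaled-reader", promotion_tier=15, size_rank=2, created_by_autoscaling=True),
]
print(failover_target(replicas).name)                     # manual-reader
print([r.name for r in removable_on_scale_in(replicas)])  # ['autoscaled-reader']
```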

To benefit from Aurora Auto Scaling, your applications must be able to connect to the new Aurora Replicas. We recommend using the Aurora reader endpoint for this. For Aurora MySQL, you can also use a driver such as the AWS JDBC Driver for MySQL.
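As a sketch of where the reader endpoint comes from, the function below extracts it from a response shaped like the one returned by boto3's rds.describe_db_clusters(). The function name and the 3306 fallback port (the usual Aurora MySQL default) are assumptions, and a hand-written fake response is used so the example runs without AWS credentials.

```python
def reader_endpoint(describe_response, default_port=3306):
    """Return "host:port" for the first cluster in a describe_db_clusters() response."""
    cluster = describe_response["DBClusters"][0]
    # The reader endpoint spreads connections across the cluster's Aurora Replicas.
    return f"{cluster['ReaderEndpoint']}:{cluster.get('Port', default_port)}"


# Real usage (needs boto3 and AWS credentials):
#   import boto3
#   response = boto3.client("rds").describe_db_clusters(DBClusterIdentifier="myscalablecluster")
#   print(reader_endpoint(response))

# Offline example with a fake response (hypothetical host name):
fake = {"DBClusters": [{"ReaderEndpoint": "reader.example.internal", "Port": 3306}]}
print(reader_endpoint(fake))  # reader.example.internal:3306
```

Because replicas added by Aurora Auto Scaling join the reader endpoint automatically, an application that reads through it does not need to know about individual replica host names.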

How Aurora Auto Scaling works in the background

When you create a new autoscaling policy for Aurora, the service-linked role AWSServiceRoleForApplicationAutoScaling_RDSCluster is used. For autoscaling to scale up and down, you must specify a target metric. Suppose you choose CPU utilization as the target metric. The autoscaling policy creates CloudWatch alarms for that metric, and when the predetermined threshold is breached, an alarm triggers the policy to scale up or down. Keep in mind that new read replicas are spun up one at a time, so your configuration must allow for this.

Configuring the autoscaling policy

You can set the maximum number of read replicas that Aurora Auto Scaling maintains to any value from 0 to 15. The minimum number must also be between 0 and 15, and it must be less than or equal to the maximum.
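A minimal validation sketch for these limits follows. It encodes the rule as AWS documents it: both values from 0 to 15, with the minimum allowed to equal the maximum. The function name is hypothetical.

```python
def validate_capacity(min_replicas: int, max_replicas: int) -> None:
    """Raise ValueError if the replica limits break the documented rules (sketch)."""
    for label, value in (("minimum", min_replicas), ("maximum", max_replicas)):
        if not 0 <= value <= 15:
            raise ValueError(f"{label} must be between 0 and 15, got {value}")
    if min_replicas > max_replicas:
        raise ValueError("minimum must be less than or equal to maximum")


validate_capacity(1, 8)  # passes silently
validate_capacity(2, 2)  # equal limits are allowed
```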

Make sure you set appropriate cooldown periods. A cooldown prevents scale-in and scale-out events from being triggered in rapid succession. For example, if you set the policy's scale-in cooldown period to 120 seconds, no further scale-in activity occurs until those 120 seconds have passed. This lets you control how autoscaling behaves. A cooldown period is important for preventing repeated scale-in or scale-out triggers, especially because creating and removing instances takes time. By default, the cooldown period is 300 seconds for both scale-in and scale-out operations.

Aurora Auto Scaling Policies

Aurora Auto Scaling uses a scaling policy to adjust the number of Aurora Replicas in an Aurora DB cluster. Aurora Auto Scaling has the following components:

- A service-linked role

- A target metric

- Minimum and maximum capacity

- A cooldown period

Service-linked role

Aurora Auto Scaling uses the service-linked role AWSServiceRoleForApplicationAutoScaling_RDSCluster.

Application Auto Scaling uses service-linked roles to obtain the permissions it needs to call other AWS services on your behalf. A service-linked role is a unique type of AWS Identity and Access Management (IAM) role that is linked directly to an AWS service. Because only the linked service can assume a service-linked role, these roles provide a secure way to delegate permissions to AWS services.

Application Auto Scaling creates service-linked roles for the services that integrate with it. There is one service-linked role for each service, and each service-linked role trusts the specified service principal to assume it.

Application Auto Scaling includes all the necessary permissions for each service-linked role. These managed permissions, which Application Auto Scaling creates and maintains, define the allowed actions for each resource type.

Before a service-linked role for Application Auto Scaling can be created, edited, or deleted, you must grant permissions that allow an IAM entity (such as a user, group, or role) to do so.

Target Metric

In a target-tracking scaling policy configuration, you specify a predefined or custom metric and a target value for that metric. CloudWatch alarms that Aurora Auto Scaling creates and manages trigger the scaling policy, which calculates the scaling adjustment based on the metric and the target value. The scaling policy adds or removes Aurora Replicas as needed to keep the metric at, or close to, the specified target value. Besides keeping the metric close to the target, a target-tracking scaling policy also adjusts to fluctuations in the metric caused by a changing workload. Such a policy also minimizes rapid changes in the number of Aurora Replicas available to your DB cluster. For example, consider a scaling policy that uses the predefined average CPU utilization metric. Such a policy can keep CPU utilization at, or close to, a specified target value such as 40%.
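To build intuition for how target tracking pulls the metric back toward the target, here is a simplified proportional estimate. This is an assumption for illustration only: AWS does not publish the exact algorithm, and the real policy also involves CloudWatch alarms and cooldown periods. The function name is hypothetical.

```python
import math


def estimate_replicas(current: int, metric_value: float, target_value: float,
                      min_replicas: int, max_replicas: int) -> int:
    """Simplified proportional model of target tracking (not AWS's actual algorithm).

    If an average metric (for example, reader CPU %) is spread evenly across replicas,
    scaling the replica count by metric/target moves the average back toward the target.
    """
    if target_value <= 0:
        raise ValueError("target_value must be positive")
    desired = math.ceil(current * metric_value / target_value)
    # Never go outside the configured minimum and maximum capacity.
    return max(min_replicas, min(max_replicas, desired))


# Two replicas averaging 80% CPU against a 40% target.
print(estimate_replicas(current=2, metric_value=80, target_value=40,
                        min_replicas=1, max_replicas=8))  # 4
```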

Minimum and Maximum Capacity

You can specify the maximum number of Aurora Replicas that Application Auto Scaling can manage. This value must be from 0 to 15 and must be greater than or equal to the minimum number of Aurora Replicas. You can also specify the minimum number of Aurora Replicas that Application Auto Scaling must manage. This value must be from 0 to 15 and must be less than or equal to the maximum number of Aurora Replicas.

Cooldown Period

You can tune the responsiveness of a target-tracking scaling policy by adding cooldown periods that affect scaling your Aurora DB cluster in and out. A cooldown period blocks subsequent scale-in or scale-out requests until the period expires. These blocks slow down the deletion of Aurora Replicas for scale-in requests and the creation of Aurora Replicas for scale-out requests.

You can specify the following cooldown periods:

- A scale-in activity reduces the number of Aurora Replicas in your Aurora DB cluster. The scale-in cooldown period specifies how long, in seconds, must pass after a scale-in activity completes before another scale-in activity can start.

- A scale-out activity increases the number of Aurora Replicas in your Aurora DB cluster. The scale-out cooldown period specifies how long, in seconds, must pass after a scale-out activity completes before another scale-out activity can start.
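A simplified sketch of these two cooldowns follows, in which each cooldown only blocks later activities in its own direction. The function name and the dict of completion times are hypothetical, and the 300-second default mirrors the default cooldown mentioned earlier in this article.

```python
DEFAULT_COOLDOWN_S = 300  # default for both scale-in and scale-out


def cooldown_elapsed(direction, now_s, last_completed_s,
                     scale_in_cooldown_s=DEFAULT_COOLDOWN_S,
                     scale_out_cooldown_s=DEFAULT_COOLDOWN_S):
    """Return True if a new activity in `direction` ("in" or "out") may start.

    last_completed_s maps a direction to the completion time (in seconds) of the
    most recent activity in that direction; a missing key means none has happened.
    """
    if direction not in ("in", "out"):
        raise ValueError("direction must be 'in' or 'out'")
    last = last_completed_s.get(direction)
    if last is None:
        return True
    cooldown = scale_in_cooldown_s if direction == "in" else scale_out_cooldown_s
    return now_s - last >= cooldown


# A 120-second scale-in cooldown: 100 s after the last scale-in, another is still blocked.
print(cooldown_elapsed("in", 100, {"in": 0}, scale_in_cooldown_s=120))  # False
```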

Enable or Disable Scale-in Activities

You can enable or disable scale-in activities for a policy. When scale-in activities are enabled, the scaling policy can delete Aurora Replicas, subject to the scale-in cooldown period that the policy specifies. When scale-in activities are disabled, the scaling policy does not delete Aurora Replicas.
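With the API or CLI, the scale-in switch and the cooldowns are part of the policy's TargetTrackingScalingPolicyConfiguration structure. The sketch below builds that structure as a Python dict, assuming one of the two predefined Aurora reader metrics (RDSReaderAverageCPUUtilization or RDSReaderAverageDatabaseConnections); the helper name and the short "cpu"/"connections" keys are hypothetical.

```python
import json

# Predefined Application Auto Scaling metric types for Aurora Replicas.
PREDEFINED_METRICS = {
    "cpu": "RDSReaderAverageCPUUtilization",
    "connections": "RDSReaderAverageDatabaseConnections",
}


def target_tracking_config(metric, target_value, scale_in_cooldown=300,
                           scale_out_cooldown=300, disable_scale_in=False):
    """Build a TargetTrackingScalingPolicyConfiguration dict (sketch)."""
    return {
        "TargetValue": float(target_value),
        "PredefinedMetricSpecification": {
            "PredefinedMetricType": PREDEFINED_METRICS[metric],
        },
        "ScaleInCooldown": scale_in_cooldown,    # seconds
        "ScaleOutCooldown": scale_out_cooldown,  # seconds
        # True stops the policy from removing Aurora Replicas.
        "DisableScaleIn": disable_scale_in,
    }


print(json.dumps(target_tracking_config("cpu", 40, disable_scale_in=True), indent=2))
```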

Adding a Scaling Policy to an Aurora DB cluster

The AWS Management Console, AWS CLI, or the Application Auto Scaling API can all be used to add a scaling policy.

Auto Scaling Template Snippets

Templates can include snippets for use with Amazon EC2 Auto Scaling or Application Auto Scaling. Amazon EC2 Auto Scaling lets you scale Amazon EC2 instances automatically, using either scheduled scaling or scaling policies. Auto Scaling groups, which are collections of Amazon EC2 instances, provide fleet management features such as health checks and integration with Elastic Load Balancing, in addition to automatic scaling. Application Auto Scaling provides automatic scaling, through scheduled scaling or scaling policies, for resources beyond Amazon EC2, such as Aurora Replicas.

Console

You can use the AWS Management Console to apply a scaling policy to an Aurora DB cluster.

To add an auto scaling policy to an Aurora DB cluster:

- Sign in to the AWS Management Console and open the Amazon RDS console at https://console.aws.amazon.com/rds/.

- In the navigation pane, choose Databases.

- Choose the Aurora DB cluster that you want to add a policy for.

- Choose the Logs and Events tab.

- In the Auto scaling policies section, choose Add.

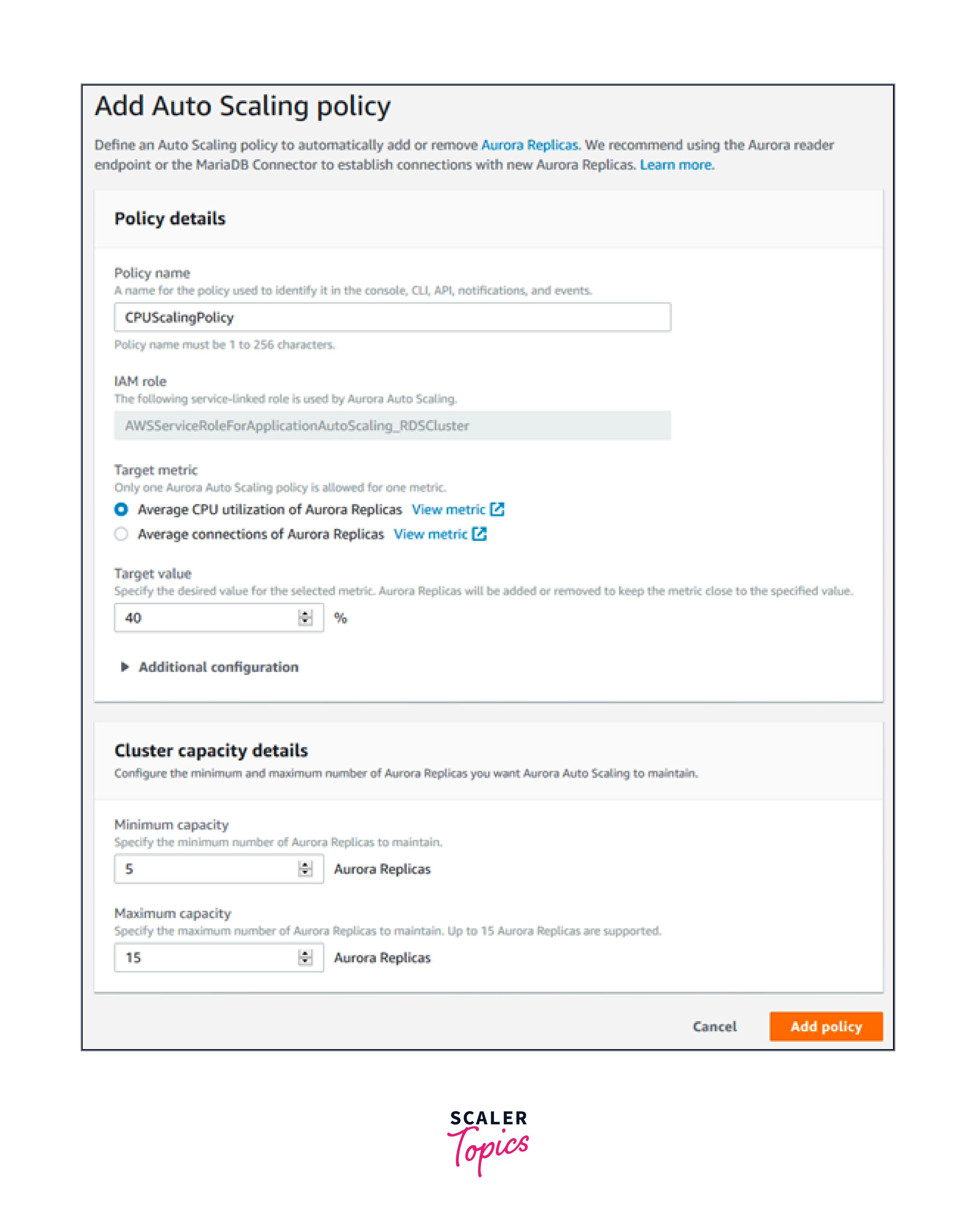

- The Add Auto Scaling policy dialogue box appears.

- For Policy Name, type the name of the policy.

- For the target metric, choose one of the following:

  - Average CPU utilization of Aurora Replicas, to create a policy based on the average CPU utilization.

  - Average connections of Aurora Replicas, to create a policy based on the average number of connections to Aurora Replicas.

- For the target value, type one of the following:

  - If you chose Average CPU utilization of Aurora Replicas in the previous step, type the percentage of CPU utilization that you want to maintain on Aurora Replicas.

  - If you chose Average connections of Aurora Replicas in the previous step, type the number of connections that you want to maintain.

  Aurora Replicas are added or removed to keep the metric close to the specified value.

- (Optional) Expand Additional Configuration to create a scale-in or scale-out cooldown period.

- For Minimum capacity, type the minimum number of Aurora Replicas that the Aurora Auto Scaling policy is required to maintain.

- For Maximum capacity, type the maximum number of Aurora Replicas that the Aurora Auto Scaling policy is required to maintain.

- Choose Add policy.

The following dialogue box creates an auto scaling policy based on an average CPU utilization of 40%. The policy specifies a minimum of 5 Aurora Replicas and a maximum of 15 Aurora Replicas.

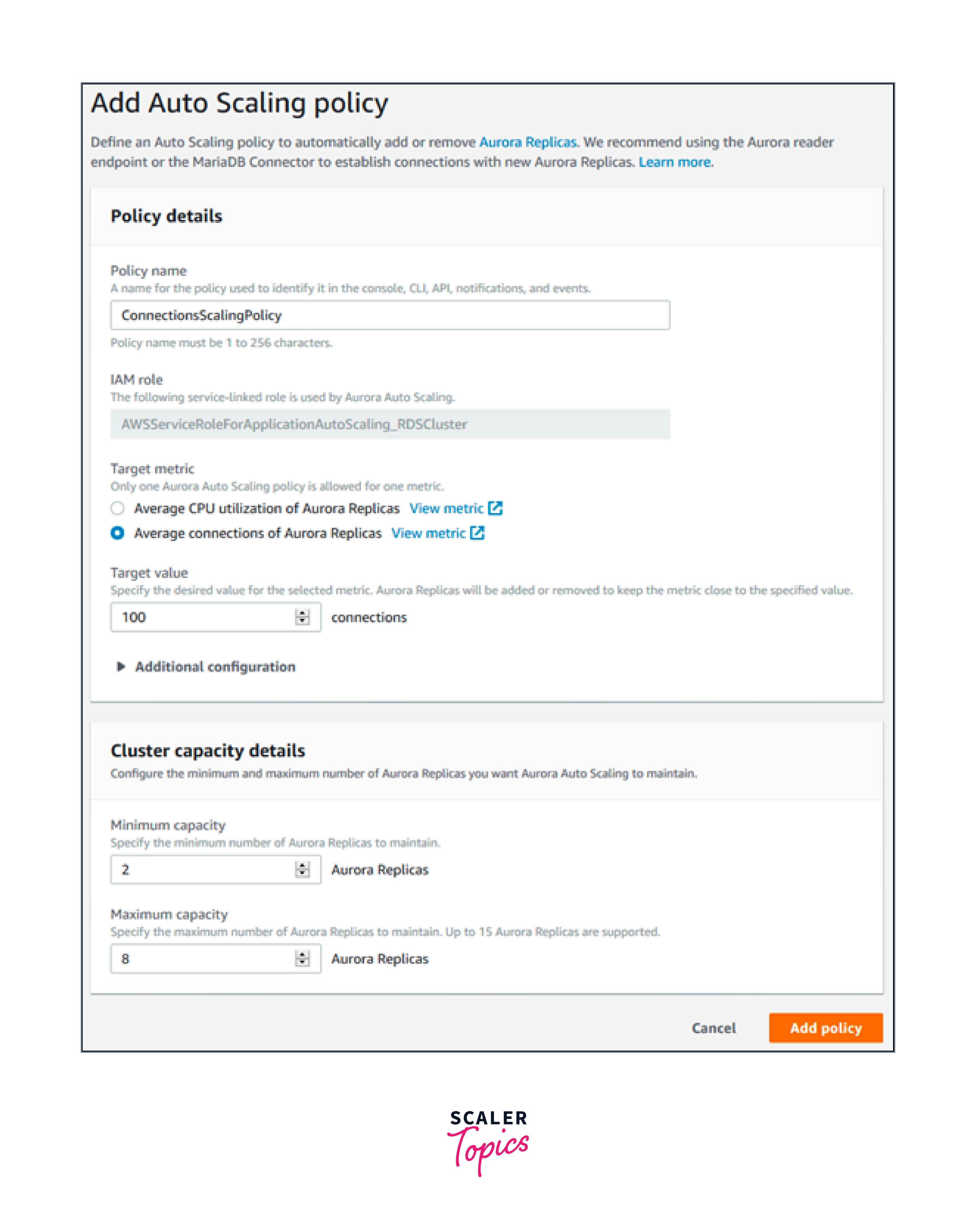

The following dialogue box creates an auto scaling policy based on an average of 100 connections. The policy specifies a minimum of two Aurora Replicas and a maximum of eight Aurora Replicas.

AWS CLI or Application Auto Scaling API

You can apply a scaling policy based on either a predefined or a custom metric by using the AWS CLI or the Application Auto Scaling API. Before you do so, you must register your Aurora DB cluster with Application Auto Scaling.

Registering an Aurora DB cluster

Before you can use Aurora Auto Scaling with an Aurora DB cluster, you must register the cluster with Application Auto Scaling. Registering defines the scaling dimension and the limits to apply to that cluster. Application Auto Scaling dynamically scales the Aurora DB cluster along the rds:cluster:ReadReplicaCount scalable dimension, which represents the number of Aurora Replicas. You can register your Aurora DB cluster with either the AWS CLI or the Application Auto Scaling API.

AWS CLI

To register your Aurora DB cluster, use the register-scalable-target AWS CLI command with the following parameters:

- --service-namespace: Set this value to rds.

- --resource-id: The resource identifier for the Aurora DB cluster. For this parameter, the resource type is cluster and the unique identifier is the name of the Aurora DB cluster, for example cluster:myscalablecluster.

- --scalable-dimension: Set this value to rds:cluster:ReadReplicaCount.

- --min-capacity: The minimum number of reader DB instances to be managed by Application Auto Scaling. For the relationship between --min-capacity, --max-capacity, and the number of DB instances in your cluster, see Minimum and Maximum Capacity.

- --max-capacity: The maximum number of reader DB instances to be managed by Application Auto Scaling. For the relationship between --min-capacity, --max-capacity, and the number of DB instances in your cluster, see Minimum and Maximum Capacity.

In the following example, you register an Aurora DB cluster named myscalablecluster. The registration indicates that the DB cluster should be dynamically scaled to have from one to eight Aurora Replicas.
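The register-scalable-target call described above can be assembled in Python as an argument list, which keeps quoting safe and is easy to inspect. This is a sketch: the register_command helper is hypothetical, and actually running the command (for example with subprocess.run(cmd, check=True)) requires the AWS CLI and credentials.

```python
import shlex


def register_command(cluster_name, min_capacity, max_capacity):
    """Return the AWS CLI argv that registers an Aurora DB cluster as a scalable target."""
    return [
        "aws", "application-autoscaling", "register-scalable-target",
        "--service-namespace", "rds",
        "--resource-id", f"cluster:{cluster_name}",
        "--scalable-dimension", "rds:cluster:ReadReplicaCount",
        "--min-capacity", str(min_capacity),
        "--max-capacity", str(max_capacity),
    ]


# The myscalablecluster example: one to eight Aurora Replicas.
print(shlex.join(register_command("myscalablecluster", 1, 8)))
```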

Application Auto Scaling API

To register your Aurora DB cluster with Application Auto Scaling, use the RegisterScalableTarget Application Auto Scaling API operation with the following parameters:

- ServiceNamespace: Set this value to rds.

- ResourceId: The resource identifier for the Aurora DB cluster. For this parameter, the resource type is cluster and the unique identifier is the name of the Aurora DB cluster, for example cluster:myscalablecluster.

- ScalableDimension: Set this value to rds:cluster:ReadReplicaCount.

- MinCapacity: The minimum number of reader DB instances to be managed by Application Auto Scaling. For the relationship between MinCapacity, MaxCapacity, and the number of DB instances in your cluster, see Minimum and Maximum Capacity.

- MaxCapacity: The maximum number of reader DB instances to be managed by Application Auto Scaling. For the relationship between MinCapacity, MaxCapacity, and the number of DB instances in your cluster, see Minimum and Maximum Capacity.

The following example registers an Aurora DB cluster named myscalablecluster with the Application Auto Scaling API. The registration indicates that the DB cluster should be dynamically scaled to have from one to eight Aurora Replicas.

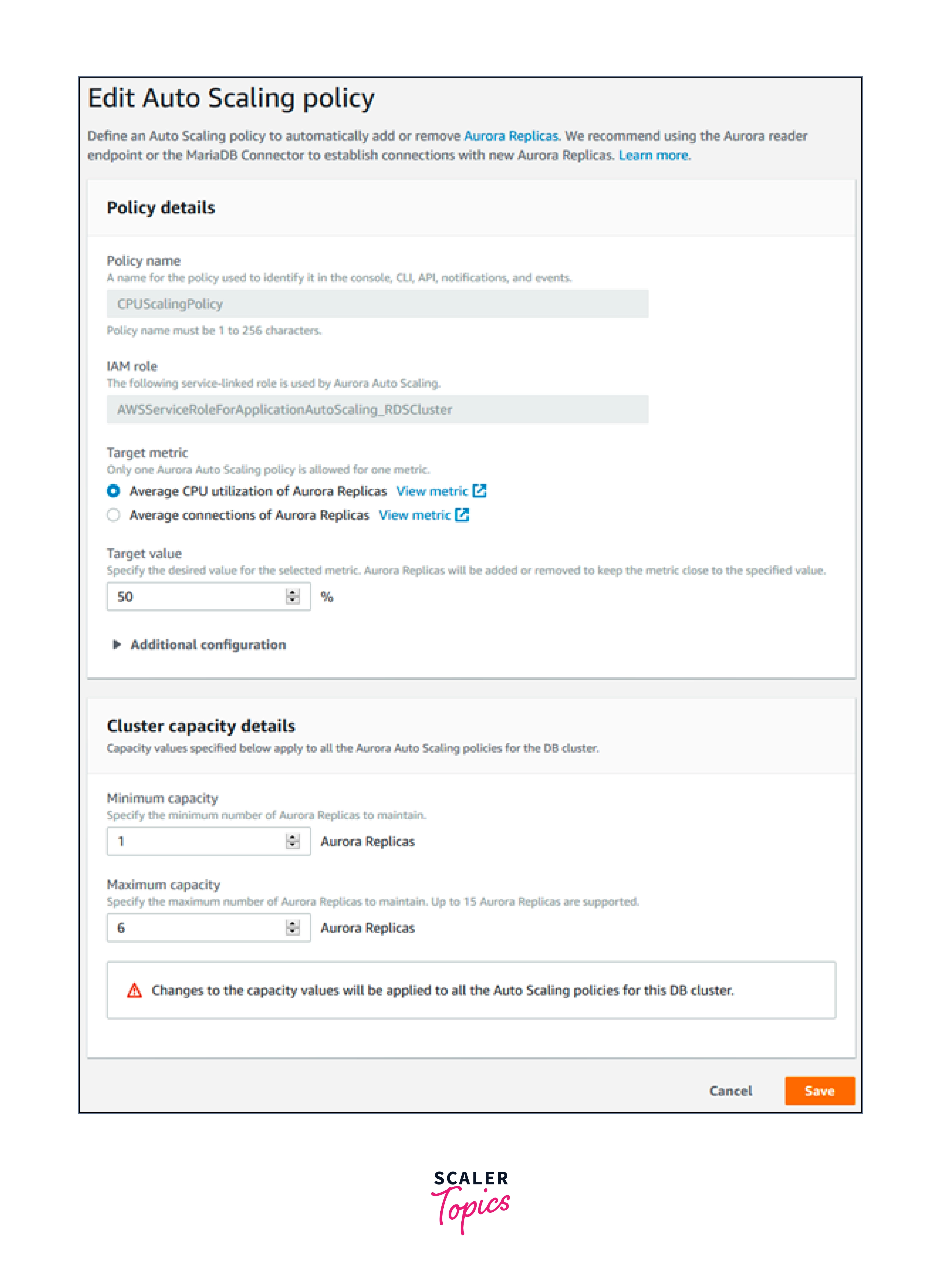
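The same registration can be sketched with boto3's Application Auto Scaling client. The register_cluster helper is hypothetical; it takes the client as a parameter so that a stand-in object can be used when no AWS account is available.

```python
def register_cluster(client, cluster_name, min_capacity, max_capacity):
    """Call RegisterScalableTarget for an Aurora DB cluster (sketch).

    `client` is expected to be boto3.client("application-autoscaling"); any object
    with a register_scalable_target(**kwargs) method works, which eases testing.
    """
    if not 0 <= min_capacity <= max_capacity <= 15:
        raise ValueError("need 0 <= min_capacity <= max_capacity <= 15")
    return client.register_scalable_target(
        ServiceNamespace="rds",
        ResourceId=f"cluster:{cluster_name}",
        ScalableDimension="rds:cluster:ReadReplicaCount",
        MinCapacity=min_capacity,
        MaxCapacity=max_capacity,
    )


# Real usage (requires boto3 and AWS credentials):
#   import boto3
#   register_cluster(boto3.client("application-autoscaling"), "myscalablecluster", 1, 8)
```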

Editing a Scaling Policy

The AWS Management Console, AWS CLI, or Application Auto Scaling API can all be used to edit scaling policies.

Console

You can use the AWS Management Console to edit a scaling policy.

To edit an auto scaling policy for an Aurora DB cluster:

- Sign in to the AWS Management Console and open the Amazon RDS console at https://console.aws.amazon.com/rds/.

- In the navigation pane, choose Databases.

- Choose the Aurora DB cluster whose auto scaling policy you want to edit.

- Choose the Logs and Events tab.

- In the Auto scaling policies section, choose the auto scaling policy, and then choose Edit.

- Make your changes to the policy.

- Choose Save.

The following is a sample Edit Auto Scaling policy dialogue box.

AWS CLI or Application Auto Scaling API

You can edit a scaling policy with the same tools you use to apply one: the AWS CLI or the Application Auto Scaling API.

- With the AWS CLI, specify the name of the policy you want to edit in the --policy-name parameter, and specify new values for the parameters you want to change.

- With the Application Auto Scaling API, specify the name of the policy you want to edit in the PolicyName parameter, and specify new values for the parameters you want to change.
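As a sketch of editing with the CLI, the helper below assembles a put-scaling-policy command for an existing policy name; PutScalingPolicy creates a scaling policy or updates the existing one with that name. The helper name, the default metric, and the 50% target in the example are illustrative assumptions.

```python
import json
import shlex


def put_policy_command(cluster_name, policy_name, target_value,
                       metric_type="RDSReaderAverageCPUUtilization",
                       scale_in_cooldown=300, scale_out_cooldown=300):
    """Return an AWS CLI argv that creates or updates a target-tracking policy."""
    config = {
        "TargetValue": float(target_value),
        "PredefinedMetricSpecification": {"PredefinedMetricType": metric_type},
        "ScaleInCooldown": scale_in_cooldown,
        "ScaleOutCooldown": scale_out_cooldown,
    }
    return [
        "aws", "application-autoscaling", "put-scaling-policy",
        "--policy-name", policy_name,
        "--policy-type", "TargetTrackingScaling",
        "--service-namespace", "rds",
        "--resource-id", f"cluster:{cluster_name}",
        "--scalable-dimension", "rds:cluster:ReadReplicaCount",
        # The CLI accepts this structure as an inline JSON string.
        "--target-tracking-scaling-policy-configuration", json.dumps(config),
    ]


# Change the existing policy "myscalablepolicy" to target 50% average CPU utilization.
print(shlex.join(put_policy_command("myscalablecluster", "myscalablepolicy", 50)))
```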

Deleting a Scaling Policy

The AWS Management Console, AWS CLI, or Application Auto Scaling API can all be used to delete scaling policies.

Console

Using the AWS Management Console, you may remove a scaling policy.

To delete an auto scaling policy for an Aurora DB cluster:

- Sign in to the AWS Management Console and open the Amazon RDS console at https://console.aws.amazon.com/rds/.

- In the navigation pane, choose Databases.

- Choose the Aurora DB cluster whose auto scaling policy you want to delete.

- Choose the Logs and Events tab.

- In the Auto scaling policies section, choose the auto scaling policy, and then choose Delete.

AWS CLI

To delete a scaling policy from your Aurora DB cluster, use the delete-scaling-policy AWS CLI command with the following parameters:

- --policy-name: The name of the scaling policy.

- --resource-id: The resource identifier for the Aurora DB cluster. For this parameter, the resource type is cluster and the unique identifier is the name of the Aurora DB cluster, for example cluster:myscalablecluster.

- --service-namespace: Set this value to rds.

- --scalable-dimension: Set this value to rds:cluster:ReadReplicaCount.

The target-tracking scaling policy named myscalablepolicy from an Aurora DB cluster named myscalablecluster is deleted in the example below.
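The deletion can be expressed the same way, as an argument list for delete-scaling-policy. The helper name is hypothetical; the resulting command runs with the AWS CLI and valid credentials.

```python
import shlex


def delete_policy_command(cluster_name, policy_name):
    """Return the AWS CLI argv that deletes a scaling policy from an Aurora DB cluster."""
    return [
        "aws", "application-autoscaling", "delete-scaling-policy",
        "--policy-name", policy_name,
        "--resource-id", f"cluster:{cluster_name}",
        "--service-namespace", "rds",
        "--scalable-dimension", "rds:cluster:ReadReplicaCount",
    ]


print(shlex.join(delete_policy_command("myscalablecluster", "myscalablepolicy")))
```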

Application Auto Scaling API

To delete a scaling policy from your Aurora DB cluster, use the DeleteScalingPolicy Application Auto Scaling API operation with the following parameters:

- PolicyName: The name of the scaling policy.

- ServiceNamespace: Set this value to rds.

- ResourceId: The resource identifier for the Aurora DB cluster. For this parameter, the resource type is cluster and the unique identifier is the name of the Aurora DB cluster, for example cluster:myscalablecluster.

- ScalableDimension: Set this value to rds:cluster:ReadReplicaCount.

The Application Auto Scaling API is used in the example below to remove a target-tracking scaling policy called myscalablepolicy from an Aurora DB cluster called myscalablecluster.
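An equivalent DeleteScalingPolicy call through boto3's Application Auto Scaling client might look like this. The delete_policy helper is hypothetical and accepts the client as a parameter, so any object with a delete_scaling_policy method can stand in for it.

```python
def delete_policy(client, cluster_name, policy_name):
    """Call DeleteScalingPolicy for an Aurora DB cluster (sketch).

    `client` is assumed to be boto3.client("application-autoscaling").
    """
    return client.delete_scaling_policy(
        PolicyName=policy_name,
        ServiceNamespace="rds",
        ResourceId=f"cluster:{cluster_name}",
        ScalableDimension="rds:cluster:ReadReplicaCount",
    )


# Real usage (requires boto3 and AWS credentials):
#   import boto3
#   delete_policy(boto3.client("application-autoscaling"), "myscalablecluster", "myscalablepolicy")
```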

Conclusion

- Amazon Aurora Serverless auto scaling replaces a less powerful instance with a more powerful one, making effective use of the shared storage technology that underpins Aurora.

- By default, auto scaling is seamless: Aurora chooses a safe scaling point at which to switch between instances. You can choose to force scaling at the risk of dropping active database connections.

- When a cluster uses single-master replication, Aurora Auto Scaling dynamically adjusts the number of Aurora Replicas provisioned for the Aurora DB cluster.

- When connectivity or workload decreases, Aurora Auto Scaling removes unneeded Aurora Replicas so that you don't pay for unused provisioned DB instances.

- Aurora Auto Scaling scales a DB cluster only when all of its Aurora Replicas are in the available state. If any Aurora Replica is not available, Aurora Auto Scaling waits until the whole DB cluster is ready for scaling.

- Aurora Auto Scaling uses a scaling policy to change the number of Aurora Replicas in an Aurora DB cluster. Its components are a service-linked role, a target metric, minimum and maximum capacity, and a cooldown period.

- Aurora storage scales automatically with the data in your cluster volume. As your data grows, your cluster volume storage can grow up to a maximum of 128 tebibytes (TiB) or 64 TiB, depending on the DB engine version.

- Your Aurora cluster needs at least one read replica for autoscaling to work. (If you don't have read replicas yet and would prefer a larger writer instance with smaller readers, keep in mind that autoscaling uses the writer instance configuration to create new read replicas.)

- Each replica added by autoscaling uses the same DB instance class as the writer (primary) instance.

- Applications that read data from your DB cluster should use the reader endpoint.

- In a failover, a manually created replica with a higher priority is promoted to primary first. Replicas created by autoscaling are assigned the lowest priority, tier 15, by default.