AWS Edge Locations

Overview

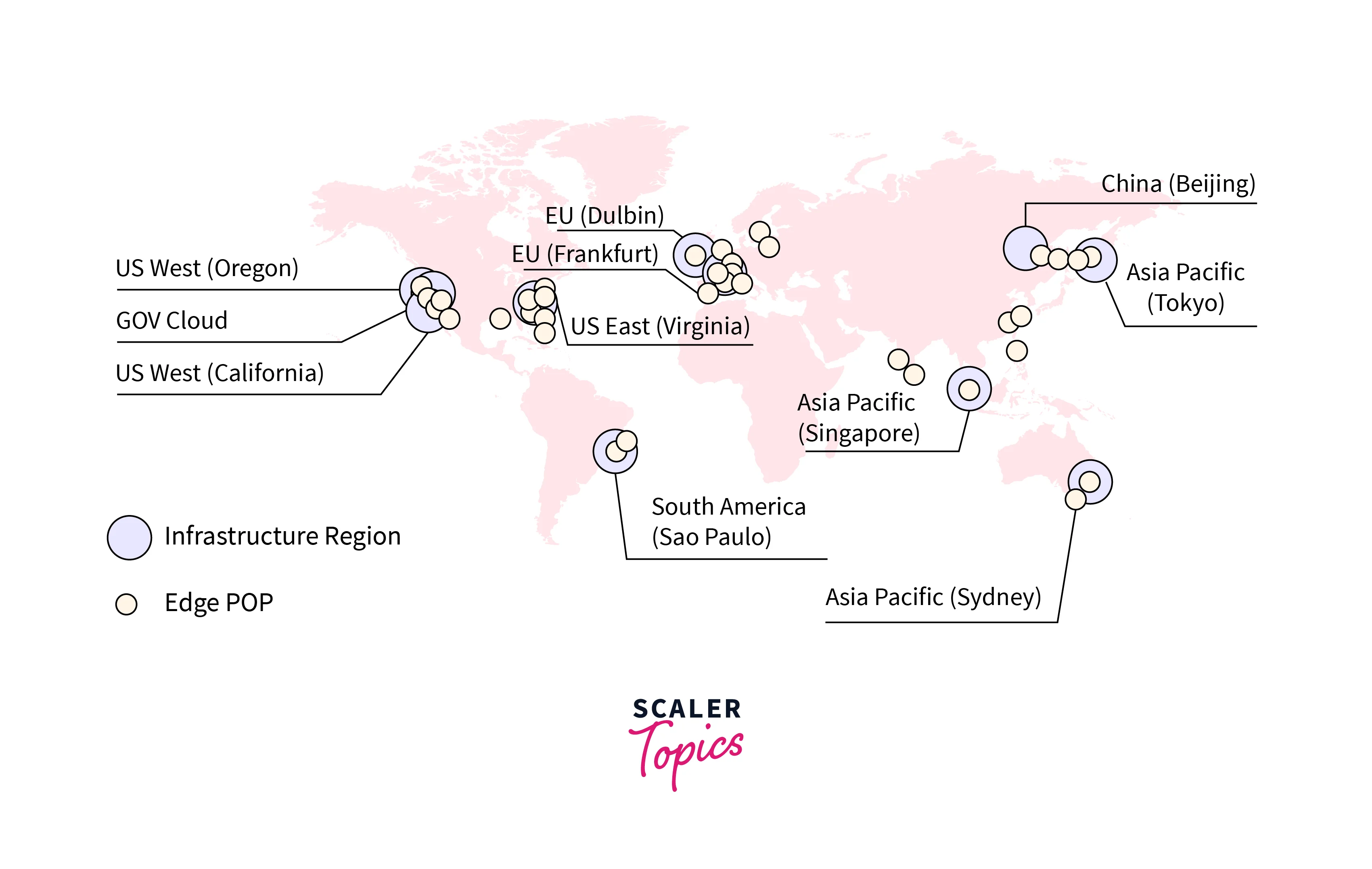

The primary purpose of an AWS edge location is to cache content close to the point where end users access AWS services, acting as a mini data center. Edge locations are AWS data centers built to deliver services with the lowest possible latency. They are owned by Amazon and located all around the world, often in large cities, so they sit closer to users than Regions or Availability Zones and can respond quickly.

What is Cache?

- Caching helps software deliver content faster and at lower cost.

- Copies of data fragments are kept in the cache, where they can be read quickly.

- The data is held on hardware built for fast access, such as RAM (random-access memory).

- The cache's primary function is to deliver content quickly.

- Keeping the data in the fast hardware layer avoids going to the slower storage hardware.

- Because the data no longer has to be requested from the central location, content is delivered faster.

- Delivery takes place from the edge location closest to the user.

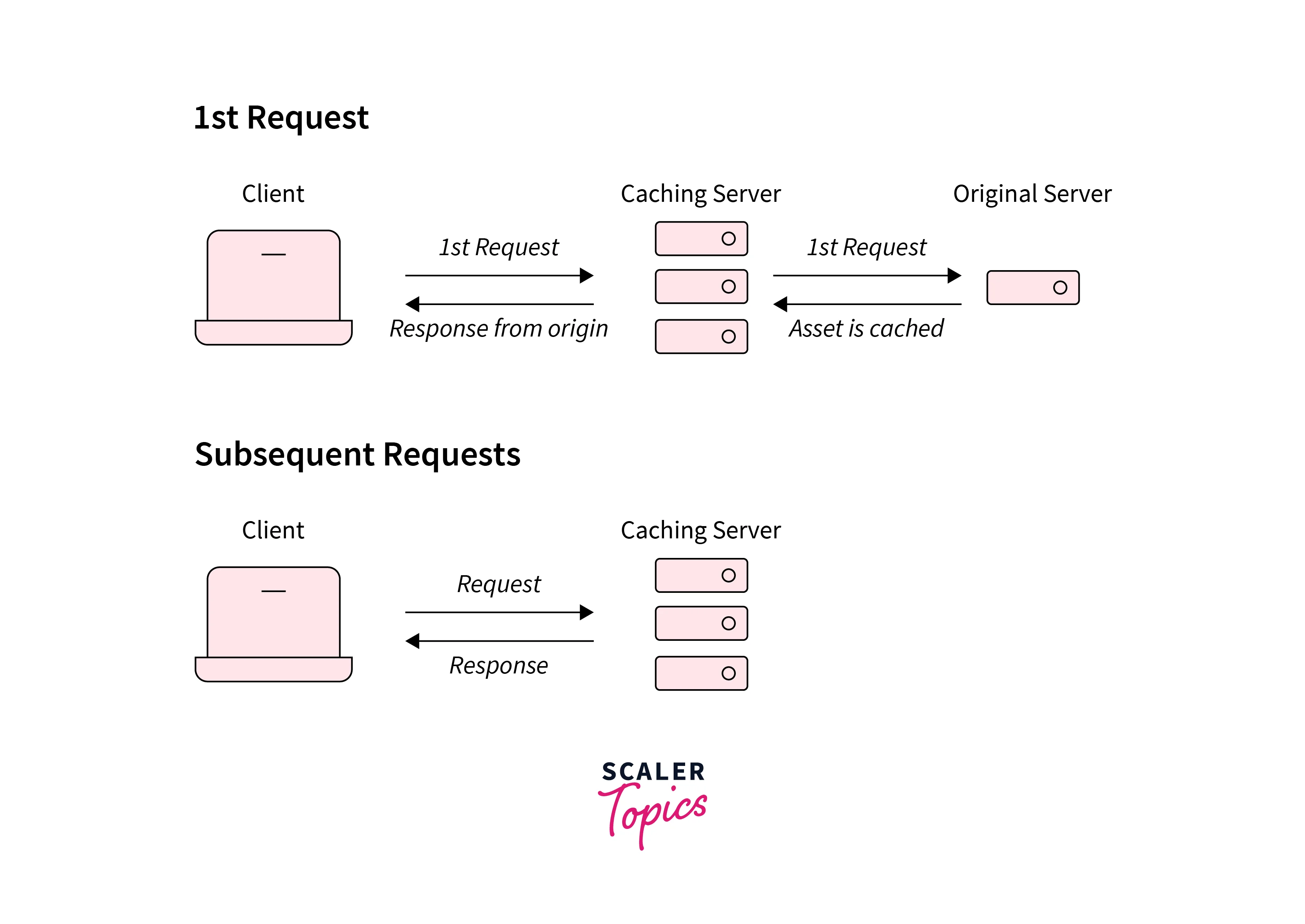

How Cache Works?

- The data in a cache is usually kept in fast-access hardware such as RAM (random-access memory) and may be used together with a software component. The main goal of a cache is to improve data retrieval performance by reducing how often the slower storage layer beneath it has to be accessed.

- Unlike a database, whose data is usually complete and durable, a cache typically holds a subset of data temporarily, trading capacity for speed.
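The behavior described above can be sketched in a few lines of Python. This is only an illustration, not how any AWS service implements caching: the `TTLCache` class, the `slow_store` function, and the 60-second lifetime are all hypothetical names and values chosen for the example. The cache keeps a subset of data in memory for a limited time and falls back to the slower store on a miss.

```python
import time


class TTLCache:
    """A tiny in-memory cache that keeps each entry for a limited time."""

    def __init__(self, ttl_seconds, clock=time.monotonic):
        self.ttl_seconds = ttl_seconds
        self.clock = clock
        self._entries = {}  # key -> (value, expiry timestamp)

    def get(self, key, load_from_store):
        """Return the cached value, or load it from the slower store on a miss."""
        now = self.clock()
        entry = self._entries.get(key)
        if entry is not None and entry[1] > now:
            return entry[0]  # cache hit: the slow store is not touched
        value = load_from_store(key)  # cache miss or expired entry
        self._entries[key] = (value, now + self.ttl_seconds)
        return value


# Hypothetical slow storage layer; it records every read it has to serve.
store_reads = []


def slow_store(key):
    store_reads.append(key)
    return f"value-for-{key}"


cache = TTLCache(ttl_seconds=60)
first = cache.get("logo.png", slow_store)   # miss: read from the store
second = cache.get("logo.png", slow_store)  # hit: served from memory
print(first == second, len(store_reads))
```

Only the first request reaches the slow store; the second is answered from memory until the entry expires.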

Benefits of Caching

- Optimize Application Performance: Reading data from an in-memory cache is extremely fast (sub-millisecond) because memory is orders of magnitude faster than disk, whether magnetic or SSD. This much faster data access improves the application's overall performance.

- Efficient Database Cost: A single cache instance can deliver hundreds of thousands of IOPS (input/output operations per second), potentially replacing several database instances and lowering the overall cost. This matters even more if the primary database charges by throughput. In some cases, the cost reduction may amount to several percentage points.

- Reduce the Load on the Backend: By redirecting a large portion of the read load from the backend database to the in-memory layer, caching reduces the pressure on your database and protects it from degraded performance under load, or even from crashing during spikes.

- Predictable Performance: Dealing with surges in application usage is a common challenge in modern systems. Examples include social media platforms on election day or during the Super Bowl, and e-commerce websites on Black Friday. Increased load on the database raises data retrieval latencies and makes the performance of the whole application unpredictable. A high-throughput in-memory cache can reduce this problem.

- Increase Read Throughput (IOPS): In addition to lower latency, in-memory systems offer much higher request rates (IOPS) than a comparable disk-based database. A single instance used as a distributed side cache can serve hundreds of thousands of requests per second.
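To make the performance and backend-load benefits concrete, here is a small worked calculation. The hit ratio and the latency figures are assumptions picked for illustration, not measurements of any AWS service, and the function names are hypothetical.

```python
def average_read_latency_ms(hit_ratio, cache_ms, database_ms):
    """Expected latency of one read when a fraction of reads hit the cache."""
    if not 0.0 <= hit_ratio <= 1.0:
        raise ValueError("hit_ratio must be between 0 and 1")
    return hit_ratio * cache_ms + (1.0 - hit_ratio) * database_ms


def database_reads_per_second(total_reads_per_second, hit_ratio):
    """Reads that still reach the backend database after the cache absorbs hits."""
    return total_reads_per_second * (1.0 - hit_ratio)


# Assumed figures: 90% of reads hit the cache, a cache read takes 0.5 ms,
# and a database read takes 10 ms.
latency = average_read_latency_ms(hit_ratio=0.9, cache_ms=0.5, database_ms=10.0)
backend_load = database_reads_per_second(total_reads_per_second=10_000, hit_ratio=0.9)
print(f"average read latency: {latency:.2f} ms")
print(f"reads reaching the database: {backend_load:.0f} per second")
```

Under these assumptions the average read takes roughly 1.45 ms instead of 10 ms, and the database sees about a tenth of the original read traffic.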

What is an Edge Location in AWS?

An edge location is a site that CloudFront uses to cache copies of your content so that it can be delivered faster to users wherever they are.

Edge locations are AWS data centers designed to deliver services with the lowest possible latency. They are located all around the world, often in large cities, which puts them closer to users than Regions or Availability Zones and lets them respond quickly. Edge locations are used by a subset of services for which latency matters, such as:

- CloudFront: It uses edge locations to cache copies of the content it serves, so the content is closer to users and can be delivered to them faster.

- Route 53: It serves DNS responses from edge locations so that DNS queries originating nearby resolve faster.

- Web Application Firewall and AWS Shield: They filter traffic at edge locations to stop unwanted traffic as early as possible.

In practice, when a user sends a request, it is routed to the closest edge location and answered from there rather than from the origin server, which makes the response fast.

For instance, suppose your data is stored in an S3 bucket located in Australia and some of your traffic arrives from Canada. AWS will cache your data at an edge location in Canada and serve Canadian users from that cache, so their requests no longer have to travel to Australia. As a result, latency drops and the user experience improves.
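A rough back-of-the-envelope calculation shows why distance matters in the Canada and Australia example. The sketch below assumes light travels through optical fiber at about 200,000 km/s (roughly two-thirds of its speed in a vacuum); the 15,000 km and 50 km distances are illustrative guesses, not measured routes. The result is a physical lower bound that ignores routing detours, queuing, and connection setup.

```python
FIBER_SPEED_KM_PER_S = 200_000  # approximate speed of light in optical fiber


def min_round_trip_ms(distance_km):
    """Lower bound on round-trip time over fiber for a given one-way distance."""
    return 2 * distance_km / FIBER_SPEED_KM_PER_S * 1000


# Assumed one-way distances, for illustration only.
to_origin_km = 15_000  # viewer in Canada to an origin in Australia
to_edge_km = 50        # viewer to a nearby edge location

print(f"origin round trip: at least {min_round_trip_ms(to_origin_km):.1f} ms")
print(f"edge round trip:   at least {min_round_trip_ms(to_edge_km):.1f} ms")
```

Even before any server processing, a round trip to the distant origin costs on the order of 150 ms under these assumptions, while the nearby edge location is well under a millisecond away.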

AWS Edge Locations Use Cases

Many AWS services take advantage of AWS edge locations.

- CloudFront is the most frequently mentioned use of edge locations. It is a content delivery network that caches content at edge locations, so users get content faster because it is served directly from the cache. Common uses for CloudFront include serving static assets, speeding up websites, and streaming video.

- The name servers for Route 53's managed DNS service are dispersed throughout Amazon's edge locations. DNS answers are as quick as they can be because they come directly from the edge sites.

- A firewall and DDoS defense are provided by Web Application Firewall and AWS Shield, respectively. To eliminate harmful or undesired traffic as close to the source as feasible, these services filter traffic at edge locations. As a result, both the public internet and Amazon's worldwide network experience less congestion.

- AWS Global Accelerator lets requests for resources that may be halfway around the world travel over Amazon's global network. A request is first sent to the nearest edge location and is then forwarded across Amazon's network, often with lower latency and greater capacity than the open internet.

Benefits of AWS Edge Locations

There are several ways edge locations cut down on latency.

- Some edge location services respond to users immediately. For instance, CloudFront caches content at edge locations, so cached content can be served right away. Latency is lower because the edge location is geographically closer to the user than the origin server.

- Other edge location services route traffic onto the AWS network. AWS operates a backbone of redundant, high-bandwidth fiber cables that spans the whole world. Traffic sent over this network is often faster and more reliable than traffic sent over the open internet, especially over long distances. For instance, when you download an object using S3 Transfer Acceleration, the object leaves S3 and travels through the AWS global network to your closest edge location before making its final hop over the open internet.

- There are many more edge locations than Regions, so users are more likely to be close to an edge location and to receive low-latency responses.

- Amazon regularly adds edge locations, and users nearby automatically see improved speed. As an illustration, Amazon has opened its first edge location in Thailand. If your application was already using AWS Global Accelerator, it became faster for Thai users without any extra work on your part.

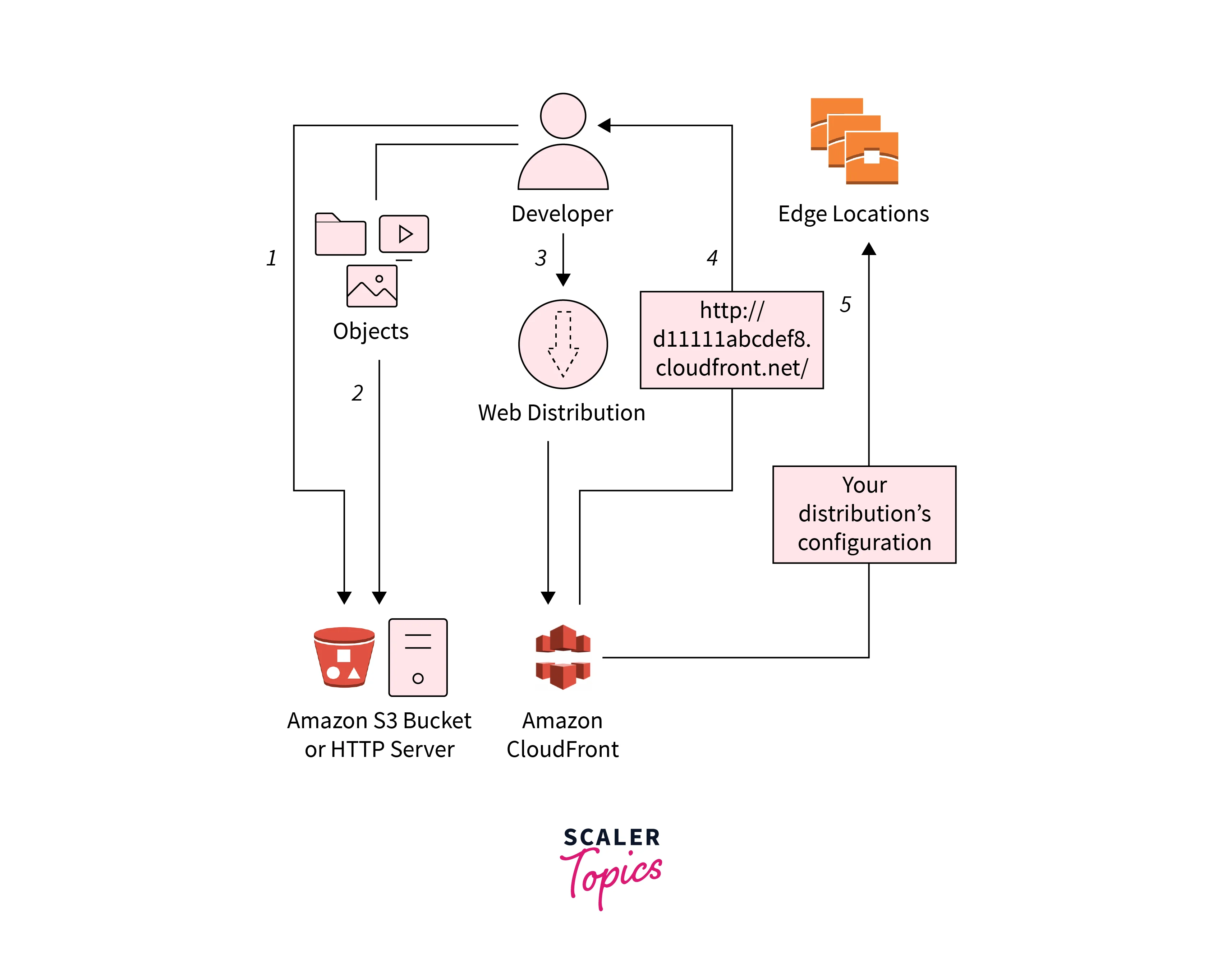

AWS Edge Locations and CloudFront

Amazon CloudFront is a web service that speeds up the distribution of your static and dynamic web content, such as .html, .css, .js, and image files, to your users. CloudFront delivers your content through a worldwide network of data centers called edge locations. When a user requests content that you serve with CloudFront, the request is routed to the edge location with the lowest latency (time delay), so the content is delivered with the best possible performance.

- If the content is already in the edge location with the lowest latency, CloudFront delivers it immediately.

- If the content is not in that edge location, CloudFront retrieves it from an origin that you have defined, such as an Amazon S3 bucket, a MediaPackage channel, or an HTTP server (for instance, a web server), which you have identified as the source for the definitive version of your content.
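The two cases above can be modeled with a toy simulation. This is a simplified sketch, not CloudFront's actual implementation: the `EdgeLocation` class, the `serve` function, the edge names, and the latency numbers are all hypothetical, and a plain dictionary stands in for the origin (for example, an S3 bucket).

```python
class EdgeLocation:
    """A toy edge location with its own cache and a latency to the viewer."""

    def __init__(self, name, latency_ms):
        self.name = name
        self.latency_ms = latency_ms  # assumed latency from the viewer
        self.cache = {}               # path -> cached content


def serve(path, edges, origin):
    """Route to the lowest-latency edge; serve from its cache or fetch from origin."""
    edge = min(edges, key=lambda e: e.latency_ms)
    if path in edge.cache:
        return edge.cache[path], edge.name, "hit"
    content = origin[path]      # cache miss: go back to the origin
    edge.cache[path] = content  # keep a copy for later requests
    return content, edge.name, "miss"


# Hypothetical origin and edge locations as seen by a viewer in Canada.
origin = {"/sunsetphoto.png": b"image-bytes"}
edges = [EdgeLocation("toronto", 8), EdgeLocation("sydney", 210)]

first = serve("/sunsetphoto.png", edges, origin)
second = serve("/sunsetphoto.png", edges, origin)
print(first[1], first[2])    # toronto miss
print(second[1], second[2])  # toronto hit
```

The first request has to reach the origin, but every later request for the same path is answered from the edge location's cache.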

Consider the scenario where you're serving an image through a conventional web server rather than from CloudFront. For instance, you could use the URL https://example.com/sunsetphoto.png to deliver the image sunsetphoto.png.

Your users can easily open this URL and view the image. What they probably don't realize is that their request is passed from one network to another, across the complex web of interconnected networks that makes up the internet, until the image is found.

CloudFront speeds up the distribution of your content by routing each user request over the AWS backbone network to the edge location that can best serve your content. Usually this is the CloudFront edge server that offers the fastest delivery to the viewer. Using the AWS network significantly reduces the number of networks that user requests must pass through, which improves performance. Users benefit from higher data transfer rates and lower latency (the time it takes for the first byte of a file to load).

Edge Locations Vs. Availability Zones Vs. AWS Regions

AWS Regions

- First, AWS provides numerous data centers, grouped into Regions, that offer the compute, storage, and other services needed to run your applications.

- Second, a high-speed fiber network controlled by AWS links all Regions together, which gives AWS a global footprint.

- Third, each Region is isolated from the others. No data enters or leaves your environment in the selected Region unless you explicitly authorize the transfer.

For instance, you might be subject to legal requirements that financial information from Delhi must not be sent outside India. In that case, any data kept in the Mumbai Region stays there unless you explicitly grant the authorization and permissions to export it. Regional data sovereignty is therefore a key element of the design of AWS Regions.

Four business aspects to take into account when choosing a region are listed below:

- Support for Data Governance: Depending on your industry and geography, your business may be required to keep its data in particular locations. For example, if your firm has to keep all of its data within German borders, you should choose the Frankfurt Region.

- Proximity to Clients: Selecting a Region close to your customer base lets you deliver content to them much faster. Imagine that your company is based in Ohio, USA, but most of your customers are in Singapore. In this situation, you should consider running the application from the Singapore Region.

- Feature Availability: The nearest Region might not offer all the features your business needs. AWS innovates constantly, developing new services and enhancing existing ones, but making a service available worldwide often requires deploying a substantial amount of physical hardware, one Region at a time.

- Pricing: Even when the hardware is the same across Regions, some Regions are more expensive to run in than others.
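One way to reason about these four factors together is to treat data governance and feature availability as hard filters and then rank the remaining Regions by latency and price. The sketch below is one possible approach, not an AWS tool: the `RegionOption` class, the service labels, and every latency and price figure are made-up values for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class RegionOption:
    name: str
    country: str
    latency_ms: float       # assumed latency from most of your customers
    services: frozenset     # services available in the Region (illustrative)
    relative_price: float   # 1.0 = baseline price (illustrative)


def choose_region(options, required_services, allowed_countries=None):
    """Filter by governance and features, then prefer low latency, then low price."""
    eligible = [
        option for option in options
        if (allowed_countries is None or option.country in allowed_countries)
        and required_services <= option.services
    ]
    if not eligible:
        return None
    return min(eligible, key=lambda o: (o.latency_ms, o.relative_price))


# Illustrative numbers only; real latencies, features, and prices differ.
options = [
    RegionOption("Ohio", "US", 230.0, frozenset({"compute", "storage", "ml"}), 1.00),
    RegionOption("Singapore", "SG", 12.0, frozenset({"compute", "storage"}), 1.10),
    RegionOption("Frankfurt", "DE", 160.0, frozenset({"compute", "storage", "ml"}), 1.08),
]

closest = choose_region(options, required_services=frozenset({"compute", "storage"}))
german_only = choose_region(
    options,
    required_services=frozenset({"compute"}),
    allowed_countries={"DE"},
)
print(closest.name, german_only.name)
```

Ranking latency ahead of price is a design choice made for this example; a cost-sensitive workload could swap the order of the sort key.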

Availability Zones

- An Availability Zone (AZ) consists of one or more discrete data centers inside a Region, each with redundant power, networking, and connectivity.

- These data centers are housed in separate facilities. Customers can run production applications and databases across Availability Zones, which makes them more highly available, fault tolerant, and scalable than would be possible from a single data center.

- Although isolated, the AZs in a Region are connected to each other through low-latency links. AWS gives customers the flexibility to place instances and store data across multiple geographic Regions and across multiple AZs within each Region.

- All Availability Zones are designed as independent failure zones. This means that AZs are physically separated within a typical metropolitan area and are located in lower-risk flood plains. In addition to uninterruptible power supply (UPS) and onsite backup generation, data centers in different AZs are fed from independent substations.

- All traffic between Availability Zones is encrypted. Network performance is sufficient for synchronous replication between AZs, which makes it straightforward to partition applications for high availability.

- Distributing applications across AZs isolates them better and protects them against risks such as power failures, lightning strikes, and natural disasters.

- For this reason, Availability Zones are physically separated by a meaningful distance, on the order of tens of miles.
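The availability benefit of spreading an application across AZs can be illustrated with a short simulation. This is a conceptual sketch only: the zone names, instance names, and both functions are hypothetical and do not call any AWS API.

```python
from itertools import cycle


def spread_across_zones(instance_names, zones):
    """Assign instances to zones in round-robin order."""
    if not zones:
        raise ValueError("at least one zone is required")
    zone_cycle = cycle(zones)
    return {name: next(zone_cycle) for name in instance_names}


def survivors(placement, failed_zone):
    """Instances that keep running if one whole zone fails."""
    return [name for name, zone in placement.items() if zone != failed_zone]


# Hypothetical zones and instances.
zones = ["zone-a", "zone-b", "zone-c"]
instances = [f"web-{i}" for i in range(1, 7)]

placement = spread_across_zones(instances, zones)
still_running = survivors(placement, failed_zone="zone-a")
print(still_running)
```

With six instances spread over three zones, losing an entire zone still leaves four instances serving traffic, whereas placing all six in one zone would leave none.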

Edge Locations

- Suppose your data is hosted in the Tokyo Region, but many of the clients who access it are in Mumbai.

- Rather than forcing those Mumbai-based clients to send every request to Tokyo, you can cache a copy of the data locally in Mumbai.

- Caching copies of data closer to users around the world is the idea behind content delivery networks (CDNs).

- On AWS, Amazon CloudFront lets you deliver data, video, applications, and APIs to clients anywhere in the world, with low latency and high transfer rates.

- The key point is that CloudFront uses edge locations to speed up communication with users wherever they are.

Edge locations are infrastructure components separate from Regions, so your company can push content from a Region to a chosen set of edge locations around the world. This speeds up both communication and content delivery. Edge locations also run Amazon Route 53, the AWS Domain Name System (DNS) service, which directs users to the correct web locations with reliably low latency. As a result, end users who access your services are often served from edge locations.

Conclusion

- This article taught us about AWS edge locations and their various use cases.

- The primary purpose of an edge location is to cache content closer to the point where end users access AWS services.

- We also learned about caches and how they work. The data in a cache is usually kept in fast-access hardware such as RAM.

- We learned about Amazon CloudFront and how it uses edge locations to speed up access to static and dynamic web content.

- Ultimately, we learned some key aspects of AWS Regions, Availability Zones, and Edge Locations.