Placement Groups in AWS

Overview

When you launch multiple EC2 instances, Amazon EC2 by default spreads them across different physical hardware to reduce the risk of correlated failures. EC2 also lets you influence where your instances are placed. Placement groups control how instances are placed on the underlying hardware. AWS provides three placement strategies that you can choose from depending on your workload.

What is a Placement Group in Amazon EC2?

When you launch a new EC2 instance, the EC2 service attempts to place it so that all of your instances are spread across the underlying hardware, minimizing correlated failures. To meet the needs of your workload, you can use placement groups to influence the placement of a group of interdependent instances. There is no charge for creating a placement group. Depending on your workload, you can create a placement group using one of the following strategies:

Cluster:

Packs instances close together inside a single Availability Zone. This strategy enables workloads to achieve the low-latency network performance needed for the tightly coupled node-to-node communication typical of high-performance computing (HPC) applications.

Partition:

Spreads instances across logical partitions so that groups of instances in one partition do not share the underlying hardware with groups of instances in other partitions. This strategy is typically used by large distributed and replicated workloads, such as Hadoop, Cassandra, and Kafka.

Spread:

Strictly places a small group of instances across distinct underlying hardware to reduce correlated failures.

What are the Benefits of Using a Placement Group?

Cluster Placement Groups in AWS:

- Recommended for applications that need low network latency, high network throughput, or both.

- Limited to a single Availability Zone.

- Can span peered VPCs in the same Region.

Partition Placement Groups in AWS:

- Reduce the impact of hardware failures on the application.

- Primarily used to deploy large distributed and replicated workloads, such as HDFS, HBase, and Cassandra, across distinct racks.

- Can have partitions in multiple AZs within a single Region.

- Provide visibility into partitions, so you can see which instances are in which partition. Topology-aware applications such as HDFS, HBase, and Cassandra can use this information to make intelligent data replication decisions that improve data availability and durability.

Spread Placement Groups in AWS:

- Recommended for applications with a small number of critical instances that must be kept separate from each other.

- Reduce the risk of simultaneous failures that can occur when instances share the same racks, which is not the case in a spread placement group.

- Can span multiple AZs within a single Region.

Placement Group Strategies Provided by AWS

Cluster Placement Groups

A cluster placement group is a logical grouping of instances within a single Availability Zone. A cluster placement group can span peered VPCs in the same Region. Instances in the same cluster placement group have a higher per-flow throughput limit for TCP/IP traffic and are placed in the same high-bisection bandwidth segment of the network.

The graphic below depicts instances that are placed in a cluster placement group.

Cluster placement groups are recommended for applications that benefit from low network latency, high network throughput, or both. They are also recommended when the majority of the network traffic is between the instances in the group. To provide the lowest latency and the highest packet-per-second network performance for your placement group, choose an instance type that supports enhanced networking.

It Is Recommended That You Launch Instances as Follows:

- Use a single launch request to launch the number of instances that you need in the placement group.

- Use the same instance type for all instances in the placement group.

- If you try to add more instances to the placement group later, or if you try to launch more than one instance type in the placement group, you increase your chances of getting an insufficient capacity error.

- If you stop an instance in a placement group and then start it again, it still runs in the placement group. However, the start fails if there isn't enough capacity for the instance.

- If you receive a capacity error when launching an instance in a placement group that already has running instances, stop and start all of the instances in the placement group, and then try the launch again. Starting the instances may migrate them to hardware that has capacity for all of the requested instances.
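You can script the recovery step in the last bullet. The following is a minimal Python sketch that assumes you drive EC2 through a boto3 EC2 client (`boto3.client("ec2")`). The helpers `restart_group_plan` and `run_plan` are hypothetical names, and the instance IDs are placeholders. The calls are returned as `(method, kwargs)` pairs, so you can review the sequence before running it against a real client.

```python
def restart_group_plan(instance_ids):
    """Return boto3 EC2 calls, as (method, kwargs) pairs, that stop every
    instance in a placement group and then start them all again together."""
    ids = {"InstanceIds": list(instance_ids)}
    return [
        ("stop_instances", ids),
        ("waiter:instance_stopped", ids),  # block until every instance is stopped
        ("start_instances", ids),
    ]


def run_plan(client, plan):
    """Execute a plan against a boto3 EC2 client (or any object with the same methods)."""
    for method, kwargs in plan:
        if method.startswith("waiter:"):
            client.get_waiter(method.split(":", 1)[1]).wait(**kwargs)
        else:
            getattr(client, method)(**kwargs)


# Usage (requires AWS credentials; the instance IDs are placeholders):
# import boto3
# run_plan(boto3.client("ec2"), restart_group_plan(["i-0aaa", "i-0bbb"]))
```

After the instances are running again, retry the original launch request.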

Partition Placement Groups

- Partition placement groups help reduce the likelihood of correlated hardware failures for your application. When you use partition placement groups, Amazon EC2 divides each group into logical segments called partitions.

- Each partition within a placement group has its own set of racks, and each rack has its own network and power source. No two partitions within a placement group share the same racks, which lets you isolate the impact of a hardware failure within your application.

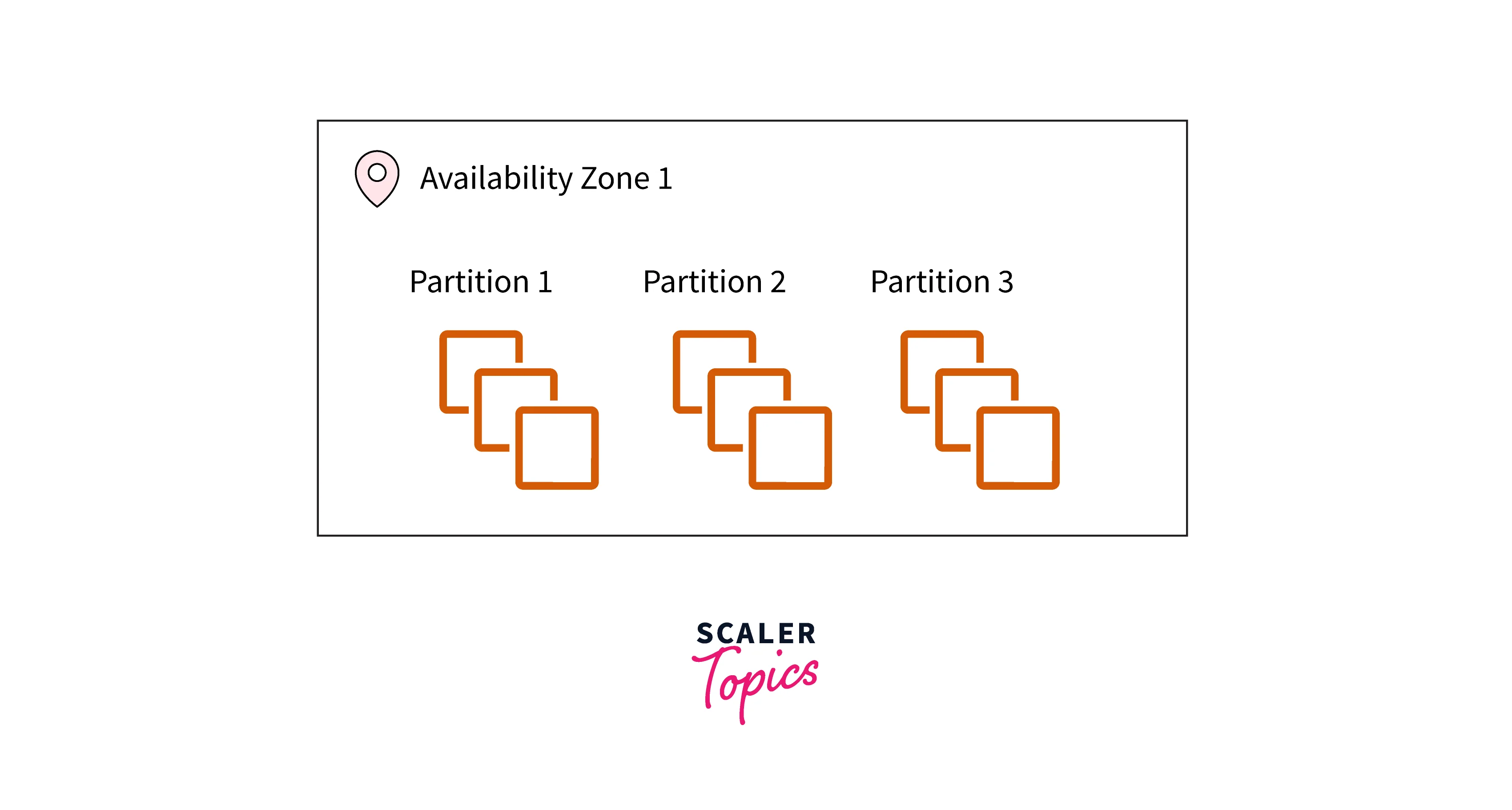

- The graphic depicts a simple visual representation of a partition placement group in a single Availability Zone. It shows instances placed into a partition placement group with three partitions: Partition 1, Partition 2, and Partition 3.

- Each partition contains multiple instances. Because instances in one partition do not share racks with instances in the other partitions, you can contain the impact of a single hardware failure to the associated partition.

Partition placement groups can be used to deploy large distributed and replicated workloads, such as HDFS, HBase, and Cassandra, across distinct racks. When you launch instances into a partition placement group, Amazon EC2 tries to distribute the instances evenly across the number of partitions that you specify. You can also launch instances into a specific partition to have more control over where the instances are placed.

A partition placement group can have partitions in multiple Availability Zones in the same Region, with a maximum of seven partitions per Availability Zone. The number of instances that can be launched into a partition placement group is limited only by the limits of your account.

In addition, partition placement groups offer visibility into the partitions: you can see which instances are in which partitions. You can share this information with topology-aware applications, such as HDFS, HBase, and Cassandra, which use it to make intelligent data replication decisions that increase data availability and durability.

If you start or launch an instance in a partition placement group and there is insufficient unique hardware to fulfill the request, the request fails. Amazon EC2 makes more distinct hardware available over time, so you can try your request again later.

Spread Placement Groups

A spread placement group is a group of instances that are each placed on distinct hardware.

- Spread placement groups are recommended for applications that have a small number of critical instances that should be kept separate from each other. Launching instances in a spread level placement group reduces the risk of simultaneous failures that might occur when instances share the same equipment.

- Spread level placement groups provide access to distinct hardware, so they are suitable for mixing instance types or launching instances over time.

- If you start or launch an instance in a spread placement group and there is insufficient unique hardware to fulfill the request, the request fails.

- Amazon EC2 makes more distinct hardware available over time, so you can try your request again later. Placement groups can spread instances across racks or hosts. Host level spread placement groups are supported only on AWS Outposts.

Rack Spread Level Placement Groups:

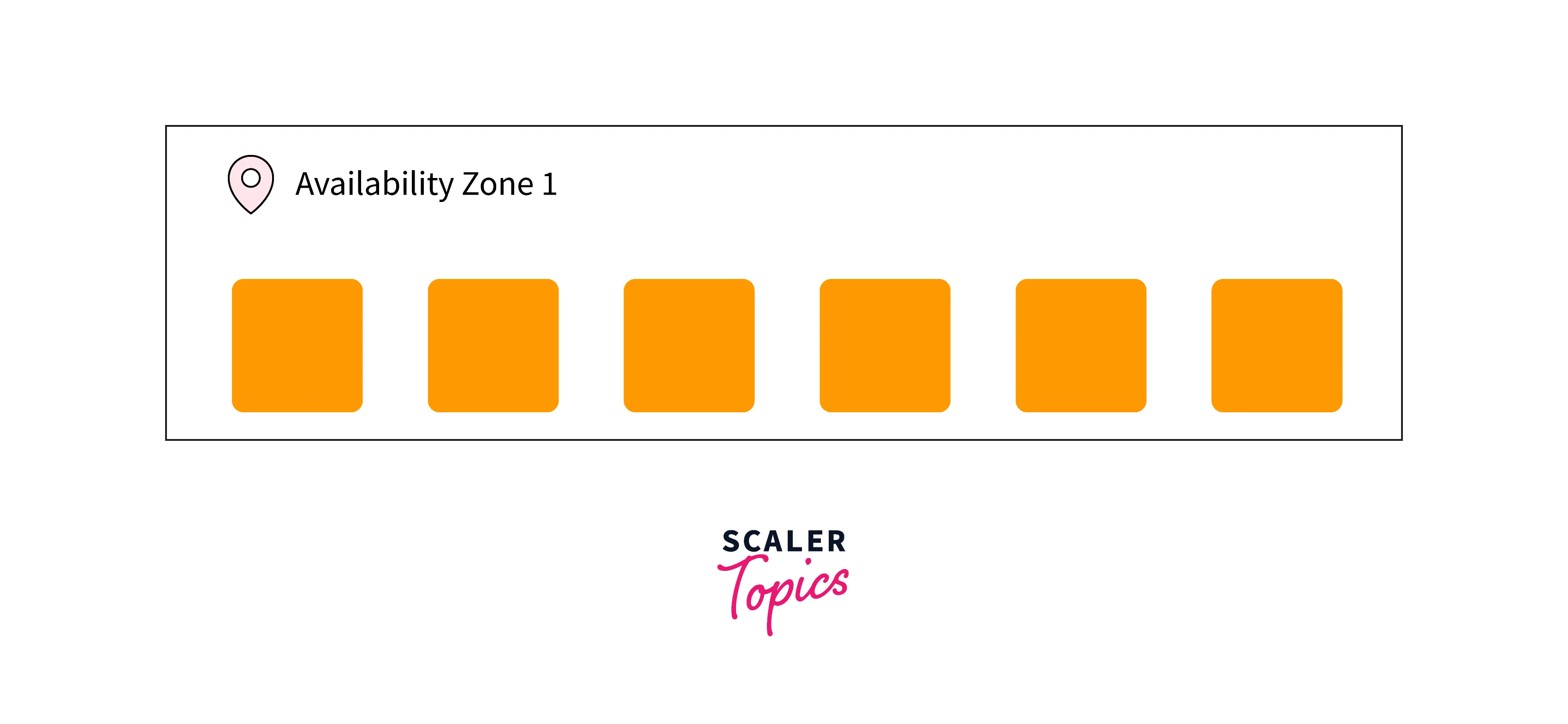

The figure depicts seven instances in a single Availability Zone that are placed into a spread placement group. The seven instances are placed on seven different racks, and each rack has its own network and power source.

A rack spread level placement group can span multiple Availability Zones in the same Region. For rack spread level placement groups, you can have a maximum of seven running instances per Availability Zone per group.
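The seven-instance limit turns capacity planning into simple arithmetic. The following small Python sketch uses hypothetical function names and applies only the limit stated above. It computes the most running instances one rack spread group can hold across a given number of AZs, and how many separate spread groups you would need for a given number of instances in one AZ.

```python
import math

# Per-group limit for rack spread level placement groups, as stated above.
MAX_RUNNING_PER_AZ = 7


def max_running_instances(num_azs):
    """Largest number of running instances one rack spread group can hold."""
    return MAX_RUNNING_PER_AZ * num_azs


def spread_groups_needed(instances_in_az):
    """Minimum number of rack spread groups needed for this many instances in one AZ."""
    return math.ceil(instances_in_az / MAX_RUNNING_PER_AZ)


print(max_running_instances(3))   # 21
print(spread_groups_needed(8))    # 2
```

Using several groups keeps each group's own spread guarantee, but it says nothing about how instances in different groups are spread relative to each other.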

Host Level Spread Placement Groups:

Host spread level placement groups are only available with AWS Outposts. There are no restrictions on the number of running instances per Outpost in host spread level placement groups.

Working with Placement Groups

Create a Placement Group

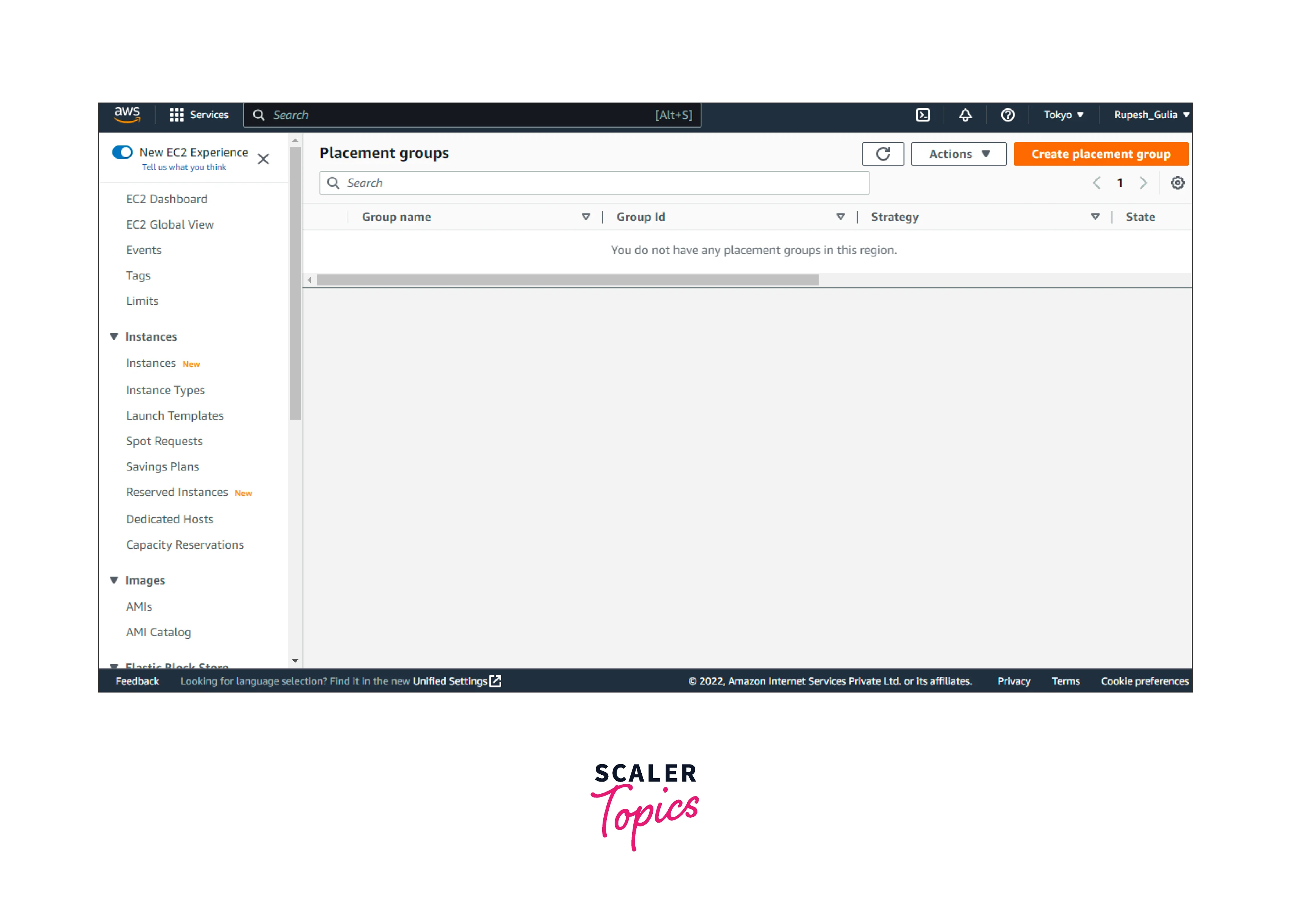

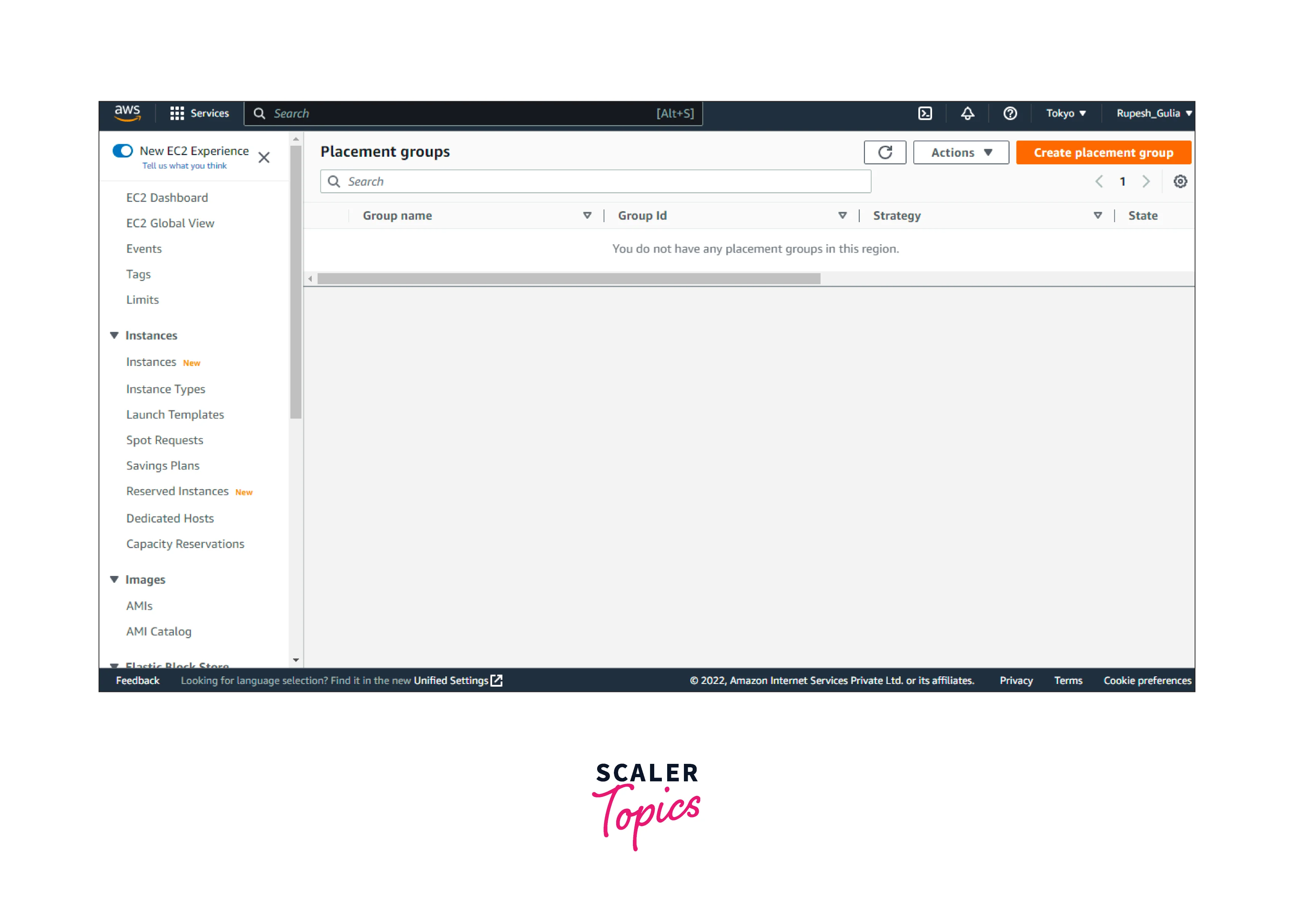

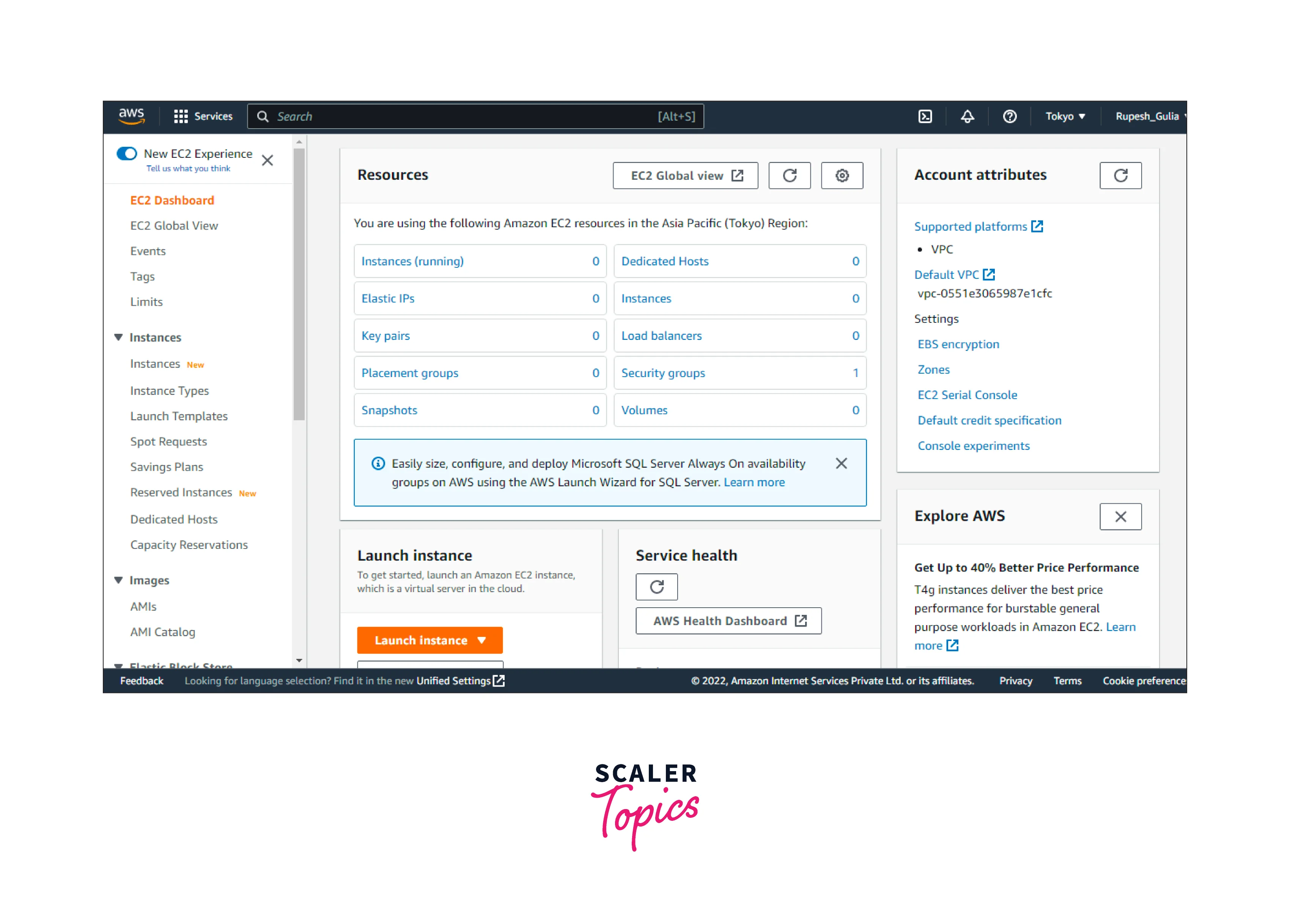

To Create a Placement Group in AWS Using The Console:

-

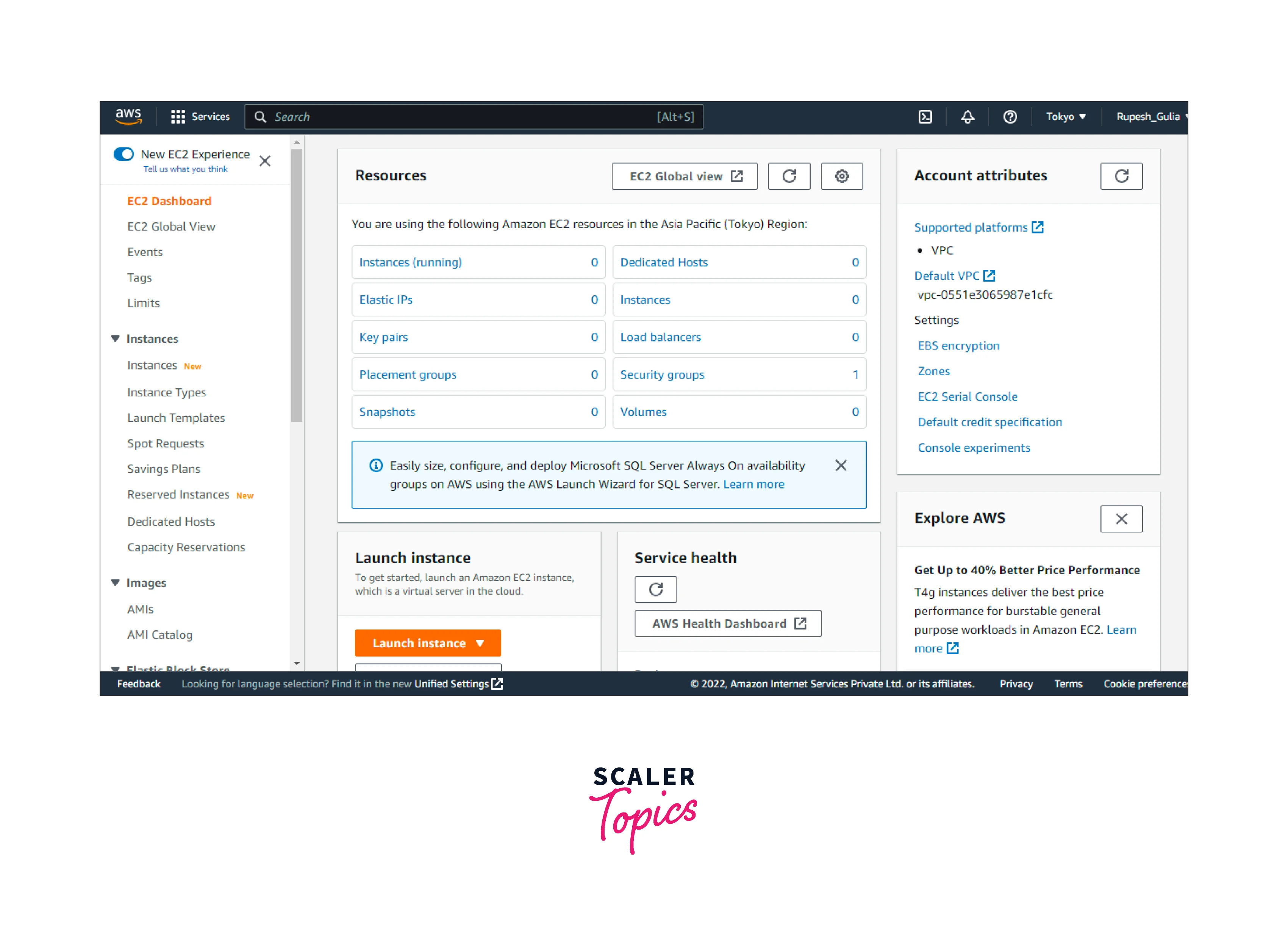

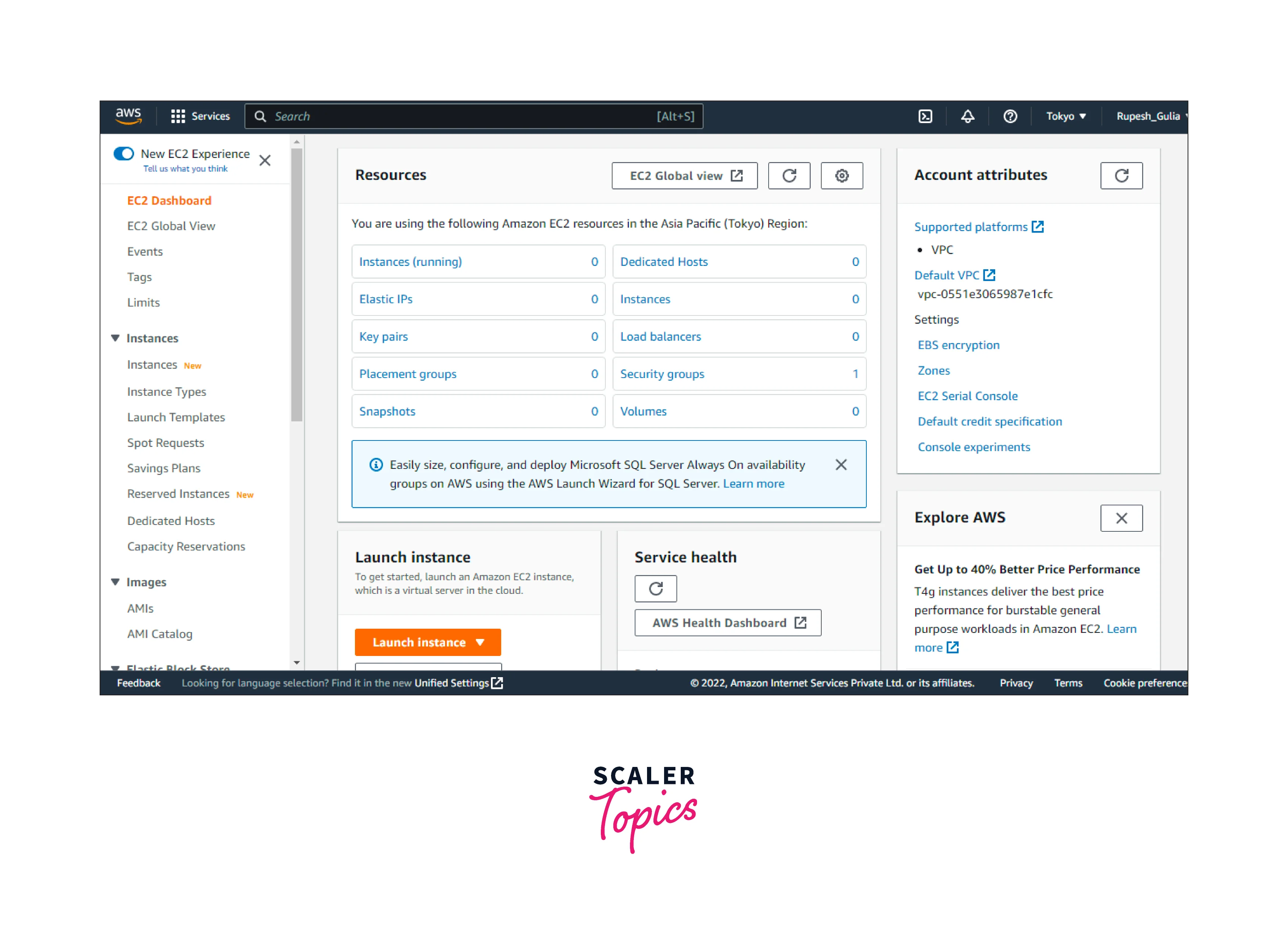

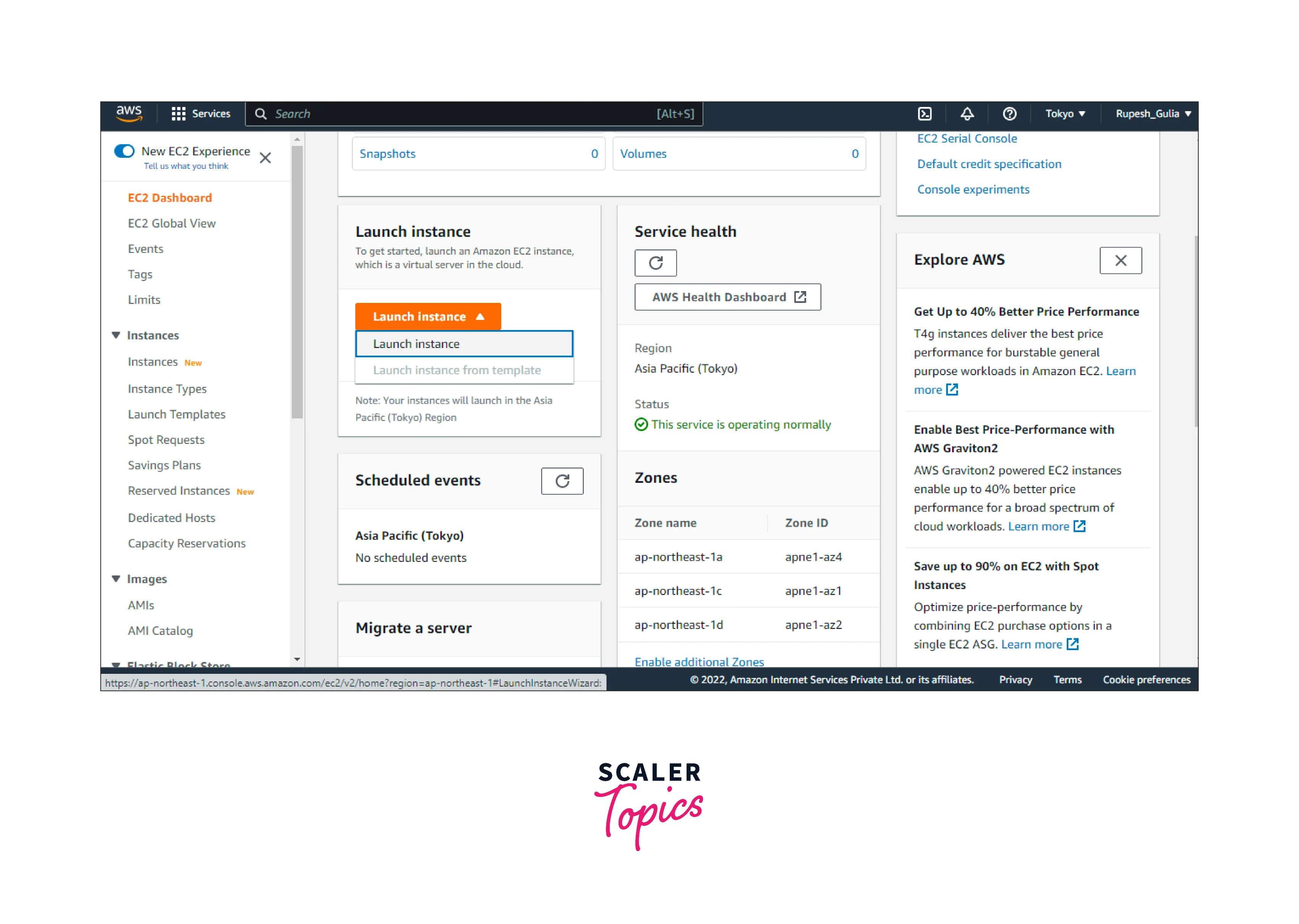

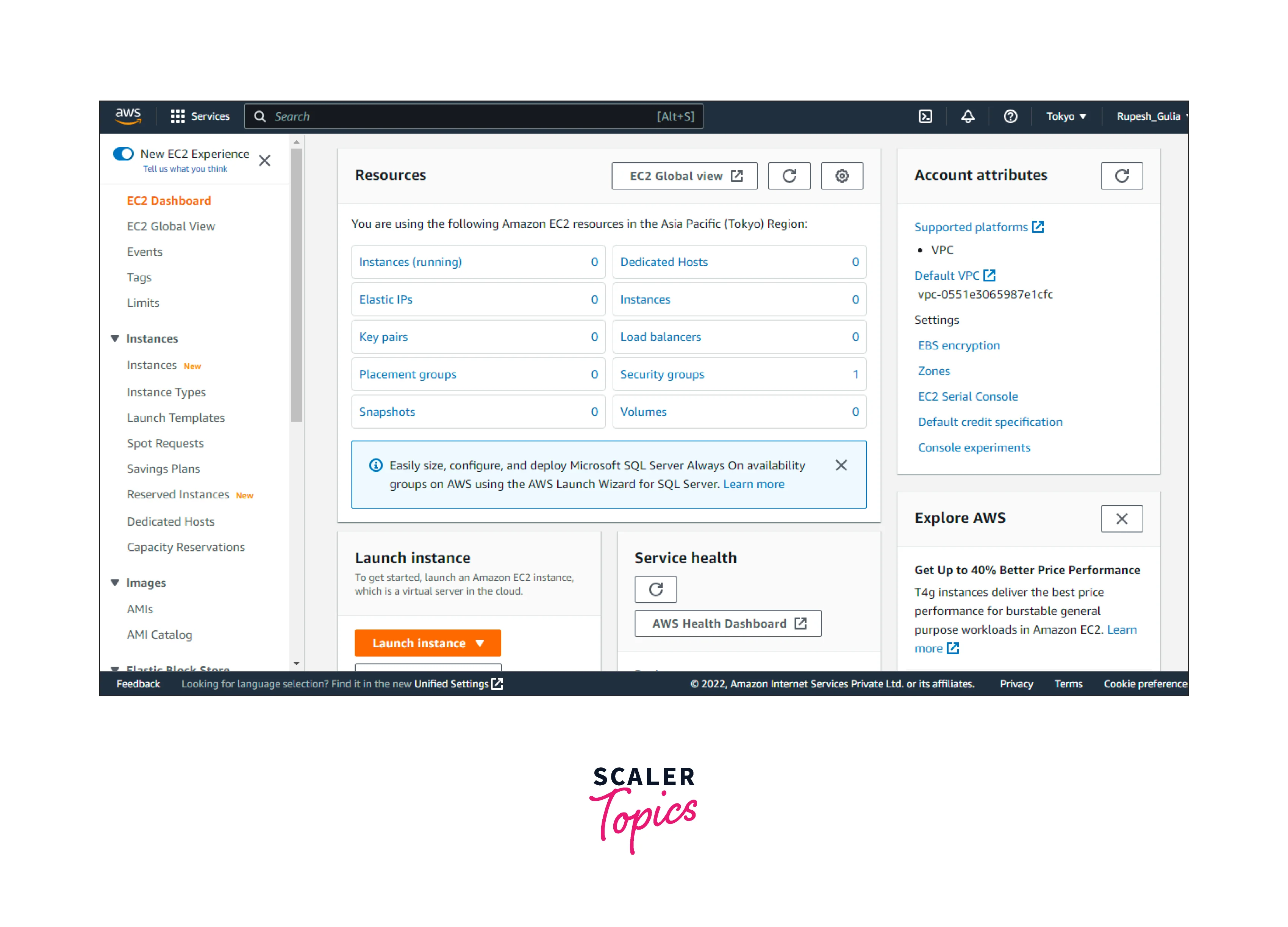

Access the AWS EC2 dashboard on link.

-

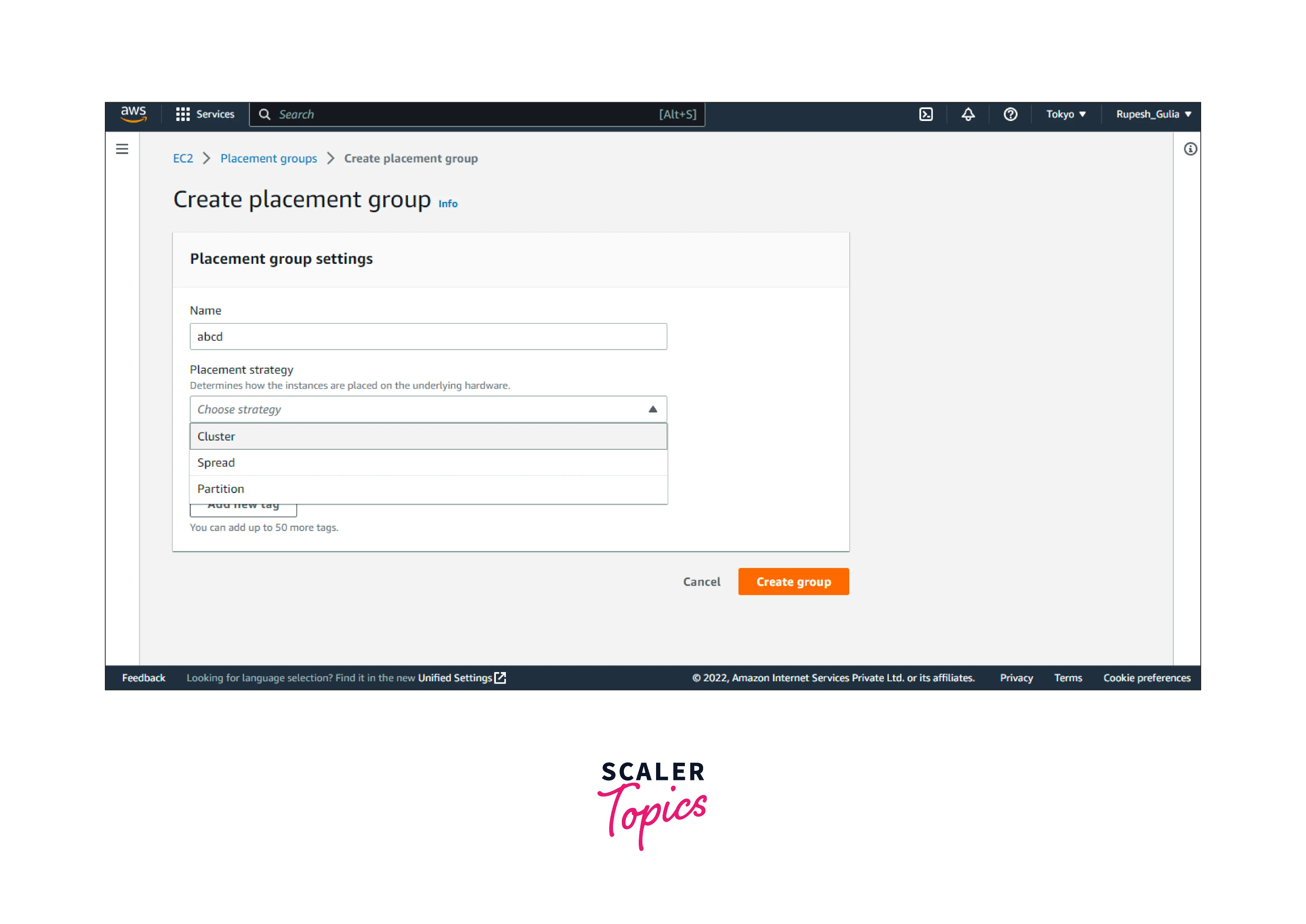

As shown below choose "create placement groups".

-

Provide a naming to the required groups.

-

Select the group placement strategy. Select the number of partitions inside the groups while selecting the partition.

- Select the group's placement approach.

- If users select Spread, select the spread amount.

- Racks: zero limitations

- Host: exclusively available to Outposts, If users select Partition, provide the number of partitions inside the groups.

-

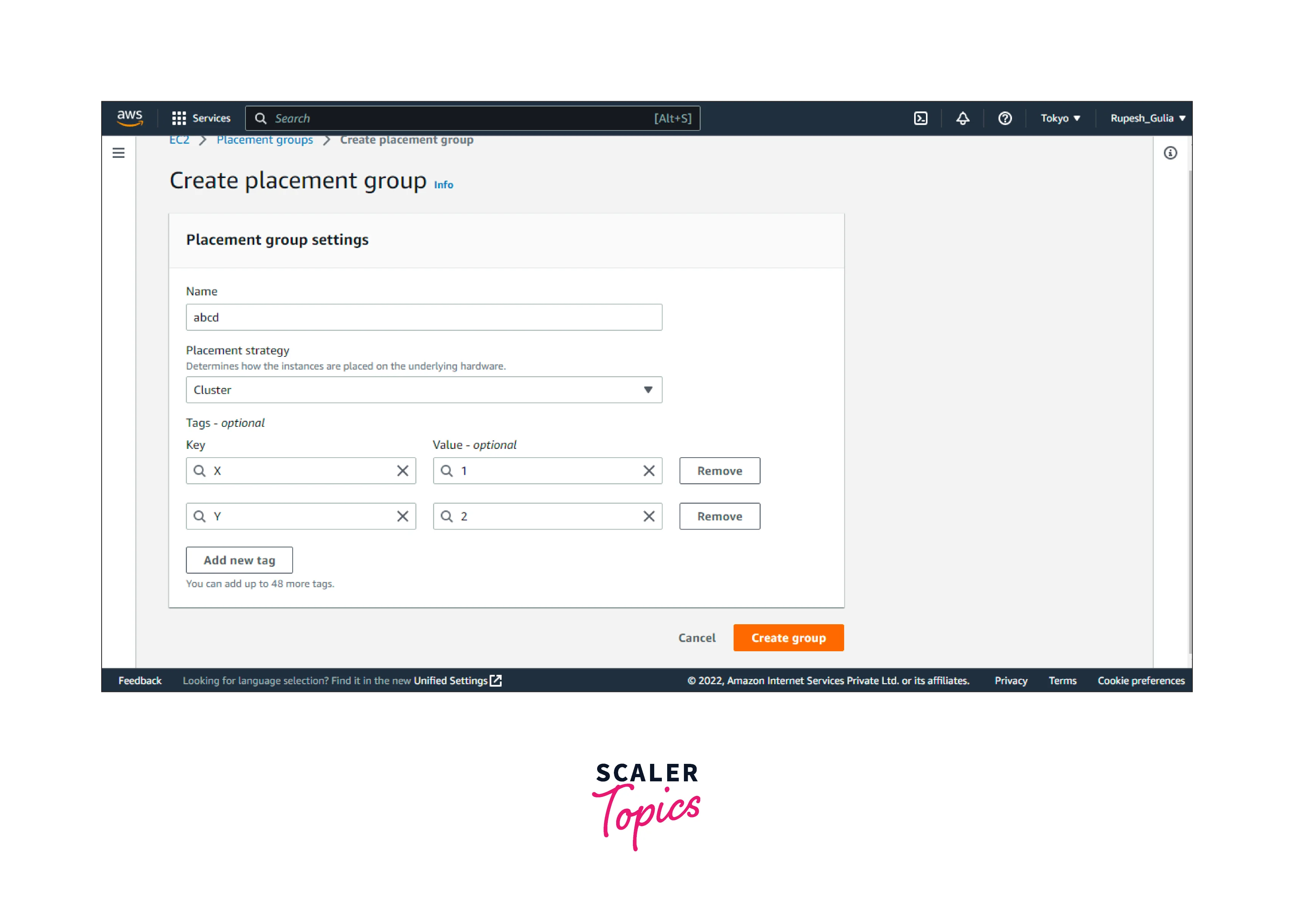

To add the new tags which are optional (max limit is 50) in the form of key-value pair by clicking on the "Add Tag".

-

Finally click on "Create groups".

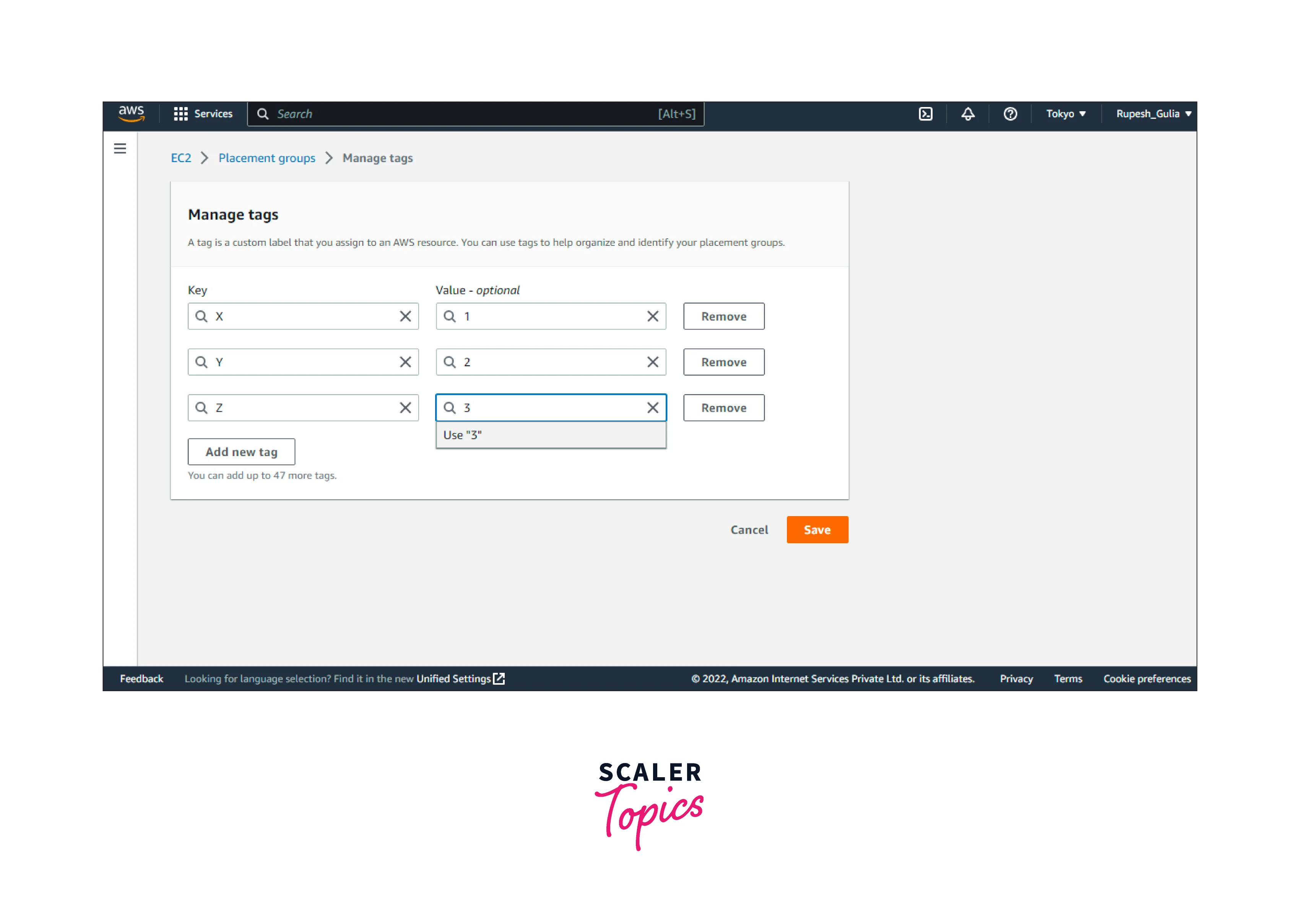
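You can also supply the same settings programmatically. The following minimal Python sketch builds keyword arguments for boto3's EC2 `create_placement_group` call. The helper name `create_group_request` and the example group name are hypothetical. Its checks encode only the limits described in this article: 1 to 7 partitions, a spread level only on spread groups, and at most 50 tags.

```python
VALID_STRATEGIES = {"cluster", "partition", "spread"}


def create_group_request(name, strategy, partition_count=None, spread_level=None, tags=None):
    """Build kwargs for boto3 EC2 create_placement_group, validating console-style options."""
    if strategy not in VALID_STRATEGIES:
        raise ValueError(f"unknown strategy: {strategy!r}")
    request = {"GroupName": name, "Strategy": strategy}

    if strategy == "partition":
        if partition_count is None or not 1 <= partition_count <= 7:
            raise ValueError("partition groups need between 1 and 7 partitions")
        request["PartitionCount"] = partition_count
    elif partition_count is not None:
        raise ValueError("partition_count only applies to partition groups")

    if spread_level is not None:
        if strategy != "spread" or spread_level not in ("rack", "host"):
            raise ValueError("spread_level must be 'rack' or 'host' on a spread group")
        request["SpreadLevel"] = spread_level

    if tags:
        if len(tags) > 50:
            raise ValueError("a placement group can have at most 50 tags")
        request["TagSpecifications"] = [{
            "ResourceType": "placement-group",
            "Tags": [{"Key": k, "Value": v} for k, v in tags.items()],
        }]
    return request


# Usage (requires AWS credentials):
# import boto3
# boto3.client("ec2").create_placement_group(
#     **create_group_request("my-hdfs-group", "partition", partition_count=3))
```

Validating locally before calling the API gives a clearer error message than a rejected request would.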

Tag a Placement Group

To help categorize and manage your existing placement groups, you can tag them with custom metadata. For more information about how tags work, see Tag your Amazon EC2 resources.

When you tag a placement group, the instances that are launched into the placement group are not automatically tagged. You need to explicitly tag the instances that are launched into the placement group. For more information, see Add a tag when you launch an instance.

You can view, add, and delete tags using the new console and the command line tools.

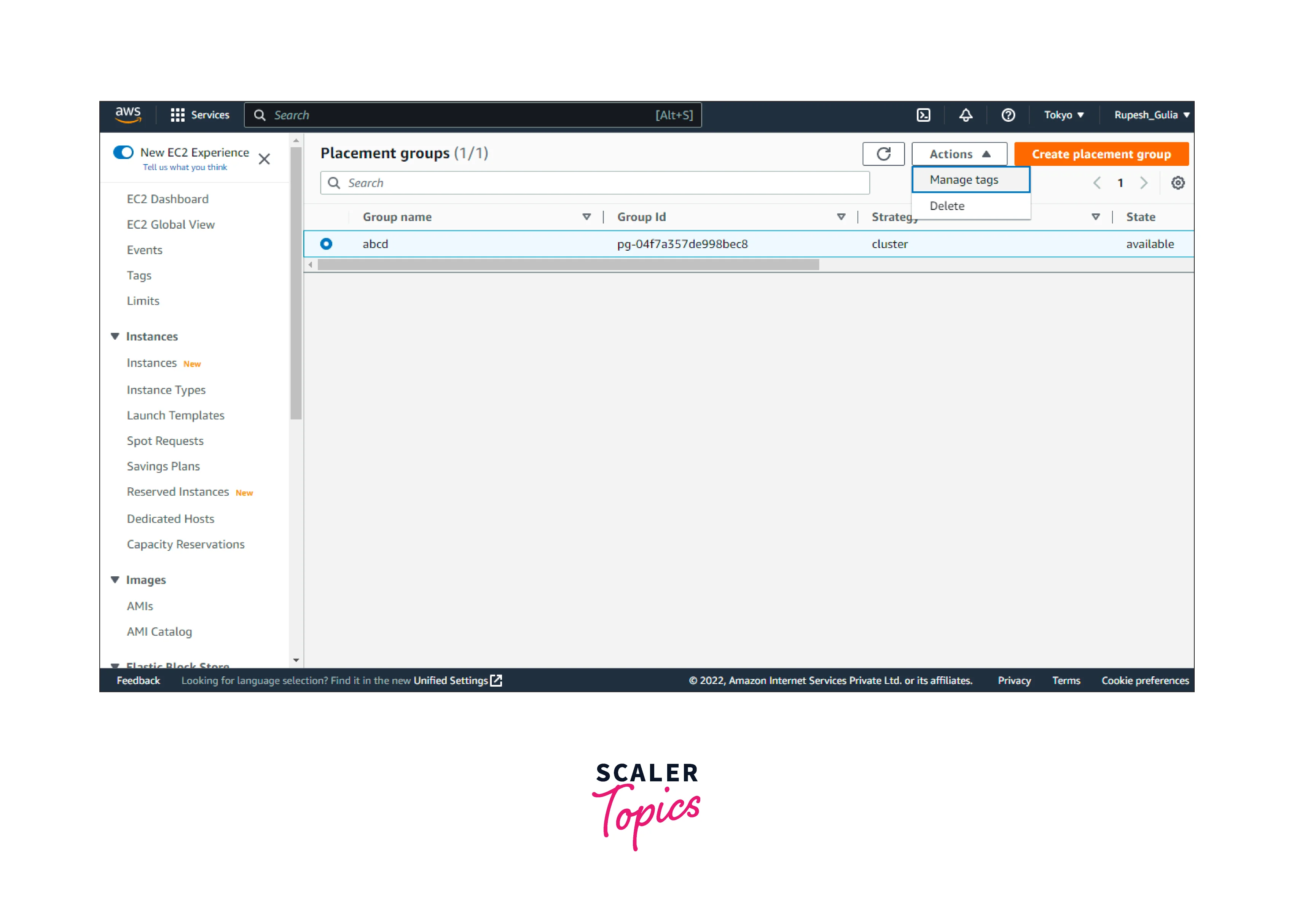

To View, Add, or Delete a Tag for an Existing Placement Group:

- Open the Amazon EC2 console.

- In the navigation pane, choose Placement Groups.

- Select the placement group, choose Actions, and then choose Manage tags, as shown in the screenshot.

- The Manage tags page displays any tags that are assigned to the placement group. To add or remove tags, do the following:

- To add a tag, choose Add tag, and then enter the tag key and value. You can add up to 50 tags.

- To delete a tag, choose Remove next to the tag that you want to delete.

- Choose Save to apply your changes.
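With the command line tools, tags on an existing placement group are typically managed through the generic EC2 tagging calls, addressed by the group's ID (`pg-...`). The following minimal Python sketch assumes boto3's EC2 `create_tags` and `delete_tags` calls. The helper `tag_change_requests` and the group ID are hypothetical.

```python
def tag_change_requests(group_id, add=None, remove=None):
    """Return (method, kwargs) pairs for boto3 EC2 create_tags / delete_tags calls
    that add and remove tags on an existing placement group, addressed by its ID."""
    calls = []
    if add:
        calls.append(("create_tags", {
            "Resources": [group_id],
            "Tags": [{"Key": k, "Value": v} for k, v in add.items()],
        }))
    if remove:
        calls.append(("delete_tags", {
            "Resources": [group_id],
            # Passing only the key deletes the tag whatever its current value is.
            "Tags": [{"Key": k} for k in remove],
        }))
    return calls


# Usage (requires AWS credentials; the group ID is a placeholder):
# import boto3
# ec2 = boto3.client("ec2")
# for method, kwargs in tag_change_requests("pg-0123456789abcdef0",
#                                           add={"env": "prod"}, remove=["owner"]):
#     getattr(ec2, method)(**kwargs)
```

These calls do not tag the instances inside the group; tag those separately.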

Launch Instances in a Placement Group

To Launch Instances Into Placement Groups Using The Console:

-

Access the AWS EC2 dashboard on link.

-

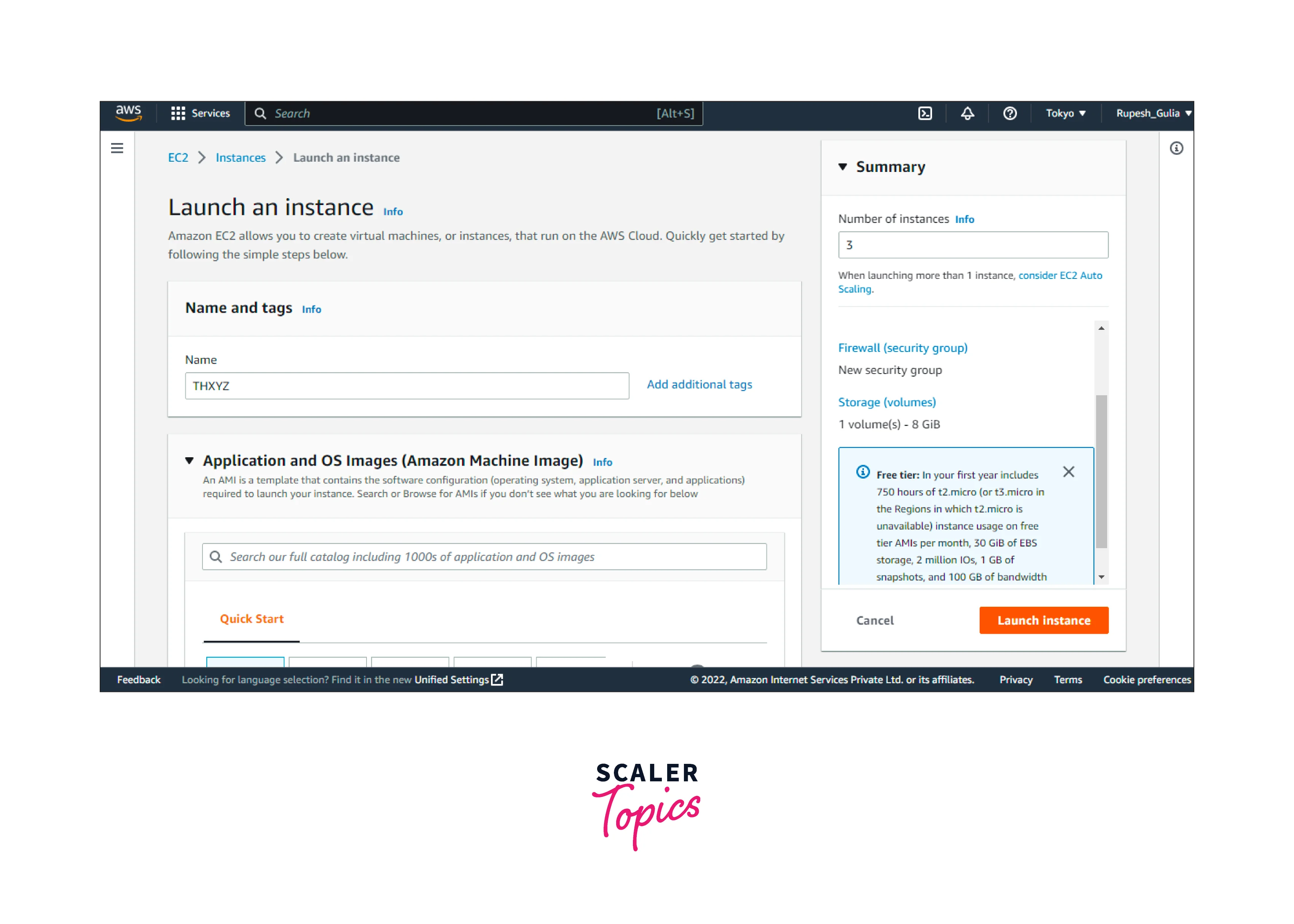

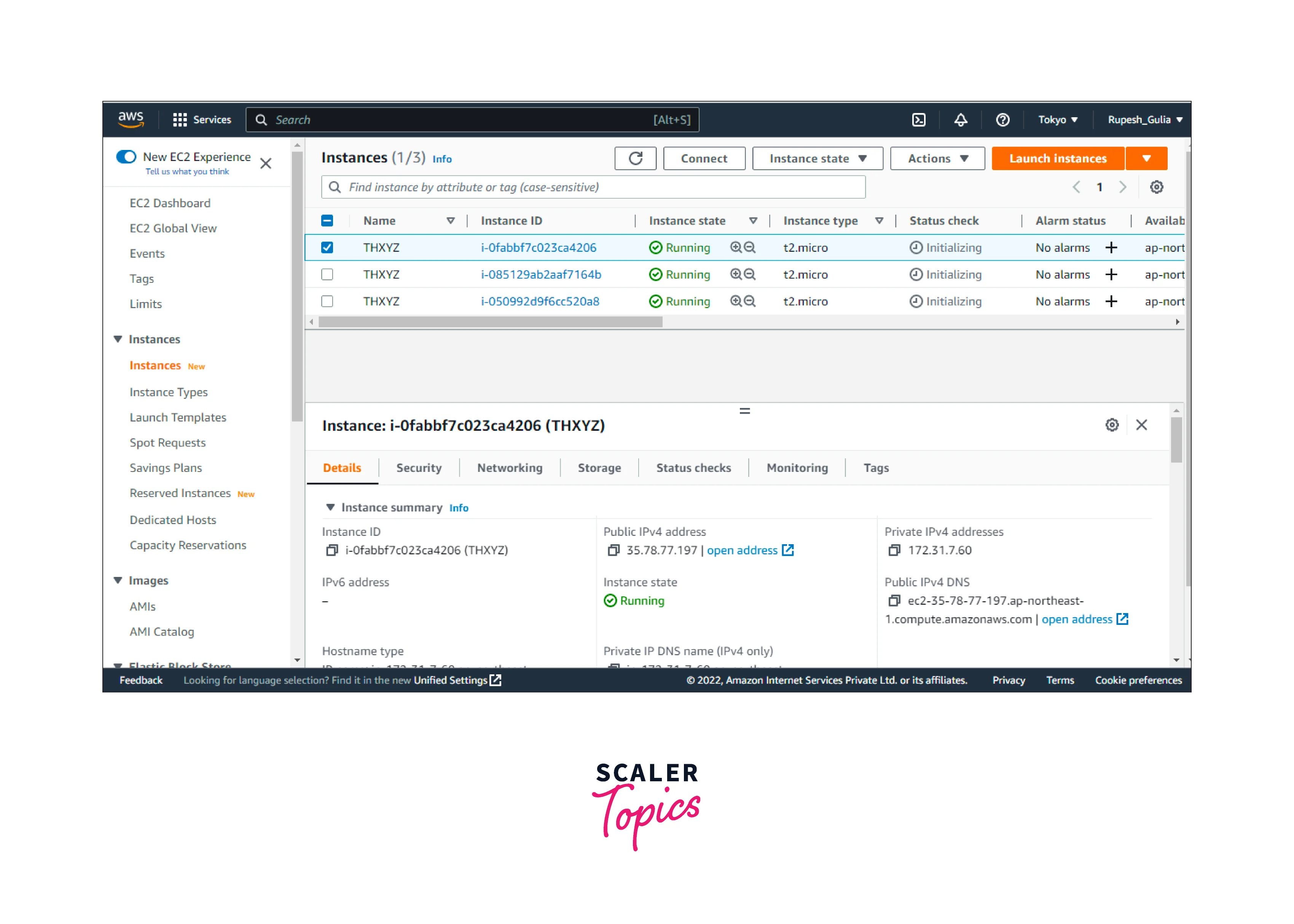

Inside the Launch instance window on the EC2 dashboard, select Launch instances, and afterward select Launch instances from the alternatives that display. Fill out the form as prompted, making sure to include the number of things:

-

Choose the type of an EC2 instance that may be deployed into placement groups under EC2 Instance types.

-

Inside the Summary field, below the Number of instances, indicate the entire number of instances required in this placement group, since users may be unable to add instances later.

-

Under Advanced Details, for the Placement group name, you can choose to add the instances to a new or existing placement group. If you choose a placement group with a partitioning strategy, for the Target partition, choose the partition in which to launch the instances.
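The console settings above map onto a single `run_instances` request. The following minimal Python sketch builds its keyword arguments for boto3. The helper `launch_request`, the AMI ID, and the group names are hypothetical. Setting `MinCount` equal to `MaxCount` asks EC2 to launch all of the instances or none, which matches the advice to launch everything in one request with one instance type.

```python
def launch_request(image_id, instance_type, count, group_name, partition_number=None):
    """Build kwargs for boto3 EC2 run_instances that launch `count` instances
    of one type into a placement group in a single request."""
    placement = {"GroupName": group_name}
    if partition_number is not None:
        # Only meaningful for partition placement groups (the "Target partition" setting).
        placement["PartitionNumber"] = partition_number
    return {
        "ImageId": image_id,
        "InstanceType": instance_type,
        # MinCount == MaxCount: EC2 launches all of the instances or none of them.
        "MinCount": count,
        "MaxCount": count,
        "Placement": placement,
    }


# Usage (requires AWS credentials; IDs and names are placeholders):
# import boto3
# boto3.client("ec2").run_instances(
#     **launch_request("ami-12345678", "c5.large", 4, "hpc-group"))
```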

Describe Instances in a Placement Group

To View the Placement Groups and Partition Number of an Instance Using The Console:

- Open the Amazon EC2 console.

- In the navigation pane, choose Instances, and then select the instance.

- On the Details tab, under Host and placement group, find Placement group. If the instance is not in a placement group, the field is empty; otherwise, it contains the name of the placement group. If the placement group is a partition placement group, Partition number contains the partition number for the instance.
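You can read the same placement fields from the API. The following minimal Python sketch builds a boto3 `describe_instances` filter on `placement-group-name` and groups the returned instance IDs by `Placement.PartitionNumber`. This is the kind of view a topology-aware application could consume. The helper names are hypothetical. For large fleets, consider `ec2.get_paginator("describe_instances")` instead of a single call.

```python
from collections import defaultdict


def describe_group_request(group_name):
    """Filter kwargs for boto3 EC2 describe_instances: instances in one placement group."""
    return {"Filters": [{"Name": "placement-group-name", "Values": [group_name]}]}


def instances_by_partition(response):
    """Map partition number -> instance IDs from a describe_instances response.

    Instances in cluster or spread groups have no PartitionNumber, so they are
    collected under the key None.
    """
    by_partition = defaultdict(list)
    for reservation in response.get("Reservations", []):
        for instance in reservation.get("Instances", []):
            partition = instance.get("Placement", {}).get("PartitionNumber")
            by_partition[partition].append(instance["InstanceId"])
    return dict(by_partition)


# Usage (requires AWS credentials):
# import boto3
# resp = boto3.client("ec2").describe_instances(**describe_group_request("hdfs-group"))
# print(instances_by_partition(resp))
```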

Change the Placement Group for an Instance

You can change the placement group for an instance in any of the following ways:

- Move an existing instance into a placement group

- Move an instance from one placement group to another

- Remove an instance from a placement group

Before you move or remove an instance, it must be in the stopped state. You can move or remove an instance using the AWS CLI or an AWS SDK.

To Move an Instance to a Placement Group Using The AWS CLI:

- Stop the instance using the stop-instances command.

- Use the modify-instance-placement command to specify the name of the placement group to which to move the instance.

- Start the instance using the start-instances command.
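The following minimal Python sketch covers the same three steps through an SDK, assuming boto3's EC2 client and its `instance_stopped` waiter. The helpers `move_instance_plan` and `run_plan` are hypothetical. The sketch relies on the ModifyInstancePlacement API treating an empty group name as "remove from the placement group"; verify that against the current API reference before depending on it.

```python
def move_instance_plan(instance_id, group_name):
    """Return boto3 EC2 calls, as (method, kwargs) pairs, that move an instance
    into `group_name`. An empty string removes it from its current group."""
    ids = {"InstanceIds": [instance_id]}
    return [
        ("stop_instances", ids),
        ("waiter:instance_stopped", ids),  # the instance must be stopped before moving
        ("modify_instance_placement", {"InstanceId": instance_id, "GroupName": group_name}),
        ("start_instances", ids),
    ]


def run_plan(client, plan):
    """Execute a plan against a boto3 EC2 client (or any object with the same methods)."""
    for method, kwargs in plan:
        if method.startswith("waiter:"):
            client.get_waiter(method.split(":", 1)[1]).wait(**kwargs)
        else:
            getattr(client, method)(**kwargs)


# Usage (requires AWS credentials; the instance ID is a placeholder):
# import boto3
# run_plan(boto3.client("ec2"), move_instance_plan("i-0abc", "my-new-group"))
```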

Delete a Placement Group

If you need to replace a placement group or no longer need one, you can delete it.

Requirement:

Before you can delete a placement group, it must contain no instances. You can terminate all instances that you launched in the placement group, move the instances to another placement group, or remove the instances from the placement group.

To Delete a Placement Group Using The Console:

- Open the Amazon EC2 console.

- In the navigation pane, choose Placement Groups.

- Select the placement group, and then choose Actions, Delete.

- When prompted for confirmation, enter Delete and then choose Delete.
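Because deletion requires an empty group, a script can check membership first. The following minimal Python sketch builds a boto3 `describe_instances` filter for the group and refuses to produce a `delete_placement_group` request while any instance is listed. The helper names are hypothetical. The set of states treated as "still a member" (everything except `terminated`) is an assumption of this sketch, not a documented rule.

```python
# Assumption: instances in these states still count as members of the group.
LIVE_STATES = ["pending", "running", "shutting-down", "stopping", "stopped"]


def members_request(group_name):
    """describe_instances filter kwargs for instances that may still block deletion."""
    return {"Filters": [
        {"Name": "placement-group-name", "Values": [group_name]},
        {"Name": "instance-state-name", "Values": LIVE_STATES},
    ]}


def blocking_instance_ids(response):
    """Instance IDs listed in a describe_instances response."""
    return [i["InstanceId"]
            for r in response.get("Reservations", [])
            for i in r.get("Instances", [])]


def delete_request(group_name, response):
    """Return delete_placement_group kwargs, or raise if instances remain."""
    blocking = blocking_instance_ids(response)
    if blocking:
        raise RuntimeError(f"placement group still contains: {', '.join(blocking)}")
    return {"GroupName": group_name}


# Usage (requires AWS credentials):
# import boto3
# ec2 = boto3.client("ec2")
# resp = ec2.describe_instances(**members_request("old-group"))
# ec2.delete_placement_group(**delete_request("old-group", resp))
```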

Rules and Limitations of Placement Groups

General Rules and Limitations:

Before you use placement groups, be aware of the following rules:

- You can create a maximum of 500 placement groups per account in each Region.

- The name that you specify for a placement group must be unique within your AWS account for the Region.

- You can't merge placement groups.

- An instance can be launched in only one placement group at a time; it can't span multiple placement groups.

- On-Demand Capacity Reservations and zonal Reserved Instances reserve capacity for EC2 instances in a specific Availability Zone. Instances in a placement group can use this reserved capacity. However, you can't explicitly reserve capacity for a placement group.

- You can't launch Dedicated Hosts in placement groups.
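The naming and quota rules can be checked before creating a group. The following minimal Python sketch inspects a boto3 `describe_placement_groups` response for one Region against the 500-group limit and the unique-name rule. The helper `name_problems` is hypothetical. Ignoring groups already in the `deleted` state is an assumption of this sketch.

```python
MAX_GROUPS_PER_REGION = 500


def name_problems(new_name, describe_response):
    """List rule violations for a proposed placement group name in one Region.

    Groups reported in the 'deleted' state are ignored (an assumption).
    """
    active = [g["GroupName"] for g in describe_response.get("PlacementGroups", [])
              if g.get("State") != "deleted"]
    problems = []
    if new_name in active:
        problems.append("name is already used in this Region")
    if len(active) >= MAX_GROUPS_PER_REGION:
        problems.append("Region already has the maximum of 500 placement groups")
    return problems


# Usage (requires AWS credentials):
# import boto3
# resp = boto3.client("ec2").describe_placement_groups()
# print(name_problems("hpc-group", resp))
```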

Cluster Placement Group Rules and Limitations:

- The following instance types are supported: current generation instances, except for burstable performance instances (for example, T2) and Mac1 instances.

- The following previous generation instances are also supported: A1, C3, cc2.8xlarge, cr1.8xlarge, G2, hs1.8xlarge, I2, and R3.

- A cluster placement group can't span multiple Availability Zones.

- The maximum network throughput speed of traffic between two instances in a cluster placement group is limited by the slower of the two instances. For applications with high-throughput requirements, choose an instance type with network connectivity that meets your requirements.

- For instances that are enabled for enhanced networking, instances within a cluster placement group can use up to 10 Gbps for single-flow traffic, while instances that are not within a cluster placement group can use up to 5 Gbps for single-flow traffic.

- For instances that are enabled for enhanced networking, traffic to and from Amazon S3 buckets within the same Region over the public IP address space or through a VPC endpoint can use all available instance aggregate bandwidth.

- You can launch multiple instance types into a cluster placement group. However, this reduces the likelihood that the required capacity will be available for your launch to succeed. We recommend using the same instance type for all instances in a cluster placement group.

- Network traffic to the internet and over an AWS Direct Connect connection to on-premises resources is limited to 5 Gbps.
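Two of the rules above combine into a quick estimate: a flow is bounded by the slower instance and by the single-flow cap. The following minimal Python sketch gives a rough upper bound for one TCP/IP flow between two enhanced-networking instances, using only the 10 Gbps and 5 Gbps figures quoted above. The function name and the example bandwidths are hypothetical, and real throughput also depends on the instance types, protocol, and workload.

```python
def single_flow_limit_gbps(bandwidth_a, bandwidth_b, same_cluster_group):
    """Rough upper bound (Gbps) on one TCP/IP flow between two enhanced-networking
    instances: the slower instance's bandwidth, capped by the single-flow limit."""
    flow_cap = 10 if same_cluster_group else 5
    return min(bandwidth_a, bandwidth_b, flow_cap)


print(single_flow_limit_gbps(25, 12.5, True))   # 10
print(single_flow_limit_gbps(25, 12.5, False))  # 5
print(single_flow_limit_gbps(2, 25, True))      # 2
```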

Partition Placement Group Rules and Limitations:

- A partition placement group supports a maximum of seven partitions per Availability Zone. The number of instances that you can launch in a partition placement group is limited only by your account limits.

- When instances are launched into a partition placement group, Amazon EC2 tries to evenly distribute the instances across all partitions. Amazon EC2 doesn't guarantee an even distribution of instances across all partitions.

- A partition placement group with Dedicated Instances can have a maximum of two partitions.

- Capacity Reservations do not reserve capacity in a partition placement group.

Spread Placement Group Rules and Limitations:

- A rack spread placement group supports a maximum of seven running instances per Availability Zone. For example, in a Region with three Availability Zones, you can run a total of 21 instances in the group, with seven instances in each Availability Zone.

- If you try to start an eighth instance in the same Availability Zone and the same spread placement group, the instance will not launch.

- If you need more than seven instances in an Availability Zone, we recommend that you use multiple spread placement groups.

- Using multiple spread placement groups does not provide guarantees about the spread of instances between groups, but it does help ensure the spread for each group, thus limiting the impact from certain classes of failures.

- Spread placement groups are not supported for Dedicated Instances.

- Host level spread placement groups are only supported for placement groups on AWS Outposts. There are no restrictions on the number of running instances with host level spread placement groups.

- Capacity Reservations do not reserve capacity in a spread placement group.

Conclusion

- EC2 placement groups govern how instances are placed on the underlying hardware. AWS offers three types of placement groups:

- Cluster

- Partition

- Spread

- A partition placement group divides instances into logical partitions; instances in one partition do not share underlying hardware with instances in other partitions.

- A spread placement group is a group of instances that are each placed on distinct underlying hardware, that is, each instance on its own rack, and each rack has its own network and power source.

- A cluster placement group is a logical grouping of instances within a single Availability Zone that lets the instances communicate with one another over high-bandwidth, low-latency connections.

- Depending on the needs of your application, AWS offers several placement options for your EC2 instances. You can use placement groups to help your application achieve low latency or high availability.