Recursion Tree Method

The Recursion Tree Method resolves recurrence relations by converting them into recursive trees, where each node signifies the cost at different recursion levels. This visual representation simplifies understanding. Recursion, vital in computer science and mathematics, enables functions to self-reference. Recursion trees visually depict the iterative execution of recursive functions, aiding comprehension in problem-solving.

Types of Recursion

Broadly speaking, there are two types of recursion namely,

- Linear Recursion

- Tree Recursion

Linear Recursion

- A linear recursive function is a function that only makes a single call to itself each time the function runs. The factorial function is a good example of linear recursion. A linearly recursive function takes linear time to complete its execution that’s why it is called linear recursion.

- Consider the psuedo-code written below,

- If we take a look at this function doSomething(n), it takes a parameter n and does some computation and in the end, it calls again the same function with smaller values.

- Suppose T(n) is the time required to perform all of the computation when the function doSomething() is called with a parameter value n. We can write a recurrence relation for this as well, T(n) = T(n-1) + K. Here K is some constant. Constant K is added because some time is also needed to allocate or deallocate memory to a variable, or doing some mathematical task. in the function. The time is very small and negligible so we use K to define it here.

- The time complexity of this recursive program can be easily determined as the function doSomething() is called n times in the worst case. More formally the time complexity of the function is O(N).

Tree Recursion

- Tree Recursion is just a phrase to describe when you make a recursive call more than once in your recursive case. The fibonacci function is a good example of Tree recursion. The time complexity of tree recursive function is not linear, they run in exponential time.

- Consider the pseudo-code written below,

- Here the function doSomething(n) is almost similar to the previous one, the only difference is that one more call is being made to the same function with a smaller value of n.

- Let's write the recurrence relation for this function as well, T(n) = T(n-1) + T(n-2) + k. Again K is some constant here.

- These types of recursion are called tree recursion where more than one call is made to the same function with smaller values. Now the interesting part, what is the time complexity of this function?

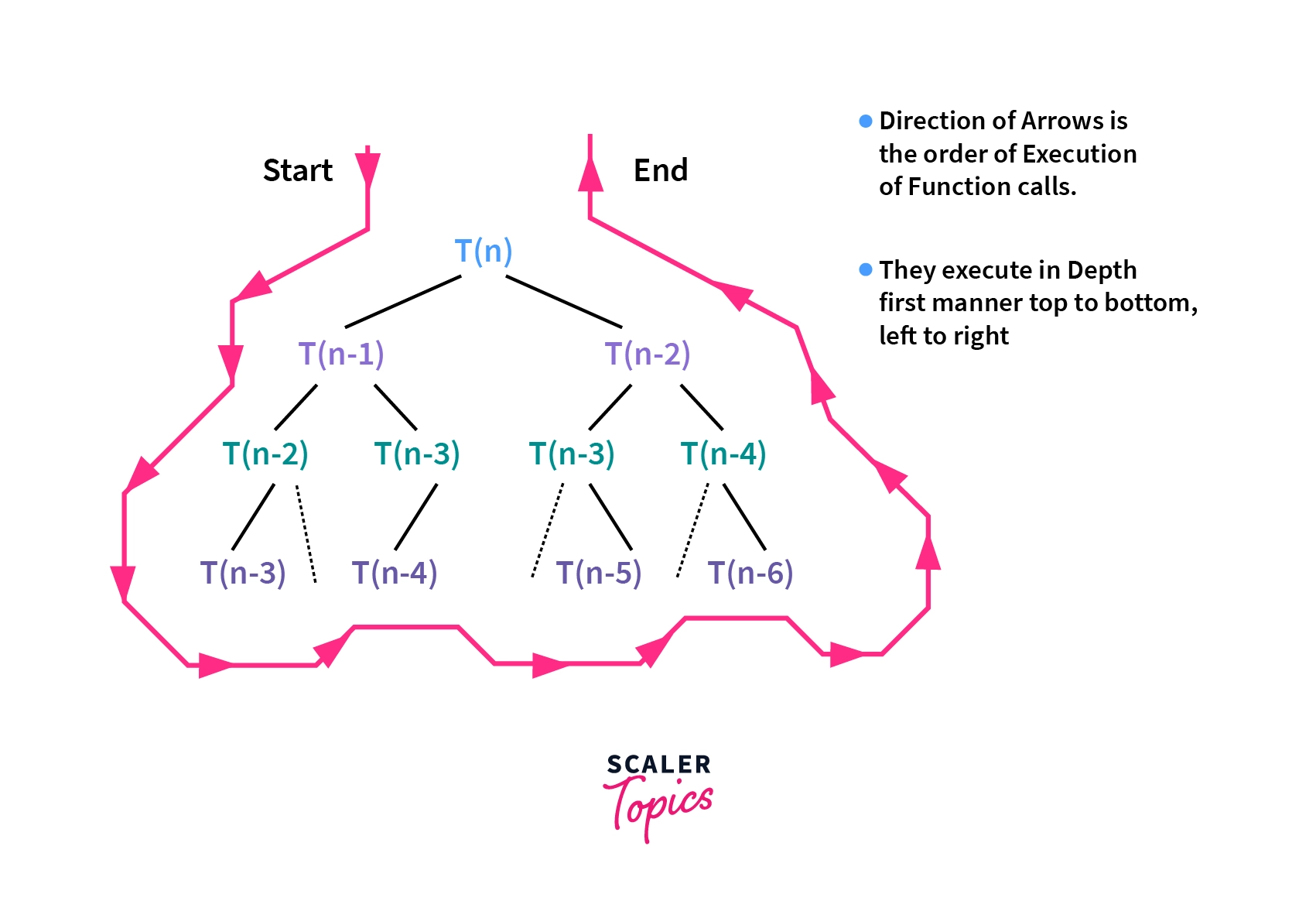

- Take the below recursion tree for the same function like a hint and take a guess.

- You may realize that it's hard to determine the time complexity by directly looking into a recursive function, especially when it's a tree recursion. There are a lot of ways to determine the time complexity of such functions, one of them is Recursion Tree Method. Let's understand it more deeply.

What is the Recursion Tree Method?

- Recursion tree method is used to solve recurrence relations like or the two we have discussed above in types of recursion section. Generally, these recurrence relations follow the divide and conquer approach to solve a problem.

- When a problem is divided into smaller subproblems, there is also some time needed to combine the solutions to those subproblems to form the answer to the original problem.

- For example, the recurrence relation for Merge sort is . This additional O(N) is the time required to combine the solutions of two subproblems of size which is true at the implementation level as well. We merge two sorted arrays to form a new sorted array in linear time in the merge step of Merge sort.

- For example, the recurrence relation for Binary search is , we know that in Binary search the search space is reduced to half in each iteration. As soon as we find the result, we return from the function. This is a constant time operation, this is the reason why is added in the recurrence relation.

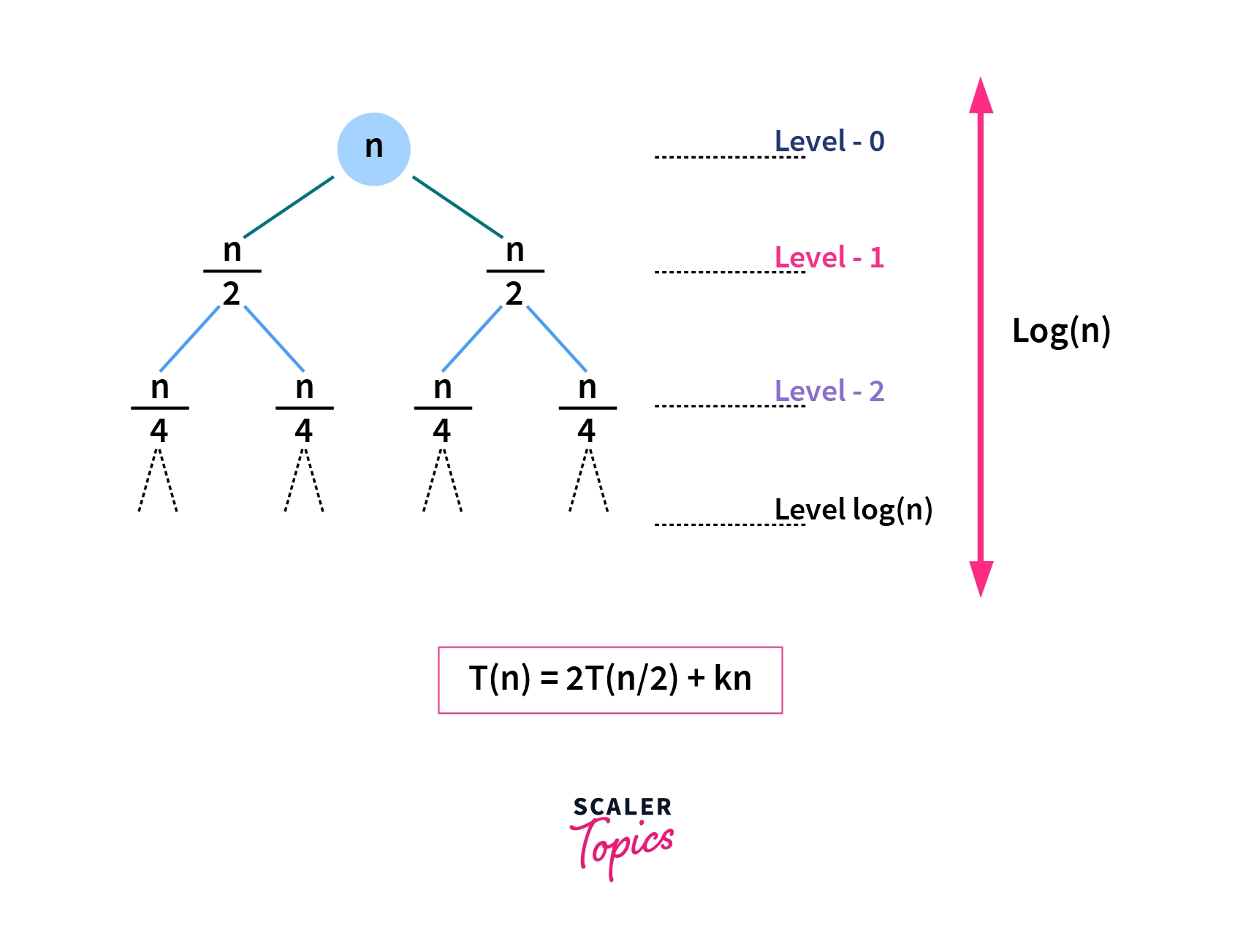

- Consider the recurrence relation, . Here Kn is the time needed to combine the solutions of subproblems of size .

- Let's draw the recursion tree for the recurrence relation stated above,

By analyzing the above recursion tree we can make a few deductions,

- The value of the node at each level is nothing but the size of the problem at that level. At level 0 the problem size is , at level 1 problem size is , and so on.

- The height of this recursion tree is equal to the number of levels in the tree, in general, we define the height of the tree as equal to , where n is the problem size. This is because we already discussed that recurrence relation uses the divide and conquer approach to solve a problem, and we only need steps to reach problem size 1 from problem size n.

- For example, consider the value of , how many steps are needed to reach N = 1, if we are allowed to divide N by 2 at each step? Answer is 4 steps, which is the value of log(16) base 2 because we are dividing by 2 at each step.

- , since

- so,

- We consider the second term in recurrence as root at each level.

Although this method uses 'tree' in its name, you will be able to understand this method even with little or no knowledge of trees.

Steps to Solve Recurrence Relations Using Recursion Tree

In the recursion tree method, the time required to solve a subproblem is referred to as the cost of the subproblem. So if you find "cost" word associated with the recursion tree then it means nothing but the time required to solve a subproblem.

To solve a recurrence relation using the recursion tree method, a few steps must be followed. They are,

- Draw the recursion tree for the given recurrence relation.

- Calculate the height of the recursion tree formed.

- Calculate the cost(time required to solve all the subproblems at a level) at each level.

- Calculate the total number of nodes at each level in the recursion tree.

- Sum up the cost of all the levels in the recursion tree.

Let's understand all of these steps with a few examples.

Example

- Consider the recurrence relation,

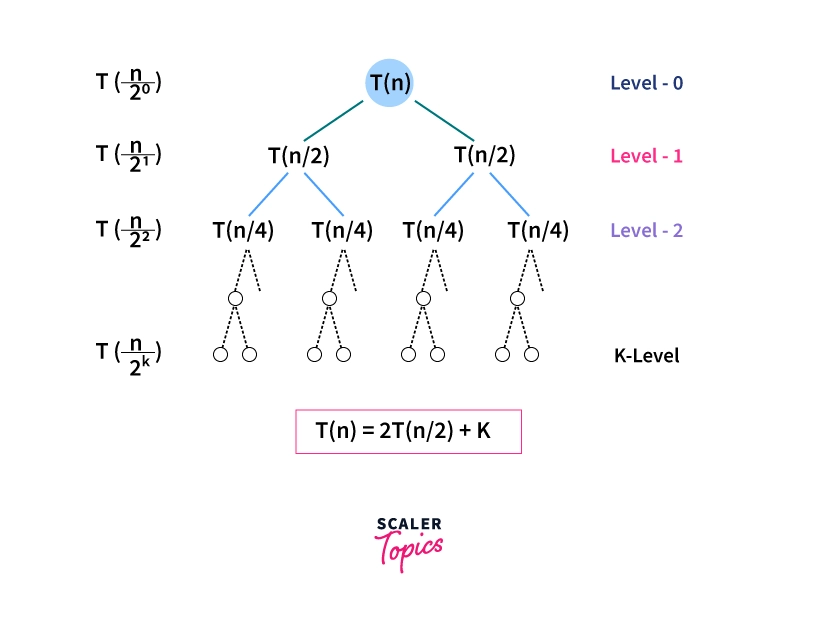

T(n) = 2T(n/2) + K

Solution

The given recurrence relation shows the following properties,

- A problem size n is divided into two sub-problems each of size n/2. The cost of combining the solutions to these sub-problems is K.

- Each problem size of n/2 is divided into two sub-problems each of size n/4 and so on.

- At the last level, the sub-problem size will be reduced to 1. In other words, we finally hit the base case.

Let's follow the steps to solve this recurrence relation,

Step. 1 Draw the Recursion Tree

Step. 2 Calculate the Height of the Tree

- Since we know that when we continuously divide a number by , there comes a time when this number is reduced to . Same as with the problem size , suppose after divisions by 2, becomes equal to 1, which implies, (n / 2^k) = 1

- Here is the problem size at the last level and it is always equal to .

- Now we can easily calculate the value of from the above expression by taking to both sides. Below is a more clear derivation,

So the height of the tree is .

Step. 3 Calculate the cost at each level

- Cost at Level-0 = K, two sub-problems are merged.

- Cost at Level-1 = K + K = 2*K, two sub-problems are merged two times.

- Cost at Level-2 = K + K + K + K = 4*K, two sub-problems are merged four times. and so on....

Step. 4 Calculate the number of nodes at each level

Let's first determine the number of nodes in the last level. From the recursion tree, we can deduce this

- have node

- have nodes

- have nodes

- have nodes

So the level should have nodes i.e. n nodes.

Step. 5 Sum up the cost of all the levels

- The total cost can be written as,

- Total Cost = Cost of all levels except last level + Cost of last level

- Total Cost = Cost for level-0 + Cost for level-1 + Cost for level-2 + .... + Cost for level-log(n) + Cost for last level

- The cost of the last level is calculated separately because it is the base case and no merging is done at the last level so, the cost to solve a single problem at this level is some constant value. Let's take it as .

- Let's put the values into the formulae,

- T(n) = + + + .... +

- T(n) =

- T(n) = +

- If you closely take a look to the above expression, it forms a Geometric progression (). The sum of GP is given by . Here is the first term and r is the common ratio.

- In the GP formed above, and , after solving this we get,

Thus, T(n) = O(n)

That's how we step by step solve any recurrence relation using the recursion tree method.

Ready to Build Strong Foundations? Enroll Now in Our Data Structures and Algorithms Course for Coding Excellence!

Conclusion

- Recursion is a method of solving a large problem where the solution depends on the solutions of smaller instances of the same problem.

- Broadly speaking there are two types of recursion, Linear Recursion, and Tree Recursion.

- There are a lot of methods to solve recurrence relations, the Recursion tree method is one of them.

- Generally, these recurrence relations are just a mathematical way to define a recursive problem. There are a few steps that should be followed to solve a recurrence relation using the recursion tree method.