Introduction to Kubernetes for DevOps

Introduction

Definition of Kubernetes

Kubernetes is an open-source container orchestration platform that automates the deployment, scaling, and management of containerized applications, which makes it a crucial tool in DevOps practices. It provides a standardized way to deploy and run applications packaged as container images, optimizing resource utilization, enabling scalability, and ensuring high availability. Kubernetes integrates into DevOps pipelines, supporting continuous integration and continuous deployment (CI/CD), and abstracts the underlying infrastructure behind a consistent, declarative approach to managing applications. The result is streamlined deployment, greater operational efficiency, and faster, more reliable software delivery.

Importance of Kubernetes in DevOps

Kubernetes has emerged as a critical tool in the DevOps ecosystem. The following key points show its significance in modern software development and operations workflows:

- Container Orchestration : Kubernetes automates the deployment, scaling, and management of containerized applications, providing a standardized way to deploy and run applications packaged in containers. This enables DevOps teams to manage complex application deployments across multiple environments.

- Resource Efficiency : Kubernetes optimizes resource utilization by scheduling containers onto nodes according to the CPU and memory they request, packing workloads efficiently onto the available compute resources. This maximizes the cost-effectiveness of the infrastructure and reduces waste.

- Scalability and High Availability : Kubernetes provides built-in horizontal scaling, with vertical scaling available through add-ons such as the Vertical Pod Autoscaler, allowing DevOps teams to handle changes in demand and keep applications highly available. Some Kubernetes distributions also provide automatic high-availability clustering. Kubernetes also includes self-healing capabilities that automatically restart failed containers or reschedule them to healthy nodes.

- Infrastructure Abstraction : Kubernetes abstracts the underlying infrastructure, allowing DevOps teams to define and manage application deployments declaratively using configuration files. This enables a consistent and portable way to deploy and manage applications across different cloud providers or on-premises environments.

- Continuous Integration and Deployment (CI/CD) : Kubernetes integrates seamlessly with popular CI/CD tools, enabling DevOps teams to automate the process of building, testing, and deploying containerized applications. This allows for faster and more reliable software delivery, reducing manual errors and ensuring consistency across different environments.

- Service Discovery and Load Balancing : Kubernetes provides built-in service discovery and load balancing capabilities, making it easy for DevOps teams to expose and manage services running in containers. This enables seamless communication between services and ensures reliable and scalable application communication.

- Ecosystem and Community : Kubernetes has a large and vibrant ecosystem with a strong community of users, contributors, and vendors. This provides access to a rich set of tools, extensions, and best practices that can be leveraged by DevOps teams to enhance their application deployments and operations.

Kubernetes Architecture and Components

Understanding Kubernetes Architecture

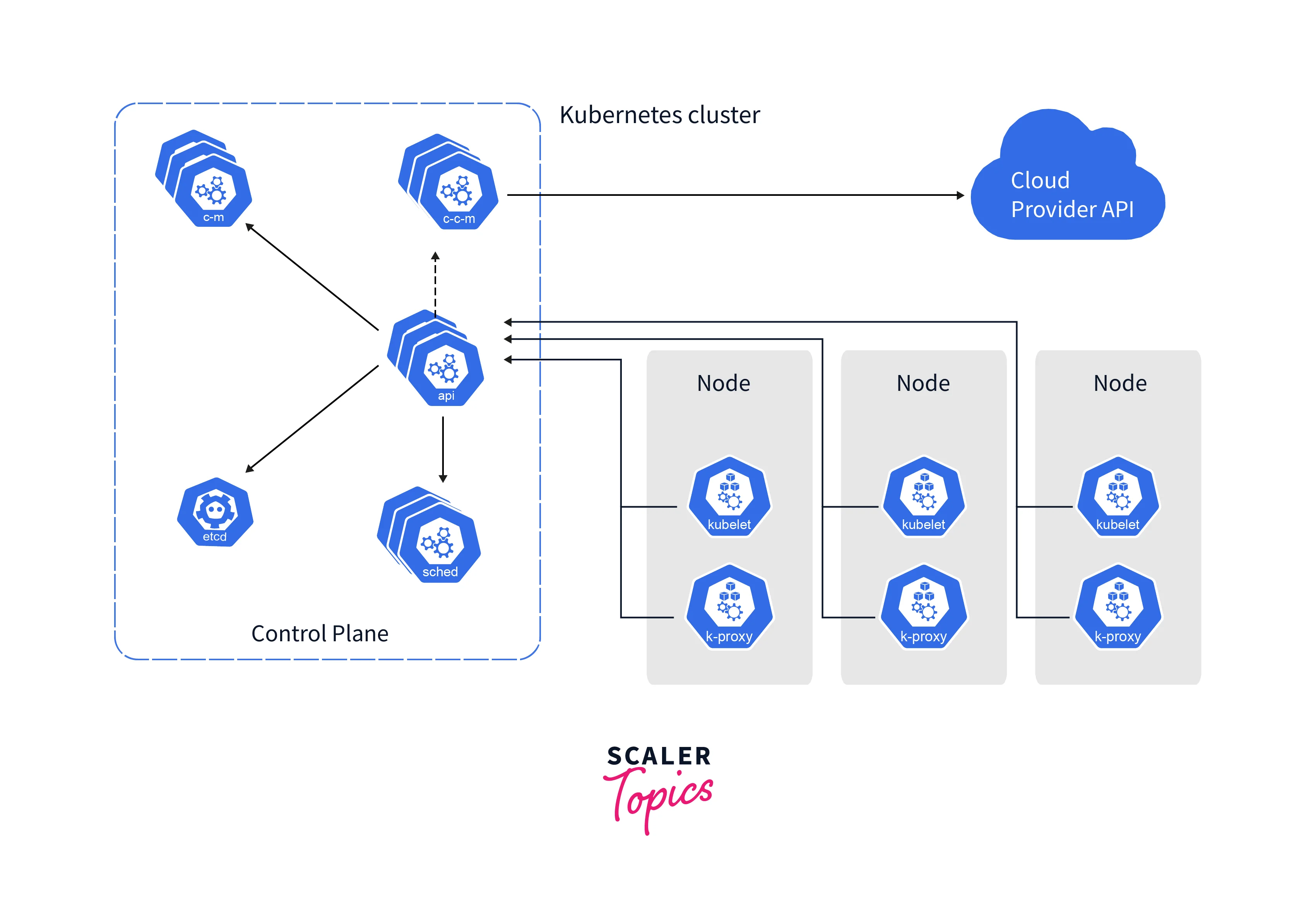

Kubernetes (K8s) architecture follows a control plane/worker node model: the control plane (historically called the master node) manages the overall cluster state, and the worker nodes host and run containers. This architecture provides a highly scalable, flexible, and resilient platform for deploying, scaling, and managing container workloads in modern IT environments.

Here are the main components of the Kubernetes architecture:

- Master (Control Plane) Node : The master node runs the control plane of the Kubernetes cluster and is responsible for managing the overall state and configuration of the cluster. It includes components such as the API server, etcd (a distributed key-value store for configuration data), the controller manager, and the scheduler.

- Worker Nodes : The worker nodes (called minions in early Kubernetes releases) are responsible for running containers. They execute containers using a container runtime such as containerd or CRI-O (Docker Engine was supported directly through dockershim until Kubernetes 1.24) and communicate with the control plane to ensure the desired state of the cluster is maintained.

- Pods : Pods are the smallest deployable units in Kubernetes. A Pod encapsulates one or more containers that share the same network namespace, so they can communicate with each other over localhost.

- Services : Services are used to expose pods to the network, enabling communication between pods and external services. They provide a stable IP address and DNS name, and can load balance traffic among multiple pods.

- Volumes : Volumes provide storage to the containers in a Pod. Data in a volume survives container restarts, and persistent volumes keep data even when a Pod is rescheduled to another node.

- Labels and Selectors : Labels attach key/value metadata to resources such as pods, services, and volumes, while selectors filter and identify resources based on their labels.

- Controllers : Controllers are used to ensure the desired state of the cluster by managing the replication, scaling, and rolling updates of pods.

- Add-Ons : Add-ons are optional components that can be deployed in a Kubernetes cluster to provide additional functionality, such as networking (e.g., a CNI plugin like Calico), cluster DNS (e.g., CoreDNS), monitoring (e.g., Prometheus), and logging (e.g., Fluentd).
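Selectors are easiest to reason about as a matching rule. The following is a minimal Python sketch, assuming only equality-based matching in the style of `matchLabels`: a resource matches when every key/value pair in the selector also appears in its labels. The Pod names and labels are hypothetical, and set-based `matchExpressions` are not modeled.

```python
# Minimal sketch of equality-based label selector matching (matchLabels style).
# Set-based matchExpressions (In, NotIn, Exists, ...) are not modeled.


def matches(selector: dict, labels: dict) -> bool:
    """Return True if every key/value pair in the selector appears in labels."""
    return all(labels.get(key) == value for key, value in selector.items())


def select(selector: dict, resources: list) -> list:
    """Return the names of resources whose labels satisfy the selector."""
    return [r["name"] for r in resources if matches(selector, r.get("labels", {}))]


# Hypothetical Pods with example labels
pods = [
    {"name": "web-1", "labels": {"app": "web", "tier": "frontend"}},
    {"name": "web-2", "labels": {"app": "web", "tier": "frontend"}},
    {"name": "db-1", "labels": {"app": "db", "tier": "backend"}},
]

print(select({"app": "web"}, pods))                     # ['web-1', 'web-2']
print(select({"app": "web", "tier": "backend"}, pods))  # []
```

This is the same idea a Service or Deployment relies on when it finds "its" Pods: adding a label to the selector narrows the set of matching resources.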

Key Kubernetes Components and Their Roles

Kubernetes comprises several key components that create a highly resilient and scalable containerized infrastructure. Here are some of the key Kubernetes components and their roles:

Control Plane : The Control Plane is the brain of a Kubernetes cluster and manages the overall state and configuration of the cluster. It consists of several components, including:

- etcd : etcd is a distributed key-value store that holds the cluster's configuration data, such as cluster state, configuration settings, and metadata. It serves as the "single source of truth" for the entire cluster.

- API Server : The API Server is the front-end component that exposes the Kubernetes API and accepts requests from clients, such as the kubectl command-line tool or other applications. It validates and processes API requests and updates the cluster state stored in etcd accordingly.

- Controller Manager : The Controller Manager is responsible for running various controllers that automate tasks in the cluster, such as maintaining the desired number of Pod replicas, managing services, and handling updates to resources. It continuously monitors the state of the cluster and ensures that the desired state is maintained.

- Scheduler : The Scheduler is responsible for assigning Pods to Nodes based on resource availability, constraints, and other policies. It ensures that Pods are scheduled to run on appropriate Nodes and optimizes resource utilization in the cluster.

Nodes : Nodes are worker machines that run containerized applications as Pods. They are responsible for running containers and managing their lifecycle. Nodes are managed by the control plane components and report their status back to the control plane. Some important components of a Node include:

- Container Runtime : The container runtime is responsible for pulling images and for starting, stopping, and isolating containers throughout their lifecycle. containerd and CRI-O are common runtimes used through the Container Runtime Interface (CRI); Docker Engine can still be used via the cri-dockerd adapter.

- Kubelet : The Kubelet is an agent that runs on each Node and communicates with the control plane. It ensures that the containers described in each Pod are running as expected, monitors their health, and reports their status back to the control plane. It also mounts volumes for Pods and, through the container runtime and network plugin, sets up Pod networking on the Node.

- kube-proxy : kube-proxy runs on each Node and implements Services by maintaining network rules (for example, iptables or IPVS rules) that route and load-balance traffic sent to a Service's virtual IP across the Pods backing it.

Containers : Containers are lightweight, portable, and isolated runtime environments that package applications and their dependencies into a single executable unit. Containers run inside Pods and are managed by the container runtime, such as containerd, which is responsible for starting, stopping, and isolating containers on Nodes.

Networking : Networking in Kubernetes connects Pods running on different Nodes and enables communication between them. Kubernetes relies on Container Network Interface (CNI) plugins such as Calico, Flannel, and Cilium, which give every Pod its own IP address so that Pods can communicate with each other directly.

Services : Services expose Pods to the network within and outside the cluster. They provide a stable IP address and DNS name and load-balance traffic across multiple Pods. Kubernetes supports several Service types: ClusterIP (reachable only inside the cluster), NodePort (exposed on a port of every Node), and LoadBalancer (exposed through an external load balancer, typically provided by a cloud provider).

Volumes : Volumes provide storage to containers running in Pods. Volumes can store data that needs to persist across Pod rescheduling, such as databases or file storage. Kubernetes supports various volume types, such as emptyDir (deleted together with its Pod), hostPath (tied to a specific Node), and PersistentVolumes (independent of any Pod's lifecycle), which provide different levels of durability and persistence.

Ingress : Ingress exposes HTTP and HTTPS services running in Pods to external networks. An Ingress resource defines routing rules, and an Ingress controller (such as ingress-nginx) acts as the reverse proxy that applies them, providing TLS termination and load balancing for incoming traffic to services in the cluster.
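The Scheduler's job of assigning Pods to Nodes based on resource availability can be pictured as a filter-then-score pass. The Python sketch below is a simplified model, not kube-scheduler's actual code: it filters out Nodes without enough unrequested CPU and memory, then prefers the Node left with the largest free fraction after placement (similar in spirit to "least allocated" scoring). The `Node` and `Pod` classes, their field names, and the numbers are hypothetical; the real scheduler runs many more filter and score plugins (affinity, taints, topology spread, and so on).

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Node:
    """Hypothetical, simplified view of a Node's allocatable and requested resources."""
    name: str
    cpu_capacity_m: int    # allocatable CPU in millicores
    cpu_requested_m: int   # CPU already requested by Pods on the Node
    mem_capacity_mi: int   # allocatable memory in MiB
    mem_requested_mi: int  # memory already requested by Pods on the Node


@dataclass
class Pod:
    name: str
    cpu_request_m: int
    mem_request_mi: int


def fits(node: Node, pod: Pod) -> bool:
    """Filter step: the Node must have enough unrequested CPU and memory."""
    return (node.cpu_requested_m + pod.cpu_request_m <= node.cpu_capacity_m
            and node.mem_requested_mi + pod.mem_request_mi <= node.mem_capacity_mi)


def score(node: Node, pod: Pod) -> float:
    """Score step: average fraction of CPU and memory still free after placement."""
    cpu_free = node.cpu_capacity_m - node.cpu_requested_m - pod.cpu_request_m
    mem_free = node.mem_capacity_mi - node.mem_requested_mi - pod.mem_request_mi
    return (cpu_free / node.cpu_capacity_m + mem_free / node.mem_capacity_mi) / 2


def schedule(pod: Pod, nodes: List[Node]) -> Optional[str]:
    """Return the chosen Node name, or None if no Node fits (the Pod stays Pending)."""
    feasible = [n for n in nodes if fits(n, pod)]
    if not feasible:
        return None
    return max(feasible, key=lambda n: score(n, pod)).name


# Hypothetical Nodes: node-a's CPU is almost fully requested
nodes = [
    Node("node-a", 4000, 3500, 8192, 2048),
    Node("node-b", 4000, 1000, 8192, 4096),
    Node("node-c", 2000, 500, 4096, 1024),
]
print(schedule(Pod("web", cpu_request_m=500, mem_request_mi=512), nodes))   # node-c
print(schedule(Pod("big", cpu_request_m=5000, mem_request_mi=512), nodes))  # None
```

Note that the decision uses resource requests rather than live usage, which is why setting realistic requests matters for good placement.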

Kubernetes API and Its Use in DevOps

The Kubernetes API (Application Programming Interface) is a RESTful HTTP API served by the API server. Cluster components and external clients communicate through it, and it gives developers, operators, and tools a standardized way to interact with the Kubernetes control plane and manage cluster resources, such as Pods, Services, Deployments, and more.

Below are some key uses of the Kubernetes API in DevOps:

- Automate application deployment, scaling, and updates.

- Define and manage infrastructure as code (IaC) for Kubernetes clusters.

- Integrate with CI/CD pipelines for automated deployments.

- Manage configuration settings for applications running in Kubernetes clusters.

- Monitor and troubleshoot the state of the cluster, applications, and resources.

- Manage resources, such as CPU, memory, and storage, for optimal utilization.

- Enable rolling updates and rollbacks of applications in production.

- Support multi-cloud and hybrid cloud deployments with a consistent interface.

- Define custom resource definitions (CRDs) for extended functionality.

- Integrate with ecosystem tools and platforms for enhanced management and operation of containerized applications in Kubernetes clusters.
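Every one of these uses goes through REST paths served by the API server. As a small illustration, the Python helper below builds those paths: resources in the core group live under `/api/v1`, other API groups such as `apps` live under `/apis/<group>/<version>`, and namespaced resources add a `namespaces/<name>` segment. The helper function itself is hypothetical; only the path layout follows the Kubernetes API conventions.

```python
from typing import Optional


def resource_path(group: str, version: str, resource: str,
                  namespace: Optional[str] = None,
                  name: Optional[str] = None) -> str:
    """Hypothetical helper that builds the REST path for a Kubernetes resource.

    The core group has an empty group name and is served under /api;
    every other group (apps, batch, ...) is served under /apis/<group>.
    """
    prefix = f"/api/{version}" if group == "" else f"/apis/{group}/{version}"
    parts = [prefix]
    if namespace is not None:
        parts.append(f"namespaces/{namespace}")  # omitted for cluster-scoped resources
    parts.append(resource)
    if name is not None:
        parts.append(name)
    return "/".join(parts)


print(resource_path("", "v1", "pods", namespace="default"))
# /api/v1/namespaces/default/pods
print(resource_path("apps", "v1", "deployments", namespace="prod", name="web"))
# /apis/apps/v1/namespaces/prod/deployments/web
print(resource_path("", "v1", "nodes"))
# /api/v1/nodes
```

A path built this way can be fetched with `kubectl get --raw`, for example `kubectl get --raw /api/v1/namespaces/default/pods`, which returns the raw JSON list of Pods.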

Best Practices for Kubernetes in DevOps

Best Practices for Deploying Applications in Kubernetes

- Use declarative configuration with YAML or JSON files for reproducibility.

- Utilize container images for consistent packaging and deployment.

- Implement namespace isolation to enforce access controls and resource limits.

- Define readiness and liveness probes for application health checks.

- Use rolling updates to minimize downtime during application updates.

- Securely manage sensitive information with Kubernetes secrets.

- Monitor and log applications for performance insights and issue troubleshooting.

- Regularly back up application data and test restores to ensure data integrity and reliability.

- Use Horizontal Pod Autoscaling (HPA) to adjust the number of Pod replicas dynamically based on workload.

- Follow the principle of "Immutable Infrastructure" by treating containers as disposable and recreating them for updates or fixes instead of modifying them in place.
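Several of these practices (declarative configuration, pinned container images, readiness and liveness probes, rolling updates, and explicit resource requests) come together in a single Deployment manifest. The sketch below generates one as JSON from Python; `kubectl apply -f` accepts JSON just as it accepts YAML. The image name, port 8080, and the `/ready` and `/healthz` endpoints are assumptions for illustration; substitute whatever your application actually exposes.

```python
import json


def deployment_manifest(name: str, image: str, replicas: int = 3) -> dict:
    """Build a Deployment manifest (apps/v1) as a plain dict."""
    labels = {"app": name}  # the selector must match the Pod template labels
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name, "labels": labels},
        "spec": {
            "replicas": replicas,
            "selector": {"matchLabels": labels},
            "strategy": {
                "type": "RollingUpdate",
                # Add one new Pod before removing an old one; never drop below `replicas`
                "rollingUpdate": {"maxSurge": 1, "maxUnavailable": 0},
            },
            "template": {
                "metadata": {"labels": labels},
                "spec": {
                    "containers": [{
                        "name": name,
                        "image": image,  # assumption: a pinned tag, not :latest
                        "ports": [{"containerPort": 8080}],  # assumed app port
                        "resources": {
                            "requests": {"cpu": "250m", "memory": "256Mi"},
                            "limits": {"memory": "512Mi"},
                        },
                        # Assumed endpoints: /ready gates traffic, /healthz triggers restarts
                        "readinessProbe": {
                            "httpGet": {"path": "/ready", "port": 8080},
                            "periodSeconds": 5,
                        },
                        "livenessProbe": {
                            "httpGet": {"path": "/healthz", "port": 8080},
                            "initialDelaySeconds": 10,
                            "periodSeconds": 10,
                        },
                    }],
                },
            },
        },
    }


if __name__ == "__main__":
    manifest = deployment_manifest("web", "registry.example.com/web:1.4.2")
    print(json.dumps(manifest, indent=2))
```

For example, redirect the output to `web.json` and run `kubectl apply -f web.json`. Because the manifest is generated from code and applied declaratively, rerunning the same command converges the cluster on the same state.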

Scaling Applications in Kubernetes

- Use Horizontal Pod Autoscaling (HPA) for automatic scaling based on metrics.

- Design stateless applications to enable seamless scaling and resiliency.

- Optimize resource allocation to prevent overprovisioning.

- Use Deployments, which manage ReplicaSets, to maintain the desired number of replicas and perform rolling updates.

- Consider vertical scaling for resource-intensive applications.

- Define affinity and anti-affinity rules for better load distribution and fault tolerance.

- Plan for application-level scaling using distributed databases, caching, and queuing systems.

- Continuously monitor and adjust scaling parameters for optimal performance.

- Conduct performance and load testing to validate scalability.

- Plan for failure and design applications to handle failures gracefully with retries, circuit breakers, and fault-tolerant architectures.
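For the Horizontal Pod Autoscaling recommendation above, the Kubernetes documentation describes the core calculation as `desiredReplicas = ceil(currentReplicas * currentMetricValue / desiredMetricValue)`, skipped when the ratio is within a tolerance (0.1 by default) and bounded by the configured minimum and maximum replica counts. The Python function below sketches only that formula; the real controller also applies stabilization windows, scaling policies, and special handling for missing metrics or not-ready Pods, none of which is modeled here.

```python
import math


def desired_replicas(current_replicas: int, current_metric: float,
                     target_metric: float, min_replicas: int = 1,
                     max_replicas: int = 10, tolerance: float = 0.1) -> int:
    """Sketch of the HPA core formula with tolerance and min/max bounds.

    Stabilization windows and scaling policies are intentionally not modeled.
    """
    ratio = current_metric / target_metric
    if abs(ratio - 1.0) <= tolerance:
        desired = current_replicas  # close enough to the target: no change
    else:
        desired = math.ceil(current_replicas * ratio)
    return max(min_replicas, min(max_replicas, desired))


# 4 replicas averaging 90% CPU against a 60% target: ceil(4 * 1.5) = 6
print(desired_replicas(4, current_metric=90, target_metric=60))  # 6
# 63% against 60% is a ratio of 1.05, inside the 0.1 tolerance: stays at 4
print(desired_replicas(4, current_metric=63, target_metric=60))  # 4
```

The tolerance band is what keeps small metric fluctuations from causing constant scale-up and scale-down churn.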

Monitoring and Logging in Kubernetes

- Use cloud-native monitoring solutions like Prometheus and Grafana.

- Collect and analyze logs from containers, pods, and nodes for insights into application behaviour and performance.

- Define meaningful metrics related to application performance, resource utilization, and health, and monitor them regularly.

- Implement alerting based on defined thresholds to notify stakeholders about critical events or anomalies.

- Use distributed tracing tools like Jaeger or Zipkin to track requests across microservices.

- Define custom metrics to monitor application-specific performance and behaviour.

- Monitor node health, including CPU, memory, and disk usage.

- Create dashboards for visualization of application and cluster health.

- Follow logging best practices, such as using structured logs and avoiding logging sensitive information.

- Regularly review and optimize monitoring and logging configurations for better performance and efficiency.
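Structured logging and keeping sensitive values out of logs can both be handled in the application's log formatter. The sketch below uses Python's standard `logging` module to write one JSON object per line to stdout, which Kubernetes captures so that `kubectl logs` and log collectors such as Fluentd can read it. The `fields` attribute convention and the `SENSITIVE_KEYS` set are assumptions made for this example, not a standard.

```python
import json
import logging
import sys

# Hypothetical set of field names that must never be written to logs
SENSITIVE_KEYS = {"password", "token", "authorization"}


class JsonFormatter(logging.Formatter):
    """Format each log record as a single-line JSON object."""

    def format(self, record: logging.LogRecord) -> str:
        entry = {
            "time": self.formatTime(record),
            "level": record.levelname,
            "logger": record.name,
            "message": record.getMessage(),
        }
        # Assumed convention: structured fields arrive via extra={"fields": {...}}
        for key, value in getattr(record, "fields", {}).items():
            entry[key] = "[REDACTED]" if key.lower() in SENSITIVE_KEYS else value
        return json.dumps(entry, default=str)


def make_logger(name: str = "app") -> logging.Logger:
    # Write to stdout: Kubernetes captures container stdout/stderr for kubectl logs
    handler = logging.StreamHandler(sys.stdout)
    handler.setFormatter(JsonFormatter())
    logger = logging.getLogger(name)
    logger.handlers = [handler]
    logger.setLevel(logging.INFO)
    logger.propagate = False
    return logger


log = make_logger()
log.info("user login", extra={"fields": {"user_id": 42, "password": "hunter2"}})
```

Because every line is valid JSON, a collector can index fields like `level` and `user_id` directly instead of parsing free-form text, and the password never leaves the process.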

Kubernetes Security and Compliance Best Practices

- Use RBAC (Role-Based Access Control) to restrict permissions and limit access to critical resources.

- Enable network security with Network Policies to control traffic flow between pods and namespaces.

- Use the built-in Pod Security Admission controller to enforce the Pod Security Standards for pods (it replaced Pod Security Policies (PSP), which were removed in Kubernetes 1.25).

- Keep Kubernetes components and containers up-to-date with the latest patches and security updates.

- Use container image scanning tools to identify and mitigate vulnerabilities in container images.

- Enable auditing and logging to track and monitor activity within the cluster.

- Use Secrets or external secret management tools to securely manage sensitive information.

- Implement Pod-level security measures, such as running containers as non-root users and using read-only file systems.

- Regularly review and audit cluster configurations to identify and address potential security risks.

- Follow the principle of least privilege, only granting necessary permissions to users and applications to prevent unauthorized access.
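Pod-level measures such as running as a non-root user and using a read-only root filesystem are expressed in a container's `securityContext`. The Python function below is a hypothetical pre-deployment check that flags containers missing these settings in a Pod manifest already parsed into a dict. It is a sketch, not a replacement for Pod Security Admission or a policy engine: it checks only `runAsNonRoot` (falling back to the Pod-level value), `readOnlyRootFilesystem`, `allowPrivilegeEscalation`, and `privileged`.

```python
def audit_pod(pod: dict) -> list:
    """Hypothetical check: return findings; an empty list means nothing was flagged."""
    findings = []
    spec = pod.get("spec", {})
    pod_ctx = spec.get("securityContext", {})
    for container in spec.get("containers", []):
        name = container.get("name", "<unnamed>")
        ctx = container.get("securityContext", {})
        # runAsNonRoot may be set per container or inherited from the Pod level
        if ctx.get("runAsNonRoot", pod_ctx.get("runAsNonRoot")) is not True:
            findings.append(f"{name}: runAsNonRoot is not true")
        if ctx.get("readOnlyRootFilesystem") is not True:
            findings.append(f"{name}: readOnlyRootFilesystem is not true")
        if ctx.get("allowPrivilegeEscalation") is not False:
            findings.append(f"{name}: allowPrivilegeEscalation is not false")
        if ctx.get("privileged") is True:
            findings.append(f"{name}: container is privileged")
    return findings


# Hypothetical Pod: a hardened app container and a sidecar with no securityContext
pod = {
    "spec": {
        "securityContext": {"runAsNonRoot": True},
        "containers": [
            {"name": "app", "securityContext": {
                "readOnlyRootFilesystem": True,
                "allowPrivilegeEscalation": False,
            }},
            {"name": "sidecar"},
        ],
    }
}

for finding in audit_pod(pod):
    print(finding)
# sidecar: readOnlyRootFilesystem is not true
# sidecar: allowPrivilegeEscalation is not false
```

A check like this can run in a CI pipeline before `kubectl apply`, catching insecure manifests earlier than the admission controller would.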

You can learn more about best practices for security, monitoring, and other topics in the official Kubernetes documentation.

Use Cases for Kubernetes in DevOps

Microservices Deployment with Kubernetes

- Scalable and Cost Effective : Kubernetes enables horizontal scaling and self-healing of microservices, ensuring high availability and fault tolerance in handling variable workloads. Kubernetes provides a cost-effective approach to implementing microservices architecture, as it leverages containers and efficient resource utilization, reducing infrastructure costs.

- Collaboration with Cloud : Kubernetes allows for deploying microservices across different cloud providers or on-premises data centres, providing flexibility in choosing the best infrastructure for each microservice. Kubernetes fosters collaboration by providing a unified platform for containerization, deployment, and management of microservices, facilitating smooth integration of DevOps practices.

- Service Discovery and Optimization : Kubernetes provides built-in service discovery and networking features, simplifying the development and management of microservices-based applications. Kubernetes automatically manages container placement, scaling, and load balancing, optimizing resource utilization and reducing costs.

- Extensibility and Observability : Kubernetes allows for monitoring, logging, and tracing of microservices, providing visibility into their performance and health. Kubernetes has a large ecosystem of plugins and custom resources for extending its functionality and integrating with other tools or services.

- Rapid Deployment and Agility : Kubernetes facilitates faster deployment and release cycles, enabling continuous integration and continuous deployment (CI/CD) practices for microservices-based applications. Kubernetes allows for independent development, deployment, and management of microservices, promoting agility in software development.

CI/CD Pipelines with Kubernetes

- Automated Builds and Resilient Deployments : Kubernetes enables automated building and deployment of containerized applications, allowing for efficient CI/CD pipelines. Kubernetes supports horizontal scaling and provides resiliency features like auto-recovery, making it ideal for deploying applications with CI/CD pipelines.

- Testing and Rolling Updates : Kubernetes allows for rolling updates and rollbacks of application deployments, ensuring seamless updates without downtime and easy rollbacks in case of issues. Kubernetes provides a consistent environment for testing and validation of applications at different stages of the CI/CD pipeline, ensuring application stability and reliability.

- Versioning and Tagging : Kubernetes references container images by tag or digest, so pinning each deployment to a specific image version makes it easy to track and manage application versions in CI/CD pipelines.

- Monitoring and Auditing : Kubernetes exposes events, container logs, and (with metrics-server) resource metrics, which support monitoring of application deployments in CI/CD pipelines. Its audit logging helps teams meet security and regulatory requirements in CI/CD pipelines.

- Faster Deployments with Cloud : Kubernetes is cloud-agnostic, allowing for CI/CD pipelines to deploy applications consistently across different cloud providers or on-premises environments. By automating the building, testing, and deployment process, Kubernetes enables faster time to market for applications, allowing organizations to deliver software more efficiently and reliably.
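The rolling updates mentioned above are governed by a Deployment's `maxSurge` and `maxUnavailable` settings, which can be absolute numbers or percentages (25% each by default). According to the Kubernetes documentation, a percentage `maxSurge` is rounded up and a percentage `maxUnavailable` is rounded down. The Python sketch below computes the resulting bounds for a rollout; the function names are hypothetical, and integer arithmetic is used to avoid floating-point rounding surprises.

```python
from typing import Tuple, Union


def resolve(value: Union[int, str], replicas: int, round_up: bool) -> int:
    """Hypothetical helper: turn an int or a percentage like '25%' into a Pod count."""
    if isinstance(value, int):
        return value
    product = replicas * int(value.rstrip("%"))
    # Integer ceiling/floor division avoids floating-point rounding errors
    return -(-product // 100) if round_up else product // 100


def rollout_bounds(replicas: int, max_surge: Union[int, str] = "25%",
                   max_unavailable: Union[int, str] = "25%") -> Tuple[int, int]:
    """Return (maximum total Pods, minimum available Pods) during a rollout."""
    surge = resolve(max_surge, replicas, round_up=True)               # rounded up
    unavailable = resolve(max_unavailable, replicas, round_up=False)  # rounded down
    return replicas + surge, replicas - unavailable


print(rollout_bounds(10))                                 # (13, 8)
print(rollout_bounds(3, max_surge=1, max_unavailable=0))  # (4, 3)
```

With 10 replicas and the defaults, the rollout may briefly run up to 13 Pods while never dropping below 8 available, which is the capacity headroom a CI/CD pipeline should plan for.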

Hybrid Cloud Deployment with Kubernetes

- Hybrid Cloud : Kubernetes enables seamless migration and deployment of applications across on-premises and cloud environments. Cloud bursting lets applications scale out automatically into the public cloud during peak demand while the rest of the workload keeps running on-premises.

- Disaster Recovery and Backup : Disaster recovery can be implemented by replicating critical applications and data across multiple clouds for enhanced resilience. Data localization and compliance can be achieved by keeping sensitive data on-premises while using the public cloud for less sensitive workloads.

- Modernizing Applications : Existing applications can be modernized by containerizing them and running them on Kubernetes, which makes them easier to deploy and manage in a hybrid cloud environment. Edge computing can also use Kubernetes to deploy containerized applications at the edge for low-latency services and improved performance.

- Extended Workflows : DevOps workflows can be extended across different cloud environments, enabling consistent development and deployment processes. Hybrid cloud deployment provides flexibility in choosing and switching cloud providers, avoiding vendor lock-in.

- Resource Optimization and Mobility : Resource optimization can be achieved by dynamically allocating workloads across on-premises and cloud environments based on resource availability and cost factors. Mobility allows for workload placement flexibility, enabling applications to be deployed and migrated across different cloud environments based on changing business needs.

Kubernetes for Infrastructure Automation

- Avoiding Failures : Kubernetes can automate the deployment and management of containerized applications across multiple servers, making it ideal for large-scale infrastructure automation. Kubernetes can automatically recover from failures by rescheduling containers or pods to healthy nodes, ensuring the high availability of applications.

- Infrastructure Provisioning : Provisioning and scaling can be automated using Kubernetes, allowing for the dynamic allocation of resources based on workload demands. Kubernetes can automate the load balancing and distribution of network traffic to containerized applications, ensuring efficient utilization of resources.

- Automated Monitoring and Updates : Kubernetes can automate rolling updates and rollbacks of applications, minimizing downtime during updates and reducing the need for human intervention. Combined with monitoring and logging tools, it can also automate the collection of application and infrastructure telemetry, providing visibility and insights for troubleshooting and performance analysis.

- Managing and Scaling : Kubernetes can automate the creation and management of namespaces, enabling multi-tenancy and isolation of applications and users. Kubernetes can automate the scaling of applications based on metrics and policies, ensuring efficient utilization of resources.

- Security : Kubernetes can automate the management of secrets and configuration data, improving security and compliance. It can also automate the configuration and management of networking, storage, and other infrastructure resources, simplifying complex infrastructure setups.
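The self-healing behaviour described above comes from control loops that repeatedly compare desired state with observed state and act on the difference. The Python sketch below models one such loop for a single workload: it forgets Pods on Nodes that are no longer healthy, creates replacements on the least-loaded healthy Node, and removes surplus replicas. The `Cluster` class, the Pod naming scheme, and the placement rule are hypothetical simplifications; in a real cluster the ReplicaSet controller creates Pods and the Scheduler places them.

```python
from dataclasses import dataclass, field


@dataclass
class Cluster:
    """Hypothetical, simplified cluster state."""
    healthy_nodes: set
    pods: dict = field(default_factory=dict)  # Pod name -> Node name
    next_id: int = 1


def reconcile(cluster: Cluster, app: str, desired: int) -> None:
    """One pass of a desired-state control loop for a single workload."""
    # Observe: Pods on Nodes that are no longer healthy are treated as lost
    cluster.pods = {p: n for p, n in cluster.pods.items()
                    if n in cluster.healthy_nodes}
    if not cluster.healthy_nodes:
        return  # nowhere to run; replacements would stay Pending
    # Act: create missing replicas on the least-loaded healthy Node
    while len(cluster.pods) < desired:
        load = {n: 0 for n in cluster.healthy_nodes}
        for node in cluster.pods.values():
            load[node] += 1
        target = min(sorted(load), key=lambda n: load[n])  # ties broken by name
        cluster.pods[f"{app}-{cluster.next_id}"] = target
        cluster.next_id += 1
    # Act: remove surplus replicas, newest first
    while len(cluster.pods) > desired:
        cluster.pods.popitem()


cluster = Cluster(healthy_nodes={"node-a", "node-b", "node-c"})
reconcile(cluster, "web", desired=3)
print(cluster.pods)  # {'web-1': 'node-a', 'web-2': 'node-b', 'web-3': 'node-c'}

cluster.healthy_nodes.discard("node-b")  # simulate a Node failure
reconcile(cluster, "web", desired=3)
print(cluster.pods)  # {'web-1': 'node-a', 'web-3': 'node-c', 'web-4': 'node-a'}
```

The loop never needs to know why a Pod disappeared; it only compares counts, which is what makes this pattern robust to many kinds of failure.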

Challenges and Limitations of Kubernetes in DevOps

Common Challenges Faced by Teams Using Kubernetes in DevOps

Using Kubernetes in DevOps can bring many benefits, but it also comes with its own set of challenges:

- Learning Curve and Complexity : Kubernetes has a steep learning curve due to its complex architecture and numerous concepts, which may require team members to invest time and effort in learning and training. Kubernetes has a complex ecosystem with various components, configurations, and tools, which can require teams to invest time and effort in managing, troubleshooting, and maintaining Kubernetes clusters.

- Management and Networking : Properly managing resources such as CPU, memory, and storage in Kubernetes can be challenging, as it requires teams to optimize resource allocation, avoid resource contention, and monitor resource utilization. Networking in Kubernetes can be complex, as it involves setting up and managing networks, services, and load balancing for containerized applications, which may require networking expertise.

- Storage and Scaling : Configuring and managing storage resources in Kubernetes can be complex, especially when integrating with external services or managing data persistence. Scaling applications in Kubernetes can be challenging, as it requires teams to define and manage deployment configurations, rolling updates, and scaling policies.

- Updates and Upgrades : Managing updates and upgrades of Kubernetes versions, as well as updates to container images and application configurations, can be complex and require careful planning and testing to avoid disruptions.

- Monitoring and Troubleshooting : Identifying and resolving issues in Kubernetes deployments, such as container failures, pod evictions, and networking problems, can be challenging, requiring troubleshooting and debugging skills. Monitoring and logging containerized applications in Kubernetes can be challenging, as it requires setting up monitoring tools, logging mechanisms, and alerting systems to gain visibility into application performance and troubleshooting issues.

- Security Compliance : Ensuring compliance with organizational policies, industry regulations, and security standards in Kubernetes can be challenging, as it requires teams to implement proper governance practices, security controls, and auditing mechanisms. Security of Kubernetes clusters, containerized applications, and data can be challenging, as it requires implementing proper authentication, authorization, and encryption mechanisms, as well as regularly patching and updating components.

Limitations of Kubernetes and How to Work Around Them?

Kubernetes is a powerful and widely adopted container orchestration platform, but it also has some limitations that can pose challenges for teams using it in a DevOps environment.

- Scalability and Complexity : Kubernetes has a steep learning curve, with a complex architecture and numerous concepts to understand. To work around this, invest in training, use online resources, and adopt Kubernetes in small steps. Managing scalability can also be challenging, particularly with a large number of pods and services. To overcome this, use best practices like horizontal pod autoscaling and cluster autoscaling, and monitor resource utilization.

- Networking and Storage : Kubernetes networking can be complex. To overcome this, use Kubernetes Service objects for service discovery and load balancing, implement network policies for fine-grained control over traffic, and choose a Container Network Interface (CNI) plugin that suits your requirements. Storage management in Kubernetes is also limited, with challenges around persistent volumes (PVs) and persistent volume claims (PVCs). To work around this, use storage classes, dynamic provisioning, and third-party storage solutions.

- Security : Kubernetes requires robust security measures. To address this, follow Kubernetes security best practices, such as Role-Based Access Control (RBAC), network policies, and Pod Security Admission, and use container image scanning and vulnerability assessment tools to ensure secure container images.

- Upgrades and Logging : Monitoring and logging in Kubernetes can be complex. To address this, use monitoring tools such as Prometheus and Grafana, and implement centralized logging with a solution such as the Elasticsearch, Fluentd, and Kibana (EFK) stack. Upgrading Kubernetes can be challenging, with potential disruptions to running applications. To overcome this, plan and execute upgrades carefully, follow the official upgrade guides, and test in non-production environments first.

- Vendor Lock-In and Portability : Kubernetes deployments may come to depend on specific cloud providers. To avoid this, follow cloud-agnostic practices, use cloud-agnostic tools, and consider multi-cloud Kubernetes distributions or managed services. Achieving application portability can also be challenging. To work around this, use declarative deployment configurations such as YAML files, abstraction layers such as Helm charts, and Kubernetes operators.

- Human Error : Human error can lead to misconfigurations or mistakes in managing Kubernetes resources, resulting in potential downtime or security risks. To minimize errors, implement automation, use Infrastructure as Code (IaC), adopt GitOps with tools such as Argo CD or Flux, and enforce strict change management processes.
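GitOps reduces human error by continuously comparing the desired configuration stored in Git with the live state of the cluster. The Python sketch below shows the core idea as a recursive diff that reports every field where a live object has drifted from its desired manifest. It compares only fields present in the desired manifest, because live objects also carry server-populated fields such as `status`. Real tools such as Argo CD and Flux also handle defaulted fields, list merge semantics, and server-side apply, none of which is modeled here; the manifests in the example are hypothetical.

```python
def diff(desired, live, path=""):
    """Yield (path, desired_value, live_value) for each field that has drifted.

    Only fields present in the desired manifest are compared, because live
    objects also carry server-populated fields such as status and uid.
    """
    if isinstance(desired, dict) and isinstance(live, dict):
        for key, value in desired.items():
            child = f"{path}.{key}" if path else key
            yield from diff(value, live.get(key), child)
    elif (isinstance(desired, list) and isinstance(live, list)
          and len(desired) == len(live)):
        for index, (want, have) in enumerate(zip(desired, live)):
            yield from diff(want, have, f"{path}[{index}]")
    elif desired != live:
        yield path, desired, live


# Hypothetical desired manifest (from Git) and live object (from the cluster)
desired = {"spec": {"replicas": 3, "template": {"spec": {"containers": [
    {"name": "web", "image": "registry.example.com/web:1.4.2"}]}}}}
live = {"spec": {"replicas": 5, "template": {"spec": {"containers": [
    {"name": "web", "image": "registry.example.com/web:1.4.2",
     "imagePullPolicy": "IfNotPresent"}]}}},
    "status": {"readyReplicas": 5}}

for field_path, want, have in diff(desired, live):
    print(f"{field_path}: desired={want!r} live={have!r}")
# spec.replicas: desired=3 live=5
```

Here the reported drift is a replica count changed by hand, for example with `kubectl scale`; a GitOps controller would flag it or revert it to the value stored in Git.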

Future Trends in Kubernetes for DevOps

As demand for Kubernetes grows, its adoption is spreading across many sectors of the tech industry. Key trends include:

- Increased adoption and continued growth in multi-cloud and hybrid cloud deployments.

- Enhanced security measures.

- Advanced automation capabilities to streamline application deployment and management processes.

- Improved observability and monitoring capabilities.

- Increased use of Kubernetes operators.

- Integration with emerging technologies like machine learning and edge computing.


Conclusion

- Kubernetes is an open-source container orchestration platform used for automating the deployment, scaling, and management of containerized applications.

- Control Plane, Services, Volumes, Networking, Containers, Nodes, etcd, API Server, and Ingress are some of the key Kubernetes components.

- Kubernetes Architecture includes Master Node, Worker Node, Pods, Controllers, kubelet, kube-proxy, Container Runtime and Scheduler.

- Best practices for Kubernetes in DevOps include automating deployment, using GitOps, implementing security controls, monitoring, and continuous testing.

- Use cases of Kubernetes are Microservices deployment, CI/CD pipeline integrations, Hybrid cloud deployment, Infrastructure Automation, etc.

- Complexity, human error, storage, scalability, monitoring, vendor lock-in, application portability, security, upgrades, and networking are some of the challenges and limitations of using Kubernetes in DevOps.