Tackling Biases in Transformer Models

Overview

Transformer models have become integral to many natural language processing tasks, but they are not without flaws. One critical issue is the presence of biases in these models, which can lead to unfair and undesirable outcomes. In this article, we explore the types of biases commonly found in transformer models, examine the role of data bias in pre-training, and discuss strategies for mitigating biases during model development.

Types of Biases in Transformer Models

Understanding the different types of biases in transformer models is crucial for effectively addressing them. Here are some common types of biases found in these models:

- Stereotype Bias: Transformer models may generate outputs that reinforce stereotypes related to gender, race, or other characteristics. For instance, associating certain professions with specific demographics can perpetuate unfair generalizations.

- Confirmation Bias: These models can exhibit confirmation bias, favouring information that aligns with existing beliefs or opinions while disregarding dissenting viewpoints. This can hinder balanced and objective analysis.

- Cultural Bias: Transformer models trained on data from specific cultures or languages may struggle to understand or generate content relevant to other cultures. This bias can lead to cultural insensitivity and exclusion.

- Amplification of Extremes: In some cases, biases in transformer models lead them to generate extreme or polarized content, exacerbating disagreements and polarizing discussions rather than fostering productive conversations.

- Underrepresentation: Models trained on imbalanced datasets may provide inaccurate information about underrepresented groups, further marginalizing them and perpetuating their invisibility.

- Contextual Bias: Biases in transformer models can also arise from the context of a given input. For example, a model may respond differently to a sentence mentioning a political candidate based on associations it learned about that candidate during training.
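Stereotype and contextual bias are commonly probed with counterfactual templates: inputs that differ only in a demographic or named-entity term are fed to the model and the outputs compared. The sketch below shows only the harness. `score_fn` is an assumed stand-in for whatever model call returns a number (such as a sentiment score or a completion probability), and `toy_score`, the templates, and the terms are hypothetical placeholders.

```python
from typing import Callable, Dict, List


def counterfactual_gaps(
    templates: List[str],
    terms: List[str],
    score_fn: Callable[[str], float],
) -> Dict[str, float]:
    """Fill each template's {X} slot with every term, score each variant,
    and return the spread (max minus min) of the scores per template."""
    gaps: Dict[str, float] = {}
    for template in templates:
        scores = [score_fn(template.format(X=term)) for term in terms]
        # A spread near 0 means swapping the term barely changes the output;
        # a large spread flags a template worth inspecting by hand.
        gaps[template] = max(scores) - min(scores)
    return gaps


# Hypothetical stand-in for a real model call (e.g. a sentiment score),
# deliberately biased so the harness has something to detect.
def toy_score(text: str) -> float:
    return 0.9 if text.startswith("He ") else 0.4


templates = ["{X} is a brilliant engineer.", "{X} is a caring nurse."]
gaps = counterfactual_gaps(templates, ["He", "She"], toy_score)
for template, gap in gaps.items():
    print(f"{gap:.2f}  {template}")
```

Because the harness only needs a function from text to a number, the same loop can wrap any model; what it can detect depends mostly on the choice of templates and terms.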

Data Bias and Pre-Training

Addressing data bias during pre-training involves careful data curation, selection, and potentially applying de-biasing techniques. While it's challenging to provide specific code examples without a particular dataset, here's an outline of the steps involved and some common techniques you can use:

-

Data Collection and Cleaning:

Collect a diverse dataset representing different demographics, cultures, and perspectives relevant to your application. Thoroughly clean the dataset to remove any biased or inappropriate content. -

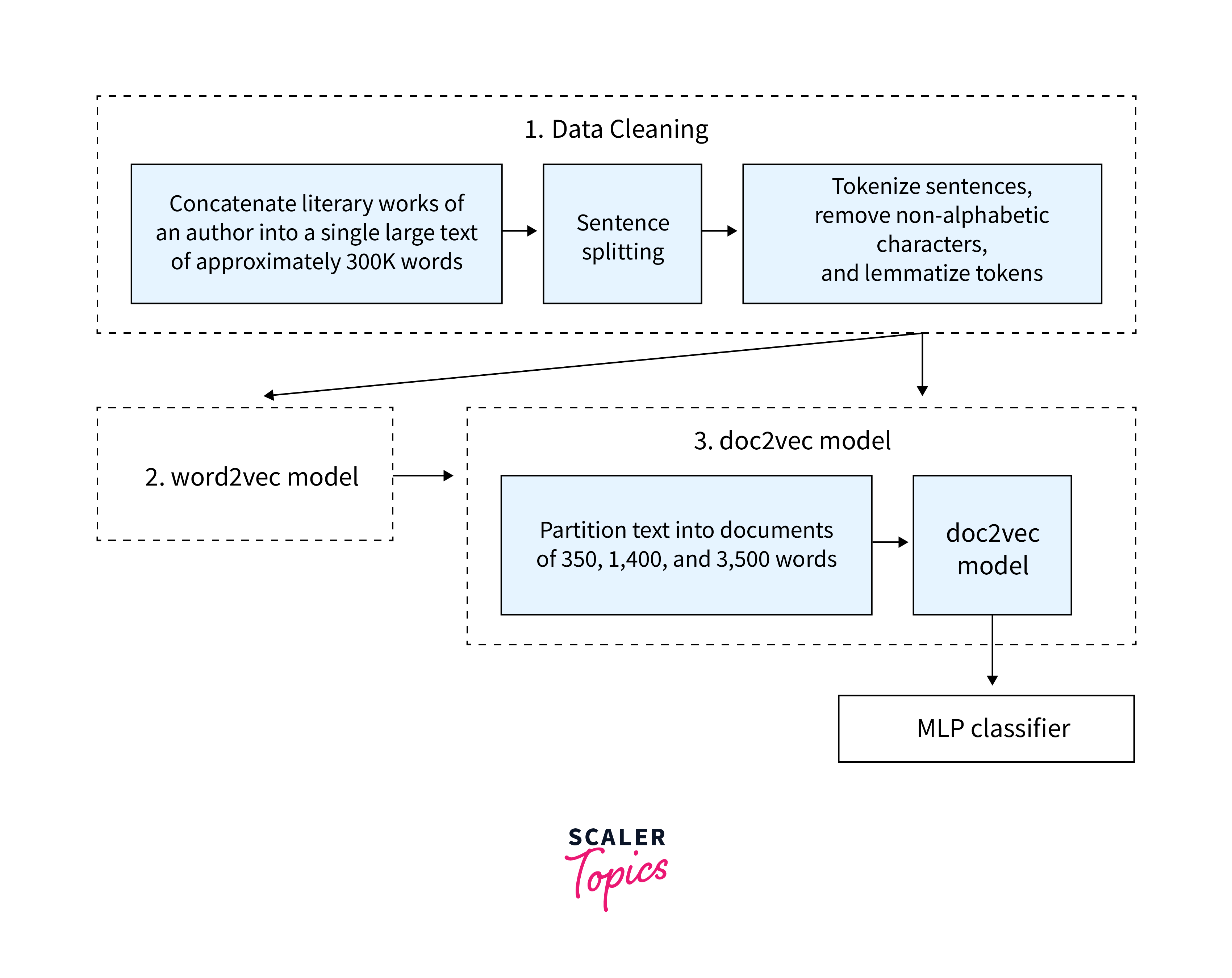

Text Embeddings:

Use pre-trained word embeddings, such as Word2Vec, FastText, or GloVe, to capture semantic relationships in the text data.- De-biasing Techniques:

Implement de-biasing techniques to mitigate bias in the data. Some common techniques include: - Word Embedding Debiasing:

Adjust word embeddings to reduce gender or racial bias. You can use libraries like gensim for word embedding debiasing. - Reweighting:

Assign different weights to different data samples during training to reduce bias impact. - Subsampling:

Reduce the occurrence of dominant or overrepresented terms in your dataset to balance representation.

- De-biasing Techniques:

-

Data Augmentation:

Augment your dataset by generating additional samples that emphasize underrepresented groups or perspectives. -

Evaluation Metrics:

Define evaluation metrics to measure the effectiveness of your debiasing techniques. Common metrics include fairness metrics, such as Equal Opportunity or Demographic Parity. -

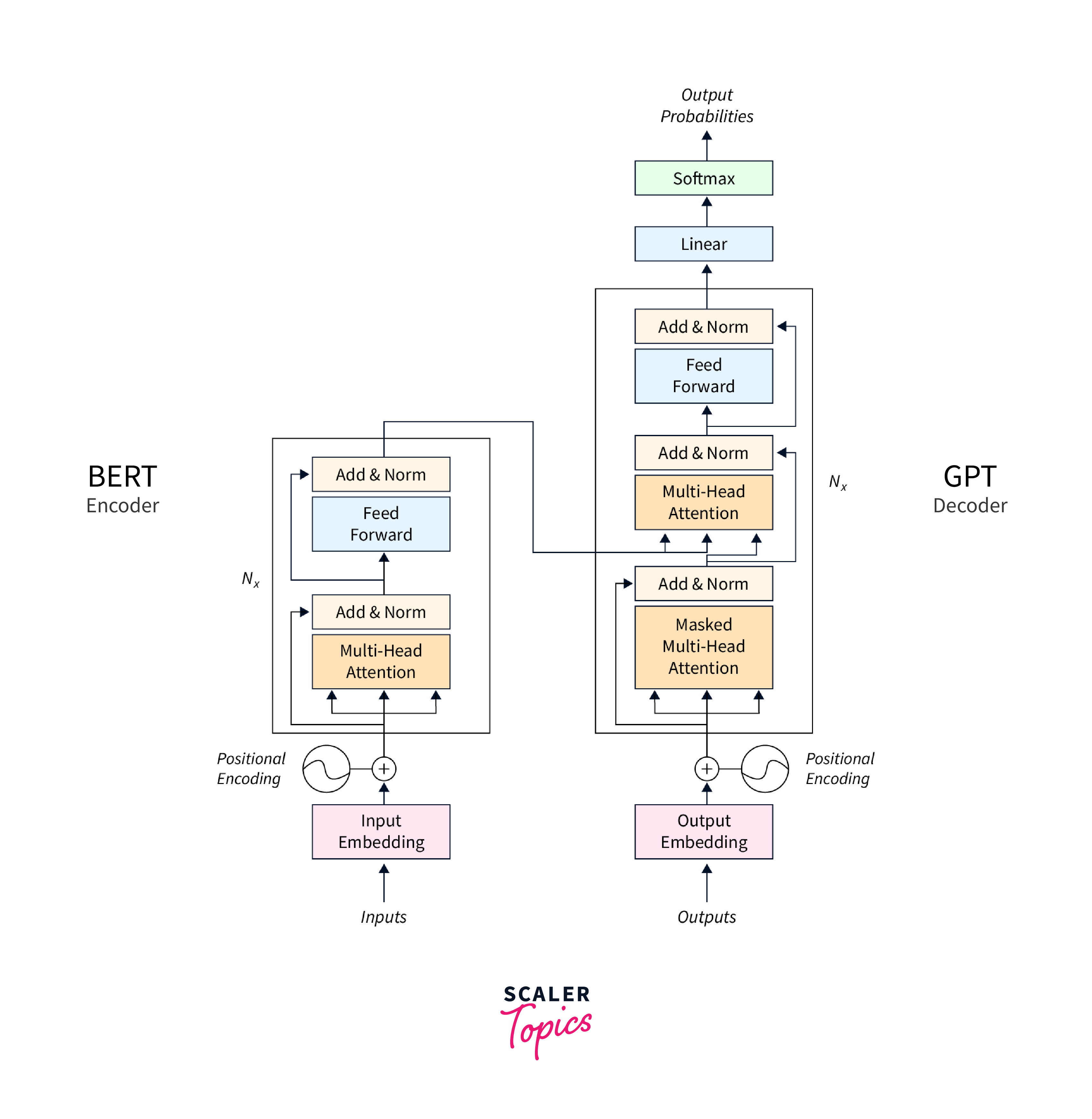

Fine-tuning Models:

Fine-tune transformer models like GPT-3 or BERT on your debiased dataset. -

Monitoring and Auditing:

Continuously monitor the model's outputs during fine-tuning and deployment to identify and rectify any remaining biases. -

Ethical Guidelines:

Develop and follow ethical guidelines emphasising fairness, inclusivity, and transparency throughout data collection, model development, and deployment.
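The word embedding debiasing step listed above can be illustrated with the "neutralize" step of the hard-debiasing method of Bolukbasi et al. (2016), which removes a word vector's component along an estimated bias direction. This is a simplified sketch: the direction comes from a single he/she pair rather than several pairs combined with PCA, the method's equalize step is omitted, and the 3-dimensional vectors are hypothetical. In practice the vectors would come from pre-trained embeddings loaded with a library such as gensim.

```python
import math
from typing import Dict, List, Tuple

Vector = List[float]


def dot(a: Vector, b: Vector) -> float:
    return sum(x * y for x, y in zip(a, b))


def unit(v: Vector) -> Vector:
    norm = math.sqrt(dot(v, v))
    return [x / norm for x in v]


def neutralize(vec: Vector, direction: Vector) -> Vector:
    """Subtract the projection of `vec` onto the unit vector `direction`."""
    proj = dot(vec, direction)
    return [x - proj * d for x, d in zip(vec, direction)]


def debias(
    embeddings: Dict[str, Vector],
    pair: Tuple[str, str],
    neutral_words: List[str],
) -> Dict[str, Vector]:
    # Simplification: the bias direction is the normalized difference of a
    # single definitional pair, e.g. ("he", "she").
    a, b = embeddings[pair[0]], embeddings[pair[1]]
    direction = unit([x - y for x, y in zip(a, b)])
    debiased = dict(embeddings)  # words not listed are left unchanged
    for word in neutral_words:
        debiased[word] = neutralize(embeddings[word], direction)
    return debiased


# Hypothetical 3-dimensional toy vectors; real embeddings have hundreds of dimensions.
emb = {
    "he": [1.0, 0.2, 0.0],
    "she": [-1.0, 0.2, 0.0],
    "nurse": [-0.6, 0.5, 0.3],
    "engineer": [0.7, 0.4, 0.2],
}
clean = debias(emb, ("he", "she"), ["nurse", "engineer"])
print(clean["nurse"], clean["engineer"])
```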
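Reweighting, also listed above, can be sketched as inverse-frequency weighting, one common scheme among several. It assumes each training sample carries a group label (many datasets lack such annotations), and the labels below are hypothetical.

```python
from collections import Counter
from typing import Hashable, List


def inverse_frequency_weights(groups: List[Hashable]) -> List[float]:
    """Weight each sample by n / (k * count(group)), where n is the number of
    samples and k the number of groups. Every group's weights then sum to
    n / k, and the average weight over the whole dataset stays 1."""
    counts = Counter(groups)
    n, k = len(groups), len(counts)
    return [n / (k * counts[g]) for g in groups]


# Hypothetical group labels: six samples from group "A", two from group "B".
groups = ["A"] * 6 + ["B"] * 2
weights = inverse_frequency_weights(groups)
print(weights)
```

These weights would typically multiply each example's loss during training, so that the two-sample group contributes as much total loss as the six-sample group.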
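The subsampling idea listed above can also be applied to whole samples by shrinking every group to the size of the smallest one. This minimal sketch assumes each sample is a `(text, group)` pair with a known group label; the function name and toy data are hypothetical. Downsampling discards data, so it is usually weighed against reweighting, which keeps every sample.

```python
import random
from collections import defaultdict
from typing import Dict, Hashable, List, Tuple

Sample = Tuple[str, Hashable]  # (text, group label)


def balance_by_subsampling(samples: List[Sample], seed: int = 0) -> List[Sample]:
    """Randomly drop samples from larger groups until every group has as many
    samples as the smallest one."""
    by_group: Dict[Hashable, List[Sample]] = defaultdict(list)
    for sample in samples:
        by_group[sample[1]].append(sample)
    target = min(len(items) for items in by_group.values())
    rng = random.Random(seed)  # seeded so the chosen subset is reproducible
    balanced: List[Sample] = []
    for items in by_group.values():
        balanced.extend(rng.sample(items, target))
    rng.shuffle(balanced)
    return balanced


# Hypothetical toy data: five documents from group "A", two from group "B".
data = [(f"doc{i}", "A") for i in range(5)] + [(f"doc{i}", "B") for i in range(5, 7)]
balanced = balance_by_subsampling(data)
print(balanced)
```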
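One widely used form of the data augmentation step above is counterfactual data augmentation: each sentence containing gendered words is duplicated with those words swapped, so both variants appear in training. The sketch below is a minimal version with a hypothetical, deliberately short swap list; usable lists are much longer and must handle ambiguous words and names.

```python
import re
from typing import Dict, List

# Hypothetical, deliberately short swap list. A usable list needs many more
# pairs and special handling for ambiguous words such as "her" (him/his).
SWAPS: Dict[str, str] = {
    "he": "she", "she": "he",
    "himself": "herself", "herself": "himself",
    "man": "woman", "woman": "man",
    "men": "women", "women": "men",
    "father": "mother", "mother": "father",
}
# \b word boundaries keep "he" from matching inside "the" or "men" inside "women".
PATTERN = re.compile(r"\b(" + "|".join(SWAPS) + r")\b", re.IGNORECASE)


def swap_gendered_terms(text: str) -> str:
    def replace(match: re.Match) -> str:
        word = match.group(0)
        swapped = SWAPS[word.lower()]
        # Keep an initial capital, e.g. "He" -> "She".
        return swapped.capitalize() if word[0].isupper() else swapped

    return PATTERN.sub(replace, text)


def augment(corpus: List[str]) -> List[str]:
    """Return the corpus plus a swapped copy of every sentence the swap changes."""
    swapped = [swap_gendered_terms(s) for s in corpus]
    return corpus + [new for new, old in zip(swapped, corpus) if new != old]


print(augment(["He is a nurse.", "The sky is blue.", "The woman fixed the engine."]))
```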
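The two fairness metrics named above can be computed directly from predictions. In this sketch, demographic parity difference is the largest gap between groups in positive-prediction rate, and equal opportunity difference is the largest gap in true-positive rate. Binary 0/1 labels and predictions are assumed, and the data is hypothetical.

```python
from typing import Dict, Hashable, Sequence


def _rate(values: Sequence[int]) -> float:
    return sum(values) / len(values)


def demographic_parity_difference(y_pred: Sequence[int], groups: Sequence[Hashable]) -> float:
    """Largest gap between groups in the rate of positive predictions."""
    rates: Dict[Hashable, float] = {
        g: _rate([p for p, gg in zip(y_pred, groups) if gg == g]) for g in set(groups)
    }
    return max(rates.values()) - min(rates.values())


def equal_opportunity_difference(
    y_true: Sequence[int], y_pred: Sequence[int], groups: Sequence[Hashable]
) -> float:
    """Largest gap between groups in true-positive rate (recall).
    Assumes every group has at least one positive label."""
    tprs: Dict[Hashable, float] = {
        g: _rate([p for t, p, gg in zip(y_true, y_pred, groups) if gg == g and t == 1])
        for g in set(groups)
    }
    return max(tprs.values()) - min(tprs.values())


# Hypothetical labels, predictions, and group memberships for eight examples.
y_true = [1, 1, 0, 0, 1, 1, 0, 0]
y_pred = [1, 1, 1, 0, 1, 1, 0, 0]
groups = ["A", "A", "A", "A", "B", "B", "B", "B"]
print(demographic_parity_difference(y_pred, groups))
print(equal_opportunity_difference(y_true, y_pred, groups))
```

On this toy data the two metrics disagree: both groups have a true-positive rate of 1.0, so equal opportunity holds, but group A receives an extra false positive, so demographic parity does not. Which metric matters depends on the application.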
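The monitoring step listed above can be reduced, in its simplest form, to tracking a per-group outcome rate over a sliding window of recent outputs and raising an alert when the gap grows too large. The `BiasMonitor` class below is a hypothetical sketch. It assumes each output can be attributed to a group and scored with a binary outcome (for example, whether a downstream classifier flagged it), and the window, threshold, and minimum-count values are illustrative, not recommendations.

```python
from collections import defaultdict, deque
from typing import Deque, Dict, Hashable, Optional, Tuple


class BiasMonitor:
    """Track a binary outcome per group over a sliding window of recent
    outputs and report the gap between group rates when it exceeds a threshold."""

    def __init__(self, window: int = 1000, threshold: float = 0.1, min_count: int = 30):
        # deque(maxlen=...) silently drops the oldest event once full.
        self.events: Deque[Tuple[Hashable, int]] = deque(maxlen=window)
        self.threshold = threshold
        self.min_count = min_count

    def record(self, group: Hashable, outcome: int) -> None:
        self.events.append((group, outcome))

    def group_rates(self) -> Dict[Hashable, float]:
        totals: Dict[Hashable, int] = defaultdict(int)
        positives: Dict[Hashable, int] = defaultdict(int)
        for group, outcome in self.events:
            totals[group] += 1
            positives[group] += outcome
        # Skip groups with too few recent events to give a stable rate.
        return {g: positives[g] / totals[g] for g in totals if totals[g] >= self.min_count}

    def alert(self) -> Optional[float]:
        """Return the rate gap if it exceeds the threshold, else None."""
        rates = self.group_rates()
        if len(rates) < 2:
            return None
        gap = max(rates.values()) - min(rates.values())
        return gap if gap > self.threshold else None


# Hypothetical traffic in which group "B" is flagged far more often than group "A".
monitor = BiasMonitor(window=100, threshold=0.2, min_count=10)
for i in range(40):
    monitor.record("A", 1 if i % 10 == 0 else 0)  # 4 of 40 flagged
    monitor.record("B", 1 if i % 2 == 0 else 0)   # 20 of 40 flagged
print(monitor.group_rates(), monitor.alert())
```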

In the context of transformer models, data bias refers to skewed or unrepresentative data in the pre-training corpus. This bias can lead to undesirable behavior in the model, including biased language generation and decision-making. Let's explore this concept with a code example using the Hugging Face Transformers library.
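Before any model is trained, this kind of skew can be measured directly in the pre-training corpus. The following minimal sketch, written in plain Python rather than with the Hugging Face library, counts how often each target word shares a sentence with words from each attribute group. The corpus, word lists, and function name are hypothetical, and real audits use far larger corpora and association measures such as pointwise mutual information.

```python
import re
from collections import Counter
from typing import Dict, Iterable, List


def cooccurrence_counts(
    sentences: Iterable[str],
    targets: List[str],
    attributes: Dict[str, List[str]],
) -> Dict[str, Counter]:
    """For each target word, count the sentences in which it appears together
    with at least one word from each attribute group."""
    counts = {target: Counter() for target in targets}
    for sentence in sentences:
        # Lowercase word tokens; a set is enough since we only test presence.
        tokens = set(re.findall(r"[a-z']+", sentence.lower()))
        for target in targets:
            if target not in tokens:
                continue
            for group, words in attributes.items():
                if tokens & set(words):
                    counts[target][group] += 1
    return counts


# Hypothetical toy corpus and attribute word lists.
corpus = [
    "She worked as a nurse at the clinic.",
    "The nurse said she would call back.",
    "He is a nurse on the night shift.",
    "He became an engineer after college.",
    "The engineer explained his design.",
]
attributes = {"female": ["she", "her", "woman"], "male": ["he", "his", "man"]}
counts = cooccurrence_counts(corpus, ["nurse", "engineer"], attributes)
for target, by_group in counts.items():
    print(target, dict(by_group))
```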

Example Code:

Output:

Mitigating Biases in Training Data

Addressing biases in transformer models requires a multifaceted approach. While complete elimination of biases may not be feasible, several strategies can help mitigate their impact:

- Diverse and Representative Training Data: Curating training datasets that are diverse and representative of different demographics, cultures, and perspectives can help reduce biases associated with underrepresentation and cultural insensitivity.

- De-biasing Techniques: De-biasing techniques aim to identify and neutralize biased language or content in the training data. These techniques may involve modifying the training data or adjusting model parameters to reduce the likelihood of generating biased outputs.

- Evaluation and Auditing: Regularly evaluating model outputs for bias is essential. Human reviewers can assess model-generated content and provide feedback to identify and rectify problematic outputs. Auditing the model's performance against various fairness metrics can also be beneficial.

- Ethical Guidelines: Developing and adhering to ethical guidelines for data collection, model development, and deployment is crucial. These guidelines should emphasize fairness, inclusivity, and transparency as essential principles in AI system development.

- Post-processing Filters: Post-processing filters can help mitigate biases in model-generated content by automatically identifying and removing biased or harmful outputs before they are presented to users.
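A post-processing filter of the kind described above can be sketched as a function that either passes an output through or withholds it with a list of reasons. The pattern and the stub classifier below are hypothetical. Keyword rules alone are crude and both over- and under-block, so in practice they would complement a trained classifier rather than replace it.

```python
import re
from dataclasses import dataclass, field
from typing import Callable, List, Optional


@dataclass
class FilterResult:
    text: Optional[str]  # None when the output is withheld
    reasons: List[str] = field(default_factory=list)


def make_output_filter(
    blocked_patterns: List[str],
    classifier: Optional[Callable[[str], float]] = None,
    threshold: float = 0.5,
) -> Callable[[str], FilterResult]:
    """Build a filter that withholds an output if any regex pattern matches
    or if an optional classifier scores it at or above `threshold`."""
    compiled = [re.compile(p, re.IGNORECASE) for p in blocked_patterns]

    def check(text: str) -> FilterResult:
        reasons = [p.pattern for p in compiled if p.search(text)]
        if classifier is not None and classifier(text) >= threshold:
            reasons.append("classifier")
        return FilterResult(text=None if reasons else text, reasons=reasons)

    return check


# Hypothetical rule and stub classifier; a real deployment would plug in a
# trained bias or toxicity classifier instead of the stub.
output_filter = make_output_filter([r"\ball (women|men) are\b"], classifier=lambda t: 0.0)
print(output_filter("All women are bad drivers."))
print(output_filter("The meeting starts at noon."))
```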

Conclusion

- Biases in transformer models pose significant challenges, potentially leading to unfair or harmful outputs.

- Common types of biases in transformer models include stereotype bias, confirmation bias, cultural bias, and underrepresentation.

- Mitigating biases requires diverse training data, de-biasing techniques, monitoring, and adherence to ethical guidelines.

- Addressing biases is essential for responsible AI development and ensuring fairness and inclusivity in AI systems.