How to build a News Search Engine with NLP

Overview

Building a new search engine using NLP involves several steps: first, collect a large dataset of news articles and pre-process the text by removing stop words and applying them to stem or lemmatization. Next, use NLP techniques such as TF-IDF or word embeddings to represent the text numerically. Then, we use an NLP algorithm to rank the articles based on the user's query's relevance. Finally, we use machine learning models to classify articles as required.

What are We Building?

Search engines have been a boon to us since the times of the dot com bubble. Various search engines such as Google, Yahoo, Bing, and so on came into light during that time and have been evolving and developing over the ages. Today's search engines use the power of machine learning to search articles, not only based on an index but also taking into account the context and understanding of the articles. We will build such a news search engine using NLP, which will be capable of searching for news within a huge corpus of data.

Pre-requisites

- You should have a basic knowledge of Python and linear algebra before building this search engine

- Aside from that, knowledge of data pre-processing techniques in NLP is a plus, though we will still be explaining in detail about the same

How are we Going to build it?

- We will be using a library called "rank_bm25."

- Before modeling, we will be passing the data through multiple steps:

- Tokenization

- StopWords Removal

- Stemming

- BM25 ranking

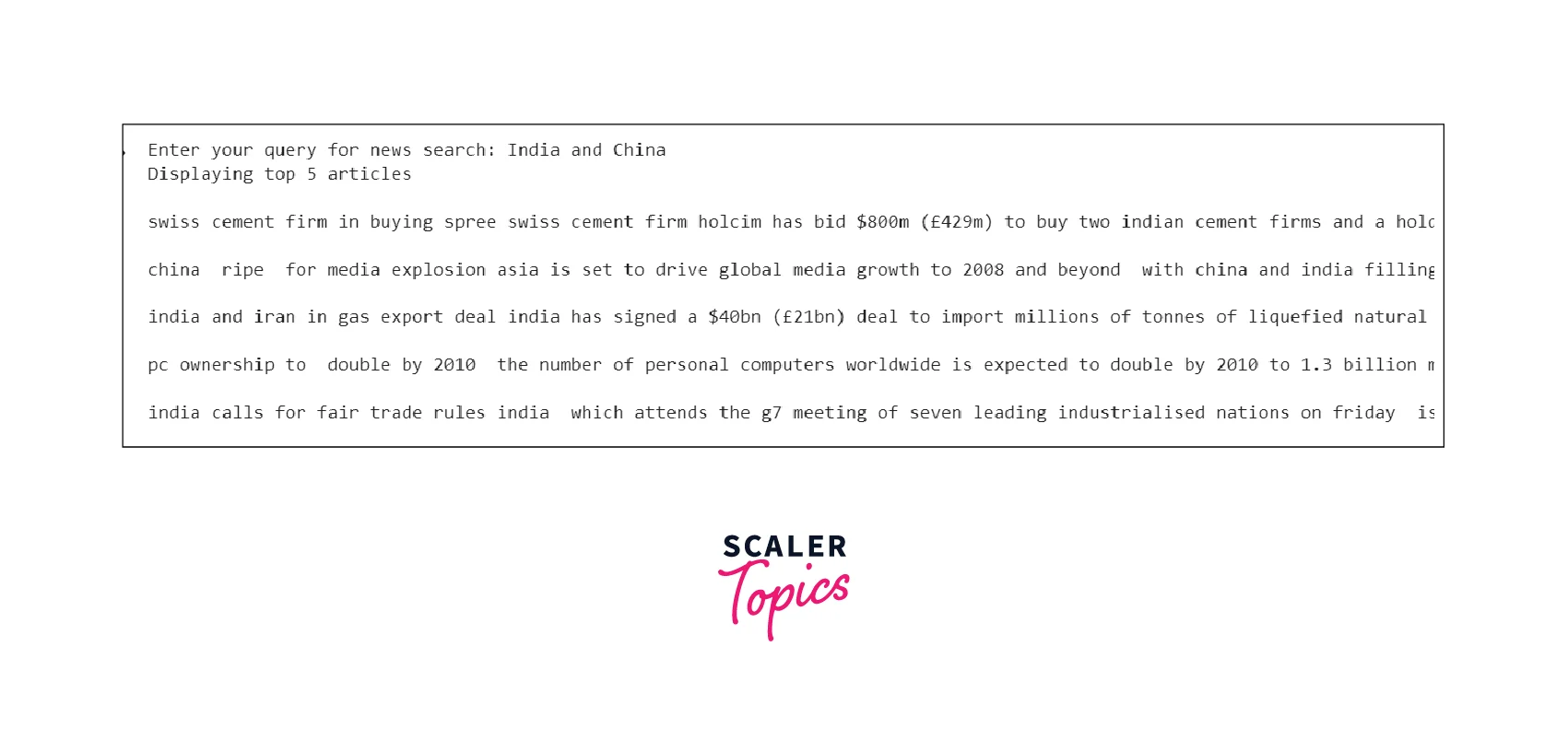

Final Output

This is the final output that we will be getting after completing this project.

Requirements

As we mentioned earlier, we will be using the "rank_bm25" library for creating the news search engine.

Aside from that, we will be using the following:

- Pandas

- regex library re

- NLTK

- gensim's pre-processing module

Building the Model

Info About the Dataset

The dataset we will be using here is the BBC News Classification Dataset.

It can be found in the link: https://www.kaggle.com/competitions/learn-ai-bbc/data

Some information about the data:

- It is a public dataset from the BBC comprised of 2225 articles, each labeled under one of 5 categories: business, entertainment, politics, sport, or tech.

- The dataset is broken into 1490 records for training and 735 for testing. For our search engine, we will be using only the training records' article column since we have no use of the "Article ID" and "category" of the news.

Data Pre-processing Steps

Before we build our search engine, we would need first to pre-process our data. We will be using multiple steps to do the same.

Let's look at them one by one:

Tokenization

Tokenization is the process of breaking down a sequence of text into individual words, phrases, symbols, or other meaningful elements, known as tokens. In natural language processing (NLP), tokenization is a common pre-processing step that enables a wide range of NLP tasks, such as text classification, language translation, and text-to-speech. Different methods of tokenization include word-based tokenization, sentence-based tokenization, and character-based tokenization.

For our news search engine, we will be using word-based tokenization. This is inbuilt into nltk and can be directly used by

Output:

- Here, "punkt" is a tokenizer that divides a text into a list of sentences by using an unsupervised algorithm to build a model for abbreviation words, collocations, and words that start sentences.

- This is inbuilt into nltk. We use the word_tokenize of nltk to tokenize our given sentence, and the output can be seen above.

Special Characters Removal

- Non-alphanumeric characters like "&," "-" and such don't contribute to the search of news from a corpus. Hence removing them helps to remove unnecessary noise before training the model.

- We will define a function and use the regex module to remove the special characters

- The code "re.sub("[^0-9a-zA-Z]"," ",element)" removes all characters which aren't an alphabet or a number.

Stopwords Removal

- Stopwords are a set of commonly occurring words in a language that are typically filtered out before or during the processing of natural language text. These words are usually considered irrelevant for many natural language processing (NLP) tasks, such as text classification, information retrieval, and text-to-speech, as they contain little meaningful information. Examples of stopwords include "a", "an", "the", etc. These words are common in any text and are not useful for identifying the context or meaning of the text.

- We will use the gensim library's STOPWORDS list to remove the stopwords from our data

Output:

Stemming

- Our last step of pre-processing our data would be "Stemming."

- Stemming is the process of reducing a word to its base or root form. The goal of stemming is to normalize text by removing the suffixes, prefixes, or other word endings that are not important for a specific NLP task. The base form of a word is called the stem.

- There are several algorithms available to perform stemming. The most popular are the Porter stemmer and Snowball stemmer. These algorithms use a set of rules and heuristics to identify and remove the suffixes, prefixes, or other word endings that are not important for a specific NLP task.

- We will be using Porter Stemmer for our pre-processing

Output:

Implementing Our Search Engine

We will be using rank_bm25 to build our news search engine.

What is rank_bm25?

- BM25 (Best Match 25) is a ranking function used in information retrieval to rank the relevance of documents in response to a user's query.

- BM25 is based on the assumption that relevance is a combination of two factors: the term frequency (tf) of the query term in the document and the inverse document frequency (IDF) of the term in the collection. The BM25 score of a document is calculated as the sum of the tf-idf weight of each query term in the document.

- It also includes a parameter k1, which controls the term frequency component of the score, and b, which controls the document length component of the score.

- It has been widely adopted in many search engines and is also known as Okapi BM25, after the name of the project that first described it.

- BM25 is used to rank the relevance of text documents based on the relevance of the text to a user's query.

Although similar to TF-IDF, there are some major key differences between both:

- BM25 includes a parameter k1, which controls the term frequency component of the score, and b, which controls the document length component of the score. This means that BM25 can adjust the importance of term frequency and document length to the relevance score.

- BM25 uses a logarithmic function to calculate the IDF component, which allows it to handle rare terms better and also penalize common terms. On the other hand, TF-IDF uses a simple inverse frequency calculation.

- BM25 also includes a normalization factor that adjusts the score based on the length of the document, which can reduce the bias towards shorter documents.

Overall, BM25 is considered a more advanced and sophisticated ranking function than TF-IDF, as it includes additional parameters and calculations to improve the relevance score.

We can install the "rank_bm25" library using the code:

Final Code to Implement Our Search Engine

Notice the function "news_search"

- We use BM250kapi to rank our corpus

- We then calculate the scores of each article as compared to our query

- Using NumPy's arg-partition, we find the indices of the top n scores from our calculated scores

- Finally, we print the top n articles based on these scores

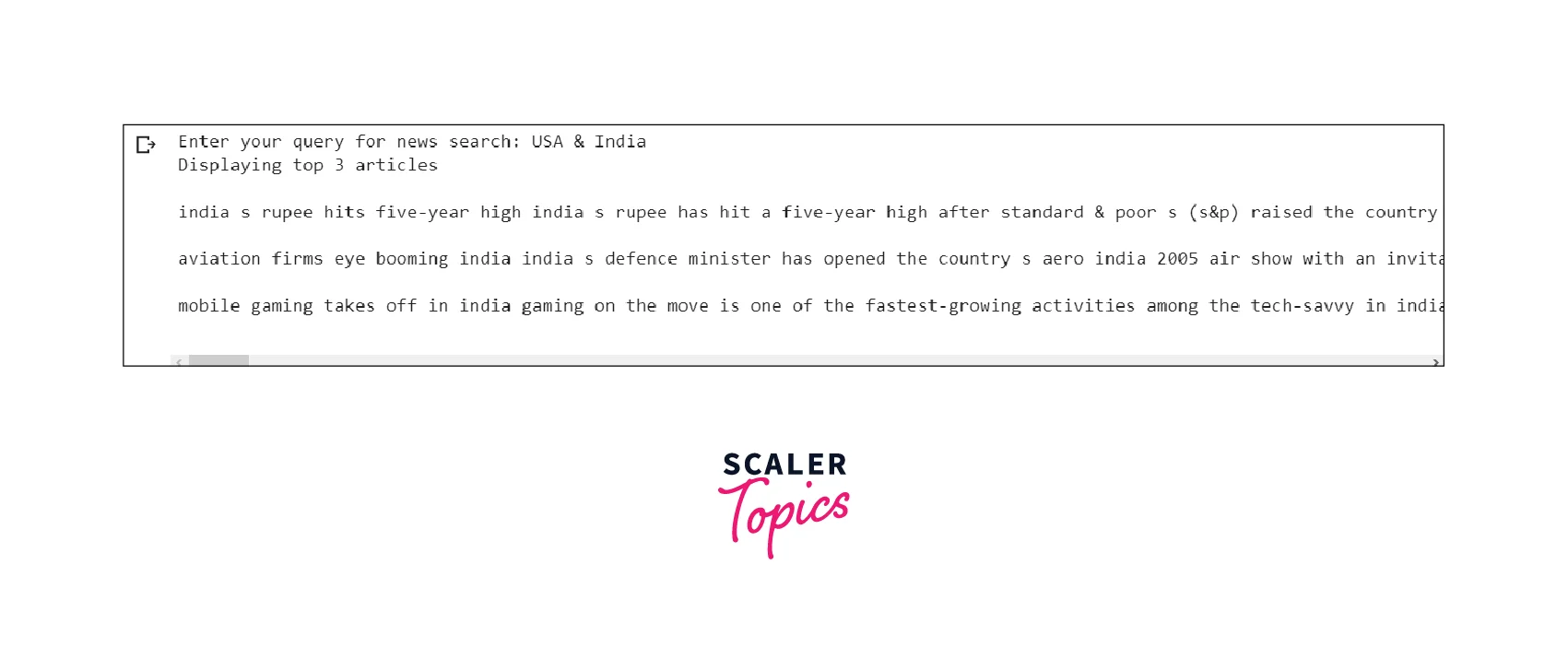

Testing our News Search Engine

Let's test our search engine and find the top 3 news for the query USA & India.

Output:

Advanced Methods

Several advanced methods can be used to build a news search engine using NLP:

-

Named Entity Recognition (NER): This method can be used to extract named entities (people, organizations, locations) from news articles and to create an index of entities and their relationships.

-

Text Summarization: This method can be used to create a summary of a news article, which can be used to give an overview of the article to the user quickly; this can be helpful in situations where the user is looking for a quick summary of the news, rather than reading the entire article. A great example of this method is "Inshorts."

-

Neural Network-based models: These models, such as BERT, GPT-2, and others, can be used to perform tasks such as text classification, question answering, and text generation, which can be used to improve the search results and provide more accurate results by understanding the context and meaning behind the news article.

Conclusion

- In this article, we built a news search engine using techniques from NLP, with a focus on document relevance.

- Even though we used BM25 for our modeling, you can use simpler algorithms like TF-IDF, though the accuracy will be lesser compared to the same due to the earlier discussed points.

- We can use even more advanced techniques to build powerful and way faster news search engines.