Building a Text Summarizer in NLP

Overview

Text summarizers in NLP are tools that can automatically analyze the text in various languages and tries to identify the most important parts of the text to shorten the text while preserving all the main points that the text contains.

Text summarization is typically done to condense a long article to main points so that we can easily get insights into their original text.

What are We Building?

Text summarization solves the problem of presenting the information needed by a user in a compact form, and there are different approaches to creating well-formed summaries.

One of the powerful methods is Latent Semantic Analysis (LSA), and we will be building a text-based summarizer based on LSA in Python and evaluating the model.

Description of Problem Statement

In recent years with the increase in computing power and explosive growth of the internet, there has been a tremendous explosion in the amount of text data on the web and in the archives of news articles, scientific papers, legal documents, and even in online product reviews.

- Text summarization plays an important role in automatic content creation, minutes of meeting generation, helping disabled people, and also for quick online document reading.

- automatic text summarization (ATS) systems are tools that help with the process of finding a subset of a document that contains the information residing in the entire document

- Text summarization system filters the significant information from the original document to generate an abbreviated version.

- The summarization process,, in general,, can also be decomposed into three phases: Analysis of document text to obtain text representation, transformation of text representation into summary representation, and transfiguration of summary representation into the summary text to generate a summary.

Classification of Text Summarization Techniques

Text summarization systems can be categorized according to how and why they are created and what kind of approach is used for the creation of summaries.

- Abstractive vs. Extractive Text Summarization: Extractive summarization is the strategy of concatenating extracts taken from a corpus into a summary, while abstractive summarization involves paraphrasing the corpus using novel sentences.

- Extractive Summarization: In this method, we identify essential phrases or sentences from the original text and extract only these phrases from the text. These extracted sentences would be the summary.

- Abstractive Summarization: In this technique, we work on generating new sentences from the original text. The abstractive method contrasts the approach described above, and the sentences generated through this approach might not even be present in the original text.

- Single vs. Multiple document-based summarization: Another categorization of summarization systems is based on whether they use single or multiple documents.

- Only single documents are used for generating the summary in a single-document summarization system.

- Multiple documents on the same subject are used for the generation of a single summary in multi-document summarization systems.

- Generic vs Query Based Summarization: Summarization systems can also be categorized as generic and query-based.

- Main topics are used to create the summary in generic summarization systems while topics that are related to the answer of a question are used for the construction of the summary in query-based summarization systems.

- Supervised vs. Unsupervised text summarization: Document summarization systems can also be categorized based on the technique they use, such as supervised and unsupervised methods.

- Supervised techniques use data sets that are labeled by human annotators.

- Unsupervised approaches do not use annotated data but linguistic and statistical information obtained from the document itself.

Algorithms for Text Summarization

- Text summarization algorithms based on machine learning: These algorithms use techniques like Naive Bayes, Decision Trees, Hidden Markov Model, Log–linear Models and also Neural Networks.

- Graph-based summarization approaches for text summarization: Well-known graph-based algorithms exist, like HITS and Google’s PageRank, which were developed to understand the structure of the Web.

- These methods can be used in text summarization where nodes represent the sentences and the edges represent the similarity among the sentences.

- Methods like TextRank and Cluster LexRank are two methods that can use a graph-based approach for document summarization.

- Algebraic methods such as Latent semantic analysis, Non-negative Matrix Factorization (NMF), and Semi-discrete Matrix Decomposition are also used for document summarization.

- LSA is the best known among these algorithms, which are based on singular value decomposition (SVD). Similarity among sentences and similarity among words are extracted in the LSA algorithm.

- The LSA algorithm is also used for document clustering and information filtering other than text summarization.

- Topic Representation Approaches: Topic representation approaches are one of the common and earliest works which aim to identify words that describe the topic of the input document.

- Topic Words: This method uses frequency thresholds to locate the descriptive words in the document and represent the topic of the document. We can also use the log-likelihood ratio test to identify explanatory words (also called as topic signatures in text summarization literature)

- Utilizing topic signature words as topic representation was very effective and increased the **accuracy of sample tasks like multi-document summarization in the news domain.

- The second step is to compute the importance of the sentences either as a function of the number of topic signatures it contains or as the proportion of the topic signatures in the sentence.

- Both sentence scoring functions relate to the same topic representation; however, they might assign different scores to sentences.

- The first method may assign higher scores to longer sentences because they have more words. The second approach measures the density of the topic words.

- Frequency-driven Approaches: When assigning weights of words in topic representations, we can think of binary (0 or 1) or real-value (continuous) weights and decide which words are more correlated to the topic. The two most common techniques in this category are word probability and TFIDF (Term Frequency Inverse Document Frequency).

- Topic Words: This method uses frequency thresholds to locate the descriptive words in the document and represent the topic of the document. We can also use the log-likelihood ratio test to identify explanatory words (also called as topic signatures in text summarization literature)

- Bayesian Topic Models: Bayesian topic models are probabilistic models that uncover and represent the topics of documents. They are quite powerful and appealing because they represent the information (as topics) that are lost in other approaches.

- The advantage is that describing and representing topics in detail enables the development of summarizer systems that can determine the similarities and differences between documents to be used in summarization

- Limitations of other topic models solved by Bayesian topic models:

- They consider the sentences as independent of each other, so topics embedded in the documents are disregarded.

- Sentence scores computed by most existing approaches typically do not have very clear probabilistic interpretations, and many of the sentence scores are calculated using heuristics.

Usage of Context in Text Summarisation Systems

Text Summarization systems often have additional evidence which can be utilized in order to specify the most important topics of the document and enhance the insights. Below are some examples.

- When summarizing blogs, the discussions and comments coming after the blog post are good sources of information to determine which parts of the blog are critical and interesting.

- When summarising scientific papers, there is a considerable amount of information, such as cited papers and conference information, which can be leveraged to identify important sentences in the original paper. I

Deep learning-based language models have proven effective and state of the art that can incorporate text from multiple sources in building summaries.

- Working of a sample deep learning for text summarization: Most deep learning models either use sequence-to-sequence models incorporating LSTM or RNN units or employ the recently popular transformer models.

- The deep learning models based on tranformer-based encoder decodes models simulate how humans summarize long documents first using an extractor agent.

- The extractor agents typically select the salient sentences or highlights and then employ an abstractor (an encoder aligner decoder model). This way, the network can rewrite each of these extracted sentences.

- To train the extractor on available document summary pairs, the model uses a policy-based reinforcement learning (RL) with sentence level metric rewards to connect both extractor and abstractor networks and to learn sentence saliency.

Latent Semantic Analysis for Text Summarization

Latent Semantic Analysis is an unsupervised, algebraic, and also statistical method that extracts hidden semantic structures of words and sentences. Since the model is unsupervised, it does not need any annotated training or external knowledge.

- LSA uses the context of the input document and extracts information such as which words are used together and which common words are seen in different sentences. A high number of common words among sentences indicates that the sentences are semantically related.

- The meaning of a sentence is decided using the words it contains, and meanings of words are decided using the sentences that contain the words.

The summarization algorithms that are based on the LSA method usually contain three main steps: Input matrix creation, SVD, and Sentence Selection.

- Input matrix creation: Each input document needs to be represented in a way that enables the algorithm to understand and perform calculations on it.

- The representation is usually a matrix representation where columns are sentences and rows are words/phrases.

- The cells are used to represent the importance of words in sentences.

- Since all words are not seen in all sentences, most of the time, the created matrix is sparse.

- Singular Value Decomposition (SUV) in LSA: SVD is also an algebraic method like LSA that is used to find out the interrelations between sentences and words.

- Besides having the capability of modeling relationships among words and sentences, SVD has the capability of noise reduction and helps to improve the accuracy of the models.

- For LSA, SVD, in particular, tries to model the relationships among words phrases, and sentences. The given input matrix A is decomposed into three new matrices such that

- U is words × extracted concepts (m×n) matrix; represents scaling values and is a diagonal descending matrix (n×n); and V is the sentences × extracted concepts (n×n)

- Sentence selection: The results of SVD can be used to select important sentences in this step. There are several methods for this, so let us look at one method briefly.

- After representing the input document in the matrix and calculating SVD values, the matrix (the matrix of extracted concepts × sentences) is used for selecting the important sentences.

- In matrix, the row order indicates the importance of the concepts such that the first row represents the most important concept extracted.

- The cell values of the matrix show the relation between the sentence and the concept. A higher cell value indicates that the sentence is more related to the concept.

- One sentence is chosen from the most important concept, and then a second sentence is chosen from the second most important concept until a predefined number of sentences are collected.

- The number of sentences to be collected in the above step can be given as a parameter to the model or selected based on eventual need.

How Are We Going to Build This?

For a set of documents that are supposed to be summarized, we shall use LSA (Latent Semantic Analysis method) in the following order to summarize the documents.

- Applying SVD to them using some sort of feature weights (we use TF-IDF weights here)

- Get the sentence vectors from the matrix V (k rows).

- Get the top k singular values from S

- We then apply a threshold based approach to remove singular values that are less than half of the largest singular value if any exist.

- This is a heuristic, and we can play around with this value if we want for better performance.

- We then multiply each term sentence column from V squared with its corresponding singular value from S also squared to get sentence weights per topic.

- At last, we compute the sum of the sentence weights across the topics and take the square root of the final score to get the salience scores for each sentence in the document.

Requirements

Import the libraries for processing data and other utilities.

Setting up the Jupyter Notebook

Jupyter Notebook can be installed from the popular setup of Anaconda or jupyter directly. Reference instructions for setting up from the source can be followed from here. Once installed, we can spin up an instance of the jupyter notebook server open a python notebook instance, and run the following code for setting up basic libraries and functionalities.

If a particular library is not found, they can simply be downloaded from within the notebook by running a system command pip install sample_library command. For example, to install seaborn, run the command from a cell within jupyter notebook like:

Building a Text Summarizer in NLP

Getting the Dataset

- For this particular text summarization problem, we are getting data from kaggle datasets related to News Summary and can be found here.

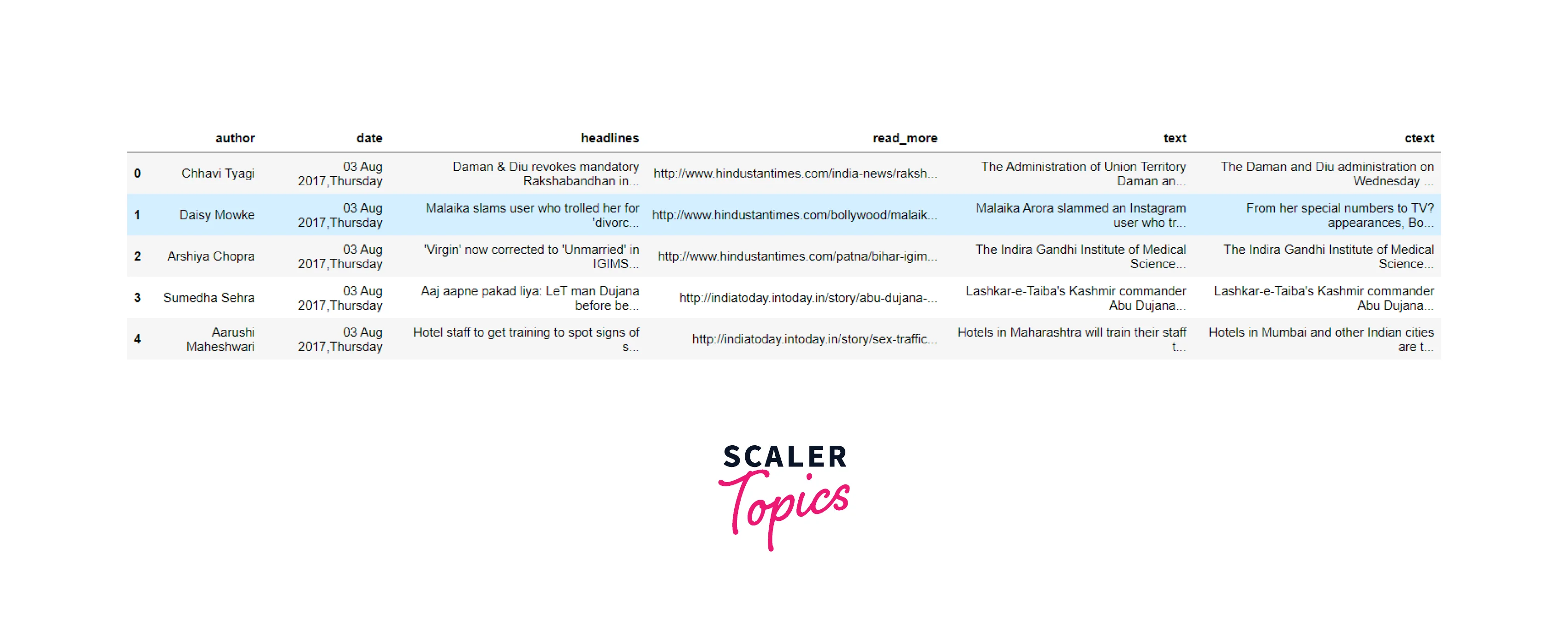

- The dataset consists of 4515 examples and contains Author_name, Headlines, Url of Article, Short text, and Complete Article. The summarized news is gathered by scraping from multiple news articles sites like the Hindu, Indian Times and Guardian, etc. The time period ranges from February to August 2017.

Loading the Dataset

- There is one file in the dataset, news_summary.csv, which needs to be downloaded separately and kept in the same location in the place where the jupyter notebook with the code which we run here is there.

- An example view of sample records from the dataset is here, and we will mainly use the last column in the dataset, ,text, which gives the complete text of the articles that were collected.

Raw Data Preprocessing

- Initial data processing on raw data for basic cleaning. We shall properly format the data and also remove the nans present in the raw dataset.

EDA & Brief description of the data

- We shall do EDA on the dataset related to the text in the corpus

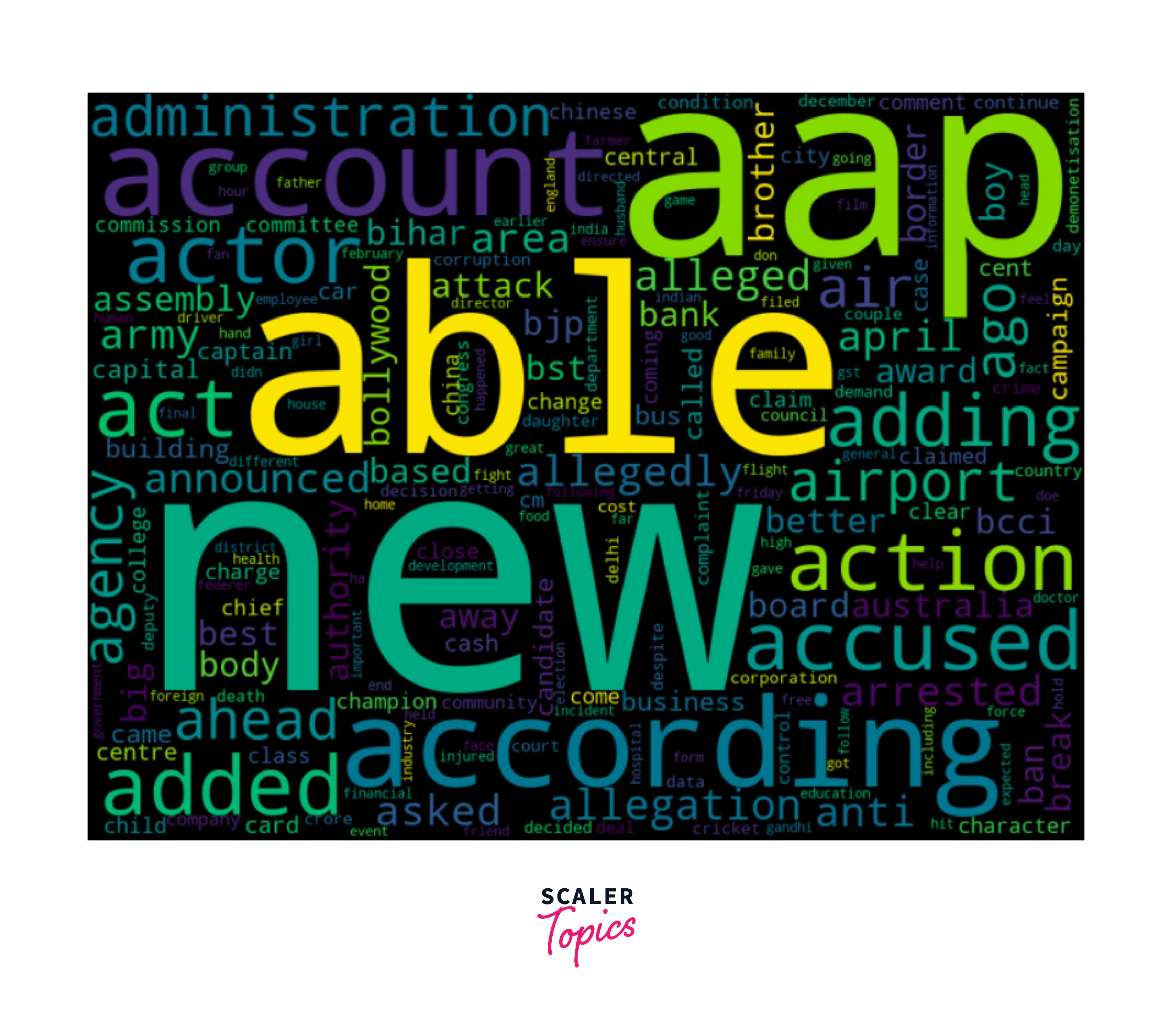

- Generate unigrams and draw word clouds to look at the most frequent words. Let us plot for unigrams, bigrams, and trigrams

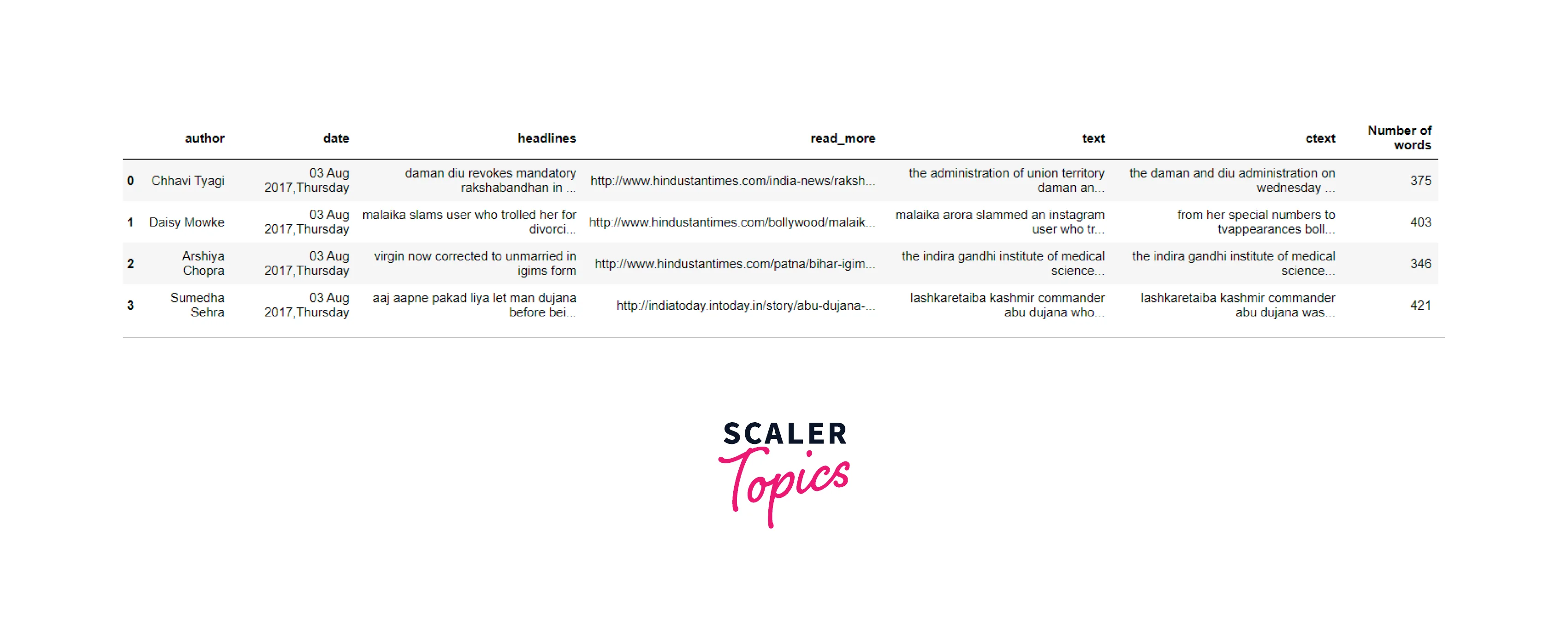

- Pre-processing: We will lowercase the text in the corpus, then split the sentences into individual words, and then remove special characters and punctuations and return the final text after processing as it is.

Sample sentence to display how our function for processing works. The output of the pre-process function looks like this for the above input sentence:

- Using the preprocessing function on the overall dataframe.

- After pre-processing, we will write a function here that can take the cleaned text and tokenize it into individual words. We will also filter the stop words again while tokenizing the corpus

- Since we pre-processed the dataframe columns directly, the output of pre-processing still looks the same, but the text in the text column will be cleaned.

Let us look at an example of original text in the corpus and how it looks like after the pre-processing:

Original input text from the example:

Result after pre-processing the text:

Build the Text Summarizer model

We will do some feature engineering and then supply SVD, then construct the overall sentence scores, which are summarized in separate steps.

- Feature Engineering: In the previous pre-processing step, we have a cleaned set of records; we need to represent text with features that models can understand. We do this with a bag of words representation using TF IDF vectorizer in sklearn and apply SVD on top of it to efficiently decompose the input matrix of TF-IDF features before building the sentence scores.

- While typically, Bag of Words representation only creates a set of vectors containing the count of word occurrences from document text, TF-IDF vectorization goes a step further to process the text such that more important words are given more weight based on relevance also but not count alone.

- Applying SVD: When we apply SVD on top of tf-idf, it gives a reduced dimensional representation of the feature matrix, making a strong emphasis on the strong relationships and removing the noise.

- This also helps us in retaining the best possible reconstruction signal of the feature matrix with the least possible information loss.

- Typically, the main hyperparameter to use SVD is to figure out how many dimensions concepts to use when approximating the feature matrix. This parameter can be learned by experimentation.

- Then we construct the sentence scores (absolute value of sentence vector in the projected conceptual space) and pick the top sentences we need as summary.

We will wrap all the steps for building the model into a single function and run it on each document for which the summary is needed.

Train and Test the Model

Let us look at the output of two sample examples from our model:

Input for Sample 1:

Output for sample 1:

Input for Sample 2:

Output for sample 1:

Limitations of LSA for Text Summarization

There are some limitations to using Latent Semantic Analysis for text summarization. Let us look at them here.

- The major issue with LSA is that it does not use the information about word order, syntactic relations, and morphologies. This kind of information can be necessary for finding out the meaning of words and texts.

- One other limitation is that it uses no world knowledge but just the information that exists in the input document.

- The other limitation is related to the performance of the algorithm.

- Also, in LSA, with larger and more inhomogeneous data, the performance decreases sharply, and mainly this decrease in performance is caused by SVD, which is a very complex algorithm.

To improve the base LSA method, we can augment it with other approaches; one sample approach can be in combining it with other models:

- Text summarizing the long textual documents using LSA topic modeling along with the TF-IDF keyword extractor for each sentence in a text document and combining that using the BERT encoder model.

- The BERT model can encode the sentences from textual documents in order to retrieve the positional embedding of topics word vectors which will use the entire context of the sentence while feeding to the LSA model.

Evaluating Systems for Text Summarizers in NLP

Human Evaluation: Human Evaluation is the simplest way to evaluate a summary created by any text summarization system, and it entails having a human assess the quality of output like some expert judges would evaluate the coverage of the summary.

- Human experts can evaluate the summary by judging how much the candidate summary covered the original input given.

- The factors that human experts must consider when giving scores to each candidate summary are grammaticality, nonredundancy, integration of most important pieces of information, structure, and coherence.

Automatic Evaluation Methods: There has been a set of metrics to automatically evaluate summaries since the early 2000s and ROUGE is the most widely used metric for automatic evaluation.

- Steps associated with automatic summary evaluation are:

- It is fundamental to decide and specify the most important parts of the original text to preserve.

- Evaluators have to automatically identify these pieces of important information in the candidate summary since this information can be represented using disparate expressions.

- The readability of the summary in terms of grammaticality and coherence has to be evaluated.

Conclusion

- Text Summarization is the process of obtaining salient information from an authentic text document.

- Text Summarization techniques are mainly classified into abstractive and extractive summarization.

- `Extractive summarization techniques produce summaries by choosing a subset of the sentences in the original text which contain the most important sentences of the input.

- Abstractive Text Summarization is the task of generating a short and concise summary that captures the salient ideas of the source text, but it may create new sentences altogether new.

- Latent semantic analysis (LSA) is an unsupervised method for extracting a representation of text semantics based on observed words and works very well in practice.