Ethical Considerations for Transformer Deployment

Overview

As artificial intelligence (AI) technology advances, the ethical questions surrounding the deployment of transformer models such as ChatGPT are growing. These models can generate human-like text, which raises concerns about misuse and manipulation of AI-generated content. Organizations and individuals who deploy them need to understand these considerations and navigate the complex landscape surrounding their use.

Transparency and Explainability

Transparency and explainability play a crucial role in deploying transformer models like GPT ethically. As AI technologies become more advanced, it becomes increasingly difficult to understand how these models arrive at their outputs. This opacity can hide biases and lead to unjust outcomes. To address this issue, organizations should strive to make their AI systems more transparent and explainable.

Documenting the Data

- One way to achieve transparency is by documenting the training data and the processes used to develop the transformer model.

- This includes providing detailed information about the sources of data, the data preprocessing steps, and the algorithms involved. Data privacy and compliance measures, such as describing the data license and permissions, belong in this documentation as well.

For example, the documentation should cover the sources of data and the data licensing:

a. Sources of Data:

- Detailed Information: Start by listing all the sources of data that were used for training. Be specific about where the data came from, whether it's publicly available datasets, proprietary data, user-generated content, or a combination of these sources.

- Data Provenance: Include information about the origin of each dataset. For instance, if web scraping was used, specify the websites and domains from which the data was collected.

b. Data Licensing and Permissions:

- Licensing Information: Clearly state the licensing terms associated with each dataset. Ensure that you have the legal rights to use the data for the intended purpose.
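The documentation items above (sources, provenance, licensing, preprocessing) can be kept in a small machine-readable record. The following is a minimal sketch, not a standard format: the `DatasetRecord` class, its field names, and the example datasets and domains are all hypothetical and chosen only for illustration.

```python
import json
from dataclasses import asdict, dataclass, field


@dataclass
class DatasetRecord:
    """One training-data source. All field names are illustrative assumptions."""
    name: str
    source_type: str   # e.g. "public", "proprietary", "user-generated"
    origin: str        # where the data came from (website, vendor, product)
    license: str       # licensing terms; an empty string means undocumented
    permitted_uses: list = field(default_factory=list)
    preprocessing: list = field(default_factory=list)


def missing_license(records):
    """Return the names of datasets whose licensing terms are not documented."""
    return [r.name for r in records if not r.license.strip()]


def to_json(records):
    """Serialize the records so they can be published alongside the model."""
    return json.dumps([asdict(r) for r in records], indent=2)


# Hypothetical example entries.
records = [
    DatasetRecord(
        name="example-forum-dump",
        source_type="user-generated",
        origin="forum.example.com",
        license="CC BY-SA 4.0",
        permitted_uses=["research", "commercial"],
        preprocessing=["deduplication", "PII removal"],
    ),
    DatasetRecord(
        name="example-web-crawl",
        source_type="public",
        origin="news.example.org",
        license="",  # not yet documented, so it should be flagged
    ),
]

print("Datasets without licensing information:", missing_license(records))
print(to_json(records))
```

A check like `missing_license` could run before each training job so that undocumented sources are caught early rather than after deployment.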

Explainability

- Organizations should consider implementing explainability techniques that allow stakeholders to understand the model's decision-making process.

- Explainability can be achieved through various methods, such as using attention mechanisms to highlight which parts of the input influenced the model's output or providing interactive interfaces that enable users to query the model for explanations.

- Organizations can build trust with users, regulators, and society by prioritizing transparency and explainability.

The following sections explore practical steps and best practices for deploying transformer models like ChatGPT.
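To make the attention-based approach mentioned above concrete, here is a toy sketch of scaled dot-product attention weights for a single query over a handful of tokens. The tokens and vectors are made up for illustration; in a real system the weights would be read from the model itself, and they are only one imperfect signal of what influenced an output.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention_weights(query, keys):
    """Scaled dot-product attention weights of one query over a list of keys."""
    scale = math.sqrt(len(query))
    scores = [sum(q * k for q, k in zip(query, key)) / scale for key in keys]
    return softmax(scores)


def top_tokens(tokens, weights, k=2):
    """Return the k tokens with the highest attention weight."""
    ranked = sorted(zip(tokens, weights), key=lambda pair: pair[1], reverse=True)
    return ranked[:k]


# Made-up input tokens and key vectors (assumptions, not real model values).
tokens = ["the", "loan", "was", "denied"]
keys = [[0.1, 0.0], [0.9, 0.4], [0.0, 0.1], [0.8, 0.9]]
query = [1.0, 1.0]

weights = attention_weights(query, keys)
for token, weight in top_tokens(tokens, weights):
    print(f"{token}: {weight:.3f}")
```

Surfacing the highest-weighted tokens next to a response is one simple way to give stakeholders a starting point for questioning an output.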

Fairness and Bias Mitigation

Fairness and bias mitigation are important aspects to consider when deploying transformer models like ChatGPT. As AI systems become more integrated into daily life, it is crucial that they treat all individuals fairly and do not perpetuate biases.

- Fairness Metrics: To assess fairness in AI-generated content, organizations should employ a variety of fairness metrics. These metrics help quantify potential biases in the model's outputs. Common fairness metrics include disparate impact (the ratio of favorable-outcome rates between groups), equal opportunity (equal true positive rates across groups), and demographic parity (equal favorable-outcome rates across groups). By continuously monitoring these metrics, organizations can identify bias early and correct it.

- Bias Detection and Mitigation: In addition to tracking fairness metrics, organizations should implement proactive bias detection and mitigation strategies. This involves regularly reviewing and analyzing the model's responses to identify any instances of bias or discrimination. When biases are detected, organizations should take steps to retrain the model on more diverse and representative datasets, addressing the root causes of bias.

- Diverse Training Data: One effective way to mitigate bias is to ensure that the training data used for transformer models is diverse and representative of the intended user base. This includes actively seeking out and including data from underrepresented groups. It's important to recognize that biased training data can lead to biased model outputs, so efforts should be made to minimize such biases at the source.

- User Feedback and Iteration: User feedback plays a vital role in bias mitigation. Organizations should actively encourage users to report any biased or harmful content generated by the model. This feedback loop allows organizations to quickly identify and address issues, improving the fairness and inclusivity of the model over time.

- Bias-Awareness Training: Training data scientists, developers, and other stakeholders in bias awareness is crucial. By raising awareness about the potential for bias and discrimination in AI-generated content, organizations can foster a culture of ethical responsibility and ensure that bias mitigation becomes an integral part of the development process.

Incorporating these strategies and practices into the deployment of transformer models helps organizations address the ethical considerations related to fairness and bias mitigation, ultimately leading to more equitable and inclusive AI-generated content.
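The three fairness metrics named above can be computed from binary outcomes per group. This is a minimal sketch under simple assumptions: predictions and labels are lists of 0/1 values, each group is non-empty, and the two groups and their data are invented for illustration. The function names are not taken from any particular library.

```python
def selection_rate(predictions):
    """Fraction of favorable (1) outcomes in a group."""
    return sum(predictions) / len(predictions)


def demographic_parity_difference(preds_a, preds_b):
    """Difference in favorable-outcome rates; 0.0 means parity."""
    return selection_rate(preds_a) - selection_rate(preds_b)


def disparate_impact_ratio(preds_unprivileged, preds_privileged):
    """Ratio of favorable-outcome rates; 1.0 means parity.
    A ratio below 0.8 is a commonly cited warning threshold
    (the "four-fifths rule")."""
    return selection_rate(preds_unprivileged) / selection_rate(preds_privileged)


def true_positive_rate(labels, predictions):
    """Fraction of truly positive cases that received a favorable outcome."""
    hits = [p for y, p in zip(labels, predictions) if y == 1]
    return sum(hits) / len(hits)


def equal_opportunity_difference(labels_a, preds_a, labels_b, preds_b):
    """Difference in true positive rates; 0.0 means equal opportunity."""
    return true_positive_rate(labels_a, preds_a) - true_positive_rate(labels_b, preds_b)


# Invented data for two hypothetical groups.
labels_a, preds_a = [1, 1, 0, 1], [1, 1, 0, 1]
labels_b, preds_b = [1, 1, 0, 0], [1, 0, 0, 0]

dp_diff = demographic_parity_difference(preds_a, preds_b)
di_ratio = disparate_impact_ratio(preds_b, preds_a)
eo_diff = equal_opportunity_difference(labels_a, preds_a, labels_b, preds_b)

print(f"Demographic parity difference: {dp_diff:.2f}")
print(f"Disparate impact ratio:        {di_ratio:.2f}")
print(f"Equal opportunity difference:  {eo_diff:.2f}")
```

For generative models, the "favorable outcome" has to be defined per use case (for example, whether a response was judged helpful or non-harmful by a reviewer), which is itself a design decision worth documenting.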

Privacy and Data Protection

Privacy and data protection are paramount when transformer models are deployed. These models rely on vast amounts of data, often including personal and sensitive information, to generate responses. Handling user data responsibly is essential to maintain user trust and adhere to legal and ethical standards.

- Data Minimization: Organizations should adopt a data minimization approach, collecting only the data necessary for the model's intended purpose. Collecting excessive or irrelevant data can increase privacy risks and potential misuse.

- Consent and Transparency: User consent is fundamental. Organizations must obtain clear and informed consent from users before collecting and using their data. Transparent privacy policies should outline the purposes of data collection, how the data will be used, and the security measures in place.

- Data Encryption: Robust data encryption measures should be implemented to protect user data in transit and at rest. Encryption ensures that even if data is intercepted or breached, it remains unreadable without the appropriate decryption keys.

- Data Anonymization and Aggregation: To minimize privacy risks, organizations can anonymize or aggregate data whenever possible. This process removes personally identifiable information, reducing the risk of exposing individual user identities.

- Data Retention Policies: Organizations should establish clear data retention policies. Data should not be retained longer than necessary for the intended purpose, and mechanisms for data deletion should be in place.

- Security Audits and Incident Response: Regular security audits and vulnerability assessments are crucial to identify and address potential weaknesses in data protection measures. Additionally, organizations must have a well-defined incident response plan in case of data breaches to minimize harm and inform affected users promptly.

- User Rights: Users should have the right to access, correct, or delete their data. Organizations should provide mechanisms for users to exercise these rights easily and effectively.

- Compliance with Regulations: It is essential to adhere to relevant data protection regulations, such as the General Data Protection Regulation (GDPR) in Europe or the California Consumer Privacy Act (CCPA) in the United States. Following these regulations helps keep data handling practices legal and ethical.
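Three of the practices listed above (data minimization, removing direct identifiers, and retention limits) can be sketched in a few lines. This is an illustration under stated assumptions, not a compliance implementation: the allowed fields, the 30-day retention period, and the placeholder key are all hypothetical, and a keyed hash is pseudonymization rather than full anonymization.

```python
import hashlib
import hmac
from datetime import datetime, timedelta, timezone

ALLOWED_FIELDS = {"user_id", "prompt", "created_at"}  # hypothetical allow-list
RETENTION = timedelta(days=30)                         # hypothetical policy
SECRET_KEY = b"replace-with-a-managed-secret"          # placeholder only


def minimize(record):
    """Keep only the fields the stated purpose needs (data minimization)."""
    return {k: v for k, v in record.items() if k in ALLOWED_FIELDS}


def pseudonymize(user_id):
    """Replace an identifier with a keyed hash so records stay linkable
    without storing the raw ID."""
    return hmac.new(SECRET_KEY, user_id.encode("utf-8"), hashlib.sha256).hexdigest()


def prepare_for_storage(record):
    """Apply minimization, then pseudonymize the remaining identifier."""
    kept = minimize(record)
    kept["user_id"] = pseudonymize(kept["user_id"])
    return kept


def is_expired(record, now):
    """True if the record is older than the retention period."""
    return now - record["created_at"] > RETENTION


# Invented example record containing fields that should not be stored.
raw = {
    "user_id": "alice",
    "email": "alice@example.com",
    "ip_address": "203.0.113.7",
    "prompt": "How do I reset my password?",
    "created_at": datetime(2024, 1, 1, tzinfo=timezone.utc),
}

stored = prepare_for_storage(raw)
now = datetime(2024, 3, 1, tzinfo=timezone.utc)

print(sorted(stored))           # field names that survive minimization
print(is_expired(stored, now))  # whether the record is due for deletion
```

In practice the key would come from a secrets manager, and expired records would be deleted by a scheduled job rather than checked ad hoc.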

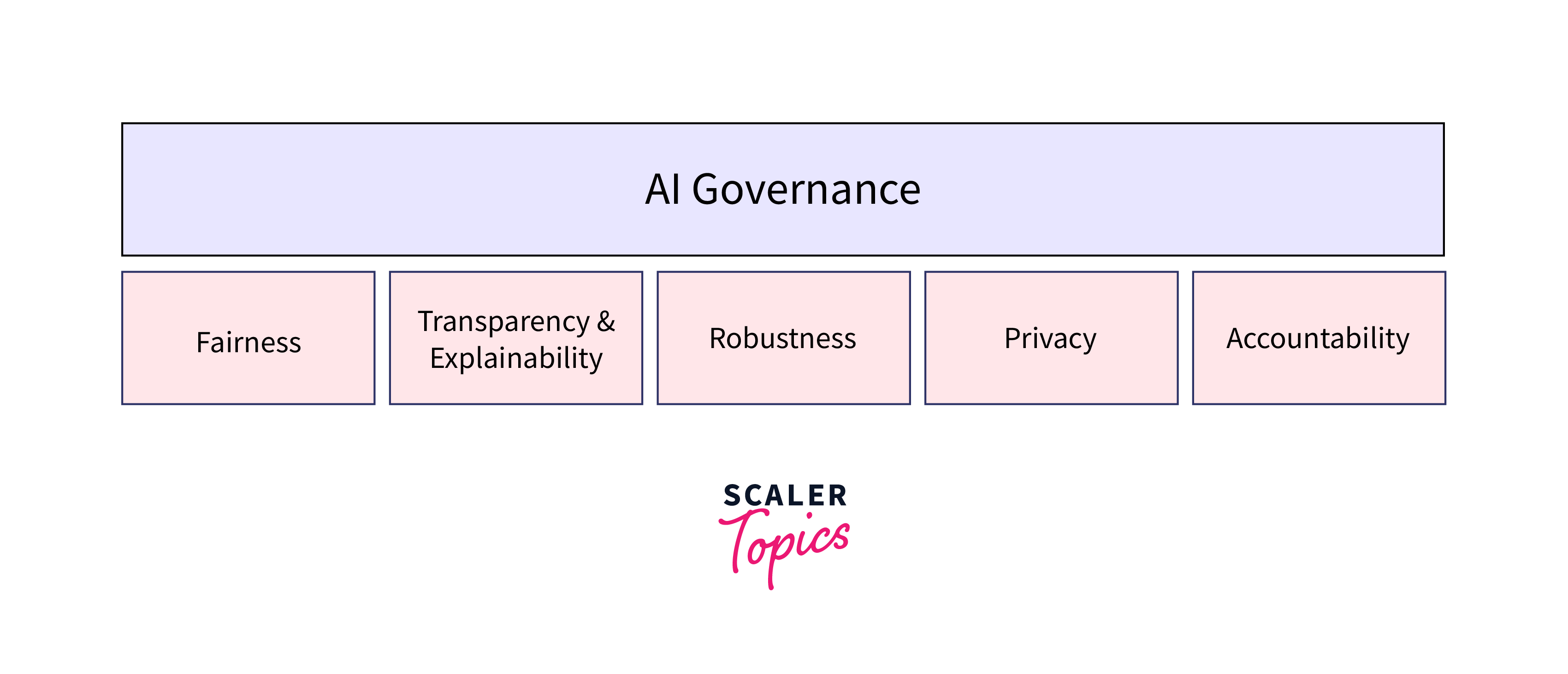

Responsible AI Governance

Responsible AI governance is a critical aspect of deploying transformer models like GPT. It gives organizations a framework to monitor and regulate the use of these models, mitigating potential risks and ensuring that ethical considerations are met.

- One important element is fostering accountability. Organizations should establish clear lines of responsibility and assign individuals or teams to oversee the deployment and usage of transformer models. These individuals should be well-versed in ethical considerations and have the authority to make decisions that prioritize user privacy and safety.

- Transparency is another key component. Organizations should communicate to users how their data will be used and give them the ability to opt out if they do not wish to participate. Additionally, organizations should be transparent about the limitations of the transformer models and any biases that may exist in the generated responses.
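Honoring an opt-out has to happen in the data pipeline, not only in the privacy policy. A minimal sketch, assuming records carry a `user_id` field and that opt-outs are available as a collection of IDs (both are assumptions made for illustration):

```python
def filter_opted_out(records, opted_out_user_ids):
    """Drop records belonging to users who opted out of data use."""
    blocked = set(opted_out_user_ids)
    return [r for r in records if r["user_id"] not in blocked]


# Invented example data.
records = [
    {"user_id": "u1", "prompt": "first message"},
    {"user_id": "u2", "prompt": "second message"},
    {"user_id": "u1", "prompt": "third message"},
]
opted_out = {"u1"}

usable = filter_opted_out(records, opted_out)
print(usable)
```

Running a filter like this immediately before any training or analytics job means a late opt-out is still respected.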

By implementing a robust governance framework, organizations can ensure that transformer models like ChatGPT are deployed ethically and responsibly, protecting user privacy and building trust with their users.

The Importance of Regular Monitoring and Evaluation

- Once transformer models like ChatGPT are deployed, it is crucial for organizations to prioritize regular monitoring and evaluation. This ongoing process allows for the identification and mitigation of any potential ethical issues that may arise during the model's use.

- Monitoring the performance of the model ensures that harmful or biased responses are identified and addressed promptly. By regularly evaluating the output and seeking feedback from users, organizations can more effectively detect and rectify any instances of misinformation, hate speech, or discriminatory language.

- Furthermore, monitoring and evaluation enable organizations to continuously improve the model's performance and fine-tune its responses. By understanding the strengths and limitations of the model, developers can implement necessary updates and avoid potential pitfalls.
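One simple way to operationalize the monitoring described above is to track the share of recent responses that were flagged, whether by users or by reviewers, and raise an alert when it crosses a threshold. The class below is a sketch under assumptions: the window size and threshold are arbitrary example values, and how a response gets flagged is left to the deployment.

```python
from collections import deque


class FlagRateMonitor:
    """Tracks the fraction of flagged responses over a rolling window."""

    def __init__(self, window_size=100, alert_threshold=0.05):
        self.window = deque(maxlen=window_size)  # oldest entries drop off
        self.alert_threshold = alert_threshold

    def record(self, flagged):
        """Record whether one response was flagged as harmful or biased."""
        self.window.append(bool(flagged))

    def flag_rate(self):
        """Share of flagged responses in the current window."""
        if not self.window:
            return 0.0
        return sum(self.window) / len(self.window)

    def should_alert(self):
        """True when the flag rate exceeds the configured threshold."""
        return self.flag_rate() > self.alert_threshold


# Example values chosen only for illustration.
monitor = FlagRateMonitor(window_size=10, alert_threshold=0.2)
for flagged in [False] * 8 + [True] * 3:
    monitor.record(flagged)

print(f"Flag rate: {monitor.flag_rate():.2f}")
print("Alert:", monitor.should_alert())
```

A rolling window keeps the signal focused on recent behavior, so a regression after a model update shows up quickly instead of being averaged away by older traffic.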

In addition, organizations should actively seek external input and engage with the wider community to understand the broader societal impact of their deployed transformer models. This collaboration can help identify ethical blind spots and ensure that the models are aligned with societal needs and values.

Use Case Specific Ethical Considerations

When deploying transformer models like ChatGPT, it is crucial to weigh the ethical considerations that are specific to the use case. Different industries and applications have their own challenges and risks that must be addressed.

- One such consideration is the potential for harmful or malicious use of the model. Organizations should carefully evaluate the potential impact of their deployed transformer model and take steps to mitigate any negative consequences. For example, in the healthcare industry, safeguards must be put in place to ensure that the model does not provide incorrect medical advice or compromise patient privacy.

- Another key consideration is the potential for bias in the generated responses. Transformer models are trained on large datasets, which can introduce biases present in the training data. Organizations should be vigilant in detecting and addressing biases to ensure fair and inclusive outcomes. This may involve regularly monitoring and analyzing the model's responses, as well as actively seeking diverse perspectives during the model development process.

Furthermore, organizations should be cognizant of the risks associated with data security and privacy. Transformer models rely on vast amounts of data, including user inputs, to generate responses. It is essential to have robust data security measures in place to protect this sensitive information. Organizations should also consider anonymizing or aggregating data to minimize the risk of exposing individual users' identities.

Conclusion

In conclusion, deploying transformer models like ChatGPT requires careful ethical consideration. Here are some key takeaways:

- Understand the potential for harmful or malicious use and take steps to mitigate negative consequences. Detect and address biases in the generated responses to ensure fair and inclusive outcomes.

- Implement robust data security measures to protect sensitive information and consider anonymizing or aggregating data to minimize privacy risks.

- Regular monitoring and evaluation demonstrate a commitment to ethical principles and responsible AI deployment. They are a vital part of navigating the ethical considerations that come with deploying transformer models like ChatGPT. By actively engaging in this process, organizations can strive to ensure fair, unbiased, and inclusive outcomes for all users.