Introduction to Google Conversational AI

Overview

Google Conversational AI is a groundbreaking technology that leverages Google's expertise in artificial intelligence and natural language processing to create conversational experiences. By combining tools like Dialogflow and Google Assistant, developers can craft interactions that understand and respond to user inputs naturally and intuitively. From google ai chatbot to voice assistants, Google's Conversational AI is shaping the future of human-computer interaction.

Google Meena: End-to-End, Neural Conversational Model

Background

Conversational AI has rapidly evolved to make machine-human interactions natural and seamless. Early Google AI chatbots and voice assistants were limited to rule-based responses. With a shift towards deep learning and neural networks, their capabilities expanded significantly. Google's Meena, built on extensive NLP and machine learning research, leads this evolution. It marks a major advancement in training large neural models for human-like text in conversations. This brief overview showcases the journey to Meena's creation and underscores its significance in conversational AI.

Technical Specifications

Google Meena is the epitome of state-of-the-art conversational models characterized by its vastness and intricacy. Here's a detailed look into its technical facets:

-

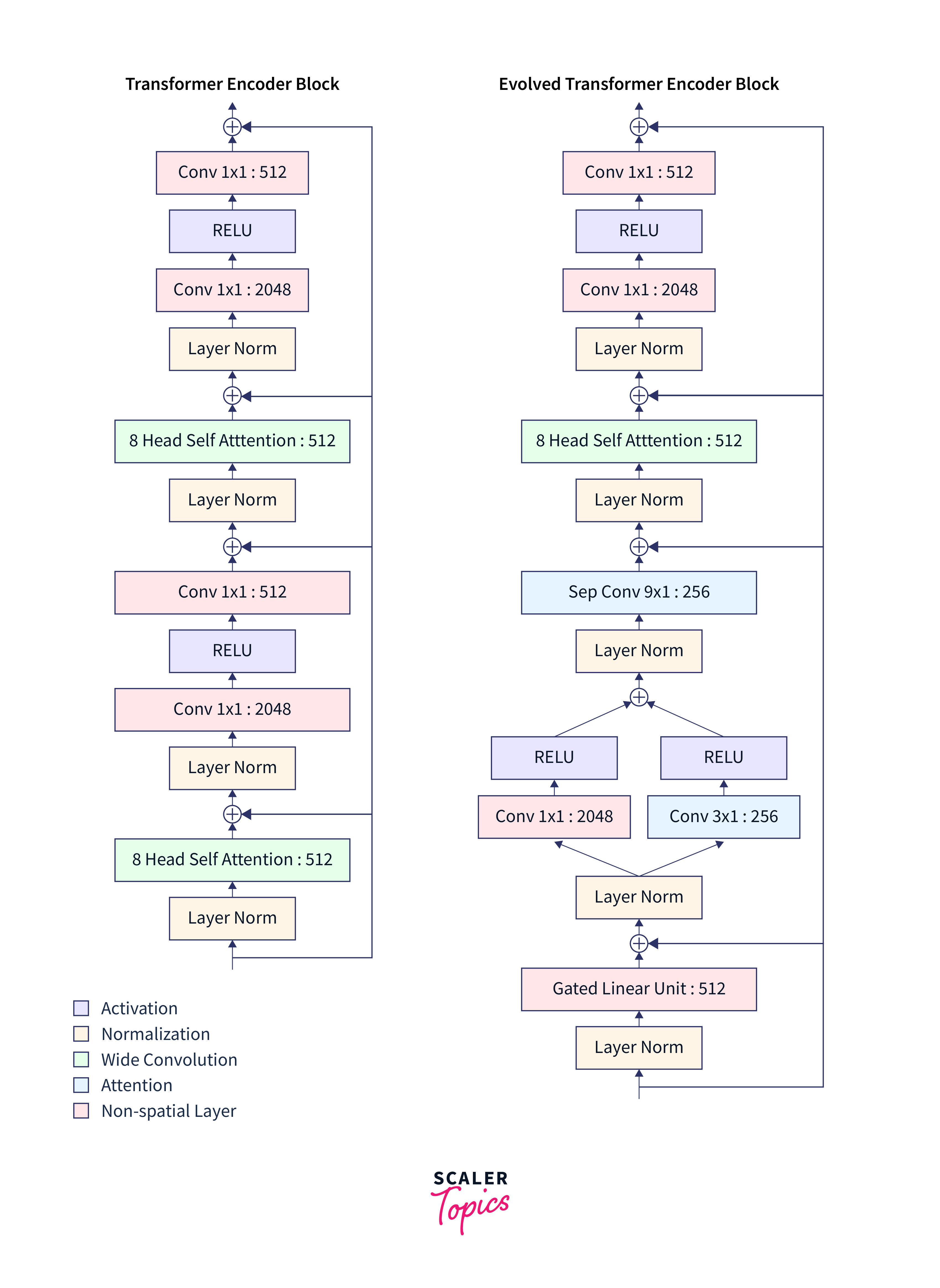

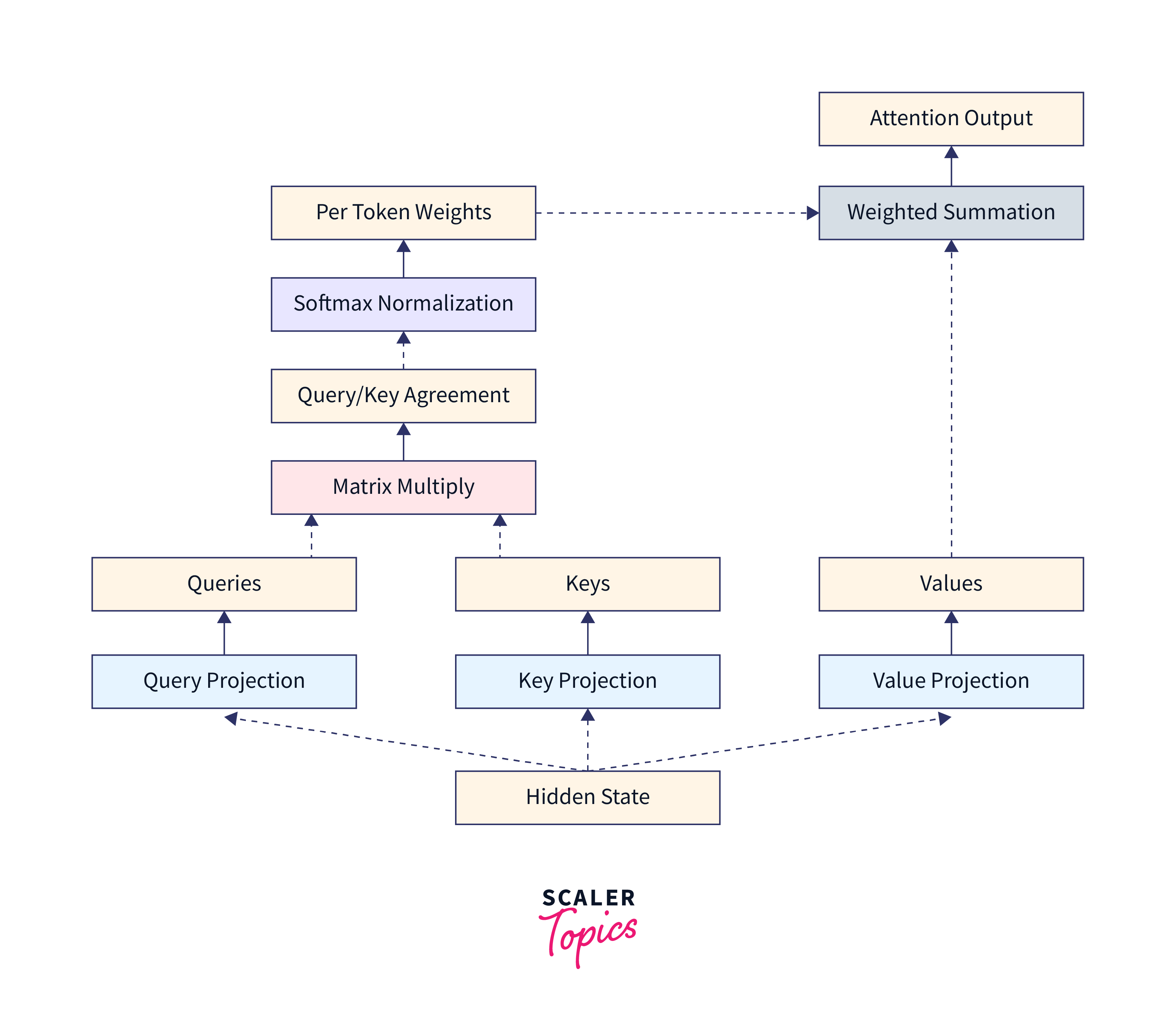

Model Architecture: Meena is based on the Transformer architecture, specifically the seq2seq variant, which has become a gold standard for various NLP tasks.

-

Parameters: With 2.6 billion parameters, Meena is one of the largest models of its kind. This vast number of parameters allows Meena to capture intricate patterns in human language.

-

Training Data: Meena was trained on a corpus of 341 GB of text, underscoring its ability to process various conversational scenarios.

-

SSA Score: Google introduced the Sensibleness and Specificity Average (SSA) to evaluate Meena's performance. It's a benchmark to measure how logical and specific a model's responses are in various situations.

-

Reinforcement Learning: Beyond traditional supervised training, Meena utilizes reinforcement learning from human feedback, enabling it to fine-tune its responses for more human-like interactions.

These specifications not only cement Meena's capabilities but also highlight Google's dedication to pushing the boundaries in the realm of conversational AI.

Features and Capabilities

Google Meena is a powerful conversational agent with notable features:

-

Conversational Depth and Coherence: Meena excels in maintaining depth and coherence in dialogues, ensuring context is preserved in prolonged conversations. This distinguishes it from models that tend to stray off-topic.

-

Multitasking Abilities: Meena is adept at handling multiple tasks simultaneously, swiftly transitioning between topics and providing accurate responses even in complex scenarios.

-

Language Support and Versatility: Beyond English, Meena is proficient in multiple languages, accommodating a diverse user base. It also understands various dialects, colloquialisms, and cultural nuances, enhancing interactions for users from different linguistic backgrounds.

These features collectively make Meena capable of delivering seamless, intelligent interactions, regardless of conversation complexity.

Future Research and Challenges

The journey of creating a conversational AI model like Google Meena is an ongoing one. Despite the cutting-edge features and capabilities, there remain areas that call for continuous research and improvement:

-

Bias and Neutrality: One of the most pressing challenges in AI today is addressing bias. Ensuring Meena provides unbiased responses that cater to diverse populations, cultures, and viewpoints is paramount. Google has been investing in de-biasing techniques, but the task remains a significant research focus.

-

Complex Context Handling: While Meena shows a strong understanding of context, there are layers of human conversation – filled with nuances, emotions, and implicit meanings – that can be difficult for any AI model to grasp fully. Enhancing this understanding is an area where more research is needed.

-

Real-time Learning: While Meena is trained on vast datasets, real-time learning from conversations and adapting instantaneously is a challenge that can further improve the model's efficiency and accuracy.

-

Integration with Multiple Platforms: As conversational AI becomes more ubiquitous, integrating Meena seamlessly across various platforms – from smartphones to IoT devices – presents both an opportunity and a challenge.

Applications and Use Cases

The advent of advanced conversational AI models like Google Meena has opened up many applications across diverse domains. The inherent capability to understand and generate human-like text allows for various uses. Here are some potential applications and use cases for Google Meena:

-

Customer Support: Automating customer service with a more human touch, Meena can handle a wide range of queries, complaints, and issues, offering timely solutions and reducing the load on human agents.

-

Virtual Personal Assistants: Beyond the simple commands and responses, Meena can power virtual assistants that understand context, perform multitasking operations, and provide more intuitive interactions.

-

E-Learning: Meena can assist in educational platforms, offering students personalized tutoring, answering questions, and engaging in deep, meaningful conversations about complex subjects.

-

Entertainment: In gaming and interactive storytelling, Meena can serve as characters that players can converse with, enhancing immersion and offering dynamic story arcs based on conversations.

-

Business Analytics: By understanding the context and nuances of business data, Meena can provide insightful analyses, forecasts, and suggestions to decision-makers.

-

Healthcare: In telemedicine platforms, Meena can provide preliminary diagnoses based on symptoms described by patients, guide them through medical procedures, or even offer therapeutic interactions.

-

IoT Devices: In homes equipped with smart devices, Meena can serve as the central interaction point, understanding user preferences, offering suggestions, and controlling various devices based on conversational commands.

-

Research: Scientists and researchers can use Meena to brainstorm, discuss complex theories, or even simulate interactions in social science experiments.

These applications only scratch the surface. As the technology matures and integrates with other advanced systems, the potential use cases for Google Meena will expand, touching virtually every aspect of our daily lives.

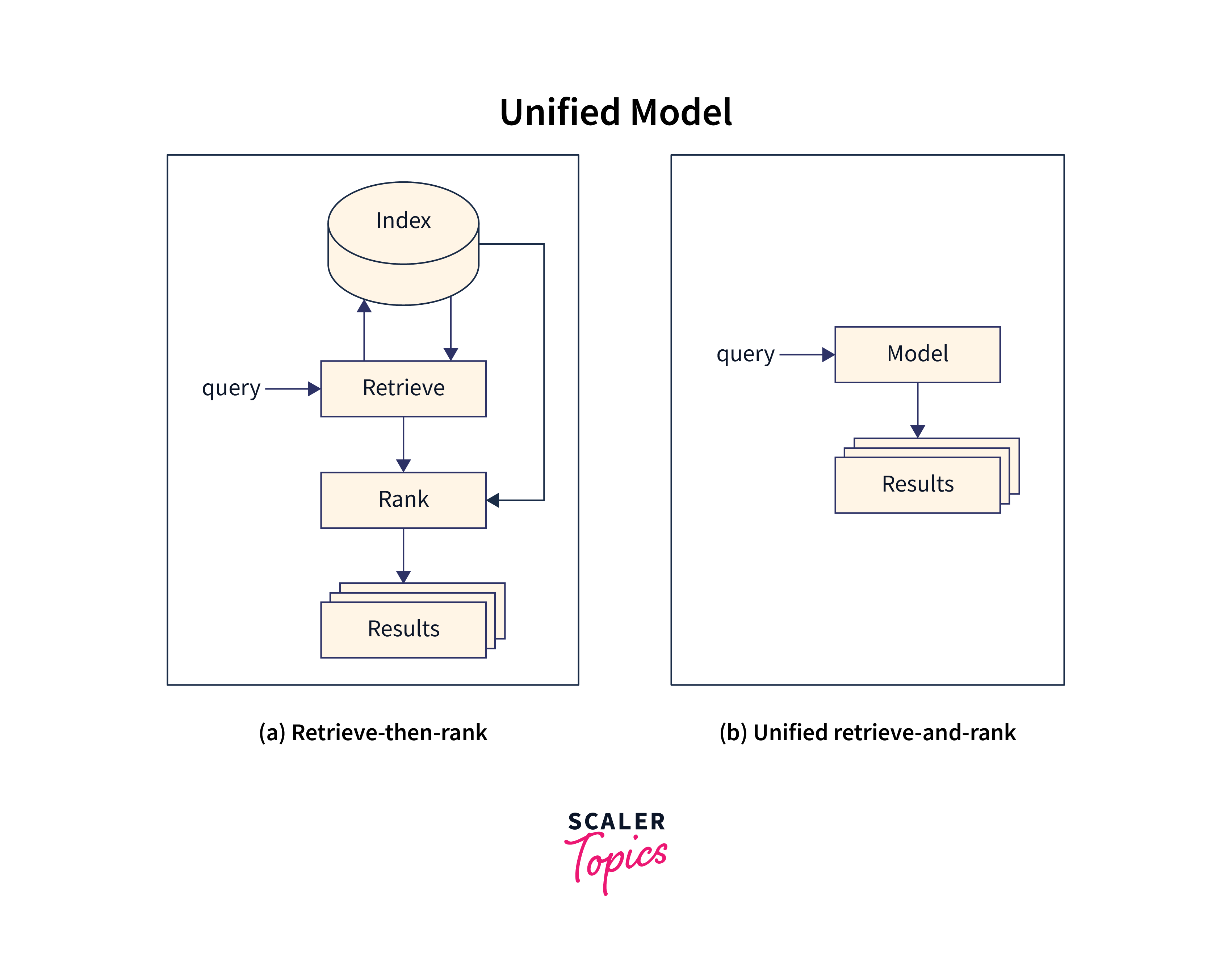

MUM: Multitask Unified Model

Introduction

Google's Multitask Unified Model (MUM) is another monumental step in artificial intelligence. It represents a leap beyond traditional search algorithms and conversational models, offering a more integrated and versatile approach to understanding and generating information. Built on the same Transformer architecture as BERT, MUM goes several steps further, designed to handle multiple tasks simultaneously, understanding information across a vast array of languages, and even bridging modalities like text, images, and potentially video. This powerful capability means that MUM is not just another conversational model; it's a holistic tool that aims to redefine our interactions with digital information, making them more seamless, contextual, and enriched.

Background

The digital evolution has continually driven the demand for richer, faster, and more contextualized user experiences. As data grows both in volume and complexity, there's been an imperative need for models that can understand and generate multifaceted information. Google, staying at the forefront of AI research, recognized these challenges and set out to innovate beyond the capabilities of the already groundbreaking BERT model. Enter MUM, which stands for Multitask Unified Model. Conceptualized as a successor to BERT, MUM's foundation is rooted in the Transformer architecture but amplified to multitask across diverse data formats and languages. Its design was driven by the vision to provide more comprehensive answers to complex questions, transcending the limitations of earlier models that operated in more siloed contexts.

Technical Specifications

MUM, like its predecessor BERT, is rooted in the Transformer architecture, but it comes with several advancements that set it apart:

-

Scale: MUM is estimated to be 1,000 times more powerful in processing abilities than BERT. This increase in scale allows it to handle more complex tasks and provide richer results.

-

Multimodal Abilities: Unlike many solely text-based models, MUM can understand and generate information across multiple modalities, like text and images, simultaneously.

-

Multitasking Capabilities: While most models are trained for specific tasks individually, MUM is designed for multitasking. It can perform multiple tasks without the need for task-specific model training.

-

Languages: MUM's extensive training dataset covers 75 languages, allowing it to understand and generate information in diverse languages.

-

Efficiency: Despite its increased power and capabilities, MUM maintains computation efficiency and can deliver results rapidly, crucial for real-time applications.

The versatility and robustness of MUM's technical specifications underscore Google's commitment to pushing the boundaries of what conversational AI models can achieve.

Features and Capabilities

MUM brings forth a new era of conversational AI with a suite of impressive features:

-

Cross-Modal Understanding: MUM seamlessly integrates text, image, and potentially other modalities to understand and provide more contextually relevant answers.

-

Deep Semantic Search: Instead of relying on keyword-based searches, MUM can understand the deep semantics of a query, offering more nuanced and contextually relevant results.

-

Real-time Translation and Transliteration: With support for 75 languages, MUM can perform real-time translations, allowing users to converse or search in their preferred language.

-

Context Preservation: MUM can understand the broader context of a conversation. It can recall previous parts of a conversation, ensuring the interactions feel more natural and less repetitive.

-

Adaptability: MUM can be used across various applications without retraining due to its multitasking nature. This adaptability means it can be quickly deployed in diverse scenarios ranging from search engines to virtual assistants.

MUM's inherent features and capabilities highlight its potential to revolutionize how users interact with technology, creating more intuitive, efficient, and personalized experiences.

Future Research and Challenges

MUM, as groundbreaking as it is, still has areas awaiting exploration and hurdles to overcome:

-

Data Biases: Like many AI models, MUM can be influenced by biases present in its training data. Addressing and minimizing these biases is paramount for ethical and accurate responses.

-

Complexity Management: The multitasking nature of MUM, while a strength, also means that its training and deployment are complex. Ensuring the model remains efficient without compromising its capabilities will be a focal point.

-

Privacy Concerns: With MUM's deep understanding capabilities, user data privacy becomes more crucial. Ensuring the model respects user boundaries and does not inadvertently infringe upon privacy rights is essential.

-

Continuous Learning: While MUM possesses a deep understanding, the ever-evolving nature of languages and user interactions necessitates continuous learning to stay relevant and accurate.

-

Interactivity Limitations: Despite MUM's advanced capabilities, there may be instances where the model might not fully grasp a user's intent or could misinterpret contextual cues. Research into enhancing its interactivity and context understanding is ongoing.

Applications and Use Cases

The Multitask Unified Model (MUM) is more than just a technological marvel; it offers practical solutions to numerous real-world challenges:

-

Search Enhancements: MUM can deeply understand and generate more contextually relevant search results, easily answering multifaceted questions. For instance, if a user asks, "What's the best way to plant tomatoes and prevent pests?", MUM can provide a comprehensive response addressing planting and pest prevention.

-

Content Creation: With its profound language understanding, MUM can assist content creators in drafting articles, providing content suggestions, and even translating complex topics into simpler language for broader audiences.

-

Virtual Assistance: MUM's multitasking abilities make it an ideal candidate for advanced virtual assistants, capable of handling complex queries, scheduling tasks, and even offering contextual recommendations based on user preferences.

-

E-learning: In educational platforms, MUM can serve as a tutor, answering intricate questions, offering detailed explanations, and even providing multi-language support for students worldwide.

-

Business Intelligence: MUM can analyze vast amounts of data, extracting insights, forecasting trends, and offering actionable business recommendations. Its ability to understand context can be invaluable for finance, healthcare, and retail industries.

-

Cultural and Language Bridging: MUM's extensive language support can aid in breaking down language barriers, translating not just words but also cultural nuances, and making global communication smoother.

These are just a handful of the myriad applications MUM offers. As developers and businesses explore its capabilities further, it's poised to revolutionize numerous sectors, enhancing efficiency and user experience.

Reformer: The Efficient Transformer

Introduction

Reformer is an evolution in the family of transformer models. Introduced by researchers at Google, Reformer was designed to tackle the limitations of earlier transformer models, chiefly their significant memory consumption and computational requirements. With unique approaches such as locality-sensitive hashing (LSH) for attention and reversible layers, Reformer offers the deep learning community a model that can handle long sequences without memory overhead, making it efficient and scalable.

Background

Since its inception, the Transformer architecture has become the backbone of various state-of-the-art models in Natural Language Processing and other domains. However, its Achilles' heel has always been its voracious appetite for memory, especially when dealing with longer sequences. This challenge has restricted its deployment in resource-constrained environments and has hindered the processing of extensive texts.

The reformer needed a transformer model that retains the architecture's strengths while eliminating its inefficiencies. By introducing techniques like LSH attention and reversible layers, the Reformer model drastically reduces memory usage without a significant performance drop. This efficiency ensures the model can process texts as long as novels in a single go!

Technical Specifications

-

Locality-Sensitive Hashing (LSH) Attention: Traditional attention mechanisms scale poorly with sequence length. LSH attention breaks the sequences into fixed-length chunks and employs hashing techniques to attend to only a subset of tokens, thereby making the attention mechanism scalable.

-

Reversible Residual Layers: One of the primary memory costs during training comes from storing activations for backpropagation. The reformer uses reversible layers that allow the recomputation of activations during the backward pass, eliminating the need to store them and thus reducing memory consumption.

-

Axial Positional Encodings: Instead of standard positional encodings, Reformer uses axial positional encodings. This method splits the input into rows and columns, encoding each separately, which is both memory-efficient and retains positional information effectively.

-

Byte-Pair Encoding: To handle a vast vocabulary efficiently, Reformer incorporates a variant of Byte-Pair Encoding (BPE), allowing it to manage out-of-vocabulary words and reduce the embedding size.

With these technical innovations, Reformer achieves impressive performance with a fraction of the resources required by traditional transformer models.

Future Research and Challenges

Reformer has undeniably advanced the capabilities of transformer models, making them more memory-efficient and versatile. However, as with any groundbreaking technology, there are areas of potential improvement and challenges:

-

Training Stability: Incorporating reversible layers and LSH attention has occasionally led to training stability issues. Further research is needed to optimize these components for various tasks and datasets.

-

Adaptability: While Reformer is designed for long sequences, how it adapts to various other tasks, especially those that were not its primary focus, remains a topic of exploration.

-

Comparison with Sparse Attention: With the rise of models using sparse attention mechanisms, there's a need for comparative studies to ascertain the most effective approach for sequence handling.

-

Integration with Other Techniques: How Reformer's unique techniques integrate with other advancements in the deep learning domain, such as model distillation or pruning, is yet to be deeply explored.

Applications and Use Cases

Reformer, with its ability to handle exceptionally long sequences, has a broad spectrum of potential applications:

-

Processing Entire Documents: Traditional models often require lengthy documents to be split into smaller chunks. Reformers can process an entire article, research paper, or even a book in one go, making it invaluable for document summarization, topic modeling, or sentiment analysis at a holistic level.

-

Code Analysis: With the rise of code as text, models like Reformer can be used for code completion, bug detection, and even code summarization, given its ability to handle long strings of code efficiently.

-

Genomic Sequencing: DNA sequences are inherently long, and their analysis requires models capable of processing extended sequences. Reformers can be applied in bioinformatics for tasks like DNA sequence alignment or mutation detection.

-

Real-time Translation: For live translations, especially in formal settings like international conferences, capturing the context from long sentences or paragraphs is crucial. Reformer's capability to handle longer contexts makes it an ideal choice for such applications.

In essence, any application requiring the processing of extended sequences can potentially benefit from the capabilities that Reformer brings to the table.

How to Improve Transformer Efficiency?

Transformers have revolutionized the world of deep learning, especially in natural language processing tasks, owing to their exceptional ability to handle context and provide state-of-the-art results. However, their computation and memory demands tend to increase quadratically with sequence length, making them less efficient for longer sequences. The ever-growing demand for faster, leaner, and more effective models has propelled researchers to investigate methods to enhance transformer efficiency. A few of these methods aim to address the challenge of long sequences without sacrificing too much, if any, of the transformer's power.

1. Linformer

- Introduction: Linformer is designed to approximate the self-attention mechanism of the transformer to be of linear complexity rather than quadratic.

- How It Improves Efficiency: It limits the context of tokens to a fixed size. Rather than attending to all previous tokens in the sequence, each token attends only to a fixed number of recent tokens, thus ensuring linear complexity.

- Application: Especially useful for tasks that involve very long sequences, such as document classification or genome sequencing.

2. Longformer

- Introduction: Longformer introduces a hybrid self-attention mechanism. It combines local windowed attention with global attention.

- How It Improves Efficiency: By using local attention for the majority of tokens and selective global attention for certain tokens, Longformer maintains a balance between computational efficiency and the ability to focus on important tokens.

- Application: Real-time document search, coreference resolution, and other tasks that require understanding over longer contexts.

3. Performer

- Introduction: Performer is a transformer model that leverages kernel-based self-attention methods and random feature maps to approximate the full-rank attention mechanism.

- How It Improves Efficiency: The performer significantly reduces the computational burden by approximating the attention scores using positive random features, thereby achieving linear time complexity.

- Application: Any task that benefits from transformers but requires faster processing, including large-scale language models or real-time analysis.

4. Sparse Attention Patterns

- Introduction: Instead of using full attention, models can be designed to focus only on specific patterns, leading to sparse attention mechanisms.

- How It Improves Efficiency: The computational requirements are drastically reduced by only attending to certain parts of the input.

- Application: Tasks where certain input parts are more relevant than others, like document summarization, where only key points might be needed.

5. Model Pruning

- Introduction: Model pruning involves eliminating certain parts of the model (like weights) that have minimal contribution to the final output.

- How It Improves Efficiency: By reducing the model's size, memory, and computational requirements are reduced, leading to faster inference times.

- Application: Situations with crucial real-time processing or limited resources, such as on-edge devices.

Improving transformer efficiency is crucial for their broader application, especially as data grows in size and complexity. The methods mentioned above are just the tip of the iceberg; research in this domain is ongoing and vibrant.

Google Bard

Google Bard is a large language model google ai chatbot developed by Google. It is still under development, but it has learned to perform many kinds of tasks, including

- Answer your questions in an informative way, even if they are open-ended, challenging, or strange.

- Generate different creative text formats of text content, like poems, code, scripts, musical pieces, emails, letters, etc.

- Translate languages.

- Write different kinds of creative content.

- Follow your instructions and complete your requests thoughtfully.

- Bard is still under development and may sometimes give inaccurate or inappropriate responses. You can help make Bard better by leaving feedback.

Bard is available in over 40 languages and over 230 countries and territories. To use Bard, you need to sign in with a Google Account.

Comparison of Various Google Chatbots

Given the details you've shared, here's how the comparison of various Google ai chatbot can be represented in tabular form:

| Chatbot Name | Nature | Primary Features | Best For |

|---|---|---|---|

| Google Assistant | Virtual assistant embedded in various Google devices and services. | Voice recognition, smart home device control, reminders, real-time information retrieval, etc. | General user queries, smart home control, and everyday tasks. |

| Google Meena | End-to-End neural conversational model. | Conversational depth and coherence, capability to generate contextually relevant responses over multiple turns. | More in-depth, multi-turn conversations with a broader context. |

| Google Duplex | An AI-driven phone call system. | Can make restaurant reservations, book appointments, and get holiday hours via phone call. Exceptional voice naturalness. | Specific task-oriented phone call operations, replicating human-like conversation tones. |

| Google Chat | Enterprise team communication tool. | Direct & team messaging, virtual rooms, bot integrations. | Collaborative tasks, business communications. |

| Dialogflow | End-to-end development suite for creating conversational interfaces. | Natural Language Processing (NLP) to understand and respond to user inputs, supports voice and text-based chats. | Developers and businesses looking to integrate AI chat capabilities into their products or services. |

Conclusion

- The realm of chatbots and conversational AI has witnessed rapid advancements, with Google leading the innovation with models tailored for diverse needs.

- While primarily designed for conversations, the applications of these chatbots stretch to areas like business communication, device control, and developer tools.

- Models like Meena emphasize the breadth and depth of conversations, underscoring the importance of context and coherence.

- The push for efficiency has led to linear and linear innovations, addressing the Transformer architecture's computational challenges.

- As technology progresses, the future promises even more sophisticated, human-like conversational models.