Top Alternatives to the GPT-3 Model

Overview

Within the fast-moving field of natural language processing (NLP), the GPT-3 model (Generative Pre-trained Transformer 3) has established itself as a capable text generator. However, the AI and NLP landscape keeps evolving, and several notable GPT-3 alternatives have emerged. This article looks at key GPT-3 alternatives, examining each model's distinguishing traits, potential applications, and likely advantages.

What is GPT?

GPT, which stands for Generative Pre-trained Transformer, is a series of language processing models developed by OpenAI. These models are built on the transformer architecture, known for its ability to capture contextual relationships within text. GPT models undergo pre-training using extensive textual data, which enables them to generate coherent and contextually appropriate text in response to prompts.

GPT-3, the third iteration of the GPT model, is particularly notable for its immense scale, boasting 175 billion parameters. This large scale empowers GPT-3 to produce human-like text across various subjects and writing styles. Its applications span a wide range of domains, including content creation, chatbots, code generation, and more.
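To put the 175 billion figure in perspective, the following back-of-the-envelope sketch estimates how much memory the weights alone would occupy. The helper name `weight_memory_gb` is hypothetical, and the calculation deliberately ignores activations, optimizer state, and any compression, so treat it as a rough lower bound rather than a deployment figure.

```python
def weight_memory_gb(num_parameters, bytes_per_parameter):
    """Memory needed to store the weights only, in decimal gigabytes."""
    return num_parameters * bytes_per_parameter / 1e9

GPT3_PARAMETERS = 175_000_000_000  # parameter count quoted in the text

# Common numeric formats and their size in bytes per parameter.
for name, nbytes in (("float32", 4), ("float16", 2)):
    print(f"{name}: {weight_memory_gb(GPT3_PARAMETERS, nbytes):.0f} GB")
```

Even in half precision the weights run to hundreds of gigabytes, which is one practical reason smaller alternatives are attractive.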

While GPT-3 is powerful and versatile, exploring alternative models can provide valuable insights into the capabilities and potential of AI-powered language processing. Some of the top GPT-3 alternatives include openly released models such as OPT by Meta and AlexaTM by Amazon.

These models offer accessible options for developers and researchers to leverage AI language processing capabilities. Additionally, several AI writing and chat tools, some built on GPT models and some on competing ones, can help generate high-quality content quickly and easily. Examples include Bing AI, Google Bard, and ChatSonic. These tools can be used to enhance workflows and increase productivity.

Alternatives to GPT-3

These GPT-3 alternatives fall into distinct categories based on their characteristics and approaches:

- Structural Innovations

Models like T0 (T5) challenge the conventional model structure by adopting a text-to-text format, simplifying training and application across many NLP tasks.

- Contextual Comprehension Focus

DeBERTa specializes in tasks requiring in-depth contextual comprehension, as evidenced by its focus on long-range dependencies and coreference resolution.

- Controllable Generation

By enabling users to adjust the style and tone of generated information, Turing NLG meets the need for customisable text generation.

- Knowledge Integration

ERNIE stands out by integrating external knowledge sources, enhancing its ability to understand and generate text with real-world context.

These categories highlight the variety of options accessible, addressing various NLP requirements and extending the limits of language processing.

Let's examine each of the models closely.

T0 (Google's T5)

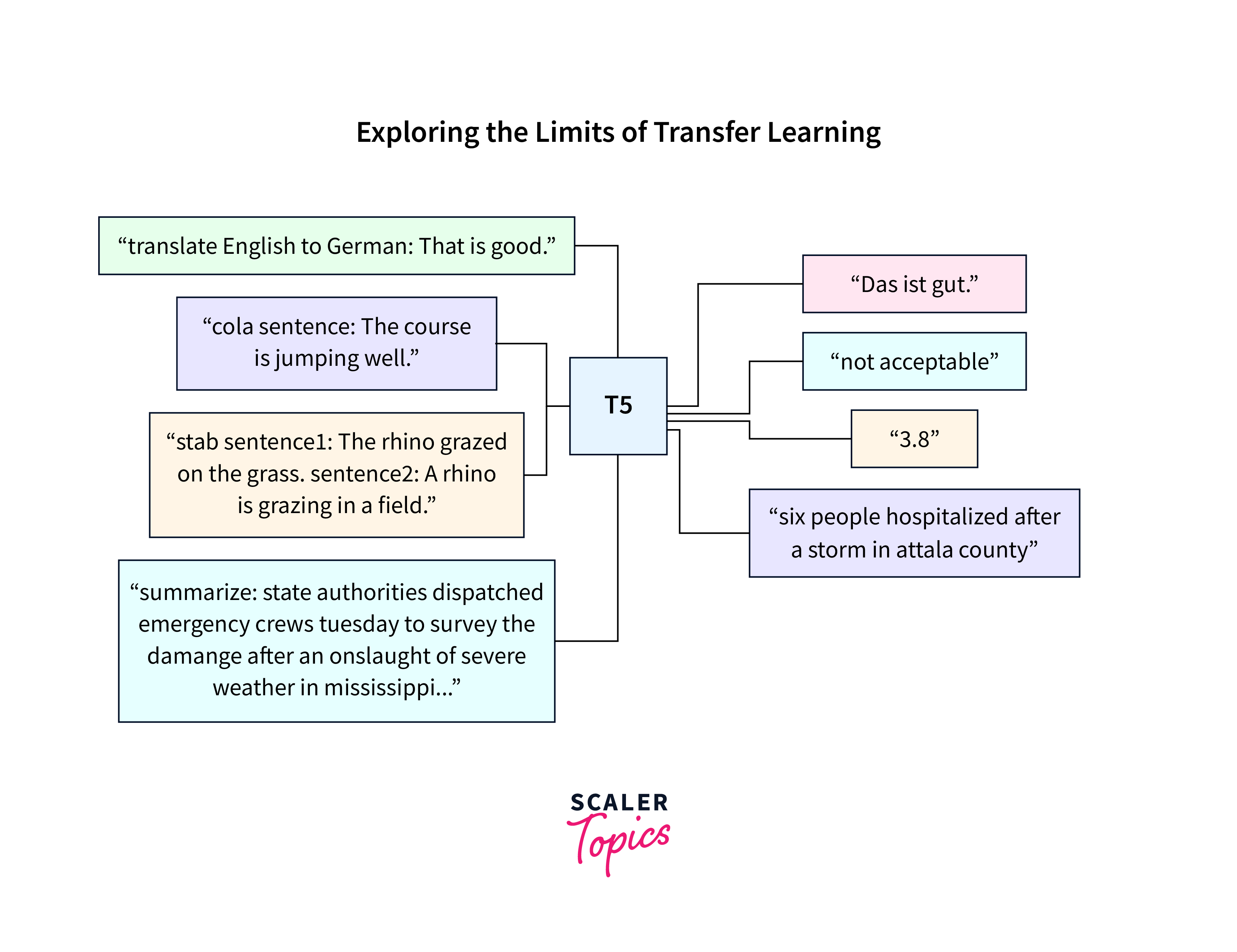

Google's Text-To-Text Transfer Transformer (T5), the model on which BigScience's T0 is built, frames all NLP tasks as text-to-text problems. This means inputs and outputs are both treated as text sequences, providing a consistent and unified framework for various language tasks.

T5's innovation lies in converting varied NLP tasks, such as translation, summarization, and question answering, into one standardised format: the task is named in a short text prefix, and the answer is produced as text. This removes the need for task-specific architectures and simplifies training and deployment. The approach facilitates transfer learning while also streamlining model management. Because T5 is pre-trained on enormous amounts of text and can be fine-tuned for particular tasks, it is a flexible and effective substitute for models like GPT-3.
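To make the text-to-text framing concrete, here is a minimal sketch in plain Python with no model involved. The prefixes follow the conventions commonly used with the public T5 checkpoints; the helper name `to_text_to_text` and the sample sentences and targets are made up for illustration.

```python
# Every task becomes "input string -> target string"; only the prefix changes.
TASK_PREFIXES = {
    "translation": "translate English to French: ",
    "summarization": "summarize: ",
    "acceptability": "cola sentence: ",
}

def to_text_to_text(task, source, target):
    """Return an (input_text, target_text) pair for the given task."""
    if task not in TASK_PREFIXES:
        raise ValueError(f"unknown task: {task}")
    return TASK_PREFIXES[task] + source, target

# Illustrative pairs: three different tasks, one shared format.
examples = [
    to_text_to_text("translation", "The house is wonderful.", "La maison est merveilleuse."),
    to_text_to_text("summarization", "A long article about transformers ...", "Short summary."),
    to_text_to_text("acceptability", "The book read was by me.", "unacceptable"),
]

for input_text, target_text in examples:
    print(f"{input_text!r} -> {target_text!r}")
```

Because even a classification label is emitted as a string, the same model, loss, and decoding procedure can serve all three tasks.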

Let's illustrate what makes T5 distinctive using a specific task: text translation. T5's text-to-text approach is easy to see in this context.

We'll use the Hugging Face Transformers library and the t5-small checkpoint from the Hugging Face Model Hub to implement a simple text translation example.
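The original listing is not reproduced here, so the following is a minimal sketch of what such an example might look like. It assumes the `transformers`, `torch`, and `sentencepiece` packages are installed and that the `t5-small` checkpoint can be downloaded; the function names `build_prompt` and `translate` are hypothetical.

```python
def build_prompt(task_prefix, text):
    """Prepend the task prefix so T5 knows which task to perform."""
    return f"{task_prefix}: {text}"

def translate(text, task_prefix="translate English to French", checkpoint="t5-small"):
    """Translate `text` with a T5 checkpoint (downloads the model on first use)."""
    # Imported lazily so build_prompt works without the heavy dependencies.
    from transformers import AutoModelForSeq2SeqLM, AutoTokenizer

    tokenizer = AutoTokenizer.from_pretrained(checkpoint)
    model = AutoModelForSeq2SeqLM.from_pretrained(checkpoint)

    # Tokenize the prefixed source text and generate the target sequence.
    inputs = tokenizer(build_prompt(task_prefix, text), return_tensors="pt")
    output_ids = model.generate(**inputs, max_new_tokens=40)

    # Convert the generated token ids back into readable text.
    return tokenizer.decode(output_ids[0], skip_special_tokens=True)

# Example call:
# print(translate("The weather is nice today."))
```

Changing only `task_prefix` (for example to "summarize") reuses the same model and code path for a different task, which is the practical payoff of the text-to-text design.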

In this example, the model is loaded using the t5-small checkpoint, and the source text is specified along with the task prefix ("translate English to French"). The model generates the translation, and the output is decoded into human-readable text.

DeBERTa

DeBERTa, a creation from Microsoft, emerges as a prominent alternative to GPT-3, especially in deep contextual understanding. "DeBERTa" stands for "Decoding-enhanced BERT with disentangled attention," highlighting its unique approach to language processing.

At its core, DeBERTa is built upon the BERT (Bidirectional Encoder Representations from Transformers) architecture, known for its proficiency in capturing bidirectional contextual relationships within text. However, DeBERTa extends this foundation by addressing a crucial challenge: comprehending long-range dependencies and relationships within sentences and documents.

The innovation lies in the disentangled attention mechanism: each token is represented by two separate vectors, one for its content and one for its relative position, and attention scores are computed from both. Keeping the two signals separate lets DeBERTa weigh what a word means and where it sits independently. This capability is advantageous for tasks that require understanding complex connections and interdependencies across a document, such as coreference resolution and document-level understanding.
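The idea behind disentangled attention can be sketched in a few lines of plain Python. This is a simplified, single-head illustration based on the published description of DeBERTa (content-to-content, content-to-position, and position-to-content terms, scaled by the square root of 3d), not Microsoft's implementation. The random vectors stand in for learned projections, and all function names are hypothetical.

```python
import math
import random

def relative_bucket(i, j, k):
    """Map the signed distance i - j to a bucket index in [0, 2k)."""
    d = i - j
    if d <= -k:
        return 0
    if d >= k:
        return 2 * k - 1
    return d + k

def dot(u, v):
    return sum(a * b for a, b in zip(u, v))

def softmax(row):
    m = max(row)
    exps = [math.exp(x - m) for x in row]
    total = sum(exps)
    return [e / total for e in exps]

def disentangled_attention(q_c, k_c, q_r, k_r, k):
    """q_c, k_c: content queries/keys per token; q_r, k_r: position queries/keys per bucket."""
    n, d = len(q_c), len(q_c[0])
    scale = math.sqrt(3 * d)  # three score terms are summed
    weights = []
    for i in range(n):
        row = []
        for j in range(n):
            c2c = dot(q_c[i], k_c[j])                         # content-to-content
            c2p = dot(q_c[i], k_r[relative_bucket(i, j, k)])  # content-to-position
            p2c = dot(k_c[j], q_r[relative_bucket(j, i, k)])  # position-to-content
            row.append((c2c + c2p + p2c) / scale)
        weights.append(softmax(row))
    return weights

rng = random.Random(0)

def rand_matrix(rows, cols):
    return [[rng.uniform(-1, 1) for _ in range(cols)] for _ in range(rows)]

n_tokens, dim, max_dist = 5, 4, 2
weights = disentangled_attention(
    rand_matrix(n_tokens, dim), rand_matrix(n_tokens, dim),
    rand_matrix(2 * max_dist, dim), rand_matrix(2 * max_dist, dim),
    max_dist,
)
print([round(w, 3) for w in weights[0]])  # attention of token 0 over all tokens
```

Note that position enters only through the relative distance between two tokens, so the same position vectors are shared by every pair that is the same distance apart.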

Use a pretrained DeBERTa model to perform sentiment analysis using the Hugging Face Transformers library:
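The original listing is not reproduced here; the sketch below shows one way such an example could be written. It assumes `transformers` and `torch` are installed, and the label order in `LABELS` is an assumption. The "microsoft/deberta-base" checkpoint ships without a trained sentiment head, so the classifier layer is randomly initialized and the predictions only become meaningful after fine-tuning on labeled data.

```python
import math

LABELS = ("negative", "positive")  # assumed label order, not defined by the checkpoint

def softmax(logits):
    """Turn raw logits into probabilities that sum to 1."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]

def interpret(logits, labels=LABELS):
    """Return the label with the highest logit and its softmax confidence."""
    probs = softmax(logits)
    best = max(range(len(probs)), key=probs.__getitem__)
    return labels[best], probs[best]

def classify(text, checkpoint="microsoft/deberta-base"):
    """Run a DeBERTa sequence classifier on `text` (downloads the model on first use)."""
    # Imported lazily so the helpers above work without the heavy dependencies.
    import torch
    from transformers import AutoModelForSequenceClassification, AutoTokenizer

    tokenizer = AutoTokenizer.from_pretrained(checkpoint)
    # num_labels=2 attaches a fresh, untrained two-class head to the base model.
    model = AutoModelForSequenceClassification.from_pretrained(checkpoint, num_labels=2)
    model.eval()

    inputs = tokenizer(text, return_tensors="pt", truncation=True)
    with torch.no_grad():
        logits = model(**inputs).logits[0].tolist()
    return interpret(logits)

# Example call:
# print(classify("I really enjoyed this film."))
```

For usable predictions, pass a DeBERTa checkpoint that has already been fine-tuned for sentiment classification as `checkpoint`, and adjust `LABELS` to match that model's label mapping.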

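In this example, the DeBERTa model "microsoft/deberta-base" is loaded. The input text is tokenized using the DeBERTa tokenizer. The model's output logits are used to predict the sentiment, and the sentiment prediction is interpreted based on the highest logit value. The confidence score is obtained by applying a softmax to the logits. Note that "microsoft/deberta-base" is a general pre-trained checkpoint, so its classification head is randomly initialized and must be fine-tuned on labeled sentiment data before the predictions are meaningful.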

Turing NLG

Microsoft's Turing Natural Language Generation (NLG) is an AI-powered language generation contender. One of its defining features is its focus on controllable language generation, allowing users to influence the generated text's style, tone, and other aspects.

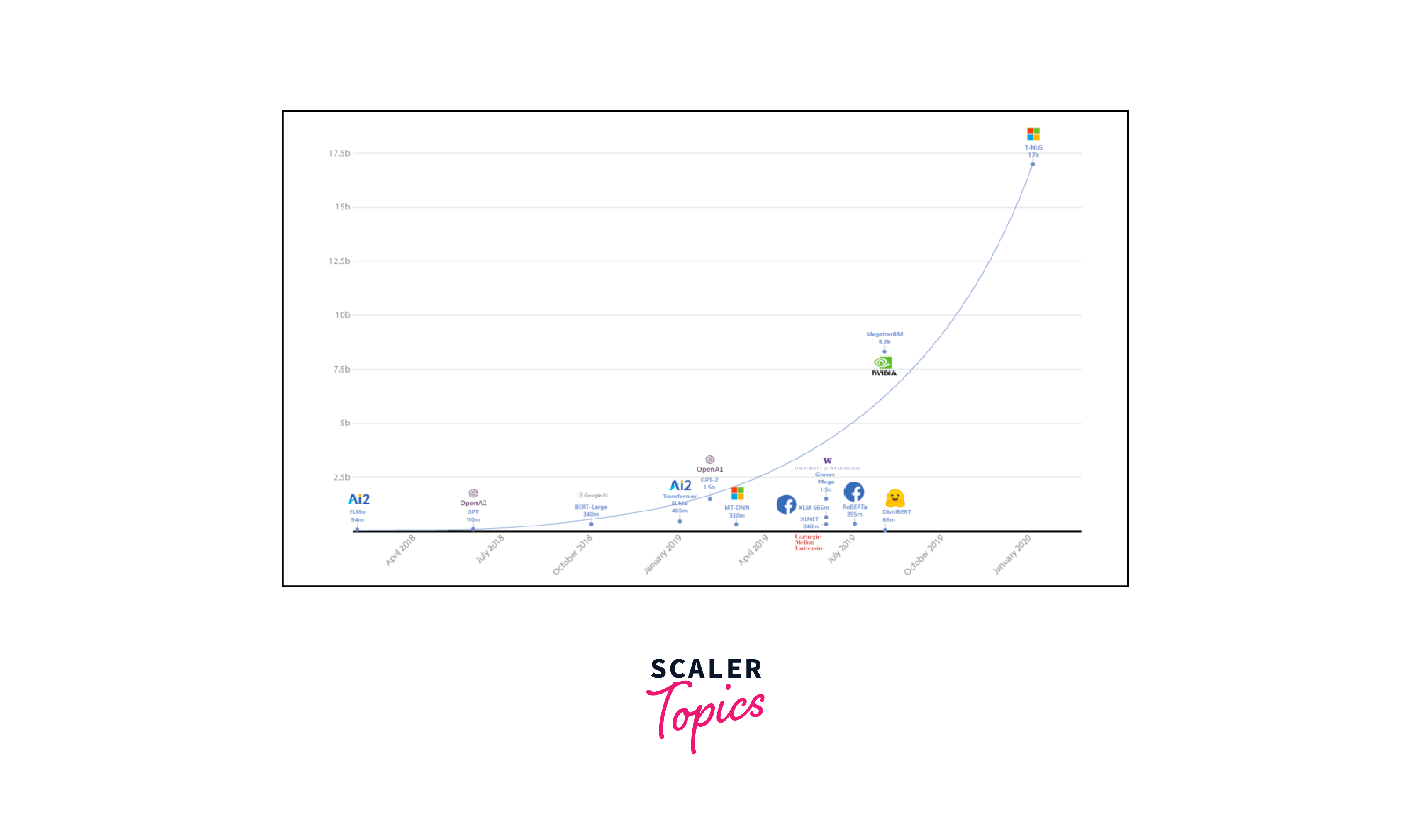

Turing Natural Language Generation (T-NLG) is a 17 billion parameter language model.

Turing NLG achieves this controllability through innovative techniques, including reinforcement learning and fine-tuning. This enables users to specify requirements and constraints, ensuring the generated content adheres to predefined guidelines. This level of control makes Turing NLG particularly suitable for applications that demand tailored and contextually precise language generation, such as content creation for marketing materials, social media posts, and more.

Turing NLG expands the horizons of what AI-driven content creation can achieve by offering a controllable approach to language generation. As you delve deeper into the intricacies of Turing NLG and its capabilities, you'll discover the potential for shaping the narrative with fine-grained control over the language generation process.

Turing-NLG has yet to be made available to the general public, and Microsoft has yet to state whether this is intended. However, the corporation does claim that Turing-NLG's contributions to Project Turing are "being integrated into multiple Microsoft products, including Bing, Office, and Xbox."

ERNIE

The Baidu-developed model ERNIE (Enhanced Representation via Knowledge IntEgration) is a game-changer in natural language processing. ERNIE stands out due to its distinctive method of language comprehension, which incorporates outside knowledge sources into the process of learning representations.

Traditional language models like GPT-3 excel at understanding and generating text based solely on the data they've been trained on. However, ERNIE takes a step further by incorporating factual information from the real world. This knowledge enrichment enables ERNIE to understand text in context better and generate responses that reflect a deeper comprehension of the language and the subject matter. The incorporation of external knowledge sources also equips ERNIE with improved reasoning capabilities. Whether answering questions, classifying text, or performing other language-related tasks, ERNIE's ability to draw from a broader pool of information enhances its accuracy and relevance.
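One published way Baidu's ERNIE injects knowledge during pre-training is to mask whole entities and phrases rather than individual word pieces, so the model has to recall the entity from context. The following is a simplified sketch of that idea, not Baidu's implementation. The function name `mask_entities` and the hand-written entity spans are assumptions for illustration; in practice the spans would come from an entity recognizer or a knowledge base.

```python
import random

MASK = "[MASK]"

def mask_entities(tokens, entity_spans, mask_prob=0.5, rng=None):
    """Mask whole entity spans instead of single tokens.

    entity_spans is a list of (start, end) half-open token index ranges.
    """
    rng = rng or random.Random()
    masked = list(tokens)
    for start, end in entity_spans:
        # Decide once per entity, then hide every token in the span.
        if rng.random() < mask_prob:
            for i in range(start, end):
                masked[i] = MASK
    return masked

tokens = ["Harry", "Potter", "was", "written", "by", "J.", "K.", "Rowling"]
spans = [(0, 2), (5, 8)]  # "Harry Potter" and "J. K. Rowling"

print(mask_entities(tokens, spans, mask_prob=1.0))
```

With ordinary token-level masking, a model could often guess "Potter" from "Harry" alone; masking the full span forces it to rely on the relationship between the book and its author.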

The code below demonstrates how to use the ERNIE model for contextualized language understanding.
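The original listing is not reproduced here, so this is a minimal sketch of how contextual sentence embeddings might be obtained from an ERNIE checkpoint through Hugging Face Transformers. It assumes `transformers` and `torch` are installed. The checkpoint name "nghuyong/ernie-2.0-base-en" is an assumption (a community-converted English ERNIE 2.0 model on the Model Hub), and the function names are hypothetical.

```python
import math

def cosine_similarity(u, v):
    """Cosine of the angle between two vectors; 0.0 if either is all zeros."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0

def embed(sentence, checkpoint="nghuyong/ernie-2.0-base-en"):
    """Mean-pool ERNIE's last hidden layer into one vector per sentence."""
    # Imported lazily so cosine_similarity works without the heavy dependencies.
    import torch
    from transformers import AutoModel, AutoTokenizer

    tokenizer = AutoTokenizer.from_pretrained(checkpoint)
    model = AutoModel.from_pretrained(checkpoint)
    model.eval()

    inputs = tokenizer(sentence, return_tensors="pt")
    with torch.no_grad():
        hidden = model(**inputs).last_hidden_state  # shape: (1, seq_len, hidden_size)
    return hidden.mean(dim=1)[0].tolist()

# Example call:
# a = embed("The bank raised interest rates.")
# b = embed("The central bank tightened monetary policy.")
# print(cosine_similarity(a, b))
```

Mean pooling is only one simple way to get a sentence vector; task-specific fine-tuning would normally replace it for question answering or classification.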

Conclusion

- In natural language processing (NLP), GPT-3 is a prominent model capable of generating human-like text across diverse domains.

- Google's T5 reframes NLP tasks as text-to-text problems, simplifying training and deployment.

- Microsoft's DeBERTa focuses on deep contextual comprehension, while Turing NLG offers controlled text generation, and Baidu's ERNIE integrates external knowledge for enhanced understanding.

- Together, these GPT-3 alternatives broaden what is possible in NLP, offering a range of approaches beyond GPT-3 itself.