Myths and Reality Around Large Language Models

Overview

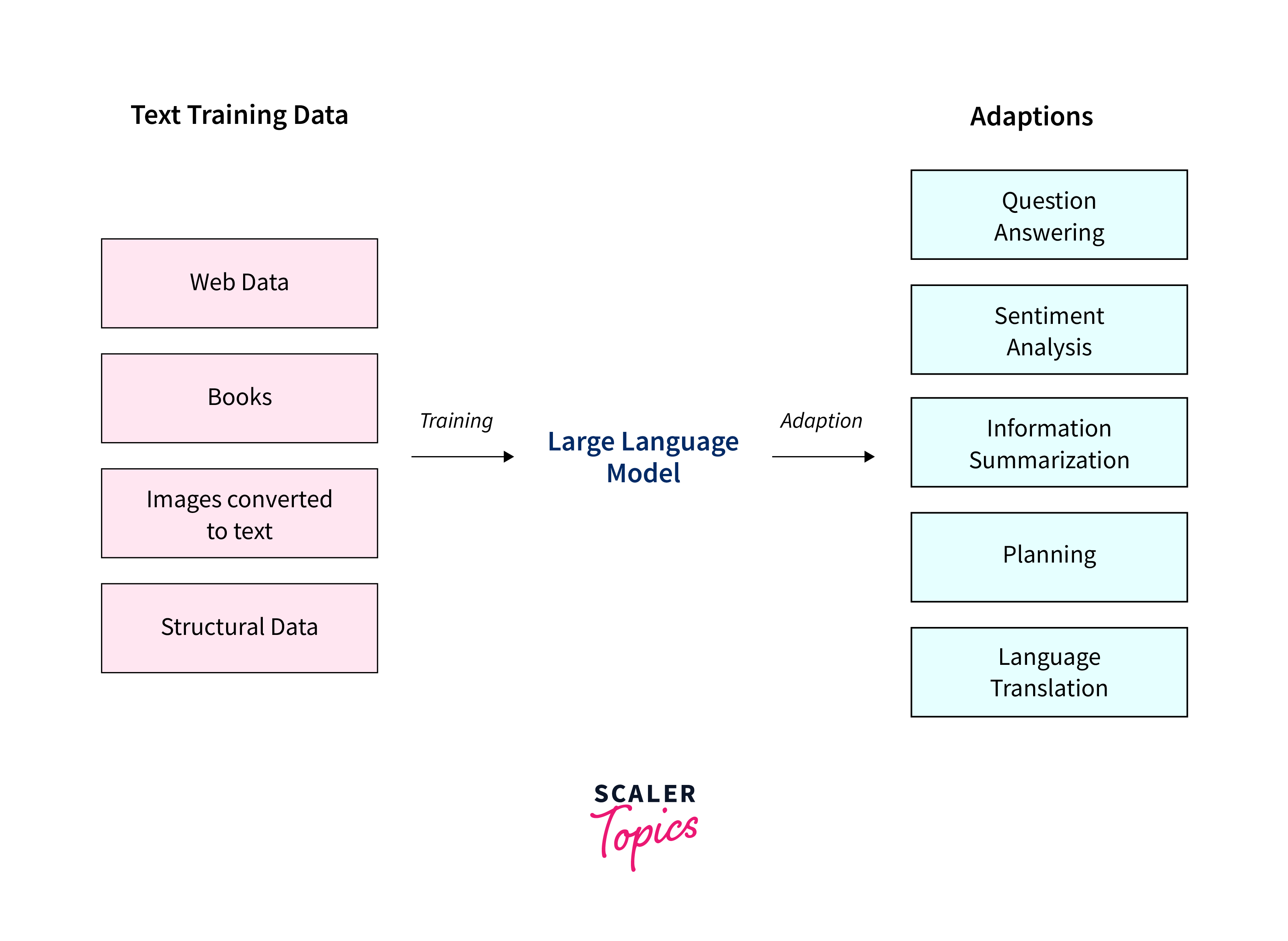

The landscape of artificial intelligence is constantly evolving, and Large Language Models (LLMs) have emerged as transformative tools within it. However, their prominence has given rise to several myths and misconceptions. In this blog, we shed light on the actual capabilities and limitations of LLMs. From their perceived omniscience to their potential to replace human creativity, we look at the realities of LLMs and their impact on various facets of our lives.

Myths and Reality Around Large Language Models: Debunking Misconceptions

Myth 1: Large Language Models Are Omniscient and Infallible

Reality: The notion that LLMs possess a reservoir of all possible knowledge is far from accurate. While they can provide information on various topics, their knowledge is confined to the data they have been trained on. LLMs lack discernment and true comprehension: they can repeat facts, but they may not fully understand complexities or offer deep insights. They may unintentionally provide inaccurate or misleading information that nevertheless sounds convincing.

It is important to remember that LLMs generate responses based on patterns and associations in the training data rather than having an inherent understanding of the topics they discuss. They do not possess personal experiences, opinions, or the ability to reason like humans do. LLMs are not capable of critical thinking or independent judgment.
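To make "patterns and associations in the training data" concrete, here is a deliberately tiny sketch: a bigram model that only records which word followed which in its training text. Real LLMs are neural networks trained on vastly more data and are far more capable, so this is an illustration of the principle, not a description of how any actual LLM is built. The corpus and the function names `train_bigram` and `generate` are made up for this example.

```python
import random
from collections import defaultdict

def train_bigram(text):
    """Record, for each word, the words that followed it in the training text."""
    words = text.split()
    following = defaultdict(list)
    for current, nxt in zip(words, words[1:]):
        following[current].append(nxt)
    return following

def generate(following, start, length=8, seed=0):
    """Produce text by repeatedly sampling a word that followed the last one."""
    rng = random.Random(seed)
    out = [start]
    for _ in range(length - 1):
        candidates = following.get(out[-1])
        if not candidates:
            # The model has never seen this word, so it has nothing to say.
            break
        out.append(rng.choice(candidates))
    return " ".join(out)

# Hypothetical toy corpus, standing in for "training data".
corpus = "the cat sat on the mat . the dog sat on the rug ."
model = train_bigram(corpus)

print(generate(model, "the"))    # fluent-looking, but only recombines seen pairs
print(generate(model, "piano"))  # unseen word: the output is just "piano"
```

Every adjacent word pair in the generated text already appears somewhere in the corpus. The output can look fluent, yet the model knows nothing beyond what it was trained on.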

Myth 2: Large Language Models Lack Context and Common Sense

Reality: LLMs have achieved remarkable context-awareness, but that is not enough to guarantee flawless comprehension. They rely heavily on data patterns, so they can be inaccurate in complex conversations. Common sense, a trait inherent to humans, is still out of reach for LLMs. While they can provide logical-sounding answers, they lack a genuine understanding of the world and may produce absurd responses.

LLMs excel at understanding and generating text within a specific context. Still, their understanding is limited to what they have been trained on. They can struggle with nuanced or ambiguous language and may misinterpret or provide irrelevant responses. Additionally, LLMs lack background knowledge and real-world experiences that humans possess, which can lead to contextually inappropriate or illogical answers.

Myth 3: Large Language Models Pose No Bias or Ethical Concerns

Reality: Contrary to the myth, LLMs do inherit biases from the training data and can generate biased language, stereotypes, and discriminatory content. This raises significant ethical challenges, especially when LLMs are used in sensitive domains such as law, healthcare, or social interactions.

Mitigating these biases requires ongoing efforts in data curation and model fine-tuning. It is important to recognize that biases are not inherent to LLMs but rather a reflection of the biases in the data they are trained on. Therefore, addressing bias in LLMs involves addressing bias in the training data and implementing measures to reduce bias during model training and deployment.

Efforts are underway to improve the fairness and inclusivity of LLMs. Researchers and developers are actively working on techniques such as debiasing methods, diverse dataset collection, and inclusive fine-tuning to minimize biases and promote the ethical use of LLMs.
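As a rough illustration of what a first data-curation check might look like, the sketch below counts how often gendered pronouns appear in sentences that mention a given occupation. It is a simplified assumption of how such an audit could start, not an established debiasing method. The sample sentences and the function name `pronoun_counts` are hypothetical.

```python
from collections import Counter

def pronoun_counts(sentences, occupation):
    """Count 'he' and 'she' in sentences that mention the given occupation."""
    counts = Counter()
    for sentence in sentences:
        words = sentence.lower().replace(".", "").split()
        if occupation in words:
            for word in words:
                if word in ("he", "she"):
                    counts[word] += 1
    return counts

# Hypothetical sample standing in for a slice of training data.
sample = [
    "The nurse said she would help.",
    "The engineer said he was busy.",
    "The engineer said he fixed it.",
    "The engineer said she fixed it.",
]

print(pronoun_counts(sample, "engineer"))
print(pronoun_counts(sample, "nurse"))
```

A skew in counts like these is one signal that a model trained on the data may reproduce the same association. Real audits use much larger samples and more careful linguistic analysis.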

Myth 4: Large Language Models Will Replace Human Creativity

Reality: The assumption that LLMs are meant to replace human creativity is a misconception. LLMs are tools designed to assist and augment human creativity rather than replace it.

While LLMs can generate text and perform various tasks, they cannot truly understand and replicate human creativity. Human creativity is a complex process that involves imagination, emotion, intuition, and personal experiences, which are not easily replicated by machines.

LLMs can be valuable tools for inspiration, generating ideas, and assisting in creative tasks. They can provide suggestions, help with brainstorming, and offer alternative perspectives. However, the final creative output still relies on human interpretation, judgment, and originality.

Myth 5: Large Language Models Are Easy to Deploy and Use

Reality: Deploying and using Large Language Models (LLMs) is more complex than some may assume. While LLMs have shown remarkable capabilities, there are several challenges and considerations.

Firstly, deploying LLMs requires substantial computational resources and infrastructure. Training and running LLMs can be computationally intensive, requiring high-performance hardware and significant memory capacity. Organizations and individuals must have the necessary resources to handle the computational demands of LLM deployment.
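A back-of-the-envelope calculation shows why memory capacity matters. The sketch below estimates the memory needed just to hold a model's weights, as parameter count times bytes per parameter. The 7-billion-parameter size is a hypothetical example, and the function name `weight_memory_gb` is made up for illustration. Activations, caches, and (for training) optimizer state add more on top of this figure.

```python
# Bytes used to store one parameter in common numeric formats.
BYTES_PER_PARAM = {"float32": 4, "float16": 2, "int8": 1}

def weight_memory_gb(num_params, dtype):
    """Estimate memory for model weights only, in gigabytes (10**9 bytes)."""
    return num_params * BYTES_PER_PARAM[dtype] / 1e9

# Hypothetical model size chosen for illustration.
num_params = 7_000_000_000

for dtype in BYTES_PER_PARAM:
    print(f"{dtype}: {weight_memory_gb(num_params, dtype):.0f} GB")
```

Under these assumptions, the weights alone take 28 GB in float32 and 14 GB in float16, which already exceeds the memory of many consumer graphics cards.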

Secondly, fine-tuning LLMs for specific tasks or domains can be complex. Fine-tuning involves training the model on specific data and optimizing it for a particular application. This process requires expertise in machine learning, data curation, and model evaluation to achieve optimal performance.

Additionally, using LLMs effectively requires careful consideration of ethical and bias-related concerns. LLMs can inherit biases in the training data, leading to biased outputs. Addressing and mitigating these biases requires ongoing efforts in data curation, bias detection, and algorithmic fairness.

Myth 6: Large Language Models Will Solve All Problems

Reality: The notion that Large Language Models (LLMs) will serve as a solution to all problems is an overstatement. While LLMs have demonstrated impressive capabilities, it is important to understand their limitations.

LLMs excel at tasks that can be approached through pattern recognition and statistical inference. However, they may struggle with complex problems requiring deep contextual understanding, reasoning, and real-world knowledge.

The outputs generated by LLMs rely heavily on the quality and diversity of the training data they are exposed to. Incomplete or incorrect training data can result in poor outputs. It is crucial to acknowledge that LLMs are tools that require human guidance and interpretation. They cannot think independently or exercise critical judgment. Human oversight remains essential to validate their outputs and ensure the responsible and ethical use of LLMs.

Myth 7: Large Language Models Will Eliminate Human Jobs

Reality: The belief that Large Language Models (LLMs) will completely eliminate human jobs is an exaggeration. While LLMs have the potential to automate certain tasks and improve efficiency, they cannot replace the unique skills and capabilities that humans possess.

LLMs are proficient at processing and generating text. Still, they cannot fully understand context, emotions, and human experiences. They excel in tasks involving language processing, such as generating summaries, answering questions, or writing code. However, jobs that require creativity, critical thinking, empathy, and complex decision-making are beyond the capabilities of LLMs. Human jobs that involve innovation, problem-solving, interpersonal skills, and adaptability are unlikely to be replaced by LLMs.

Instead of eliminating jobs, LLMs have the potential to augment human work by assisting in repetitive or time-consuming tasks, freeing up human resources to focus on higher-level responsibilities. The collaboration between LLMs and human workers can lead to increased productivity and new opportunities for growth and development.

Conclusion

- In the dynamic world of AI, myths and reality around Large Language Models often intertwine, but separating fact from fiction is essential.

- Debunking these myths is crucial for fostering realistic expectations and responsible utilization of these models.

- As we move forward, understanding LLMs' true capabilities and limitations will allow us to harness their potential while also upholding human creativity, ethics, and expertise.

- A harmonious integration of AI and human ingenuity is the key to unlocking a future where technology truly enhances our lives.