Hands-on with BERT in NLP

Overview

BERT is a model pre-trained on a large corpus of unlabeled text. It uses a transformer architecture to generate contextualized representations of the words in a sentence, so that each word's representation reflects the context in which it is used. This makes BERT particularly useful for natural language processing tasks such as sentiment analysis, named entity recognition (NER), and question answering.
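Pre-training on unlabeled text works because BERT creates its own training labels: it hides some input tokens and learns to predict them (masked language modeling). The following is a simplified, standard-library-only sketch of that corruption step. It assumes each token is selected independently with 15% probability; a selected token is replaced by `[MASK]` 80% of the time, by a random token 10% of the time, and left unchanged 10% of the time, following the proportions described for BERT. The function name `mask_tokens` and the toy sentence are illustrative assumptions, not part of any library.

```python
import random
from typing import List, Tuple

MASK = "[MASK]"
SPECIAL_TOKENS = {"[CLS]", "[SEP]"}  # never selected for masking


def mask_tokens(tokens: List[str], vocab: List[str], rng: random.Random,
                mask_prob: float = 0.15) -> Tuple[List[str], List[str]]:
    """Simplified BERT-style masking; returns (corrupted input, targets).

    Targets hold the original token at selected positions and "" elsewhere
    (positions with "" would be ignored by the training loss).
    """
    inputs: List[str] = []
    targets: List[str] = []
    for tok in tokens:
        # Keep special tokens and tokens that were not selected as they are.
        if tok in SPECIAL_TOKENS or rng.random() >= mask_prob:
            inputs.append(tok)
            targets.append("")
            continue
        r = rng.random()
        if r < 0.8:
            inputs.append(MASK)               # 80%: replace with [MASK]
        elif r < 0.9:
            inputs.append(rng.choice(vocab))  # 10%: replace with a random token
        else:
            inputs.append(tok)                # 10%: keep the original token
        targets.append(tok)  # the model is trained to recover the original here
    return inputs, targets


rng = random.Random(0)  # fixed seed so the run is repeatable
sentence = ["[CLS]", "the", "cat", "sat", "on", "the", "mat", "[SEP]"]
# mask_prob is raised to 0.5 only so this short demo is likely to mask something.
corrupted, targets = mask_tokens(sentence, vocab=sentence[1:-1], rng=rng, mask_prob=0.5)
print(corrupted)
print(targets)
```

Because the targets come from the text itself, any large collection of raw sentences can serve as training data.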

Pre-requisites

You will need to have the following prerequisites:

- Basic understanding of machine learning concepts and natural language processing (NLP).

- Basic understanding of TensorFlow and how to use it to build and train deep learning models.

- Familiarity with BERT and how it works.

- A good understanding of English grammar, including parts of speech (relevant to the POS tagging task).

- Access to a machine with a GPU, since BERT is a computationally intensive model that needs a powerful machine to train efficiently.

- A dataset for training and evaluating the models, either a publicly available dataset or one you have compiled yourself.

- A good understanding of how to preprocess and prepare text data for a machine learning model.

- Basic programming skills, particularly in Python.

It is also helpful to have some experience working with other NLP tasks, such as language translation or named entity recognition, as this can give you a better understanding of the concepts and techniques commonly used in NLP.

Introduction

BERT (Bidirectional Encoder Representations from Transformers) is a state-of-the-art pre-trained natural language processing (NLP) model developed by Google. It is designed to pre-train deep bidirectional representations from unlabeled text by jointly conditioning on both the left and the right context in all layers. BERT has achieved impressive results on various NLP tasks, such as question answering, natural language inference, and named entity recognition. One of its key advantages is that it can relate words anywhere within its input window (up to 512 tokens for the original models), which makes it well suited to tasks that require an understanding of context and of the relationships between words in a sentence or paragraph. BERT has been implemented in various NLP frameworks and is widely used by researchers and practitioners in the field.
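To make "conditioning on both the left and the right context" concrete, here is a minimal, framework-free sketch of single-head scaled dot-product self-attention, the operation inside each transformer layer. It is a simplification under stated assumptions: toy 2-dimensional vectors, the inputs used directly as queries, keys, and values (real BERT layers apply learned projections, use multiple heads, and stack 12 or 24 layers), and hypothetical function names.

```python
import math
from typing import List

Vector = List[float]


def softmax(scores: Vector) -> Vector:
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(xs: List[Vector]) -> List[Vector]:
    """Single-head scaled dot-product self-attention with Q = K = V = xs.

    There is no causal mask: every position attends to positions on its left
    AND on its right, which is what makes the representations bidirectional.
    """
    d = len(xs[0])
    outputs: List[Vector] = []
    for q in xs:
        # Score this query against every key in the sequence.
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in xs]
        weights = softmax(scores)
        # Output = attention-weighted average of all value vectors.
        outputs.append([sum(w * v[j] for w, v in zip(weights, xs)) for j in range(d)])
    return outputs


# The same middle "word" vector placed next to two different right-hand neighbours.
left, word = [1.0, 0.0], [0.5, 0.5]
ctx_a = self_attention([left, word, [0.0, 1.0]])
ctx_b = self_attention([left, word, [0.0, 3.0]])
print([round(x, 3) for x in ctx_a[1]])
print([round(x, 3) for x in ctx_b[1]])
```

The two printed vectors differ even though the middle word's own embedding is identical: its contextual representation depends on the token to its right, and in the same way on the token to its left.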

Setup

To implement NLP tasks with BERT (Bidirectional Encoder Representations from Transformers) in TensorFlow using the Hugging Face transformers library, you will need the following:

- A working installation of TensorFlow, the Hugging Face transformers library, and the necessary dependencies (e.g., NumPy, Pandas). You can install TensorFlow and the dependencies with `pip install tensorflow numpy pandas`.

- A pre-trained BERT model: you can use one of the pre-trained BERT models provided by the Hugging Face transformers library, which you can install with `pip install transformers`.

Once you have these prerequisites, you can load pre-trained BERT models and their tokenizers in TensorFlow through the Hugging Face transformers library and fine-tune them for NLP tasks. The Hugging Face transformers documentation covers each of these steps in more detail.

Implementation Using BERT

Using the pre-trained BERT model, we will implement a few NLP tasks: masked word prediction, part-of-speech (POS) tagging, and, finally, intent classification.

Imports

Import the pre-trained model classes required for each task from the transformers library.

Tokenization

The tokenizer prepares text for the pre-trained BERT models, including BertForMaskedLM. It splits text into tokens, converts the tokens to BERT vocabulary IDs, adds special tokens such as [CLS] and [SEP], and applies model-specific padding, among other things.
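The tokenizer steps listed above can be sketched without any library. The following is a minimal, hypothetical re-implementation that assumes a tiny made-up vocabulary and only lowercases and splits on whitespace (the real tokenizer also splits punctuation and normalizes accents). It splits each word with WordPiece-style greedy longest-match-first lookup, where continuation pieces carry the `##` prefix as in BERT's vocabulary, adds `[CLS]` and `[SEP]`, converts tokens to IDs, and pads to a fixed length with an attention mask. In practice, `BertTokenizer.from_pretrained("bert-base-uncased")` from transformers performs these steps with BERT's real 30,522-token vocabulary.

```python
from typing import Dict, List, Tuple

# Hypothetical toy vocabulary (real BERT vocabularies have ~30k entries).
VOCAB: Dict[str, int] = {
    "[PAD]": 0, "[UNK]": 1, "[CLS]": 2, "[SEP]": 3, "[MASK]": 4,
    "the": 5, "children": 6, "are": 7, "play": 8, "##ing": 9, "##ed": 10,
}


def wordpiece(word: str, vocab: Dict[str, int]) -> List[str]:
    """Split one word into sub-word pieces, greedy longest-match-first."""
    pieces: List[str] = []
    start = 0
    while start < len(word):
        end = len(word)
        match = None
        # Try the longest remaining substring first, then shrink it from the right.
        while start < end:
            piece = word[start:end]
            if start > 0:
                piece = "##" + piece  # marks a continuation of the previous piece
            if piece in vocab:
                match = piece
                break
            end -= 1
        if match is None:
            return ["[UNK]"]  # no valid split: the whole word becomes [UNK]
        pieces.append(match)
        start = end
    return pieces


def encode(text: str, vocab: Dict[str, int], max_len: int) -> Tuple[List[int], List[int]]:
    """Tokenize, add [CLS]/[SEP], map tokens to IDs, and pad to max_len."""
    pieces = [p for word in text.lower().split() for p in wordpiece(word, vocab)]
    tokens = ["[CLS]"] + pieces[: max_len - 2] + ["[SEP]"]  # truncate, keep specials
    ids = [vocab[t] for t in tokens]
    attention_mask = [1] * len(ids)  # 1 = real token, 0 = padding
    padding = max_len - len(ids)
    return ids + [vocab["[PAD]"]] * padding, attention_mask + [0] * padding


ids, attention_mask = encode("The children are playing", VOCAB, max_len=8)
print(ids)
print(attention_mask)
```

Here "playing" is not in the toy vocabulary, so it is split into `play` and `##ing`; the attention mask tells the model to ignore the trailing padding position.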

BERT for Masked Word Prediction

Masked word prediction is an NLP task in which the goal is to predict a word that has been masked, i.e. replaced with a placeholder token such as [MASK], in a sentence or paragraph. It is the objective BERT is pre-trained on (masked language modeling), and it is often used to probe the language understanding capabilities of pre-trained language models. To make an accurate prediction, the model must consider the context of the masked word and the relationships between the words in the sentence.
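Concretely, a masked language model outputs one score (logit) per vocabulary entry at every input position, and the prediction for the masked word is read off at the `[MASK]` position. The sketch below shows only that decoding step, assuming a tiny hypothetical vocabulary and made-up logits in place of a real model's output: locate `[MASK]`, apply softmax to that position's logits, and return the top-k candidate tokens. With transformers, `pipeline("fill-mask", model="bert-base-uncased")` wraps the same idea around a real model; `top_k_for_mask` is a hypothetical helper.

```python
import math
from typing import List, Tuple


def top_k_for_mask(tokens: List[str], logits: List[List[float]], vocab: List[str],
                   k: int = 3) -> List[Tuple[str, float]]:
    """Return the k most likely tokens (with probabilities) for the [MASK] slot.

    tokens: the tokenized input, containing exactly one "[MASK]".
    logits: one row of vocabulary scores per input position (model output).
    vocab:  vocab[i] is the token that column i of each row scores.
    """
    pos = tokens.index("[MASK]")  # only this position's prediction matters
    row = logits[pos]
    m = max(row)
    exps = [math.exp(x - m) for x in row]  # numerically stable softmax
    total = sum(exps)
    probs = [e / total for e in exps]
    ranked = sorted(range(len(vocab)), key=lambda i: probs[i], reverse=True)
    return [(vocab[i], probs[i]) for i in ranked[:k]]


# Hypothetical vocabulary and made-up logits standing in for a model's output.
vocab = ["paris", "london", "rome", "the", "."]
tokens = ["[CLS]", "the", "capital", "of", "france", "is", "[MASK]", ".", "[SEP]"]
logits = [[0.0] * len(vocab) for _ in tokens]
logits[tokens.index("[MASK]")] = [5.0, 2.0, 1.5, -1.0, 0.0]

for token, prob in top_k_for_mask(tokens, logits, vocab):
    print(f"{token}: {prob:.3f}")
```

Only the row at the masked position is used; the scores at the other positions are ignored for this task.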


POS Tagging with BERT

POS tags identify the grammatical function that a token performs in a sentence, so POS tagging means assigning a label to each token. We will use the universal tagset, which consists of 12 tags, most of which you probably already know (e.g., nouns, verbs, adjectives).

This is a token classification task: a BERT model with a token classification head must be further fine-tuned on a POS-labeled dataset before it can predict a tag for each token in the input text, producing output such as ['DET', 'VERB', 'DET', 'NOUN', 'ADP', 'NOUN', 'ADP', 'VERB', 'NOUN', '.'].
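Because BERT works on sub-word tokens while POS tags belong to whole words, fine-tuning for token classification needs a label for every sub-word. A common convention, used in the Hugging Face token-classification examples, labels only the first sub-word of each word and gives continuation pieces and special tokens the value -100 so the loss ignores them. The sketch below assumes a `word_ids` list of the kind a fast tokenizer's `word_ids()` method returns (written by hand here) and the 12 universal tags; the helper names `align_labels` and `decode_tags` are hypothetical.

```python
from typing import List, Optional

# The 12 tags of the universal tagset.
UNIVERSAL_TAGS = ["ADJ", "ADP", "ADV", "CONJ", "DET", "NOUN",
                  "NUM", "PRT", "PRON", "VERB", ".", "X"]
TAG2ID = {tag: i for i, tag in enumerate(UNIVERSAL_TAGS)}
IGNORE = -100  # label value excluded from the loss


def align_labels(word_tags: List[str], word_ids: List[Optional[int]]) -> List[int]:
    """Give each sub-word token a label ID; only a word's first piece is labeled."""
    labels: List[int] = []
    previous: Optional[int] = None
    for wid in word_ids:
        if wid is None:              # [CLS], [SEP], padding
            labels.append(IGNORE)
        elif wid != previous:        # first sub-word of a new word
            labels.append(TAG2ID[word_tags[wid]])
        else:                        # continuation piece such as "##ing"
            labels.append(IGNORE)
        previous = wid
    return labels


def decode_tags(pred_ids: List[int], word_ids: List[Optional[int]]) -> List[str]:
    """Map per-token predictions back to one tag per word (first sub-word wins)."""
    tags: List[str] = []
    previous: Optional[int] = None
    for pid, wid in zip(pred_ids, word_ids):
        if wid is not None and wid != previous:
            tags.append(UNIVERSAL_TAGS[pid])
        previous = wid
    return tags


# "The children are playing ." -> [CLS] the children are play ##ing . [SEP]
word_tags = ["DET", "NOUN", "VERB", "VERB", "."]
word_ids = [None, 0, 1, 2, 3, 3, 4, None]
labels = align_labels(word_tags, word_ids)
print(labels)
```

At inference time, `decode_tags` reverses the alignment so the predictions come back as one tag per original word.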


Intent Classification with BERT

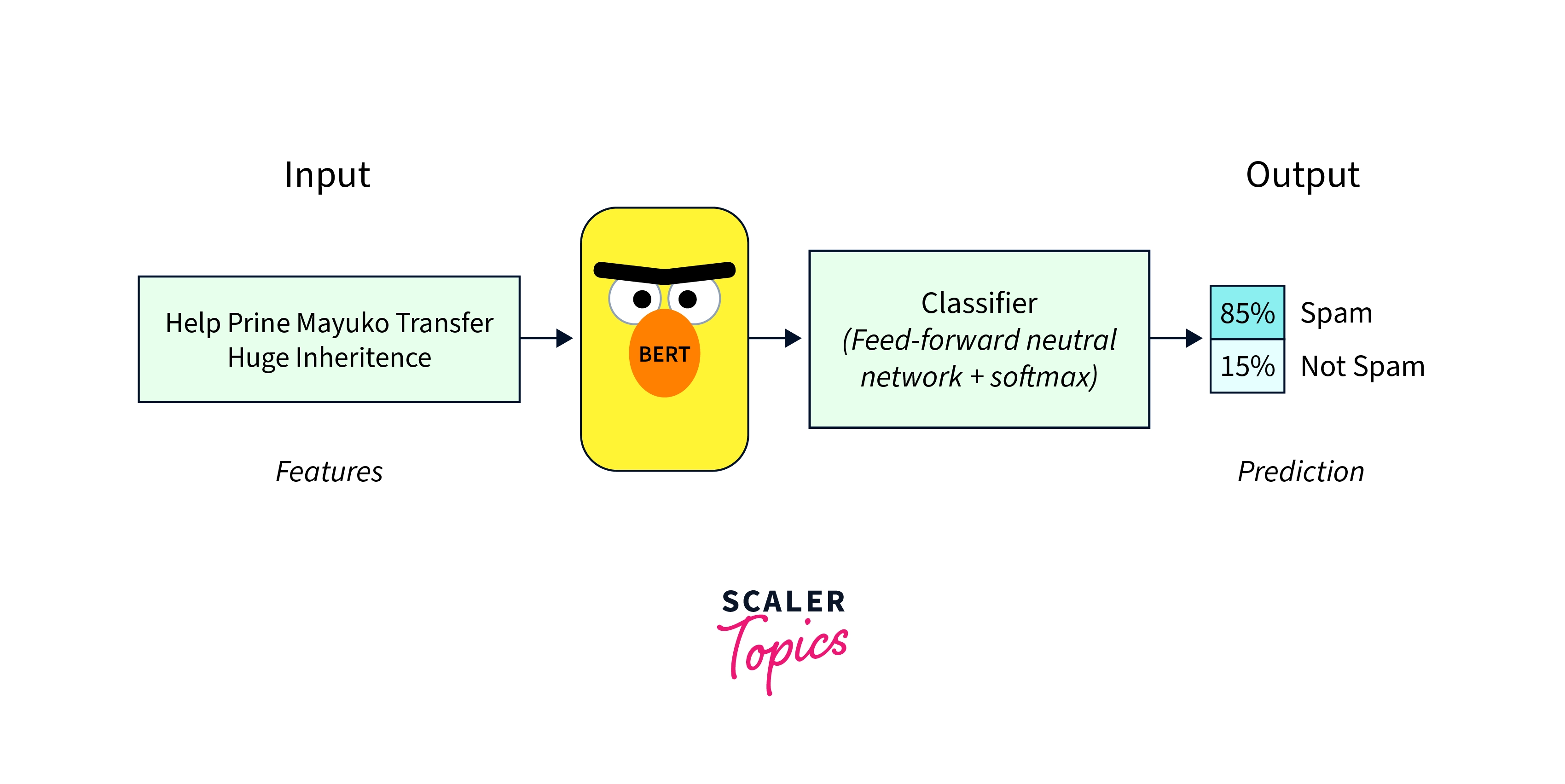

Intent classification is the task of identifying the intention or purpose behind a given input text. It is commonly used in natural language processing (NLP) applications such as chatbots and voice assistants. One way to perform intent classification is to fine-tune BERT as a sequence classifier with one output class per intent.
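In the usual sequence-classification setup, a single dense layer sits on top of BERT's pooled `[CLS]` representation and produces one logit per intent; softmax turns the logits into probabilities, and the arg-max is the predicted intent index. The sketch below shows only that head, assuming a made-up 4-dimensional `[CLS]` vector and hand-picked weights in place of BERT-base's 768-dimensional output and trained parameters; `classify_intent` is a hypothetical name.

```python
import math
from typing import List, Tuple


def classify_intent(cls_vec: List[float], weights: List[List[float]], bias: List[float],
                    intents: List[str]) -> Tuple[int, str, List[float]]:
    """Dense layer + softmax over intents, applied to a [CLS] representation."""
    # One logit per intent: logit_j = w_j . h + b_j
    logits = [sum(w * h for w, h in zip(row, cls_vec)) + b
              for row, b in zip(weights, bias)]
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]  # numerically stable softmax
    total = sum(exps)
    probs = [e / total for e in exps]
    predicted_intent = max(range(len(probs)), key=lambda j: probs[j])
    return predicted_intent, intents[predicted_intent], probs


intents = ["book_restaurant", "cancel_restaurant"]
cls_vec = [0.2, -0.1, 0.7, 0.4]      # stand-in for BERT's pooled [CLS] output
weights = [[0.5, 0.1, 0.9, 0.3],     # hypothetical row for book_restaurant
           [-0.4, 0.2, -0.6, 0.1]]   # hypothetical row for cancel_restaurant
bias = [0.0, 0.0]

idx, name, probs = classify_intent(cls_vec, weights, bias, intents)
print(idx, name, [round(p, 3) for p in probs])
```

The order of the `intents` list defines what each output index means, so the same list must be used during fine-tuning and prediction.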


For example, if your list of intents is [book_restaurant, cancel_restaurant], a predicted_intent value of 0 means the model predicts the intent book_restaurant, and a value of 1 means it predicts cancel_restaurant.

Conclusion

- One of the key strengths of BERT is that the same pre-trained model can be fine-tuned to handle a wide range of NLP tasks with high accuracy, including question answering, natural language inference, and text classification.

- Additionally, because BERT is pre-trained on a large corpus, it tends to generalize well to new, unseen data, which makes it a useful tool for a wide range of NLP applications.

- Overall, the BERT model has demonstrated significant promise in the field of NLP and has been widely adopted by researchers and practitioners in industry and academia.