Introduction to Stanford NLP

Overview

The NLP is not only restricted to the English language and its processing. The Stanford NLP is a Python library that helps us to train our NLP model in numerous non-English languages like Chinese, Hindi, Japanese, etc. We can define the StanfordNLP as the framework having collections of numerous pre-trained models (state-of-the-art models). These models are developed using another Python library, i.e., PyTorch. The Stanford NLP can deal with and work with numerous languages, which is why the Stanford NLP library is popular. This library has many pre-trained neural network models that support more than 50 human languages featuring 73 treebanks.

Introduction

A question always comes to a learner's mind is whether we can use NLP for another language than English or train an NLP model for other languages as well. The answer is yes, and the NLP is not restricted to the English language and its processing.

As we all know, all languages have rules (grammar, syntax, semantics, etc.) and various linguistic nuances. But there are only a small number of datasets available for other languages as we have for the English language. So, to solve this problem, we have the Stanford NLP.

StanfordNLP is a Python natural language analysis package. It contains tools that can be used in a pipeline to convert a string containing human language text into lists of sentences and words, to generate base forms of those words, their parts of speech, and morphological features, and to give a syntactic structure dependency parse, which is designed to be parallel among more than 70 languages, using the Universal Dependencies formalism. In addition, it can call the CoreNLP Java package and inherits additional functionality from there, such as constituency parsing, coreference resolution, and linguistic pattern matching.

Let us learn about Stanford NLP in detail in the next section.

What is StanfordNLP and Why Should You Use it?

The Stanford NLP is a Python library that helps us to train our NLP model in numerous English and non-English languages like Chinese, Hindi, Japanese, etc. In this article, we will see a tutorial regarding using the Hindi language using the Stanford NLP.

The Stanford NLP can deal with and work with numerous languages, which is why the Stanford NLP library is popular. For example, the Stanford team has developed a software package in CoNLL 2018 that works on Universal Dependency Parsing. The Stanford team uses the Python interface for this software, so the Stanford NLP combines the Python interface and the Stanford team's software package.

Let us see the various terms we used in the previous section individually.

- CoNLL- It is the annual Natural Language Learning conference first held in 2018, and the team represented all the global level research institutes that worked in the field of NLP. They try to solve the problems related to NLP. One of the most recent tasks that they solved was to parse the unstructured data from multiple languages into some useful annotations using the Universal Dependencies.

- Universal Dependency- UD is a framework whose main work is to maintain consistency in annotations. Therefore, it does not consider the parsing language and generates the annotations from the parsed input.

We can define the StanfordNLP as the framework with collections of numerous pre-trained (state-of-the-art models) models. These models are developed using another Python library, i.e., PyTorch.

So far, we have discussed a lot about Stanford NLP. Let us now see why we should use it and what makes it so popular.

- We do not need a lot of setup before using this library as it is implemented using Native Python.

- It has many pre-trained neural network models supporting over 50 human languages featuring 73 treebanks.

- It is also a sound library having a stable Python interface that the CoreNLP maintains.

- It also provides quite a stable neural network pipeline that provides us features like:

- POS Tagging (Parts of Speech Tagging)

- Morphological Feature Tagging

- Dependency Parsing

- Tokenization

- MWT Expansion or Multi-word Token Expansion

- Lemmatization

- Syntax Analysis

- Semantic Analysis

Setting Up StanfordNLP in Python

Let us now learn how we can set up the Stanford NLP. In this tutorial, we will use the Anaconda environment for the demonstration (but you can use any IDE or environment of your choice). Also, you must ensure you are using Python version 3.6.8 or above.

- (Optional) You can create a different environment for this demo. So, for making an environment and activating it. Use the below commands.

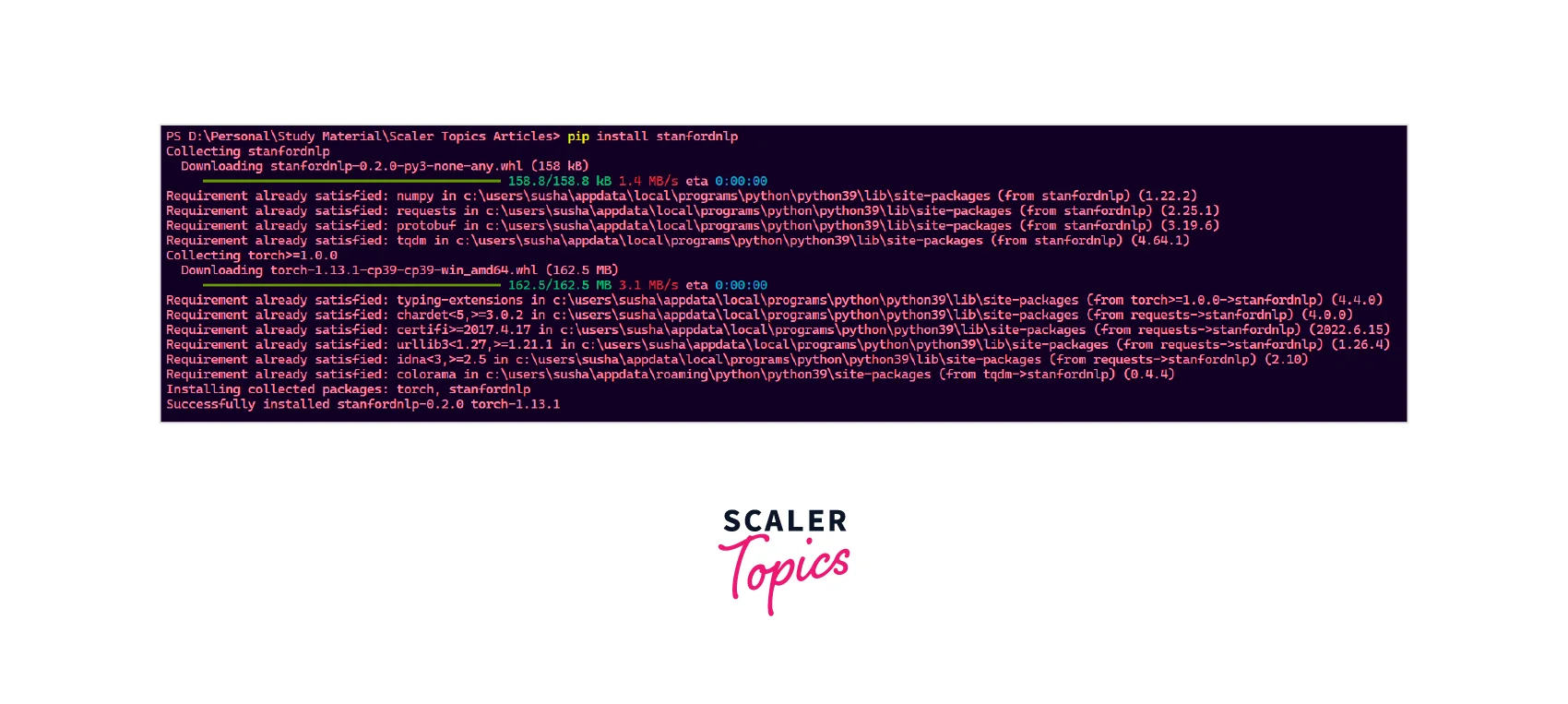

- Install the StanfordNLP library on the local environment. Use the below commands.

Output:

- We need to import the library first to use the various data models, functions, and methods. Use the below commands.

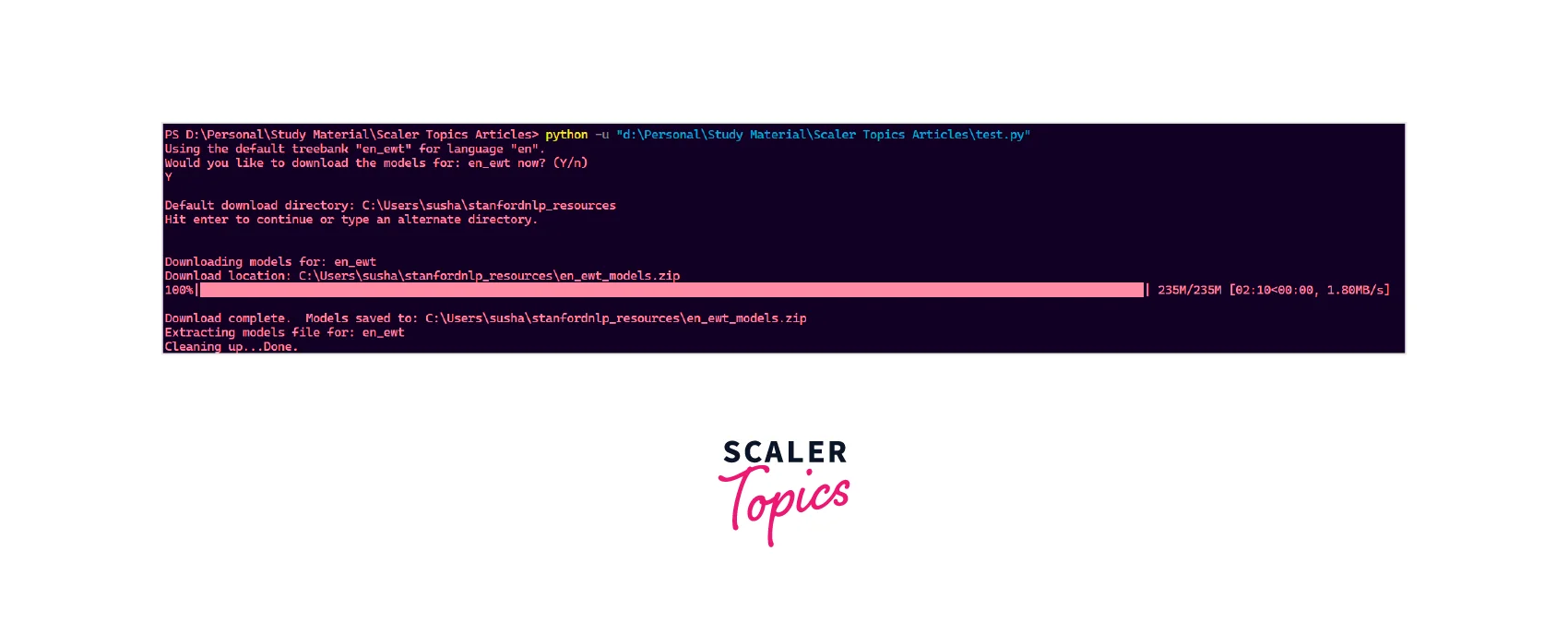

After that, we can download any language model of our choice. For example, if we want to download the English language model, we can use the below commands.

Output:

After downloading the model, we can perform all the pipelining tasks like- Dependency Parsing, Multi-Word Token Expansion, Lemmatisation, Parts of Speech Tagging, Tokenization, etc.

One thing that we should make sure of is that this is a huge library so it will take considerable computational power.

Using StanfordNLP to Perform Basic NLP Tasks

Let us perform some of the basic NLP tasks for more clarity.

- Tokenization

Tokenization is breaking down a piece of text into small units called tokens. A token may be a word, part of a word, or just characters like punctuation. It is one of the most foundational NLP tasks and a difficult one because every language has its grammatical constructs, which are often difficult to write down as rules. We can use the print_tokens() function to print all the tokens. The overall command for the same is:

Output:

The command will provide us with an object containing all the tokens' indexes present in the first sentence.

- Lemmatization

Lemmatization is a method responsible for grouping different inflected forms of words into the root form, having the same meaning. It is similar to stemming. In turn, it gives the stripped word that has some dictionary meaning. The Morphological analysis would require the extraction of the correct lemma of each word.

For example, Lemmatization identifies the base form of ‘troubled’ to ‘trouble’, denoting some meaning. Lemmatization means generating lemmas out of the provided text. We can use the code to extract all the lemmas out of the text.

Output:

- Parts of Speech (PoS) Tagging

The Parts of Speech tagging is one of the prime stages of the entire NLP process that deals with Word Sense Disambiguation and it helps in achieving a high level of accuracy of the meaning of the word. To perform the POS tagging, we need to use a dictionary of the language that we are working on. The dictionary contains all the POS tags and their meaning. Let us take an example for more clarity.

Output:

- Dependency Extraction

Similarly, if we want to print out the dependencies, we can use the code.

Output:

The above command will print out all the dependency relations of the first sentence.

Implementing StanfordNLP on the Hindi Language

Just as we have seen the working of the English model, we can use the Hindi model. So let us learn how to set up the Stanford NLP for Hindi.

- (Optional) You can create a different environment for this demo. So, for making an environment and activating it. Use the below commands.

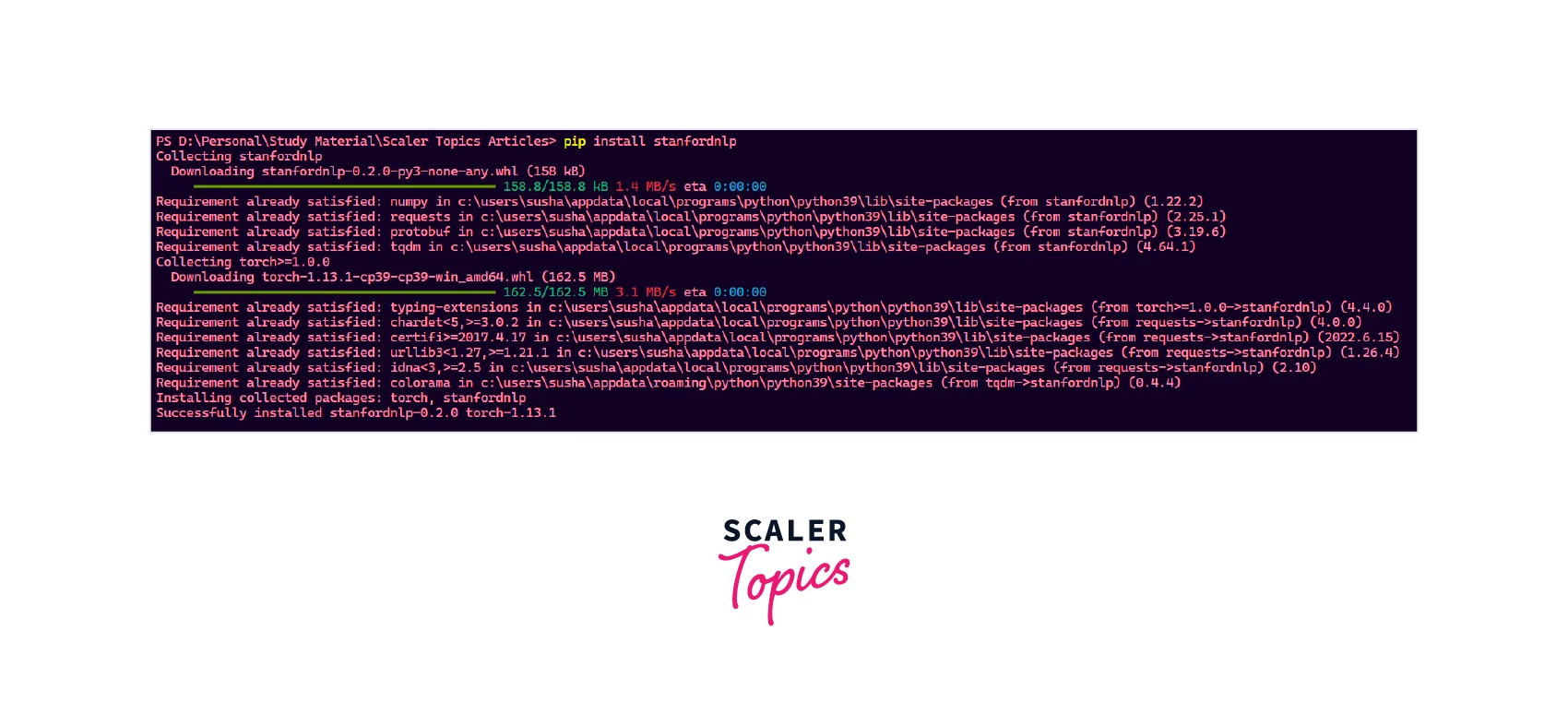

- Install the StanfordNLP library on the local environment. Use the below commands.

Output:

- We need to import the library first to use the various data models, functions, and methods. Use the below commands.

After that, we can download any language model of our choice.

First, we need to download the Hindi language model using the code for using the Hindi model.

Output:

The above code will take some time as it is a huge model. We can also use the numerous functions already discussed in this model. We can use functions like nlp(), print_dependencies(), etc.

Using CoreNLP's API for Text Analytics

As previously discussed, the CoreNLP is a very accurate and precisely created, time-tested NLP tool kit. Also, the Stanford NLP is the official Python interface of the CoreNLP API. So let us now learn how to use it to perform text analytics.

The StanfordNLP library is quite simple to use. We only require two to three lines of code to start using the sophisticated APIs of the CoreNLP.

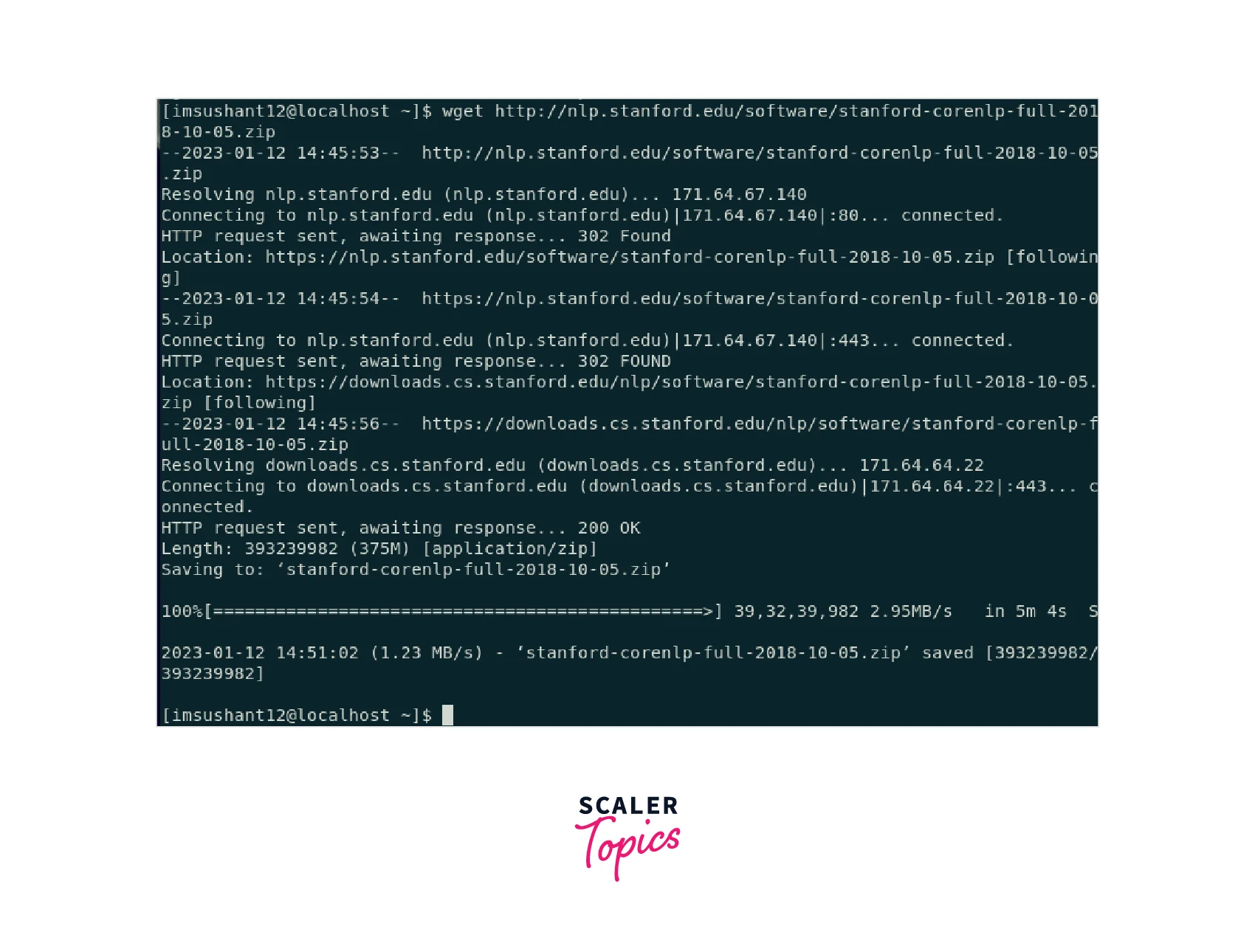

The process starts by first downloading the CoreNLP project package. We can use the command line to download the CoreNLP package. For the Linux shell, we can use the command:

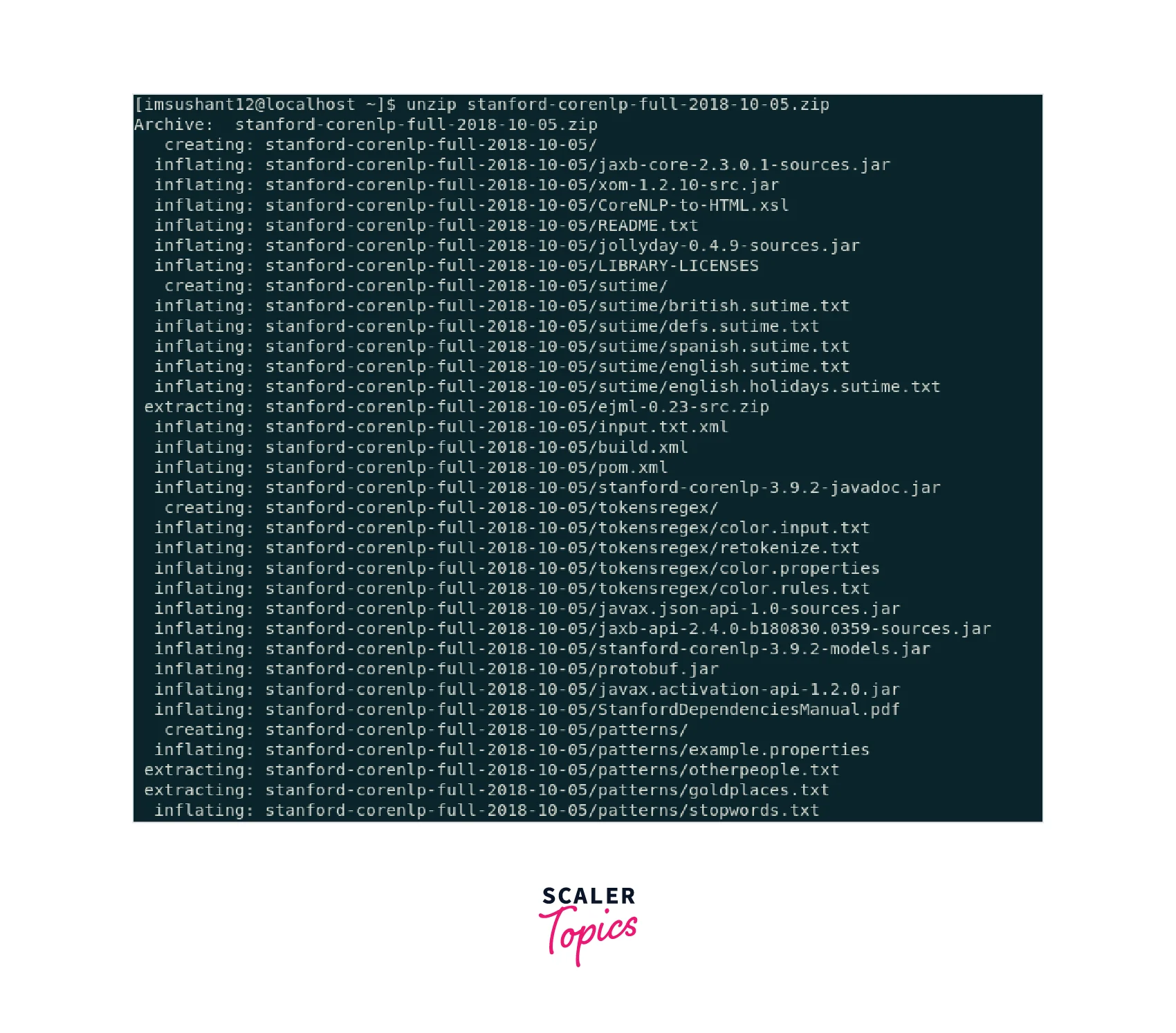

once, we have downloaded the zip file, we need to unzip it. We can manually do this using the GUI tools and software or we can use the below command.

Finally, we have all the setup. We just need to start the CoreNLP server using the below command (make sure you have Java's JDK and JRE installed on your system because the CoreNLP has been somewhat limited to the Java ecosystem until now).

Now after all the setup, we first need to set up the Core NLP client using the below code:

After setting up the server, we can now perform POS tagging and dependency parsing.

Similarly, we can perform entity recognition and co-reference chaining using the various functions of the StanfordNLP library.

Conclusion

- The NLP is not restricted to the English language and its processing. For example, the Stanford NLP is a Python library that helps us to train our NLP model in numerous non-English languages like Chinese, Hindi, Japanese, etc.

- The Stanford NLP can deal and work with numerous languages, which is why the Stanford NLP library is popular. The library has many pre-trained neural network models supporting over 50 human languages featuring 73 treebanks.

- The StanfordNLP framework has collections of numerous pre-trained models (state-of-the-art models). These models are developed using another Python library, i.e., PyTorch.

- The Stanford team has developed a software package in CoNLL 2018 that works on Universal Dependency Parsing. Universal Dependency is a framework whose main work is maintaining consistency in annotations.

- The Stanford team uses the Python interface for this software, so the Stanford NLP combines the Python interface and the Stanford team's software package.