What is ChatGPT?

Overview

The language model known as ChatGPT was created by OpenAI for conversational use cases. It is built on OpenAI's GPT (Generative Pre-trained Transformer) family of models; the original release was fine-tuned from a model in the GPT-3.5 series. That model was further trained on conversational data so that it generates tailored, engaging responses in a chat interface. ChatGPT uses natural language processing (NLP) techniques to interpret and produce human language.

This blog will explore the best ChatGPT alternatives, such as Microsoft Bing, Perplexity AI, Google BardAI, Jasper Chat, Chatsonic, Claude 2, Llama 2, HuggingChat, Pi, GitHub Copilot X, OpenAI Playground, and Quora Poe.

Understanding the Architecture of ChatGPT: Transformer Language Models

ChatGPT is powered by a neural network architecture known as the Transformer. The Transformer architecture has been instrumental in enabling the success of ChatGPT and other large language models.

Transformer Architecture

The Transformer architecture was introduced in the groundbreaking 2017 paper "Attention Is All You Need" by Vaswani et al. It transformed natural language processing (NLP) by overcoming the limitations of earlier models such as recurrent neural networks (RNNs), which must process a sequence one word at a time, and convolutional neural networks (CNNs), which need many stacked layers to relate words that are far apart.

The Transformer architecture is based on self-attention mechanisms, which allow the model to weigh the importance of every other word in a sentence when building the representation of each word. Rather than processing words one at a time, the Transformer considers all positions in a sequence at once, which lets training run in parallel and makes long-range relationships between words easier to learn.
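The self-attention computation can be sketched in a few lines of NumPy. This follows the scaled dot-product formula from Vaswani et al., softmax(QK^T / sqrt(d_k)) V. The random embeddings and the projection matrices `w_q`, `w_k`, and `w_v` are placeholders standing in for learned weights, not values from any real model.

```python
import numpy as np


def softmax(x: np.ndarray, axis: int = -1) -> np.ndarray:
    """Numerically stable softmax along the given axis."""
    shifted = x - x.max(axis=axis, keepdims=True)
    exp = np.exp(shifted)
    return exp / exp.sum(axis=axis, keepdims=True)


def self_attention(x, w_q, w_k, w_v):
    """Scaled dot-product self-attention over one sequence.

    x: (seq_len, d_model) token embeddings.
    w_q, w_k, w_v: (d_model, d_k) projection matrices (learned in a real model).
    Returns the attended outputs and the attention weights.
    """
    q = x @ w_q  # queries: what each token is looking for
    k = x @ w_k  # keys: what each token offers to others
    v = x @ w_v  # values: the content that gets mixed together
    d_k = q.shape[-1]
    scores = q @ k.T / np.sqrt(d_k)     # (seq_len, seq_len) relevance scores
    weights = softmax(scores, axis=-1)  # each row is a probability distribution
    return weights @ v, weights


rng = np.random.default_rng(0)
seq_len, d_model, d_k = 4, 8, 8
x = rng.normal(size=(seq_len, d_model))  # placeholder embeddings for 4 tokens
w_q, w_k, w_v = (rng.normal(size=(d_model, d_k)) for _ in range(3))

output, weights = self_attention(x, w_q, w_k, w_v)
print(output.shape)          # (4, 8)
print(weights.sum(axis=-1))  # every row sums to 1
```

Every token's output is a weighted mix of all value vectors, and the whole sequence is handled in a few matrix multiplications rather than a word-by-word loop, which is the parallelism described above.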

Key Components of the Transformer Architecture

The Transformer architecture consists of several key components, including:

- Encoder: The encoder takes an input sequence, such as a sentence, and processes it through a stack of identical layers. Each layer has two sub-layers: a multi-head self-attention mechanism and a position-wise feed-forward neural network. The self-attention mechanism lets the model attend to different parts of the input sequence, while the feed-forward network applies the same non-linear transformation to each position independently.

- Decoder: The decoder also consists of a stack of identical layers, with an additional sub-layer that performs multi-head attention over the encoder's output. Given the previous words, the decoder is trained to predict the next word in the target sequence. GPT models, including those behind ChatGPT, use a decoder-only variant of this design, which omits the encoder (and the sub-layer that attends to it) and predicts each next word only from the words before it.

- Attention Mechanism: The attention mechanism is the core of the Transformer architecture. It assigns weights to different parts of the input sequence based on their relevance to the word currently being processed, allowing the model to focus on the most important information when making a prediction.

- Positional Encoding: Because attention on its own has no notion of word order, positional encodings are added to the input embeddings to tell the model where each word sits in the sequence.

- Multi-Head Attention: Multi-head attention runs several attention mechanisms in parallel, each operating on a different learned projection of the input sequence. This lets the model capture different types of information at the same time and improves its ability to learn complex relationships.
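Positional encoding and multi-head attention can be sketched together in NumPy. The sinusoidal encoding follows the formula in Vaswani et al., and the causal mask shows how a decoder, including the decoder-only GPT models, is prevented from attending to future words when predicting the next one. The weights are random placeholders, the head count and dimensions are arbitrary illustrative choices, and layer normalization, residual connections, and the feed-forward sub-layer are omitted for brevity.

```python
import numpy as np


def positional_encoding(seq_len: int, d_model: int) -> np.ndarray:
    """Sinusoidal positional encoding (d_model must be even).

    PE[pos, 2i]   = sin(pos / 10000^(2i / d_model))
    PE[pos, 2i+1] = cos(pos / 10000^(2i / d_model))
    """
    positions = np.arange(seq_len)[:, None]   # (seq_len, 1)
    dims = np.arange(0, d_model, 2)[None, :]  # (1, d_model / 2), the "2i" values
    angles = positions / np.power(10000.0, dims / d_model)
    pe = np.zeros((seq_len, d_model))
    pe[:, 0::2] = np.sin(angles)
    pe[:, 1::2] = np.cos(angles)
    return pe


def softmax(x, axis=-1):
    shifted = x - x.max(axis=axis, keepdims=True)
    exp = np.exp(shifted)
    return exp / exp.sum(axis=axis, keepdims=True)


def multi_head_attention(x, w_q, w_k, w_v, w_o, num_heads, causal=True):
    """Multi-head self-attention with an optional causal (look-back-only) mask.

    x: (seq_len, d_model); w_q, w_k, w_v, w_o: (d_model, d_model).
    d_model must be divisible by num_heads.
    """
    seq_len, d_model = x.shape
    d_head = d_model // num_heads

    def split_heads(t):
        # (seq_len, d_model) -> (num_heads, seq_len, d_head)
        return t.reshape(seq_len, num_heads, d_head).transpose(1, 0, 2)

    q, k, v = split_heads(x @ w_q), split_heads(x @ w_k), split_heads(x @ w_v)
    scores = q @ k.transpose(0, 2, 1) / np.sqrt(d_head)  # (heads, seq, seq)
    if causal:
        # Position i may only attend to positions 0..i (no peeking at future words).
        future = np.triu(np.ones((seq_len, seq_len), dtype=bool), k=1)
        scores = np.where(future, -np.inf, scores)
    weights = softmax(scores, axis=-1)
    heads = weights @ v  # (heads, seq, d_head)
    # Concatenate the heads and mix them with the output projection.
    concat = heads.transpose(1, 0, 2).reshape(seq_len, d_model)
    return concat @ w_o, weights


rng = np.random.default_rng(0)
seq_len, d_model, num_heads = 5, 8, 2
embeddings = rng.normal(size=(seq_len, d_model))         # placeholder token embeddings
x = embeddings + positional_encoding(seq_len, d_model)  # inject word-order information
w_q, w_k, w_v, w_o = (rng.normal(size=(d_model, d_model)) * 0.1 for _ in range(4))

out, weights = multi_head_attention(x, w_q, w_k, w_v, w_o, num_heads)
print(out.shape)      # (5, 8)
print(weights.shape)  # (2, 5, 5): one attention pattern per head
```

Each head produces its own attention pattern over the same sequence, and every pattern is zero above the diagonal because of the causal mask.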

Fine-Tuning Process of ChatGPT

Fine-tuning ChatGPT involves preparing a training dataset, loading that dataset into the training code or service, and choosing a fine-tuning method. Training then adjusts the model's weights to improve its accuracy and language processing for the target domain or use case. Several rounds of evaluation and further fine-tuning are often needed before the model performs well on a given task.

Fine-tuning differs from prompt engineering: prompt engineering changes only the instructions given to the model, while fine-tuning trains the model on example prompts paired with the desired responses so that it learns to produce them. Here are the steps to fine-tune ChatGPT for a specific use case.

- Step 1: Define the use case.

- Step 2: Collect and preprocess data.

- Step 3: Prepare data for training.

- Step 4: Fine-tune the model.

- Step 5: Evaluate the model.
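Steps 2 and 3 usually mean cleaning the raw examples, converting them into the format the fine-tuning tool expects, and holding some back for Step 5. The sketch below assumes OpenAI's chat fine-tuning format, a JSONL file in which each line holds a `messages` list of `role`/`content` pairs. The example questions and answers, the system prompt, the file names, and the 80/20 split are hypothetical placeholders.

```python
import json
import os
import random
import tempfile

SYSTEM_PROMPT = "You are a concise, friendly support assistant."  # hypothetical

# Hypothetical raw Q&A pairs for a customer-support use case (Step 1).
raw_examples = [
    {"question": "How do I reset my password?",
     "answer": "Open Settings > Account > Reset password and follow the emailed link."},
    {"question": "Can I change my billing date?",
     "answer": "Yes, under Settings > Billing you can pick a new billing date."},
    {"question": "How do I cancel my subscription?",
     "answer": "Go to Settings > Billing > Cancel subscription."},
    {"question": "Where can I download my invoices?",
     "answer": "Invoices are listed under Settings > Billing > Invoices."},
    {"question": "Do you offer refunds?",
     "answer": "Refunds are available within 14 days of purchase."},
    {"question": "how do I reset my password?  ",  # near-duplicate, will be dropped
     "answer": "See Settings."},
]


def to_chat_record(example: dict) -> dict:
    """Convert one question/answer pair into a chat-format training record."""
    return {"messages": [
        {"role": "system", "content": SYSTEM_PROMPT},
        {"role": "user", "content": example["question"].strip()},
        {"role": "assistant", "content": example["answer"].strip()},
    ]}


def prepare_splits(examples, val_fraction=0.2, seed=42):
    """Drop empty/duplicate questions, shuffle, and split into train/validation."""
    seen, cleaned = set(), []
    for ex in examples:
        q, a = ex["question"].strip(), ex["answer"].strip()
        if not q or not a or q.lower() in seen:
            continue  # Step 2: preprocessing removes unusable or repeated examples
        seen.add(q.lower())
        cleaned.append(ex)
    random.Random(seed).shuffle(cleaned)
    n_val = max(1, int(len(cleaned) * val_fraction))
    return cleaned[n_val:], cleaned[:n_val]


def write_jsonl(path, examples):
    with open(path, "w", encoding="utf-8") as f:
        for ex in examples:
            f.write(json.dumps(to_chat_record(ex)) + "\n")


out_dir = tempfile.mkdtemp()  # scratch directory for this demo
train_path = os.path.join(out_dir, "train.jsonl")
val_path = os.path.join(out_dir, "val.jsonl")

train, val = prepare_splits(raw_examples)
write_jsonl(train_path, train)
write_jsonl(val_path, val)
print(len(train), len(val))  # 4 1
```

Keeping the validation examples out of training is what makes the evaluation in Step 5 meaningful; a fixed shuffle seed keeps the split reproducible between runs.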

Fine-tuning does not replace the model's Transformer architecture; it continues training the existing weights, including those of the self-attention layers. Self-attention lets the model weigh the relevance of each part of the input sequence when producing output, so adjusting these weights on domain data helps the model interpret context in that domain and produce more precise and relevant results.

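ChatGPT's weights are not publicly released, so ChatGPT itself cannot be fine-tuned with Hugging Face's Transformers library; OpenAI instead offers fine-tuning of some of its hosted GPT models through its API. The same workflow can be applied with Transformers to open GPT-style models such as GPT-2.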

Fine-tuning can noticeably improve a model's performance on a specific task or domain, although the gains depend heavily on the quality and relevance of the training data.

Role of Reinforcement Learning from Human Feedback (RLHF) in ChatGPT

Reinforcement Learning from Human Feedback (RLHF) plays a crucial role in training ChatGPT. RLHF is a technique used to fine-tune models by incorporating feedback from human evaluators.

Training Process of ChatGPT using RLHF

ChatGPT is trained in two broad stages. The first is pre-training on a large text dataset, much of it drawn from the internet, in which the model learns to predict the next word in a sequence. This stage is where the model picks up grammar, facts about the world, and some reasoning ability.
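The pre-training objective is next-token prediction: at each position the model outputs a probability distribution over its vocabulary and is penalized by the cross-entropy (negative log-likelihood) of the token that actually comes next. The NumPy sketch below computes that loss; the six-word vocabulary and the random logits standing in for a model's outputs are illustrative assumptions.

```python
import numpy as np


def next_token_loss(logits: np.ndarray, token_ids: np.ndarray) -> float:
    """Average cross-entropy for predicting token t+1 from positions 0..t.

    logits: (seq_len, vocab_size) model scores at each position.
    token_ids: (seq_len,) input tokens; the targets are the same tokens shifted left.
    """
    # Position i predicts token i+1, so drop the last prediction and the first target.
    pred_logits = logits[:-1]
    targets = token_ids[1:]
    # Numerically stable log-softmax over the vocabulary.
    shifted = pred_logits - pred_logits.max(axis=-1, keepdims=True)
    log_probs = shifted - np.log(np.exp(shifted).sum(axis=-1, keepdims=True))
    # Negative log-probability assigned to each true next token.
    nll = -log_probs[np.arange(len(targets)), targets]
    return float(nll.mean())


vocab = ["<bos>", "the", "cat", "sat", "on", "mat"]  # toy vocabulary
token_ids = np.array([0, 1, 2, 3, 4, 1, 5])          # "<bos> the cat sat on the mat"
rng = np.random.default_rng(0)
logits = rng.normal(size=(len(token_ids), len(vocab)))  # stand-in for model output
print(next_token_loss(logits, token_ids))

# A model that spreads probability evenly scores log(6), about 1.79.
uniform = np.zeros((len(token_ids), len(vocab)))
print(next_token_loss(uniform, token_ids))
```

Pre-training repeats this calculation over enormous amounts of text and adjusts the weights to push the loss down, which is how the model absorbs grammar and facts without any labeled data.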

The second stage is fine-tuning, which is where RLHF comes into play. OpenAI first fine-tuned the model on example conversations written by human AI trainers, then used feedback from human evaluators to steer the model's responses toward more desirable behavior.

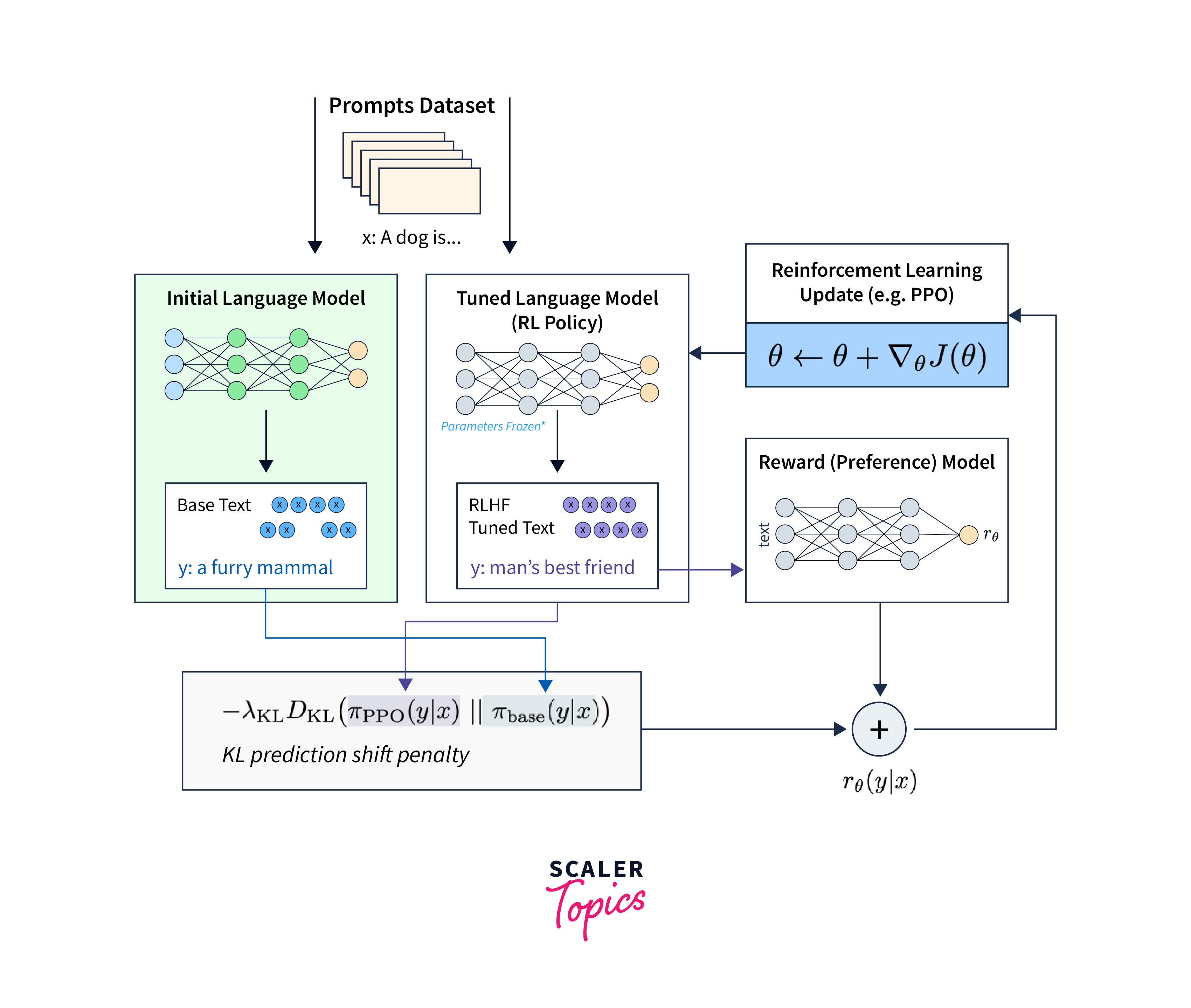

How Does RLHF Work?

RLHF uses a reward model to optimize the chatbot's behavior. Human evaluators compare several model-generated responses to the same prompt and rank or rate them. These judgments are used to train a reward model that scores responses the way the evaluators would, and its scores act as the reward signal that guides the model toward more appropriate and helpful responses.
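In the InstructGPT work that preceded ChatGPT, OpenAI turned each human ranking of K responses into pairwise comparisons and trained the reward model so that the preferred response in each pair gets the higher score, using the loss -log(sigmoid(r_preferred - r_other)) averaged over all pairs. The plain-Python sketch below shows only that loss calculation; the reward scores are made-up numbers standing in for a reward model's outputs.

```python
import math
from itertools import combinations


def softplus(z: float) -> float:
    """Numerically stable log(1 + exp(z))."""
    return max(z, 0.0) + math.log1p(math.exp(-abs(z)))


def pairwise_ranking_loss(ranked_rewards: list[float]) -> float:
    """Reward-model loss for one prompt.

    ranked_rewards: reward-model scores for K responses, ordered from the
    response humans ranked best to the one they ranked worst.
    Each (better, worse) pair contributes -log(sigmoid(r_better - r_worse)),
    and the result is averaged over all K*(K-1)/2 pairs.
    """
    pairs = list(combinations(ranked_rewards, 2))  # keeps the ranking order within each pair
    total = 0.0
    for r_better, r_worse in pairs:
        # -log(sigmoid(m)) == log(1 + exp(-m)) == softplus(-m)
        total += softplus(-(r_better - r_worse))
    return total / len(pairs)


# Hypothetical scores for 4 responses, listed in the order humans ranked them.
agrees_with_humans = [2.0, 1.0, 0.5, -1.0]
disagrees_with_humans = [-1.0, 0.5, 1.0, 2.0]
print(pairwise_ranking_loss(agrees_with_humans))     # small loss
print(pairwise_ranking_loss(disagrees_with_humans))  # large loss
```

The loss is small when the reward model orders responses the same way the humans did and large when it reverses them, so minimizing it teaches the reward model to mimic human preferences.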

The model is then fine-tuned against this reward signal using Proximal Policy Optimization (PPO), a reinforcement learning algorithm. PPO updates the model to raise the reward model's scores while limiting how far any single update can move the model away from its previous behavior.
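PPO's central idea is a clipped objective: the probability ratio between the updated and the old policy is clipped to [1 - ε, 1 + ε], so one update cannot move the model too far. For RLHF, InstructGPT also subtracted a penalty proportional to the divergence from the supervised fine-tuned (SFT) model from the reward. The plain-Python sketch below computes both pieces for a few sampled responses; the log-probabilities, reward scores, mean-reward baseline, ε = 0.2, and β = 0.02 are illustrative assumptions, and a real implementation works per token and backpropagates through these quantities.

```python
import math


def shaped_reward(rm_score, logp_policy, logp_sft, beta=0.02):
    """Reward-model score minus a penalty for drifting away from the SFT model.

    logp_policy / logp_sft: log-probability of the sampled response under the
    current policy and under the frozen SFT model.
    """
    return rm_score - beta * (logp_policy - logp_sft)


def ppo_clip_objective(logp_new, logp_old, advantages, epsilon=0.2):
    """PPO clipped surrogate objective (to be maximized), averaged over samples."""
    total = 0.0
    for new, old, adv in zip(logp_new, logp_old, advantages):
        ratio = math.exp(new - old)  # pi_new(response) / pi_old(response)
        clipped = min(max(ratio, 1 - epsilon), 1 + epsilon)
        # Taking the minimum removes any incentive to push the ratio past the clip range.
        total += min(ratio * adv, clipped * adv)
    return total / len(advantages)


# Hypothetical values for three sampled responses.
rm_scores = [1.2, -0.3, 0.8]
logp_sft = [-12.0, -15.0, -10.0]
logp_old = [-11.5, -14.0, -10.2]
rewards = [shaped_reward(r, lp, ls) for r, lp, ls in zip(rm_scores, logp_old, logp_sft)]

# Simplification: use the mean reward as a baseline to get advantages
# (a real setup would use a learned value function).
baseline = sum(rewards) / len(rewards)
advantages = [r - baseline for r in rewards]

logp_new = [-11.0, -14.5, -10.1]  # log-probs after a candidate update
print(ppo_clip_objective(logp_new, logp_old, advantages))
```

The clipping and the divergence penalty work together: the first caps how much a single update can change the model, and the second keeps the model close to its fine-tuned starting point so it does not exploit quirks of the reward model.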

Benefits of RLHF in ChatGPT

- RLHF is crucial in training ChatGPT because it allows the model to learn from human expertise and preferences. By incorporating human feedback, the model can be guided towards generating more accurate, informative, and contextually appropriate responses.

- RLHF helps address some of the limitations of purely supervised learning, which can only imitate the example answers it is given. Learning from human preferences helps the model generalize better, handle a wider range of inputs, and generate more coherent and reliable responses.

Limitations and Strengths of ChatGPT

Limitations

- Lack of true insight: ChatGPT may generate shallow responses because it is trained to predict words from its input and may not fully grasp the complexity of human language.

- Biases in training data: Like many AI models, ChatGPT can be influenced by biases in its training data, which can negatively affect its outputs.

- Difficulty maintaining context: Although ChatGPT can remember earlier turns of a conversation, it can still lose track of context over longer interactions, leading to less coherent responses.

- Inability to ask clarifying questions: ChatGPT usually guesses at what the user meant instead of asking clarifying questions about ambiguous or incomplete inputs, which can lead to inaccurate or irrelevant responses.

- Potential for harmful or biased outputs: ChatGPT may generate inappropriate, offensive, or biased responses, because it learns from training data that can include biased or harmful content.
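One concrete reason for the context limitation is that a chat model sees only a fixed-size context window, and a common strategy is to drop the oldest turns once a conversation exceeds it. The sketch below illustrates that behavior under loud assumptions: a hypothetical 50-token budget, a hypothetical `fit_to_context` helper, and tokens approximated as whitespace-separated words, whereas real models use subword tokenizers and far larger windows.

```python
def count_tokens(text: str) -> int:
    """Rough token estimate: one token per whitespace-separated word (an assumption)."""
    return len(text.split())


def fit_to_context(messages, max_tokens):
    """Keep the system message plus the most recent turns that fit in max_tokens.

    messages: list of {"role": ..., "content": ...} dicts, oldest first,
    with the system message (if any) at index 0.
    """
    system = [m for m in messages[:1] if m["role"] == "system"]
    budget = max_tokens - sum(count_tokens(m["content"]) for m in system)
    kept = []
    # Walk backwards from the newest turn, keeping turns until the budget runs out.
    for m in reversed(messages[len(system):]):
        cost = count_tokens(m["content"])
        if cost > budget:
            break
        kept.append(m)
        budget -= cost
    return system + list(reversed(kept))


conversation = [
    {"role": "system", "content": "You are a helpful assistant."},
    {"role": "user", "content": "My name is Priya and I am planning a trip to Lisbon in May."},
    {"role": "assistant", "content": "Great choice! May is a pleasant time to visit Lisbon."},
    {"role": "user", "content": "What neighborhoods should I stay in for good food and nightlife?"},
    {"role": "assistant", "content": "Bairro Alto and Cais do Sodré are popular for nightlife, "
                                     "and Alfama is known for traditional restaurants."},
    {"role": "user", "content": "What was my name again?"},
]
window = fit_to_context(conversation, max_tokens=50)
for m in window:
    print(m["role"], "|", m["content"])
```

With this budget the turn containing the user's name no longer fits, so the final question reaches the model without the information needed to answer it, which is the kind of lost context described above.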

Strengths

- Conversational ability: ChatGPT excels at conversational and Q&A-style exchanges, making it well suited to chatbot applications and interactive dialogue.

- Personalized and engaging: Because it has been fine-tuned on conversational data and optimized for chat, ChatGPT provides a more personalized and engaging experience for users interacting through a chat interface.

- Wide range of tasks: ChatGPT can assist with many tasks that require understanding and generating natural language, making it versatile across applications.

- Continual improvement: Regular model updates and user feedback contribute to the ongoing improvement of ChatGPT, helping to address its limitations and enhance its performance.

Practical Applications of ChatGPT

ChatGPT has a wide range of practical applications across different industries and domains. Some examples include:

- Customer Support: ChatGPT can be used in customer support to provide automated assistance to users. It can answer frequently asked questions, troubleshoot common issues, and provide relevant information to customers.

- Virtual Assistants: ChatGPT can function as a virtual assistant, helping users with tasks such as scheduling appointments, setting reminders, and providing information on a wide range of topics.

- Content Generation: ChatGPT can generate content for different purposes, including blogs, articles, social media posts, and other written materials. It can help automate the content creation process and assist content creators.

- Language Translation: ChatGPT can assist with language translation, making it easier for users to communicate across languages. It can help bridge language barriers and facilitate effective communication.

- Education and Tutoring: In educational settings, ChatGPT can be used to provide personalized tutoring. It can answer student questions, provide explanations, and facilitate interactive learning experiences.

- Creative Writing: ChatGPT can be used as a tool for creative writing, helping authors and screenwriters generate ideas, develop storylines, and create dialogue. It can assist in the creative process and provide inspiration.

These are just a few examples of the practical applications of ChatGPT. The versatility of the model allows for its use in numerous industries and domains.

FAQs

Q. What are AI Chatbots?

A. Artificial intelligence (AI) chatbots are computer programs that simulate human conversation and provide automated responses to user inquiries through the use of natural language processing (NLP) and machine learning algorithms. Chatbots can be used for a variety of applications, such as customer support, virtual assistants, content generation, and language translation.

Q. Is There Any Alternative to ChatGPT?

A. Yes, there are several ChatGPT alternatives available in the market, including AI-Writer, ChatSonic, DeepL Write, Open Assistant, Perplexity AI, Rytr, YouChat, GitHub Copilot X, Google BardAI, HuggingChat, Jasper Chat, Llama 2, Microsoft Bing, OpenAI Playground, and Quora Poe.

Q. Is There a Free Version of ChatGPT?

A. Yes, there is a free version of ChatGPT available for public use. OpenAI released ChatGPT to the general public as a free research preview in November 2022.

Q. Is There Any Other Tool like ChatGPT?

A. Yes, several other tools like ChatGPT are available in the market, including Google BardAI, Microsoft Bing Chat, Chatsonic, GitHub Copilot, Wix ADI, Jasper.ai, and WriterZen.

Q. What Is Google Equivalent to ChatGPT?

A. Google's equivalent to ChatGPT is Bard, powered by Google's in-house PaLM 2 large language model. Although PaLM 2 and the GPT models behind ChatGPT are both built on the Transformer architecture, the two differ noticeably in their practical capabilities.

Conclusion

- ChatGPT is a language model developed by OpenAI specifically for conversational use cases. It has been fine-tuned on conversational data and optimized for generating personalized and engaging responses in a chat interface.

- ChatGPT has several limitations, including a lack of true insight, training data biases, difficulty maintaining context, inability to ask clarifying questions, and potential for harmful or biased outputs.

- However, it also has several strengths, including conversational ability, personalized and engaging responses, versatility across a wide range of tasks, and continual improvement.

- There are several ChatGPT alternatives available in the market, including Google BardAI, Microsoft Bing Chat, Chatsonic, and GitHub Copilot.