Multi-processor Scheduling

Overview

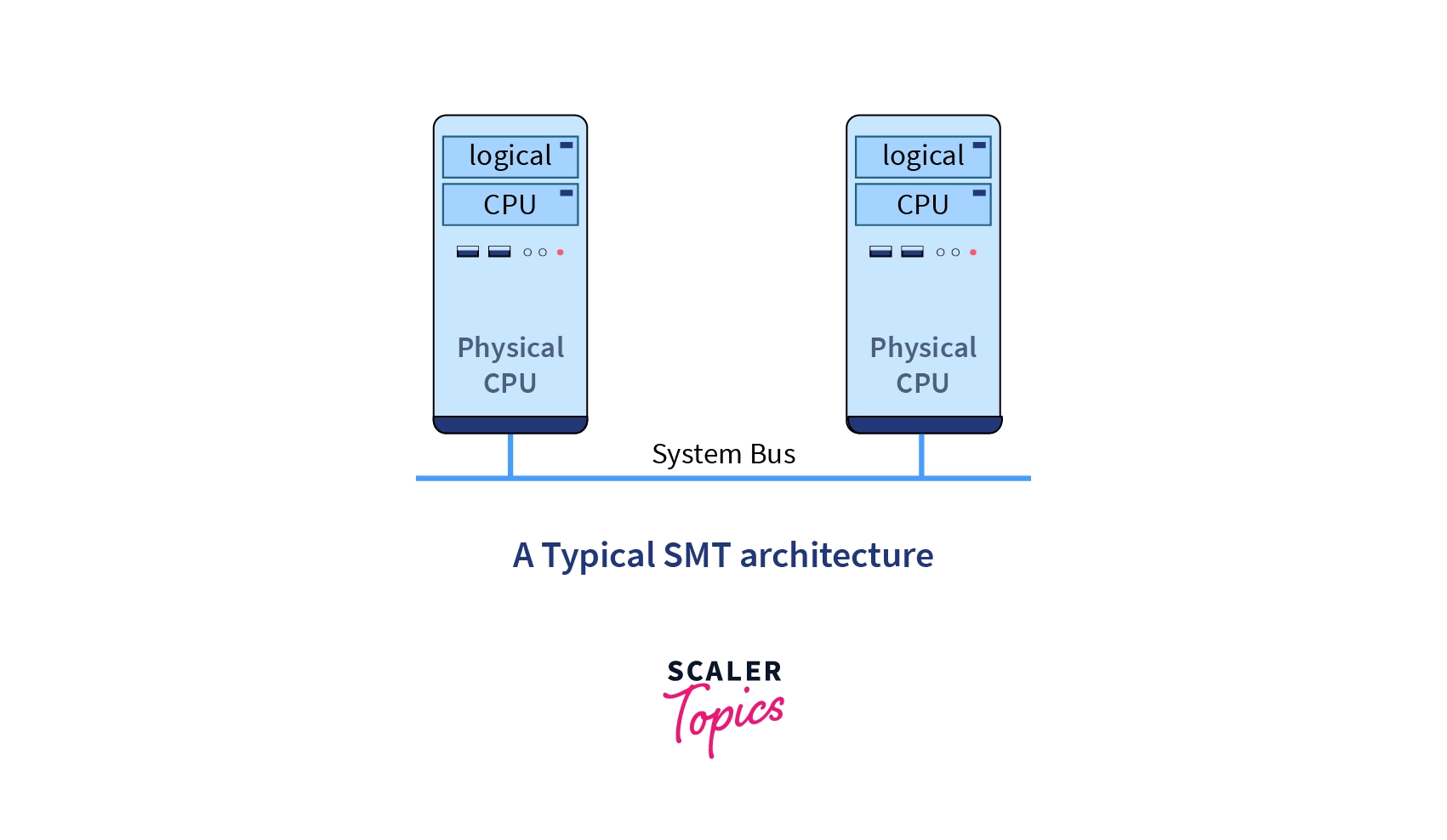

A multi-processor is a system that has more than one processor, all sharing the same memory, bus, and input/output devices. In multi-processor scheduling, multiple processors (CPUs) share the load so that processes execute smoothly.

Scheduling on a multi-processor is more complex than on a single-processor system. The CPUs may be of the same kind (homogeneous) or of different kinds (heterogeneous).

The multiple CPUs in the system share a common bus, memory, and other I/O devices. There are two approaches to multi-processor scheduling: symmetric and asymmetric multi-processor scheduling.

Multiple-Processor Scheduling in Operating Systems

A multi-processor is a system that has more than one processor, all sharing the same memory, bus, and input/output devices. The bus connects the processors to the RAM, to the I/O devices, and to all the other components of the computer.

Such a system is tightly coupled. It keeps working even if one processor goes down, because the remaining processors carry on. In multi-processor scheduling, multiple processors (CPUs) share the load so that processes execute smoothly.

Scheduling on a multi-processor is more complex than on a single-processor system for the following reasons.

- Load balancing becomes a problem because more than one processor is present.

- Processes executing simultaneously may require access to shared data.

- Cache affinity should be considered in scheduling.

Approaches to Multiple Processor Scheduling

- Symmetric Multiprocessing: In symmetric multi-processor scheduling, the processors are self-scheduling. The scheduler for each processor checks the ready queue and selects a process to execute. All processors run the same copy of the operating system and communicate with one another. If one of the processors goes down, the rest of the system keeps working.

  - Symmetrical Scheduling with global queues: If the processes to be executed are in a common (global) queue, the scheduler for each processor checks this global ready queue and selects a process to execute.

  - Symmetrical Scheduling with per-processor queues: If each processor in the system has its own private ready queue, the scheduler for each processor checks its own private queue to select a process.

- Asymmetric Multiprocessing: In asymmetric multi-processor scheduling, there is one master processor, and the rest are slave processors. The master processor handles all scheduling decisions and I/O processing, and the slave processors execute the users' processes. If the master processor goes down, the whole system comes to a halt. However, if one of the slave processors goes down, the rest of the system keeps working.
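The global-queue variant of symmetric scheduling can be sketched as a small simulation. This is only an illustration under simplifying assumptions, not how a real kernel is written: Python threads stand in for CPUs, "running" a process just records its name, and `run_smp_global_queue` and `cpu_scheduler` are hypothetical names introduced here.

```python
import queue
import threading


def run_smp_global_queue(process_names, num_cpus):
    """Simulate self-scheduling CPUs that share one global ready queue."""
    ready_queue = queue.Queue()  # thread-safe, so CPUs never take the same process
    for name in process_names:
        ready_queue.put(name)

    # cpu id -> list of processes that CPU executed
    executed = {cpu: [] for cpu in range(num_cpus)}

    def cpu_scheduler(cpu_id):
        # Every CPU runs the same scheduler loop (self-scheduling).
        while True:
            try:
                proc = ready_queue.get_nowait()
            except queue.Empty:
                return  # ready queue is empty, nothing left to run
            executed[cpu_id].append(proc)  # stand-in for running the process

    cpus = [threading.Thread(target=cpu_scheduler, args=(i,)) for i in range(num_cpus)]
    for t in cpus:
        t.start()
    for t in cpus:
        t.join()
    return executed


if __name__ == "__main__":
    result = run_smp_global_queue([f"P{i}" for i in range(8)], num_cpus=3)
    for cpu, procs in result.items():
        print(f"CPU {cpu} ran {procs}")
```

Which CPU picks up which process varies from run to run, but every process is executed exactly once. In the asymmetric approach, the loop that hands out work would instead run only on the master.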

Processor Affinity

A process has an affinity for a processor on which it runs. This is called processor affinity.

Let's try to understand why this happens.

- When a process runs on a processor, the data it accessed most recently populates the cache memory of that processor. Subsequent memory accesses by the process are then often satisfied from the cache.

- However, if this process is migrated to another processor for some reason, the contents of the first processor's cache must be invalidated, and the second processor's cache has to be repopulated.

- To avoid the cost of invalidating and repopulating the cache memory, migration of processes from one processor to another is avoided.

There are two types of processor affinity.

- Soft Affinity: The system tries to keep a process running on the same processor but does not guarantee it. This is called soft affinity.

- Hard Affinity: The system allows the process to specify the subset of processors on which it may run, i.e., each process can run only on some of the processors. Systems such as Linux implement soft affinity, but they also provide system calls such as sched_setaffinity() to support hard affinity.
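On Linux, Python exposes the sched_setaffinity() system call through `os.sched_setaffinity`, which makes hard affinity easy to try out. The following is a minimal sketch, assuming a Linux system; `pin_to_cpus` is a hypothetical helper name introduced here, and on platforms without the call it simply returns `None`.

```python
import os


def pin_to_cpus(cpus):
    """Restrict the calling process to the given CPU ids (hard affinity).

    Returns the resulting set of allowed CPUs, or None where the call
    is unavailable (assumption: only Linux-like platforms provide it).
    """
    if not hasattr(os, "sched_setaffinity"):
        return None
    allowed = os.sched_getaffinity(0)   # pid 0 means "the calling process"
    target = set(cpus) & allowed        # never request a CPU we are not allowed to use
    if not target:
        return allowed                  # nothing usable requested, leave affinity unchanged
    os.sched_setaffinity(0, target)
    return os.sched_getaffinity(0)
```

For example, `pin_to_cpus({min(os.sched_getaffinity(0))})` restricts the process to the lowest-numbered CPU it is currently allowed to use. Without such a call, the scheduler is free to move the process, which is the soft-affinity behaviour.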

Load Balancing

In a multi-processor system, all processors may not have the same workload. Some may have a long ready queue, while others may be sitting idle. Load balancing addresses this problem. Load balancing is the practice of distributing the workload so that the processors in a symmetric multi-processor system are evenly loaded.

In symmetric multiprocessing systems that have a global queue, load balancing is not required. In such a system, a processor examines the global ready queue and selects a process as soon as it becomes idle.

However, in a symmetric multi-processor with private queues, some processors may end up idle while others have a high workload. There are two ways to solve this.

- Push Migration: In push migration, a task routinely checks the load on each processor. Some processors may have long queues while some are idle. If the workload is unevenly distributed, it will extract the load from the overloaded processor and assign the load to an idle or a less busy processor.

- Pull Migration: In pull migration, an idle processor will extract the load from an overloaded processor itself.
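Both migration strategies can be sketched over per-processor ready queues. This is a simplified model, not a real scheduler: each queue is a `deque` of process names, load is measured only by queue length, and `push_migration`, `pull_migration`, and the `threshold` parameter are hypothetical names introduced here.

```python
from collections import deque


def push_migration(run_queues, threshold=1):
    """Balancer task: move processes from the longest queue to the shortest
    until their lengths differ by at most `threshold`. Returns moves made."""
    if threshold < 1:
        raise ValueError("threshold must be at least 1, or balancing may never settle")
    moved = 0
    while True:
        busiest = max(run_queues, key=len)
        idlest = min(run_queues, key=len)
        if len(busiest) - len(idlest) <= threshold:
            return moved
        idlest.append(busiest.pop())  # migrate one process from the tail
        moved += 1


def pull_migration(run_queues, idle_cpu):
    """An idle CPU takes one process from the busiest queue.
    Returns the migrated process, or None if there is nothing to take."""
    busiest = max(run_queues, key=len)
    if busiest is run_queues[idle_cpu] or not busiest:
        return None
    proc = busiest.pop()
    run_queues[idle_cpu].append(proc)
    return proc


if __name__ == "__main__":
    queues = [deque(f"P{i}" for i in range(6)), deque(), deque(["P6"])]
    print("moves:", push_migration(queues), [len(q) for q in queues])
```

Note that both strategies work against processor affinity, since every migration throws away a warm cache. A balancer therefore usually migrates only when the imbalance exceeds some threshold, which is what the `threshold` parameter stands in for here.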

Multi-Core Processors

A multi-core processor is a single computing component comprised of two or more CPUs called cores. Each core has a register set to maintain its architectural state and thus appears to the operating system as a separate physical processor. A processor register can hold an instruction, address, etc. Since each core has a register set, the system behaves as a multi-processor with each core as a processor.

Symmetric multiprocessing systems that use multi-core processors can be faster and consume less power than systems in which each processor has its own physical chip.

Symmetric multiprocessor

In symmetric multi-processors, there is only one copy of the operating system in memory, and any central processing unit can run it. When a system call is made, the CPU on which the system call was made traps to the kernel and processes that system call. The model balances processes and memory dynamically. As the name suggests, it uses symmetric multiprocessing to schedule processes, and every processor is self-scheduling. Each processor checks the global or private ready queue and selects a process to execute.

Note: The kernel is the central component of the operating system. It connects the system hardware to the application software.

There are three sources of conflict that may arise in a symmetric multi-processor system. These are as follows.

- Locking system: The resources in a multi-processor are shared among the processors. To give the processors safe access to these resources, a locking system is required. Its purpose is to serialize access to the resources by the processors.

- Shared data: Since multiple processors are accessing the same data at any given time, the data may not be consistent across all of these processors. To avoid this, we must use some kind of strategy or locking scheme.

- Cache Coherence: When the resource data is stored in multiple local caches and shared by many clients, it may be rendered invalid if one of the clients changes the memory block. This can be resolved by maintaining a consistent view of the data.
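The locking idea from the first two points can be illustrated with a shared counter. This is a minimal sketch in which Python threads stand in for processors and a `threading.Lock` serializes the read-modify-write on the shared data; `count_with_lock` is a hypothetical name introduced here.

```python
import threading


def count_with_lock(num_workers=4, increments=10_000):
    """Have several workers increment one shared counter under a lock."""
    counter = 0
    lock = threading.Lock()

    def worker():
        nonlocal counter
        for _ in range(increments):
            with lock:        # only one worker at a time may update the counter
                counter += 1  # read-modify-write on shared data

    threads = [threading.Thread(target=worker) for _ in range(num_workers)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return counter


if __name__ == "__main__":
    print(count_with_lock())  # num_workers * increments
```

With the lock in place the result is always `num_workers * increments`. Without it, two workers could read the same old value and one update could be lost.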

Master-Slave Multiprocessor

In a master-slave multi-processor, one CPU works as the master while all others work as slave processors. The master processor handles all scheduling decisions and I/O processing, while the slave processors execute user processes. The memory and input/output devices are shared among all the processors, and all the processors are connected to a common bus. It uses asymmetric multiprocessing to schedule processes.

Virtualization and Threading

Virtualization is the process of running multiple operating systems on a single computer system. Because the virtualization software presents one or more virtual CPUs to each guest, even a single-CPU system can behave like a multi-processor system. This is achieved by having a host operating system and one or more guest operating systems.

- Different applications run on different operating systems without interfering with one another.

- A virtual machine is a virtual environment that functions as a virtual computer with its own CPU, memory, network interface, and storage, created on a physical hardware system.

- In a time-sharing guest OS, each time slice might be 100 ms (milliseconds) to give users a reasonable response time. Inside a virtual machine, however, the guest receives only part of the physical CPU, so that 100 ms of virtual CPU time may take much longer in real time, perhaps 1 second or more. This results in a poor response time for users logged into the virtual machine.

- Since the guest operating systems receive only a fraction of the available CPU cycles, the clocks in virtual machines may be incorrect. This is because their timers take longer to trigger than they would on dedicated CPUs.
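The jump from 100 ms to about 1 second can be reproduced with a back-of-the-envelope model. The sketch below assumes one physical CPU shared equally, round-robin, among guests that are all busy; real hypervisors are more elaborate, and `wall_clock_ms` is a hypothetical name introduced here.

```python
def wall_clock_ms(guest_slice_ms, busy_guests):
    """Estimate the real time a guest needs to receive `guest_slice_ms` of CPU.

    Assumption: one physical CPU is shared equally among `busy_guests`
    guests, so each guest gets 1/busy_guests of the real CPU time.
    """
    if busy_guests < 1:
        raise ValueError("there must be at least one guest")
    return guest_slice_ms * busy_guests


if __name__ == "__main__":
    print(wall_clock_ms(100, 10))  # 1000
```

Under this assumption, ten busy guests stretch a 100 ms time slice to 1000 ms of real time, which is also why timers that count virtual CPU time fire later than they would on a dedicated CPU.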

Conclusion

- A multi-processor is a system that has more than one processor, all sharing the same memory, bus, and input/output devices.

- In multi-processor scheduling, multiple processors (CPUs) share the load so that processes execute smoothly.

- In symmetric multi-processor scheduling, the processors are self-scheduling. The scheduler for each processor checks the ready queue and selects a process to execute.

- In asymmetric multi-processor scheduling, there is one master processor, and the rest are slave processors.

- A process has an affinity for a processor on which it runs. This is called processor affinity.

- Load balancing is the practice of distributing the workload so that the processors in a symmetric multi-processor system are evenly loaded.

- A multi-core processor is a single computing component comprised of two or more CPUs.

- Virtualization is the process of running multiple operating systems on a computer system.